Get in touch if you think you or someone else should be added!

🧵

Foundation vision encoders like CLIP and DINOv2 have transformed general computer vision, but what happens when we scale them for medical imaging?

📄 Read the full preprint here: arxiv.org/abs/2509.12818

🧵1/3

On my increasing disgust with the AI discourse, even though I still like the technical and philosophical. And how I wish I could be excited about AI again.

togelius.blogspot.com/2025/08/ai-a...

A SotA-enabling vision foundation model, trained with pure self-supervised learning (SSL) at scale.

High quality dense features, combining unprecedented semantic and geometric scene understanding.

Three reasons why this matters👇

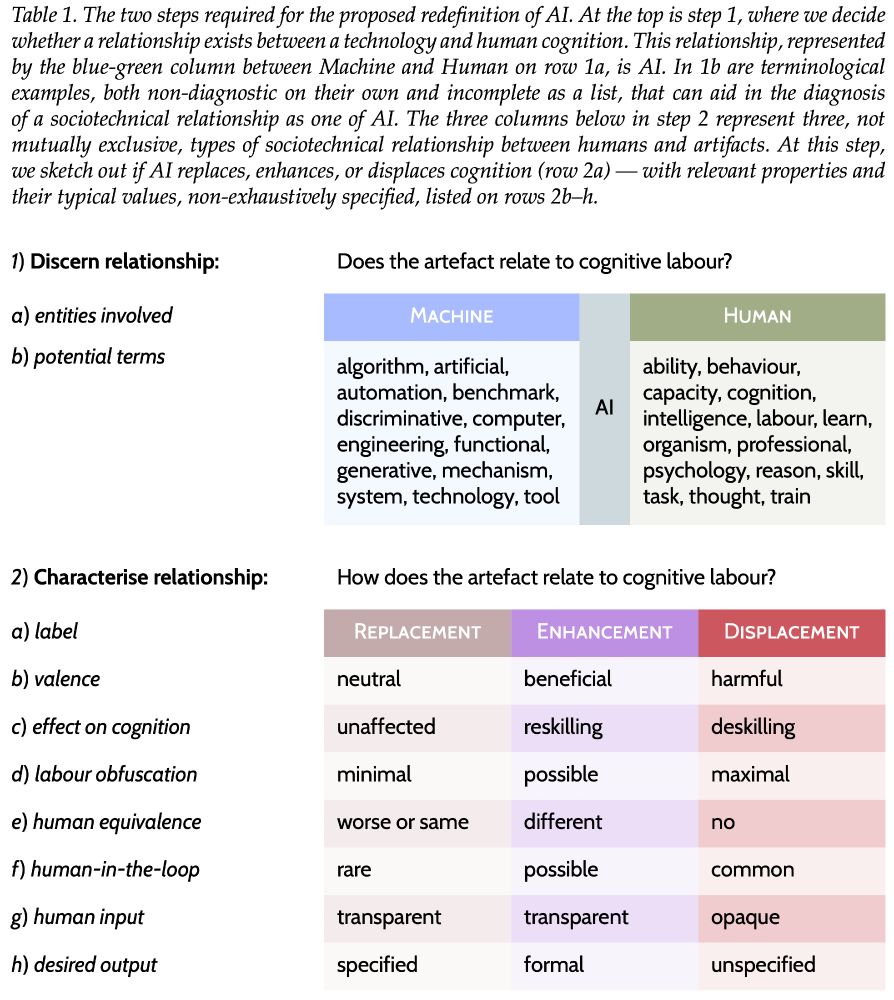

Wherein I analyse HCAI & demonstrate through 3 triplets my new tripartite definition of AI (Table 1) that properly centres the human. 1/n

go.nature.com/450KElr

feels really bad when there's a PR that's been open for a while, but i know i can't easily do a good job of reviewing it, so it's constantly "later"

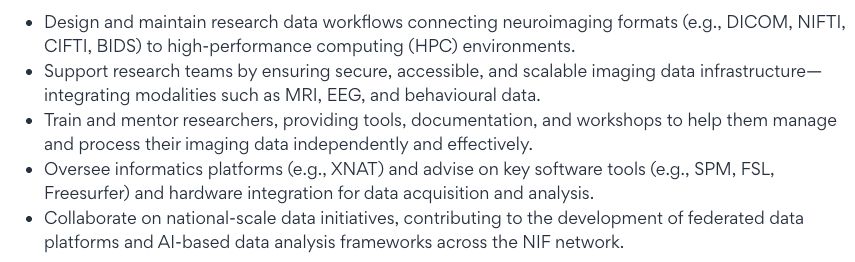

Located at the Hunter Medical Research Institute (HMRI) & the University of Newcastle (UoN), in partnership with the National Imaging Facility (NIF),

Apply here: www.seek.com.au/job/85537666

Intersecting mechanistic interpretability and health AI 😎

We trained and interpreted sparse autoencoders on MAIRA-2, our radiology MLLM. We found a range of human-interpretable radiology reporting concepts, but also many uninterpretable SAE features.

📰 Also check out our blog post for why we're so excited about it: www.microsoft.com/en-us/resear...

💾 The dataset can be downloaded here: bimcv.cipf.es/bimcv-projec...

@dccastr0.bsky.social #AI #MedSky #MLSky

One important step towards usability of AI in real-world radiology applications.

255-character placeholders, $ORIGIN rpaths, and platform-specific binary patching.

We explain how Conda packages achieve relocatability:

prefix.dev/blog/what-i...