Interested in extracting world understanding from models and more controlled generation. 🌐 https://stefan-baumann.eu/

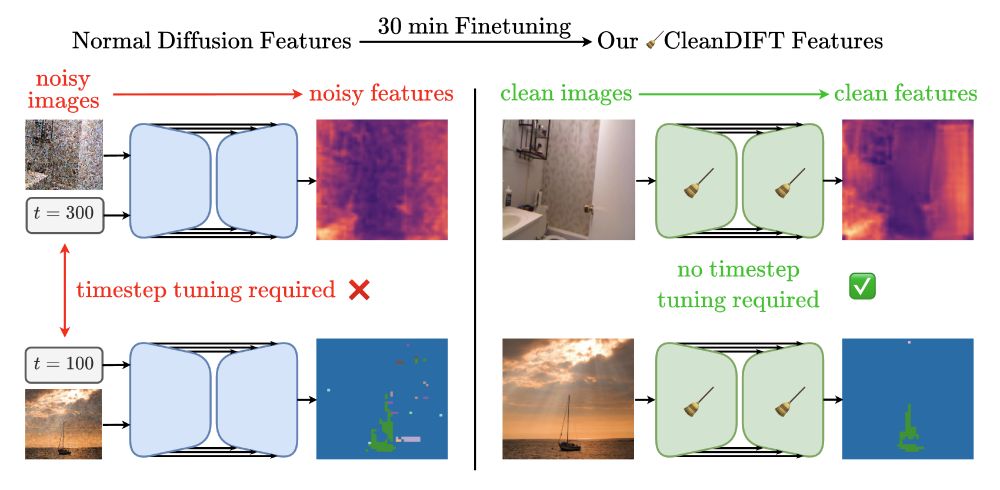

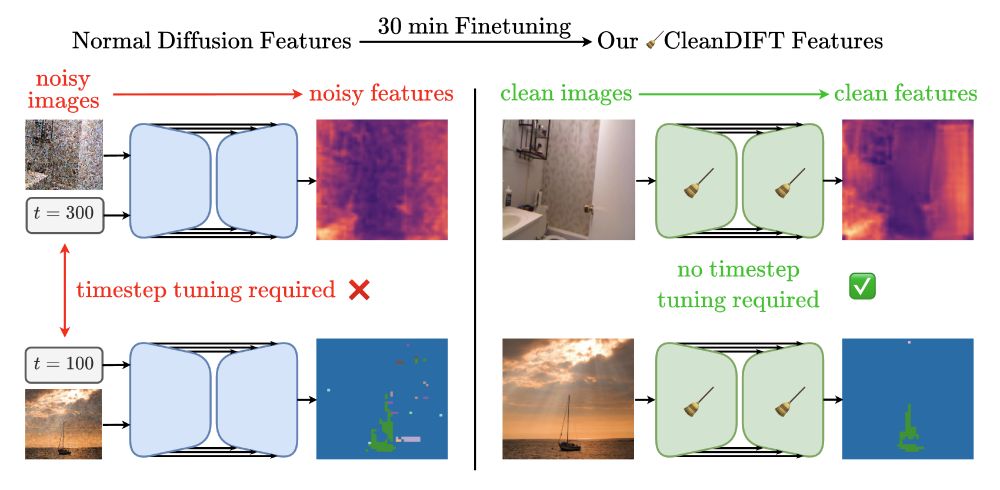

Yes, it is - but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇

Turns out they can! We show how adapting the backbone unlocks clean, powerful features for better results across the board. 🚀🧹

Check it out! ⬇️

Come say hi in Honolulu!

👋 Pingchuan, Ming, Felix, Stefan, Timy, and Björn Ommer will be attending.

💡 It works if we leverage a self-supervised representation!

Meet RepTok🦎: A generative model that encodes an image into a single continuous latent while keeping realism and semantics. 🧵 👇

We built the Flow Poke Transformer (FPT) to model multi-modal scene dynamics from sparse interactions.

It learns to predict the 𝘥𝘪𝘴𝘵𝘳𝘪𝘣𝘶𝘵𝘪𝘰𝘯 of motion itself 🧵👇

In short: we emphasize how autoencoders are implemented—but not always what they represent (and some of the implications of that representation).🧵

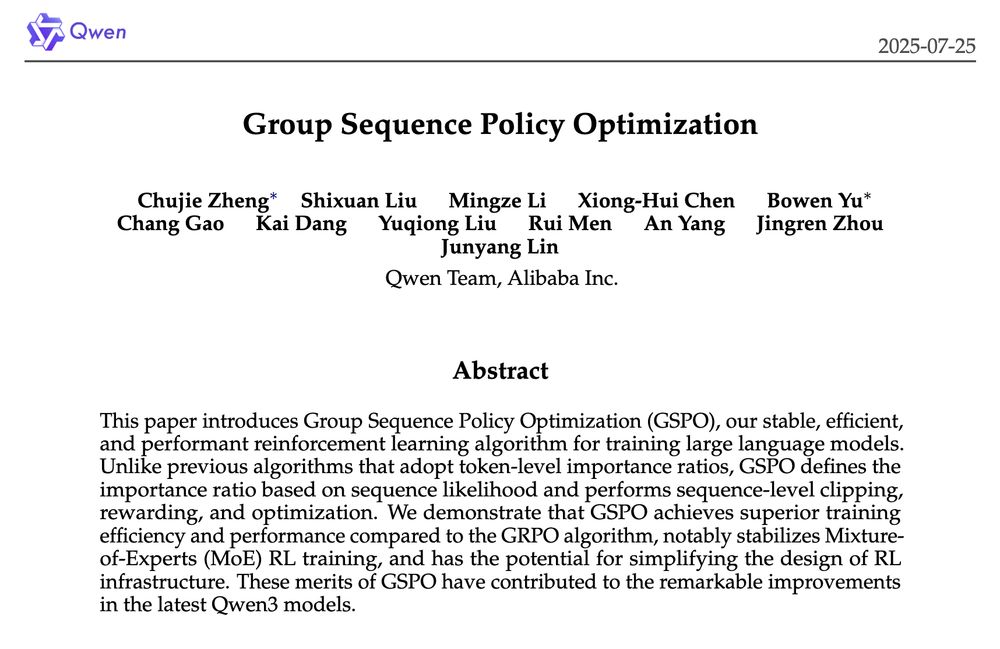

It makes so much more sense intuitively to work on a sequence rather than on a token level when our rewards are on a sequence level.

Come say hi in Nashville!

👋 Johannes, Ming, Kolja, Stefan, and Björn will be attending.

📌 Poster Session 6, Sunday 4:00 to 6:00 PM, Poster #208

The other two interviewees' research played a pivotal role in the rise of diffusion models, whereas I just like to yap about them 😬 this was a wonderful opportunity to do exactly that!

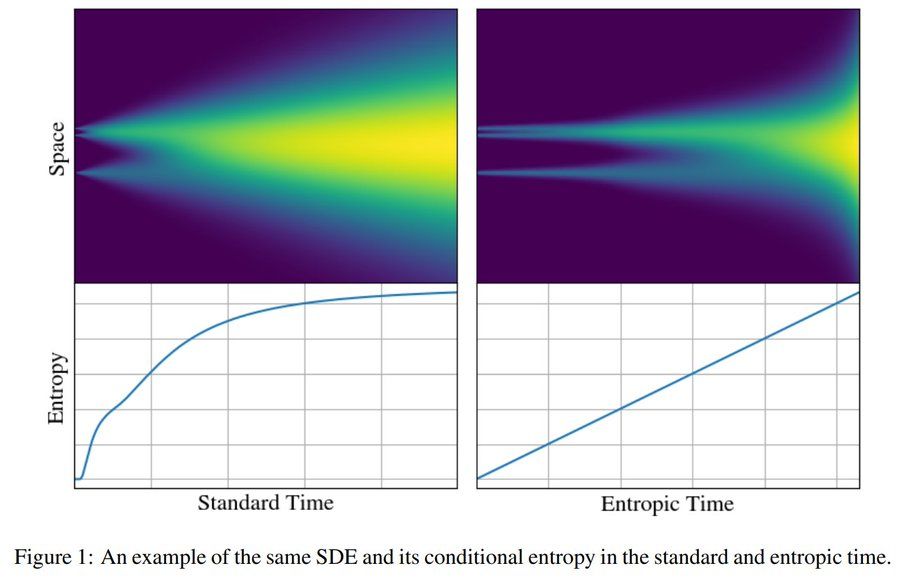

"Entropic Time Schedulers for Generative Diffusion Models"

We find that the conditional entropy offers a natural data-dependent notion of time during generation

Link: arxiv.org/abs/2504.13612

sander.ai/2025/04/15/l...

Project Page: vgg-t.github.io

Code & Weights: github.com/facebookrese...

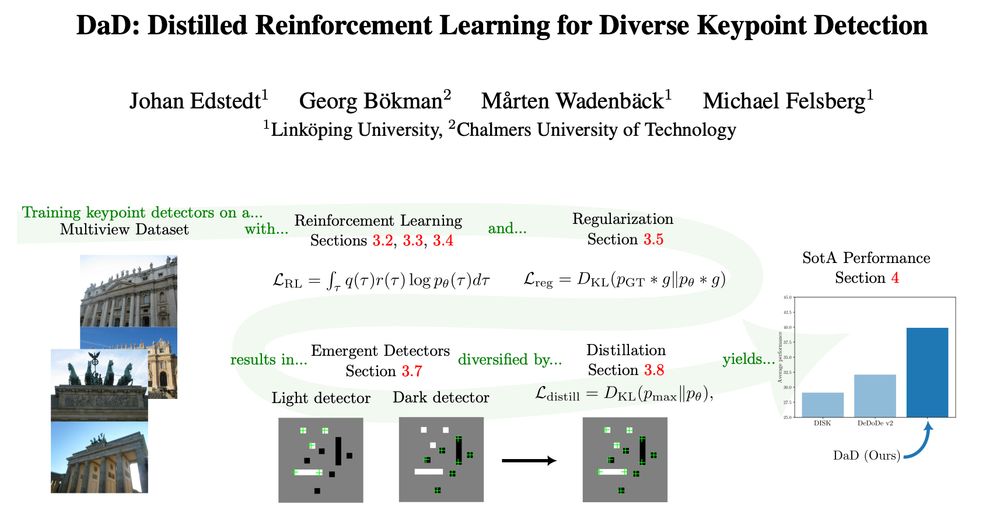

As this will get pretty long, this will be two threads.

The first will go into the RL part, and the second on the emergence and distillation.

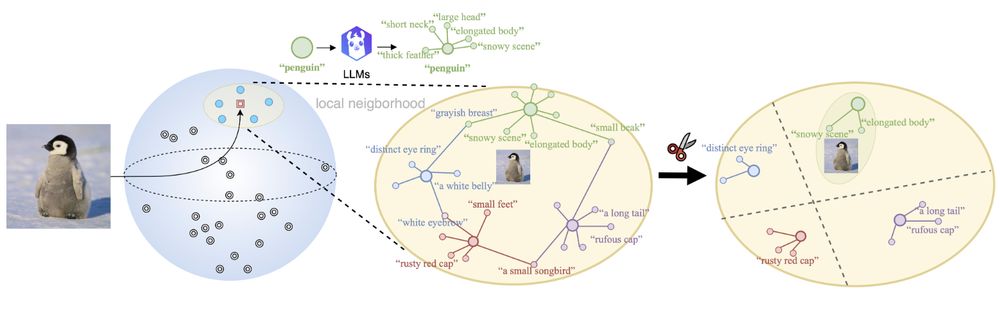

🤨Interested? Check out our latest work at #AAAI25:

💻Code and 📝Paper at: github.com/CompVis/DisCLIP

🧵👇

Recently with NICO: apart from a class, each image has a context, and one of the contexts is 'autumn'. There is also a pumpkin class. Surprise surprise, many autumn images contain pumpkins.

When you ask for a meeting with others, you are asking for their time. You are asking for their most valuable, finite resource to benefit yourself (e.g., for advice, networking, questions, and opportunities).

Here are some tips that I found useful.

What if you could have even better features at a fraction of the cost? If this sounds enticing, take a look at this paper! ⬇️

We showed exactly that for a fundamental task:

semantic correspondence📍

A thread 🧵👇

Despite seeming similar, there is some confusion in the community about the exact connection between the two frameworks. We aim to clear up the confusion by showing how to convert one framework to another, for both training and sampling.

Turns out you can, and here is how: arxiv.org/abs/2411.15099

Really excited to share this work on multimodal pretraining for my first bluesky entry!

🧵 A short and hopefully informative thread:

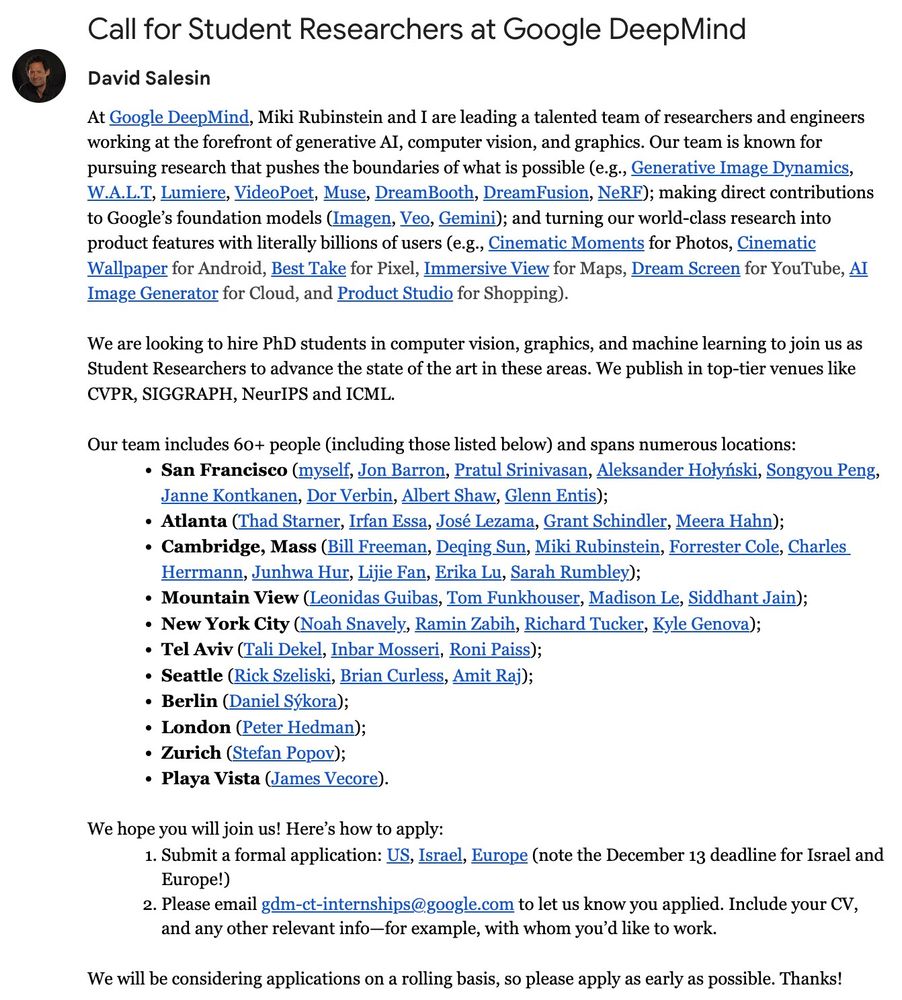

@serge.belongie.com and I created this feed to compile internship opportunities in AI, ML, CV, NLP, and related areas.

The feed is rule-based. Please help us improve the rules by sharing feedback 🧡

🔗 Link to the feed: bsky.app/profile/did:...
