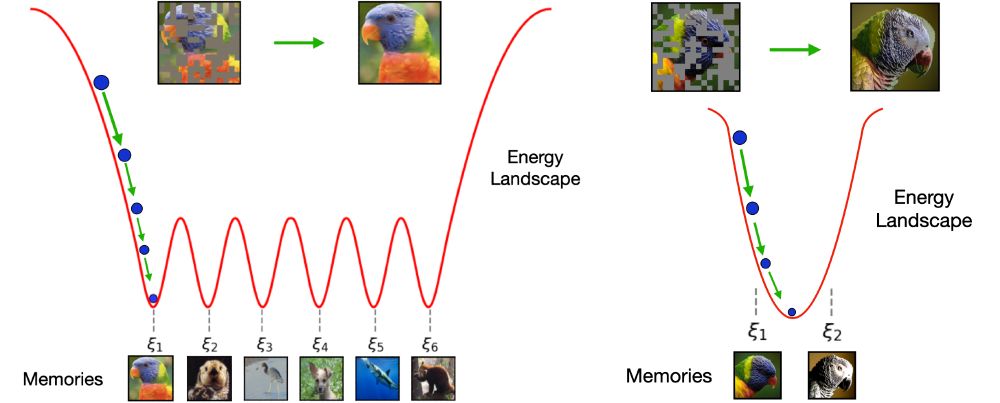

We found that the generative capabilities of diffusion models are the result of a phase transition!

Preprint: arxiv.org/abs/2305.19693

Code: github.com/gabrielraya/...

Come join me at ND! Feel free to reach out with any questions.

And please share!

apply.interfolio.com/173031

"The Information Dynamics of Generative Diffusion"

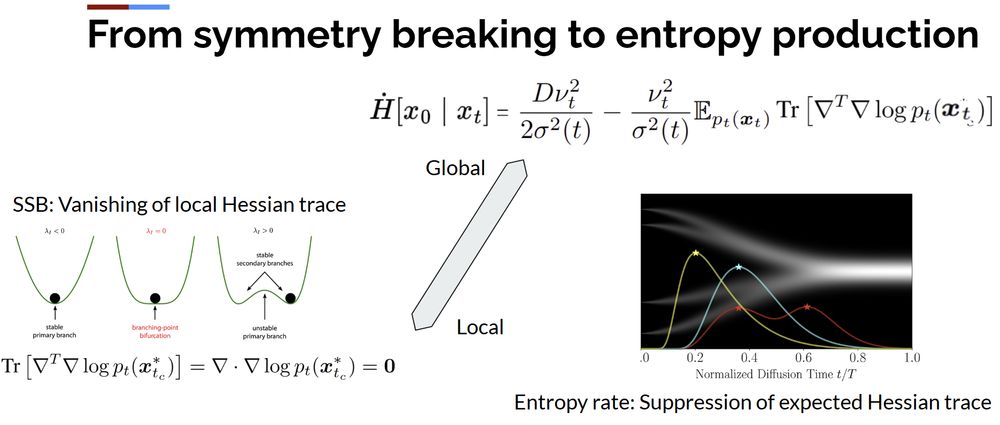

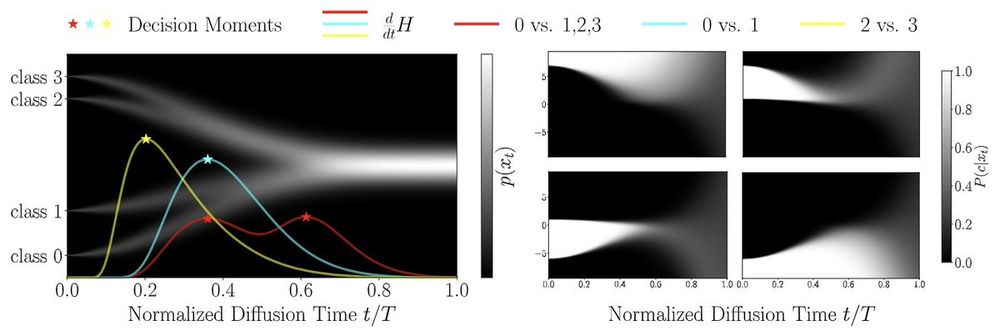

This paper connects entropy production, divergence of vector fields and spontaneous symmetry breaking

link: arxiv.org/abs/2508.19897

Both correspond to a (local or global) suppression of the quadratic potential (Hessian trace).

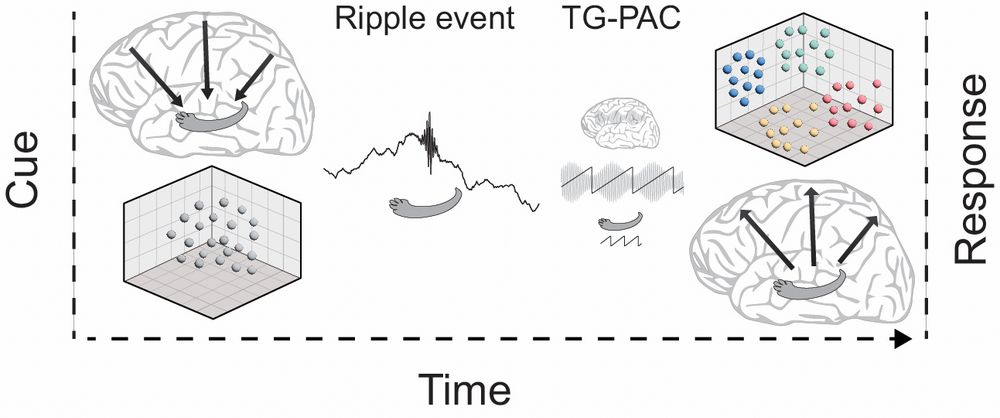

We recorded iEEG from patients during memory retrieval... and found something really cool 👇(thread)

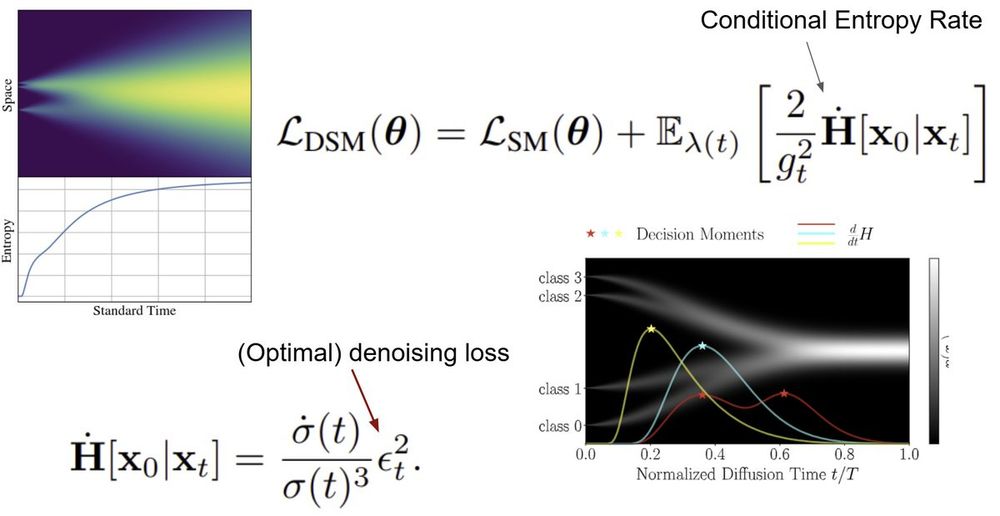

This can be directly interpreted as the information transfer (bit rate) between the state x_t and the final generation x_0.

@tyrellturing.bsky.social's Deep learning framework

www.nature.com/articles/s41...

@tonyzador.bsky.social's Next-gen AI through neuroAI

www.nature.com/articles/s41...

@adriendoerig.bsky.social's Neuroconnectionist framework

www.nature.com/articles/s41...

#ML #SDE #Diffusion #GenAI 🤖🧠

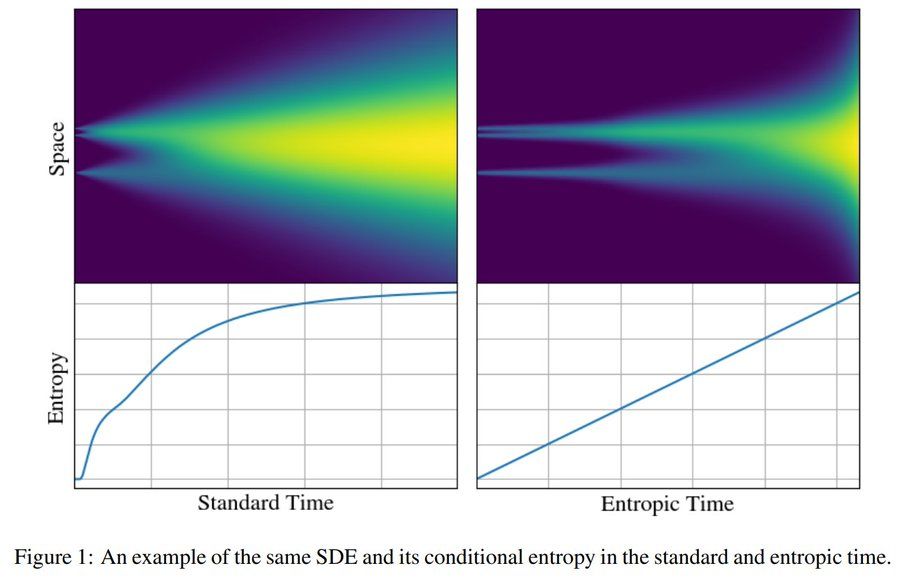

"Entropic Time Schedulers for Generative Diffusion Models"

We find that the conditional entropy offers a natural data-dependent notion of time during generation

Link: arxiv.org/abs/2504.13612

Here’s my current team at Aalto University: users.aalto.fi/~asolin/group/

We train LMs on a formal grammar, then prompt them OUTSIDE of this grammar. We find that LMs often extrapolate logical rules and apply them OOD, too. Proof of a useful inductive bias.

Check it out at NeurIPS:

nips.cc/virtual/2024...

moleculediscovery.github.io/workshop2024/

🧑‍🔬 Interested in understanding reasoning capabilities of neural networks from first principles?

🧑‍🎓 Currently studying for a BS/MS/PhD?

🧑‍💻 Have solid engineering and research skills?

🌟 We want to hear from you! Details in thread.

They could be equally applied to human beings, and they would work as well

Is it supposed to be some sort of news now??

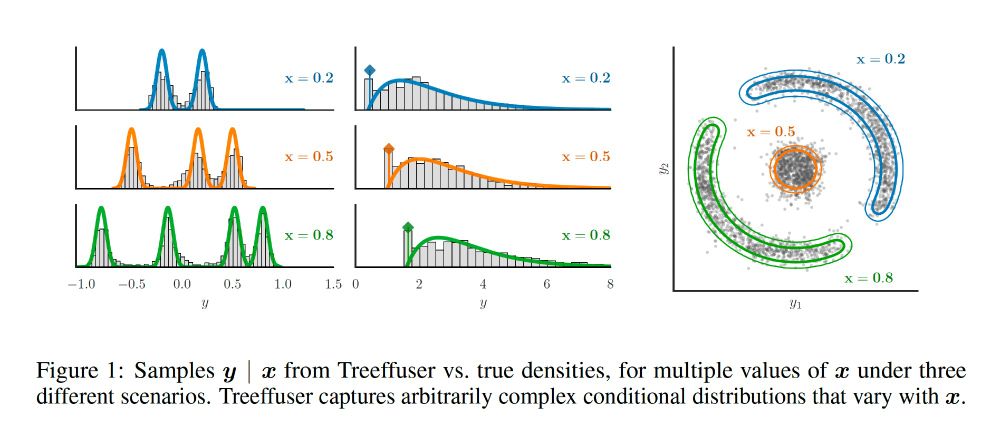

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)

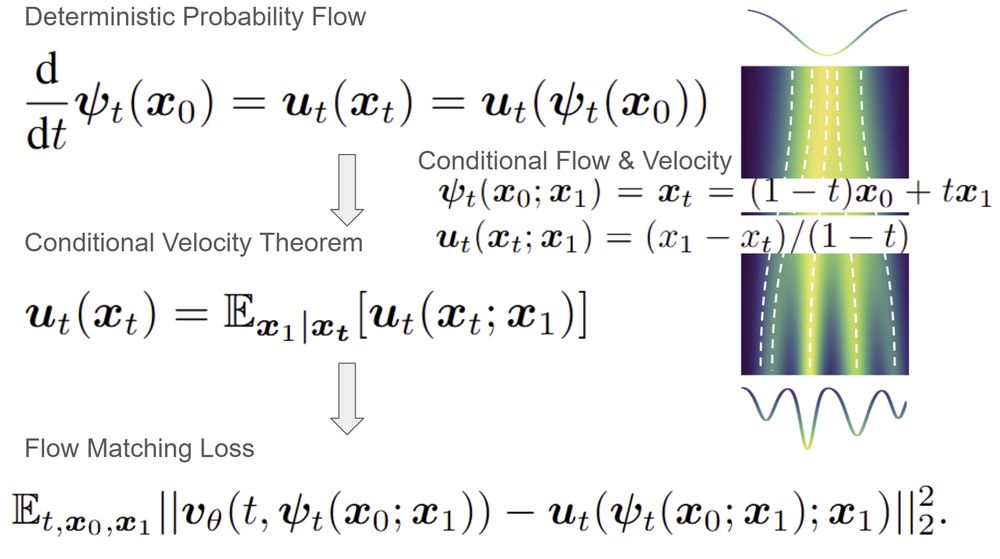

Our answer: They’re two sides of the same coin. We wrote a blog post to show how diffusion models and Gaussian flow matching are equivalent. That’s great: It means you can use them interchangeably.
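A minimal sketch of what "interchangeable" can mean in practice, not taken from the blog post itself. It assumes the linear Gaussian path x_t = (1 - t) * x_0 + t * eps, one common flow matching convention; the function name `velocity_from_noise` is hypothetical. Under that assumption, a noise prediction (the usual diffusion output) converts algebraically into a velocity (the usual flow matching output):

```python
import numpy as np

def velocity_from_noise(x_t, eps_hat, t):
    """Convert a noise prediction into a flow-matching velocity.

    Assumes the path x_t = (1 - t) * x_0 + t * eps (an assumption of this
    sketch), whose time derivative is d x_t / dt = eps - x_0.
    """
    # Clean-sample estimate implied by the noise prediction.
    x0_hat = (x_t - t * eps_hat) / (1.0 - t)
    # Velocity of the assumed path, written in terms of the two estimates.
    return eps_hat - x0_hat

# Toy check with a perfect noise prediction (eps_hat equal to the true eps).
rng = np.random.default_rng(0)
x0 = rng.normal(size=4)
eps = rng.normal(size=4)
t = 0.3
x_t = (1 - t) * x0 + t * eps

v = velocity_from_noise(x_t, eps, t)
print(np.allclose(v, eps - x0))
```

The conversion is undefined at t = 1, where x_t is pure noise and carries no information about x_0 under this path.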

Excited to share our latest work (accepted to NeurIPS 2024) on understanding working memory in multi-task RNN models using naturalistic stimuli! With @takuito.bsky.social and @bashivan.bsky.social

#tweeprint below:
