@ Blei Lab & Columbia University.

Working on probabilistic ML | uncertainty quantification | LLM interpretability.

Excited about everything ML, AI and engineering!

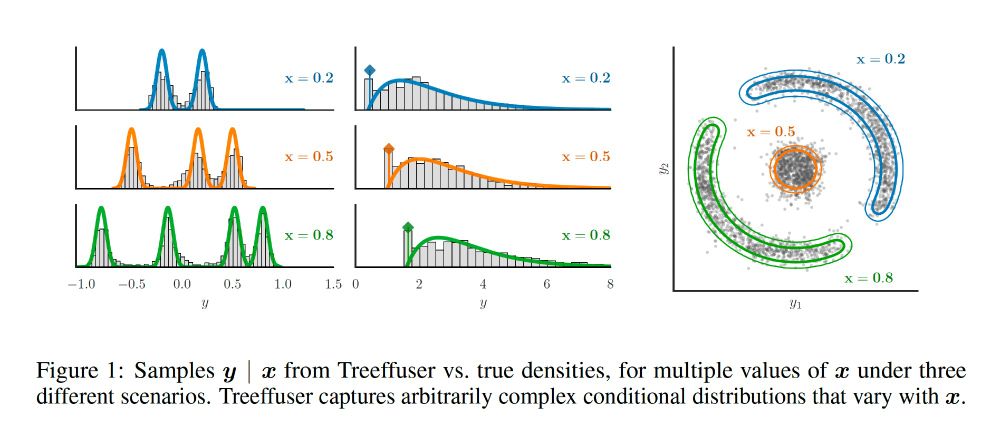

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)

Wishing you all the best in your next chapter — we’re proud of you! 💙 #Columbia2025

@bleilab.bsky.social @khanhndinh.bsky.social @elhamazizi.bsky.social

arXiv: arxiv.org/abs/2505.13111

(3/4)

Next:

* Crafting more blog content into future topics,

* DPO+ chapter,

* Meeting with publishers to get wheels turning on physical copies,

* Cleaning & cohesiveness

rlhfbook.com

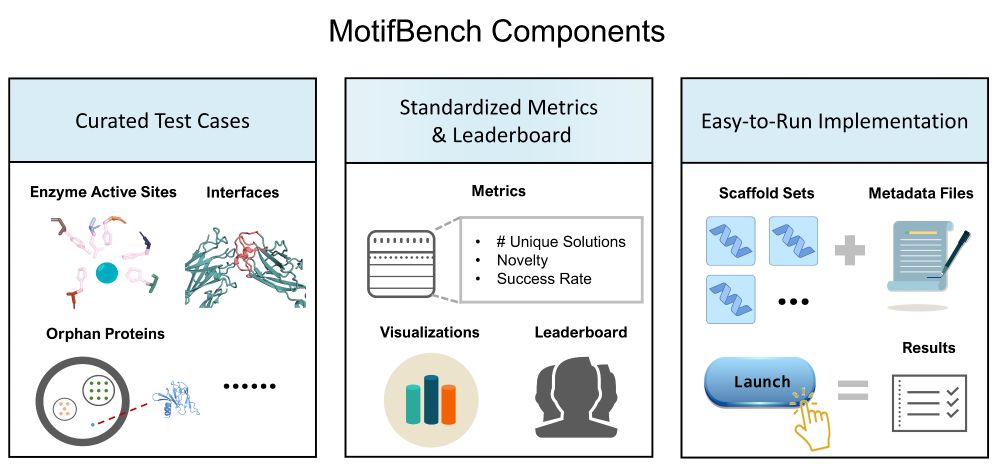

Why does this matter? Reproducibility & fair comparison have been lacking—until now.

Paper: arxiv.org/abs/2502.12479 | Repo: github.com/blt2114/Moti...

A thread ⬇️

It’s not just a great pedagogical resource: it also presents a lot of data and experiments systematically, many of them for the first time.

I have lost a lot of respect for the ML community.

🐍 github.com/gle-bellier/...

🐍 github.com/facebookrese...

📄 arxiv.org/abs/2407.15595

arxiv.org/abs/2502.02472

Policy gradient chapter is coming together. Plugging away at the book every day now.

rlhfbook.com/c/11-policy-...

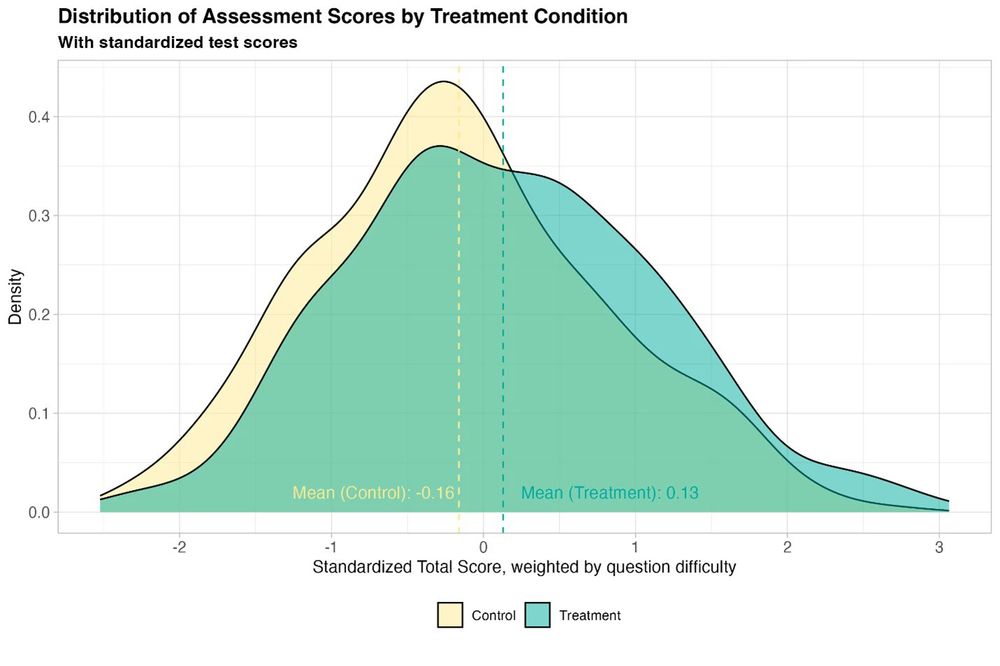

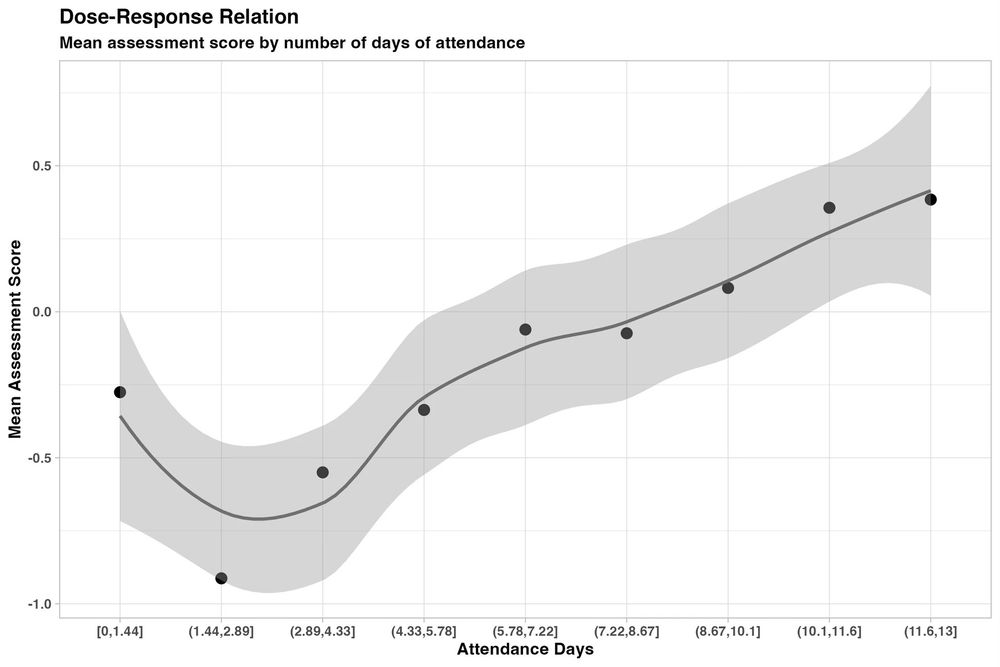

And it helped all students, especially girls who were initially behind.

I guess we need AGI just to figure out how to name things

With @elhamazizi.bsky.social and @mingxz.bsky.social, we integrate scRNA-seq & WGS to uncover how CNAs drive tumor evolution and transcriptional variability.
