📍SF Bay Area 🔗 http://jonbarron.info

This feed is a mostly-incomplete mirror of https://x.com/jon_barron; I recommend you just follow me there.

www.youtube.com/watch?v=hFlF...

Alexander Mai, Trevor Hedstrom, @grgkopanas.bsky.social, Janne Kontkanen, Falko Kuester, @jonbarron.bsky.social

tl;dr: Delaunay tetrahedralization->constant density and linear color radian->radiance mesh->radiance field

arxiv.org/abs/2512.04076

We present VisualOverload, a VQA benchmark testing simple vision skills (like counting & OCR) in dense scenes. Even the best model (o3) only scores 19.8% on our hardest split.

Interested? Join our world-class team:

🌍 spaitial.ai

youtu.be/FiGX82RUz8U

@uoftartsci.bsky.social

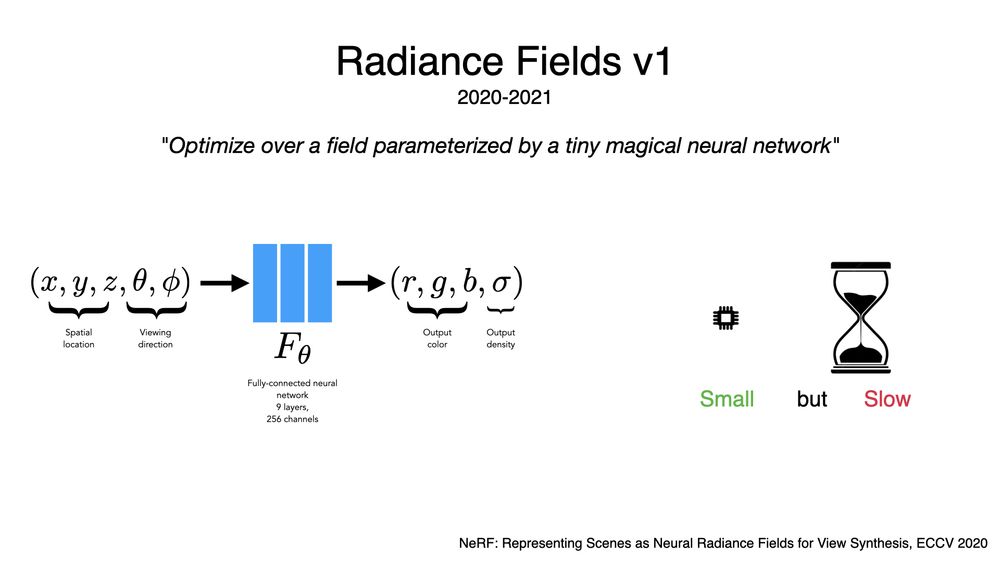

Radiance fields have had 3 distinct generations. First was NeRF: just posenc and a tiny MLP. This was slow to train but worked really well, and it was unusually compressed: the NeRF was smaller than the images.

Project page: szymanowiczs.github.io/bolt3d

Arxiv: arxiv.org/abs/2503.14445

1) Train an LLM on a new paper and record the average loss during training.

2) Evaluate the retrained LLM on benchmarks.

Your Google Scholar records avg_train_loss * benchmark_delta, and you go write your next paper.

(One of these is not like the others -- both of them basically invented the field, and I occasionally write a blog post 🥲)

I'm surprised by how well Veo 2 understands human anatomy, and amused by the things that it doesn't yet understand.

Here's `A kraken emerging from the ocean at the beach on coney island`, which I forgot to post.
