Paul Gavrikov

@paulgavrikov.bsky.social

PostDoc Tübingen AI Center | Machine Learning & Computer Vision

paulgavrikov.github.io

Tracking the spookiest runs @weightsbiases.bsky.social

October 30, 2025 at 8:42 AM

The worst part about leaving academia is that you lose eduroam access :(

October 14, 2025 at 3:00 PM

lol, a #WACV AC just poorly rephrased the weaknesses I raised in my review as their justification and ignored all other reviews ... I feel bad for the authors ...

October 7, 2025 at 4:00 PM

🚨 New paper out!

"VisualOverload: Probing Visual Understanding of VLMs in Really Dense Scenes"

👉 arxiv.org/abs/2509.25339

We test 37 VLMs on 2,700+ VQA questions about dense scenes.

Findings: even top models fumble badly, scoring <20% on the hardest split, with key failure modes in counting, OCR & consistency.

October 1, 2025 at 1:17 PM

Is basic image understanding solved in today’s SOTA VLMs? Not quite.

We present VisualOverload, a VQA benchmark testing simple vision skills (like counting & OCR) in dense scenes. Even the best model (o3) scores only 19.8% on our hardest split.

September 8, 2025 at 3:28 PM

Reposted by Paul Gavrikov

Congratulations to @paulgavrikov.bsky.social for an excellent PhD defense today!

July 8, 2025 at 7:02 PM

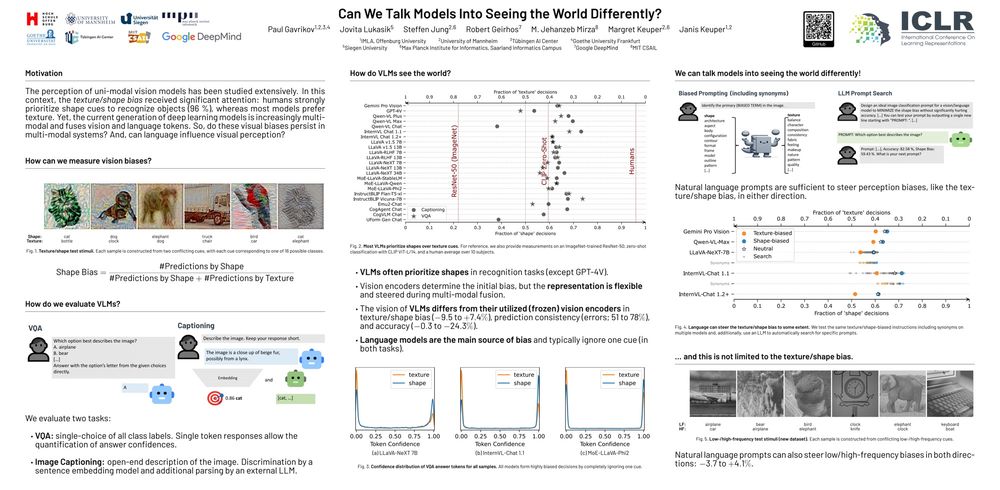

Yesterday, I had the great honor of delivering a talk on feature biases in vision models at the VAL Lab at the Indian Institute of Science (IISc). I covered our ICLR 2025 paper and a few older works in the same realm.

youtu.be/9efpCs1ltcM

Paul Gavrikov - Feature Biases in Vision Models (Research Talk @ IISc, Bengaluru)

May 28, 2025 at 12:07 PM

What an incredible week at #ICLR 2025! 🌟

I had an amazing time presenting our poster "Can We Talk Models Into Seeing the World Differently?" with @jovitalukasik.bsky.social. Huge thanks to everyone who stopped by: your questions, insights, and conversations made it such a rewarding experience.

May 3, 2025 at 10:03 AM

Today at 3pm - poster #328. See you there!

April 24, 2025 at 1:45 AM

On Thursday, I'll be presenting our paper "Can We Talk Models Into Seeing the World Differently?" (#328) at ICLR 2025 in Singapore! If you're attending or just around Singapore, I'd love to connect, so feel free to reach out! Also, I'm exploring postdoc or industry opportunities and am happy to chat!

April 21, 2025 at 10:47 AM

We should start boycotting proceedings that do not have a "cite this article" button.

February 1, 2025 at 12:19 AM

Proud to announce that our paper "Can We Talk Models Into Seeing the World Differently?" was accepted at #ICLR2025 🇸🇬. This marks my last PhD paper, and we are honored that all 4 reviewers recommended acceptance, placing us in the top 6% of all submissions.

January 27, 2025 at 5:33 PM

I analyzed the 20 most common words in the titles of all computer vision (cs.CV) papers on arXiv since 2007. A lot of "learning" papers ...

January 13, 2025 at 2:32 PM

10 years ago, #ICLR (2014) had only 69 submissions; today, nearly 12k. So many good papers: openreview.net/group?id=ICL...

December 31, 2024 at 5:44 PM

Yeah, I am sure my paper on Feature Biases in ImageNet classifiers is a great match for a journal on lifestyle and sustainable goals.

December 16, 2024 at 10:55 PM

Today at #NeurIPS2024 Interpretable AI Workshop! Poster sessions are at 10am and 4pm.

"How Do Training Methods Influence the Utilization of Vision Models?" arxiv.org/abs/2410.14470

We won’t be there, but Ruta Binkyte is presenting for us!

December 15, 2024 at 1:52 PM

Gemini 2.0 Flash is the best free-to-use LLM for writing tasks, blazing fast, and, last but not least, available in the EU ...

December 13, 2024 at 8:24 PM

One of the most interesting aspects of adversarial training to me is not that it increases robustness, but its ability to reverse biases. If a model is strongly biased toward X, it will likely ignore X completely at a sufficiently large eps. This is clearly visible in the texture/shape bias!

December 7, 2024 at 10:18 AM

The adoption of ChatGPT among non-tech users is incredible: I see so many people on public transport using the app.

December 3, 2024 at 6:27 AM

Tired of showing the same old adversarial attack examples? Generate your own with this little Foolbox-based Colab: colab.research.google.com/drive/1WfqEW...

November 29, 2024 at 1:57 PM

WILD! Some researchers from have republished ResNet under their own names in some predatory journal.

@csprofkgd.bsky.social

Predatory: ijircce.com/admin/main/s...

November 28, 2024 at 10:57 AM
