paulgavrikov.github.io

"VisualOverload: Probing Visual Understanding of VLMs in Really Dense Scenes"

👉 arxiv.org/abs/2509.25339

We test 37 VLMs on 2,700+ VQA questions about dense scenes.

Findings: even top models fumble badly, scoring <20% on the hardest split, with key failure modes in counting, OCR & consistency.

We present VisualOverload, a VQA benchmark testing simple vision skills (like counting & OCR) in dense scenes. Even the best model (o3) only scores 19.8% on our hardest split.

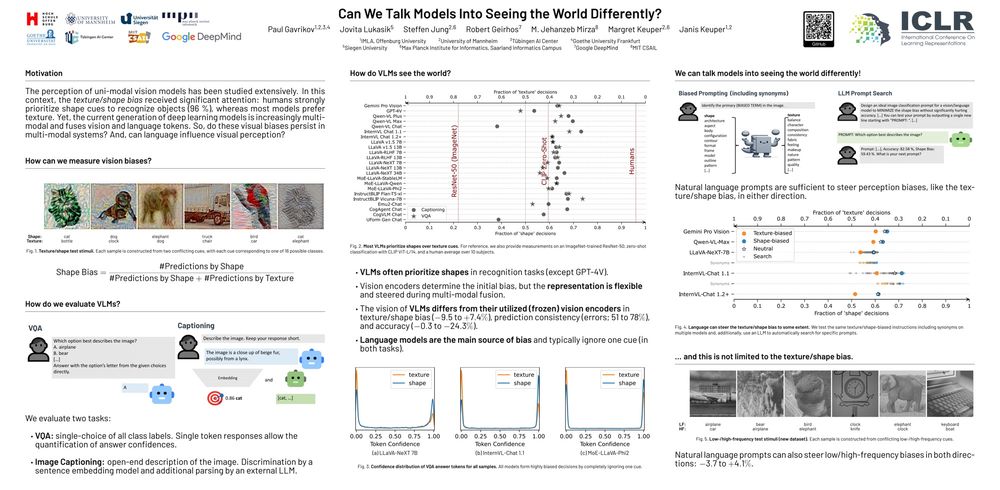

I had an amazing time presenting our poster "Can We Talk Models Into Seeing the World Differently?" with @jovitalukasik.bsky.social. Huge thanks to everyone who stopped by. Your questions, insights, and conversations made it such a rewarding experience.

"How Do Training Methods Influence the Utilization of Vision Models?" arxiv.org/abs/2410.14470

We won’t be there, but Ruta Binkyte is presenting for us!

@csprofkgd.bsky.social

Predatory: ijircce.com/admin/main/s...
