I like 3D vision and training neural networks.

Code: https://github.com/parskatt

Weights: https://github.com/Parskatt/storage/releases/tag/roma

Pixel correspondence makes more sense than feature matching (what is a feature?).
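The post is an opinion, but the distinction can be made concrete. Below is a minimal sketch, assuming per-pixel descriptors for both images are already available. Dense correspondence gives every pixel in A a location in B (a discrete warp), instead of matching a sparse set of detected keypoints. The brute-force nearest-neighbour search and the function name `dense_nn_correspondence` are illustrative assumptions. This is not how RoMa actually computes its warp.

```python
import numpy as np


def dense_nn_correspondence(desc_a: np.ndarray, desc_b: np.ndarray) -> np.ndarray:
    """Brute-force dense matching: every pixel in A gets a location in B.

    desc_a: (Ha, Wa, C) and desc_b: (Hb, Wb, C) per-pixel descriptors,
    assumed L2-normalised so the dot product is cosine similarity.
    Returns an (Ha, Wa, 2) integer array of (row, col) coordinates in B,
    i.e. a discrete warp from A into B.
    """
    ha, wa, c = desc_a.shape
    _, wb, _ = desc_b.shape
    # Cosine similarity between every pixel of A and every pixel of B.
    sim = desc_a.reshape(-1, c) @ desc_b.reshape(-1, c).T  # (Ha*Wa, Hb*Wb)
    best = sim.argmax(axis=1)  # flat index of the best match in B
    rows, cols = np.divmod(best, wb)  # flat index -> (row, col)
    return np.stack([rows, cols], axis=-1).reshape(ha, wa, 2)
```

Matching an image against itself returns the identity grid. The all-pairs similarity matrix grows quadratically with resolution, so real dense matchers avoid computing it at full resolution.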

Not sure if this will be a good or bad thing.

The posted figure is only showing the top-50.

No shit it's gonna show the biggest companies and unis.

There's a productive conversation to be had, but this ain't it.

aiworld.eu/story/from-b...

pypi.org/project/pyco...

- debacle will not impact reviewing in a significant way

- ratings will likely be similar next year

- peer review will continue to struggle with review load

Zhimin Shao, Abhay Yadav, Rama Chellappa, Cheng Peng

tl;dr: 3D VFM + 2D ConvNet → feature extraction backbone; 3D descriptor head (for geometry) + 2D warp head (for pattern) fusion

arxiv.org/abs/2511.17750

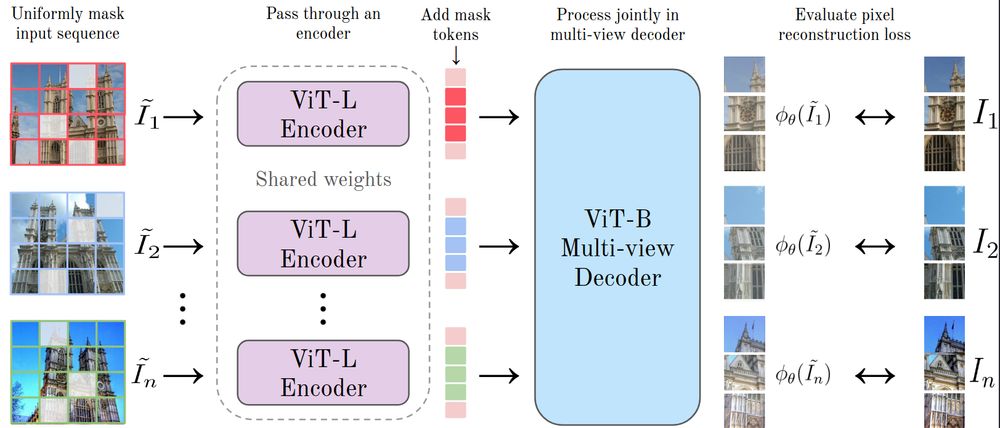

However, for 3D tasks we show that a scaled and simplified version of multi-view MAE (which we call MuM) can outperform DINOv3, all while using orders of magnitude less compute!

TL;DR: Spiritual successor to CroCo with a simpler multi-view objective and larger scale. Beats DINOv3 and CroCo v2 in RoMa, feedforward reconstruction, and relative pose estimation.

arxiv.org/abs/2511.17309

github.com/davnords/mum
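The posts describe MuM only as a "scaled and simplified version of multi-view MAE". The following is a generic illustration of MAE-style masking extended to several views of a scene. Each view has a fraction of its patches masked independently, and the reconstruction loss is computed on the masked patches only. The uniform per-view masking, the function names, and the 0.75 ratio (the single-image MAE default) are assumptions for illustration. They are not MuM's actual recipe, which is in the linked paper and repo.

```python
import numpy as np


def random_view_masks(num_views: int, num_patches: int, mask_ratio: float,
                      rng: np.random.Generator) -> np.ndarray:
    """Independently mask a fixed fraction of patches in each view.

    Returns a boolean (num_views, num_patches) array where True means the
    patch is hidden from the encoder and must be reconstructed.
    """
    num_masked = int(round(mask_ratio * num_patches))
    mask = np.zeros((num_views, num_patches), dtype=bool)
    for v in range(num_views):
        # Pick a random subset of patch indices to hide in this view.
        mask[v, rng.permutation(num_patches)[:num_masked]] = True
    return mask


def masked_mse(pred: np.ndarray, target: np.ndarray, mask: np.ndarray) -> float:
    """MAE-style loss: mean squared error over masked patches only.

    pred, target: (num_views, num_patches, patch_dim); mask: (num_views, num_patches).
    """
    per_patch = ((pred - target) ** 2).mean(axis=-1)  # (num_views, num_patches)
    return float(per_patch[mask].mean())
```

For example, with 3 views of 196 patches each and a ratio of 0.75, 147 patches are hidden per view. In a multi-view setup, the visible patches of one view can then carry information about what is hidden in another.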

Results are pretty crisp, but it doesn't really deal with clouds (it's literally just a linear model on top of some coarse segmentation output).
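The post does not say what the linear model predicts or how it is fit. The following is a minimal sketch, assuming the coarse segmentation output is a per-pixel vector (for example, class logits) and the target is a hypothetical per-pixel scalar. It fits a linear model by ordinary least squares and applies it to a whole segmentation map. Least squares and both function names are illustrative choices, not the author's setup.

```python
import numpy as np


def fit_linear_head(feats: np.ndarray, targets: np.ndarray):
    """Least-squares fit of a per-pixel linear model: y = feats @ w + b.

    feats: (N, K) coarse segmentation outputs (e.g. per-class logits) for N pixels.
    targets: (N,) per-pixel target values.
    """
    design = np.hstack([feats, np.ones((feats.shape[0], 1))])  # append bias column
    coef, *_ = np.linalg.lstsq(design, targets, rcond=None)
    return coef[:-1], coef[-1]


def apply_linear_head(seg_out: np.ndarray, w: np.ndarray, b: float) -> np.ndarray:
    """Apply the fitted model to an (H, W, K) segmentation map -> (H, W) scores."""
    return seg_out @ w + b
```

Each pixel is mapped independently, so the model can only use what the segmentation output already encodes at that pixel.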

Here are the main improvements we made since RoMa:
