Deep Learning for Computer Vision.

Strengthen your ViTs: https://github.com/davnords/octic-vits

If you’re at the conference, you’re welcome to visit our poster #516!

bmvc2025.bmva.org/proceedings/...

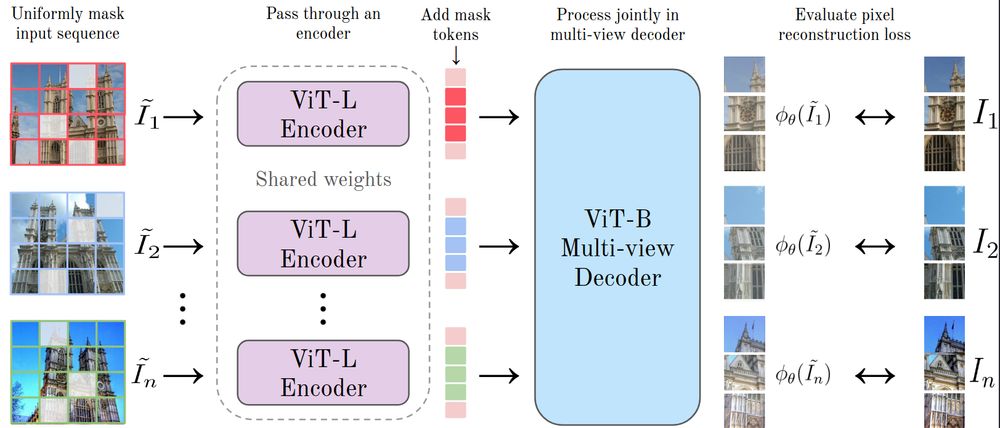

TLDR; Spiritual successor to CroCo with a simpler multi-view objective and larger scale. Beats DINOv3 and CroCo v2 in RoMa, feedforward reconstruction, and rel. pose.

arxiv.org/abs/2511.17309

github.com/davnords/mum

Very strong and versatile model overall. RoMa v2 can match anything you'd like. Even @bokmangeorg.bsky.social's hand-annotated satellite images.

Here are the main improvements we made since RoMa:

@parskatt.bsky.social et 11 al.

tl;dr: in title.

Predicts per-pixel covariance, trains on more datasets, uses DINOv3, and adjusts the architecture.

arxiv.org/abs/2511.15706
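
To make "predicts per-pixel covariance" concrete, here is a minimal sketch of the standard Gaussian negative log-likelihood a matcher could minimize when it outputs a 2x2 covariance for each pixel's match alongside the match itself. This is an illustrative assumption, not the RoMa v2 loss: the function names are hypothetical, and the paper's actual parameterization and training objective may differ.

```python
import math


def gaussian_nll_2d(residual, cov):
    """Negative log-likelihood of a 2D residual under N(0, cov).

    residual: (dx, dy) between the predicted and ground-truth match location.
    cov: ((a, b), (b, c)), a symmetric positive-definite 2x2 covariance.
    (Hypothetical helper for illustration only.)
    """
    (a, b), (b2, c) = cov
    if b != b2:
        raise ValueError("covariance must be symmetric")
    det = a * c - b * b
    if a <= 0 or det <= 0:
        raise ValueError("covariance must be positive definite")
    dx, dy = residual
    # Mahalanobis term r^T cov^{-1} r, using the closed-form 2x2 inverse
    # cov^{-1} = [[c, -b], [-b, a]] / det.
    maha = (c * dx * dx - 2 * b * dx * dy + a * dy * dy) / det
    # 0.5 * (maha + log det) + (d / 2) * log(2 pi), with d = 2.
    return 0.5 * (maha + math.log(det)) + math.log(2 * math.pi)


def mean_nll(residuals, covs):
    """Average the per-pixel NLL over all pixels that have a ground-truth match."""
    losses = [gaussian_nll_2d(r, s) for r, s in zip(residuals, covs)]
    return sum(losses) / len(losses)


# A large horizontal residual is penalized less when the predicted
# covariance is wide along x than when it is isotropic.
tight = gaussian_nll_2d((3.0, 0.0), ((1.0, 0.0), (0.0, 1.0)))
loose = gaussian_nll_2d((3.0, 0.0), ((9.0, 0.0), (0.0, 1.0)))
```

Inflating the covariance along the direction of a large residual lowers the Mahalanobis term, while the log-determinant term keeps the model from inflating it everywhere; together they let a network express direction-dependent uncertainty rather than a single scalar confidence.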

Glyph is just a framework, not a model, but they got Qwen3-8B (128k context) to handle over 1 million tokens of context by rendering the input as images.

arxiv.org/abs/2510.17800
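
A back-of-the-envelope check of what that claim implies: fitting over 1 million text tokens into a 128k-token window means each visual token has to carry, on average, at least about 8 text tokens' worth of content. The sketch below only does this arithmetic from the two numbers in the post; it does not reproduce Glyph's rendering pipeline, ignores prompt and output tokens, and reading "128k" as 128,000 tokens is an assumption. The function names are hypothetical.

```python
import math


def min_compression_ratio(text_tokens, context_window):
    """Smallest average text-tokens-per-visual-token ratio needed to fit the input."""
    return text_tokens / context_window


def visual_tokens_needed(text_tokens, ratio):
    """Visual tokens consumed if each visual token encodes `ratio` text tokens."""
    return math.ceil(text_tokens / ratio)


# Assumption: "128k" is read as 128,000 tokens.
ratio = min_compression_ratio(1_000_000, 128_000)
print(ratio)  # 7.8125
```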

I wonder if it is just a data issue for VGGT or if it is deeper than that. I mean, VGGT was trained mostly on synthetic data. What do you think?

@parskatt.bsky.social PhD Thesis.

tl;dr: would be useful for teaching image matching - nice explanations. (too) Fancy and stylish notation. Cool acknowledgements section and cover image.

liu.diva-portal.org/smash/record...

Born too early to explore the galaxy.

Born just in time to \nabla_{\theta}f_{\theta}

🗓️ Date: 16:00-18:00, Fri, Jun 13 (Today)

📍Place: Poster #115 in Session 2 (ExHall D)

💻 Code: github.com/ericssonrese...

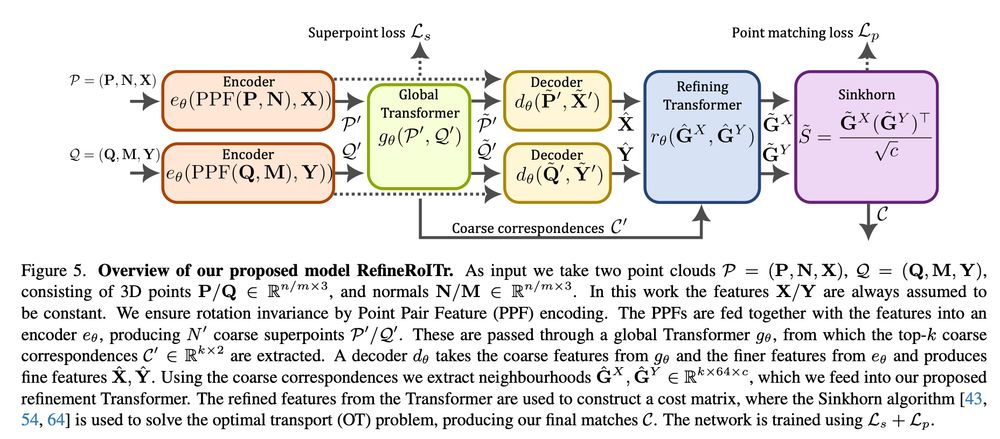

@parskatt.bsky.social , André Mateus, Alberto Jaenal

tl;dr: in title, learning to register SfM point clouds.

arxiv.org/abs/2503.17093

Turns out that this is perfect for octic equivariance, as our biggest gains are there!

TLDR; We extend @bokmangeorg.bsky.social's reflection-equivariant ViTs to the (octic) group of 90-degree rotations and reflections and... it just works... (DINOv2+DeiT)

Code: github.com/davnords/octic-vits
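
For readers new to the octic group (the dihedral group D4): it has 8 elements, namely the four 90-degree rotations, each with or without a reflection. The plain-Python sketch below, on a square grid, only illustrates the group itself and one generic way to get invariance, averaging a function over the group orbit. It is not the octic-ViT architecture from the repo, which builds equivariance into the network layers; all function names here are hypothetical.

```python
from typing import Callable, List

Grid = List[List[int]]


def rot90(g: Grid) -> Grid:
    """Rotate a square grid 90 degrees counterclockwise (transpose, then reverse rows)."""
    return [list(row) for row in zip(*g)][::-1]


def flip(g: Grid) -> Grid:
    """Mirror a grid left-to-right."""
    return [row[::-1] for row in g]


def octic_orbit(g: Grid) -> List[Grid]:
    """All 8 images of g under the octic group: 4 rotations, each with and without a flip."""
    out = []
    cur = g
    for _ in range(4):
        out.append(cur)
        out.append(flip(cur))
        cur = rot90(cur)
    return out


def symmetrize(f: Callable[[Grid], float]) -> Callable[[Grid], float]:
    """Group averaging: the mean of f over the orbit is invariant to all 8 transforms."""
    def f_inv(g: Grid) -> float:
        orbit = octic_orbit(g)
        return sum(f(h) for h in orbit) / len(orbit)
    return f_inv


def weighted(g: Grid) -> int:
    """A deliberately non-invariant example function: position-weighted sum of entries."""
    n = len(g)
    return sum(g[i][j] * (i * n + j) for i in range(n) for j in range(n))


g = [[1, 2, 3], [4, 5, 6], [7, 8, 9]]
inv = symmetrize(weighted)
```

Here `weighted(g)` changes when `g` is rotated, while `inv` returns the same value for every grid in `octic_orbit(g)`. Averaging costs 8 forward passes per input, which is one reason to prefer architectures that are equivariant by construction.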

We merge SfM reconstructions with point cloud registration.

Link: arxiv.org/abs/2503.17093

Code: Not yet public, but coming later.
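
Monocular SfM reconstructions are only defined up to a similarity transform (scale, rotation, translation; 7 degrees of freedom), so merging two of them comes down to estimating that transform and mapping one model into the other's frame. The sketch below shows only the final map-and-merge step, assuming the transform (s, R, t) has already been estimated; how it is estimated (the learned registration in the paper) is not reproduced, duplicate points are not removed, and the helper names are hypothetical.

```python
from typing import List, Sequence

Vec3 = Sequence[float]
Mat3 = Sequence[Sequence[float]]


def apply_sim3(points: Sequence[Vec3], scale: float, R: Mat3, t: Vec3) -> List[List[float]]:
    """Map points from reconstruction B's frame into A's frame: p' = s * R @ p + t."""
    out = []
    for p in points:
        # Matrix-vector product R @ p, written out for 3D.
        rp = [sum(R[i][k] * p[k] for k in range(3)) for i in range(3)]
        out.append([scale * rp[i] + t[i] for i in range(3)])
    return out


def merge_reconstructions(points_a, points_b, scale, R, t):
    """Express B in A's frame and concatenate the two point sets (no deduplication)."""
    return [list(p) for p in points_a] + apply_sim3(points_b, scale, R, t)


# Example: B is rotated 90 degrees about z, half the scale, and shifted relative to A.
R_z90 = [[0, -1, 0], [1, 0, 0], [0, 0, 1]]
merged = merge_reconstructions([(5, 5, 5)], [(1, 0, 0)], 2, R_z90, (0, 0, 1))
```

Camera poses from B would be mapped with the same (s, R, t), so the merged model keeps a single consistent gauge.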

Project Page: vgg-t.github.io

Code & Weights: github.com/facebookrese...

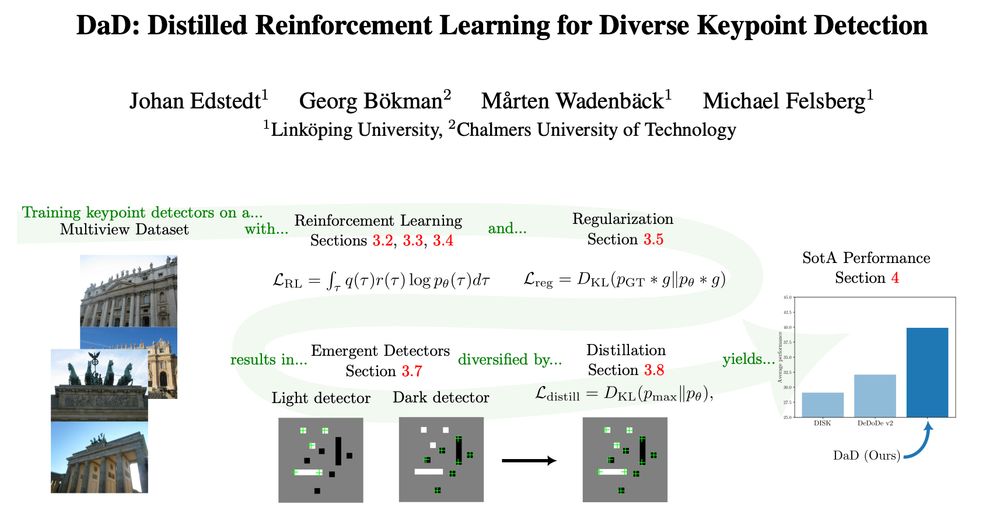

As this will get pretty long, I'll split it into two threads.

The first covers the RL part, and the second covers emergence and distillation.