Generative Vision (Stable Diffusion, VQGAN) & Representation Learning

🌐 https://ommer-lab.com

Come say hi in Honolulu!

👋 Pingchuan, Ming, Felix, Stefan, Timy, and Björn Ommer will be attending.

Everything you need to know 👉 elsa-ai.eu/elsa-welcome...

RepTok 🦎 highlights how compact generative representations can retain both realism and semantic structure.

💡 It works if we leverage a self-supervised representation!

Meet RepTok🦎: A generative model that encodes an image into a single continuous latent while keeping realism and semantics. 🧵 👇

We built the Flow Poke Transformer (FPT) to model multi-modal scene dynamics from sparse interactions.

It learns to predict the 𝘥𝘪𝘴𝘵𝘳𝘪𝘣𝘶𝘵𝘪𝘰𝘯 of motion itself 🧵👇

‼️ The application portal will be open from 15 October to 14 November 2025.

Find out more: mcml.ai/opportunitie...

How do we build open and trustworthy AI in Europe?

🎙️ In a recent radio interview, Luk Overmeire from VRT shared insights on ELLIOT, #FoundationModels and the role of public broadcasters in shaping human-centred AI.

📻 Interview in Dutch: mimir.mjoll.no/shares/JRqlO...

Björn Ommer followed with insights into how GenAI is commodifying intelligence and reshaping how we use computers.

30 partners from 12 countries 🌍 launched this exciting journey to advance open, trustworthy AI and #FoundationModels across Europe. 🤖

Stay tuned for more updates on #AIresearch and #TrustworthyAI! 💡

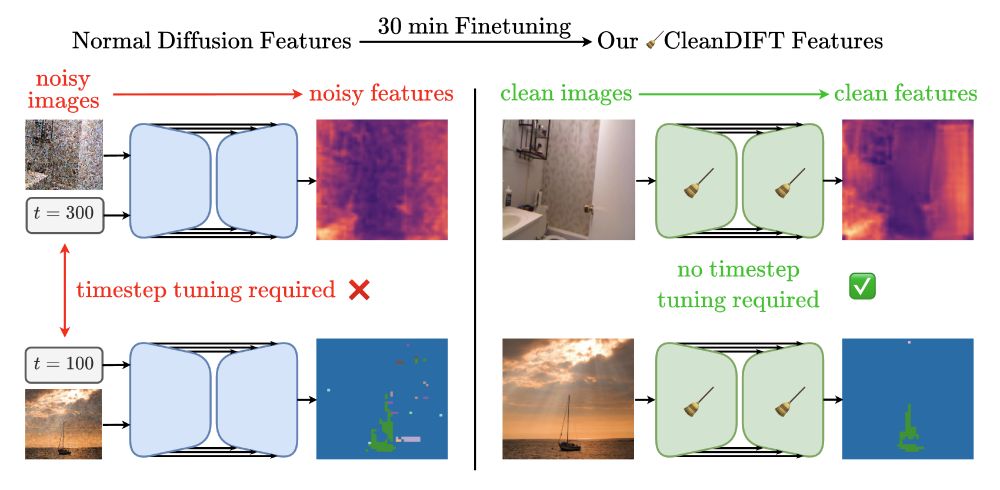

@rmsnorm.bsky.social*, @stefanabaumann.bsky.social*, @koljabauer.bsky.social*, @frankfundel.bsky.social, Björn Ommer

Oral Session 1C (Davidson Ballroom): Friday 9:00

Poster Session 1 (ExHall D): Friday 10:30-12:30, #218

compvis.github.io/cleandift/

Come say hi in Nashville!

👋 Johannes, Ming, Kolja, Stefan, and Björn will be attending.

🔍 Follow for updates on #OpenScience & #FoundationModels.

📌 Poster Session 6, Sunday 4:00 to 6:00 PM, Poster #208

📅 January 29, 2025, 6:00 PM

📍 Große Aula, LMU Munich

Full program here: www.ai-news.lmu.de/grand-openin...

Meanwhile, academic conferences are struggling to afford coffee breaks. Want this for EPSA!

@compvis.bsky.social

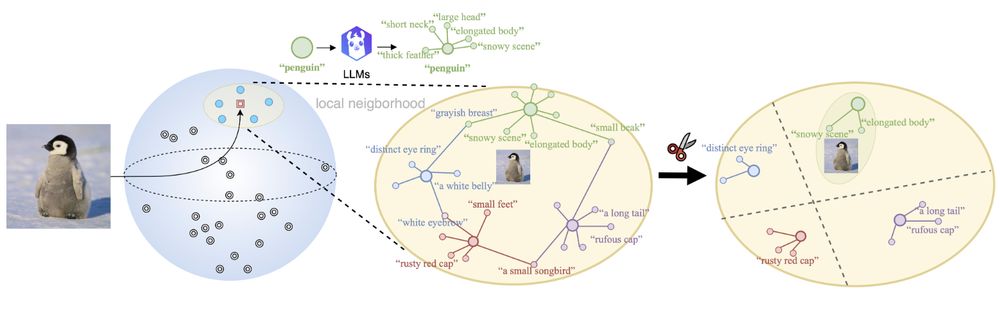

🤨 Interested? Check out our latest work at #AAAI25:

💻Code and 📝Paper at: github.com/CompVis/DisCLIP

🧵👇

We showed exactly that for a fundamental task:

semantic correspondence📍

A thread 🧵👇

Yes, it is, but we found a way to do better. 🚀

Here’s how we unlock better features, no noise, no hassle.

📝 Project Page: compvis.github.io/cleandift

💻 Code: github.com/CompVis/clea...

🧵👇