Interested in extracting world understanding from models and more controlled generation. 🌐 https://stefan-baumann.eu/

• Articulated motion (Drag-A-Move): fine-tuned FPT outperforms specialized models for motion prediction

• Face motion: zero-shot, beats specialized baselines

• Moving part segmentation: emerges from formulation

FPT 𝘳𝘦𝘱𝘳𝘦𝘴𝘦𝘯𝘵𝘴 𝘵𝘩𝘦 𝘧𝘶𝘭𝘭 𝘮𝘰𝘵𝘪𝘰𝘯 𝘥𝘪𝘴𝘵𝘳𝘪𝘣𝘶𝘵𝘪𝘰𝘯, enabling:

• interpretable uncertainty

• controllable interaction effects

• efficient prediction (>100k predictions/s)
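
As a rough illustration of why a full distribution gives *interpretable* uncertainty, the sketch below assumes (hypothetically) that the predicted motion of one query point along one axis is a Gaussian mixture, and reads off its total variance with the law of total variance. The `Component` class, the parameter values, and the mixture parameterization itself are illustrative assumptions; FPT's actual output format may differ.

```python
from dataclasses import dataclass


@dataclass
class Component:
    """One Gaussian component of a hypothetical 1D motion distribution."""
    weight: float  # mixture weight; weights across components sum to 1
    mean: float    # mean displacement in pixels (made-up units)
    std: float     # standard deviation of this component


def mixture_mean(components: list[Component]) -> float:
    return sum(c.weight * c.mean for c in components)


def mixture_variance(components: list[Component]) -> float:
    # Law of total variance: Var = E[variance within components]
    #                            + variance of the component means.
    m = mixture_mean(components)
    within = sum(c.weight * c.std ** 2 for c in components)
    between = sum(c.weight * (c.mean - m) ** 2 for c in components)
    return within + between


# One clear mode vs. two equally likely, opposite modes (hypothetical values).
confident = [Component(1.0, 3.0, 0.5)]
ambiguous = [Component(0.5, 3.0, 0.5), Component(0.5, -3.0, 0.5)]

for name, comps in [("confident", confident), ("ambiguous", ambiguous)]:
    print(f"{name}: mean={mixture_mean(comps):+.2f}, variance={mixture_variance(comps):.2f}")
```

Splitting the variance into a within-mode and a between-mode term is what makes the number readable: in the ambiguous case almost all of the total (9.0 of 9.25) comes from the between-mode term, which signals several distinct plausible motions rather than one noisy one.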

Predict 𝗱𝗶𝘀𝘁𝗿𝗶𝗯𝘂𝘁𝗶𝗼𝗻𝘀 of motion, not just one flow field instance.

Given a few pokes, our model outputs the probability 𝘥𝘪𝘴𝘵𝘳𝘪𝘣𝘶𝘵𝘪𝘰𝘯 of how parts of the scene might move.

→ This directly captures 𝘶𝘯𝘤𝘦𝘳𝘵𝘢𝘪𝘯𝘵𝘺 and interactions.

But most models predict 𝗼𝗻𝗲 𝗳𝘂𝘁𝘂𝗿𝗲, a single deterministic motion.

The reality is 𝘶𝘯𝘤𝘦𝘳𝘵𝘢𝘪𝘯 and 𝘮𝘶𝘭𝘵𝘪-𝘮𝘰𝘥𝘢𝘭: one poke can lead to many outcomes.
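
To make the "one poke, many outcomes" point concrete, here is a minimal 1D sketch with made-up numbers: a point that, after the same poke, moves by roughly +1 or roughly -1 with equal probability. A single deterministic prediction fit with squared error drifts toward the average outcome, which lands between the two modes where almost no real outcome lies. The Gaussian-mixture density used to score it is an assumption for illustration, not necessarily FPT's exact parameterization.

```python
import math
import random
import statistics


def normal_pdf(x: float, mean: float, std: float) -> float:
    z = (x - mean) / std
    return math.exp(-0.5 * z * z) / (std * math.sqrt(2.0 * math.pi))


def mixture_pdf(x: float, weights, means, stds) -> float:
    # Density of a 1D Gaussian mixture at x.
    return sum(w * normal_pdf(x, m, s) for w, m, s in zip(weights, means, stds))


# Hypothetical bimodal outcome: after the same poke, a point moves by
# roughly +1 or roughly -1 (equal probability, small spread around each).
weights, means, stds = [0.5, 0.5], [1.0, -1.0], [0.2, 0.2]

rng = random.Random(0)
samples = [rng.gauss(rng.choice(means), 0.2) for _ in range(10_000)]

# The constant prediction that minimizes squared error is the sample mean.
deterministic = statistics.fmean(samples)

density_at_mean = mixture_pdf(deterministic, weights, means, stds)
density_at_mode = mixture_pdf(1.0, weights, means, stds)
print(f"single prediction: {deterministic:+.3f}")
print(f"density there: {density_at_mean:.1e}, density at a mode: {density_at_mode:.2f}")
```

The single prediction sits near zero, a displacement the data almost never produces, while the mixture keeps both plausible outcomes.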

We built the Flow Poke Transformer (FPT) to model multi-modal scene dynamics from sparse interactions.

It learns to predict the 𝘥𝘪𝘴𝘵𝘳𝘪𝘣𝘶𝘵𝘪𝘰𝘯 of motion itself 🧵👇

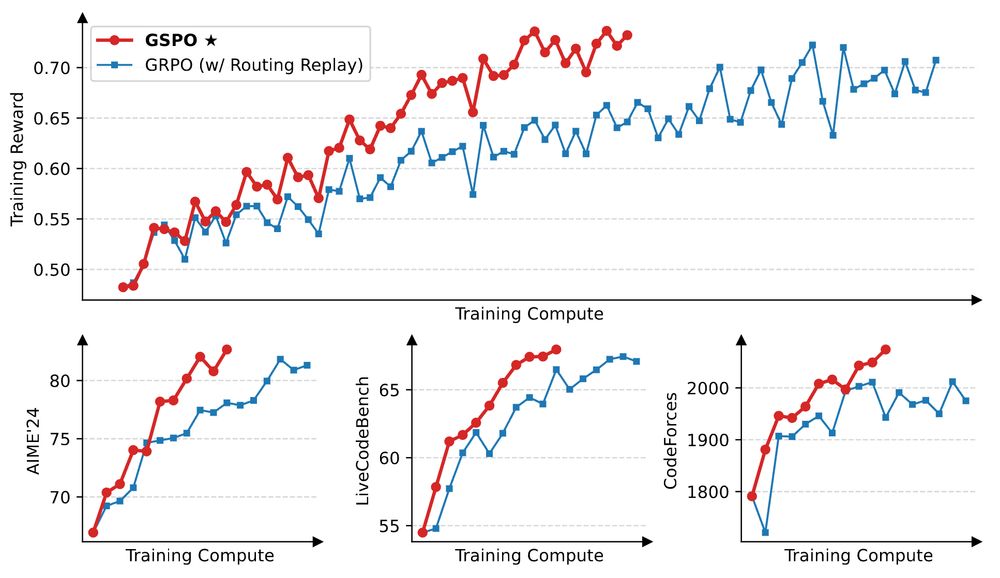

Makes everything behave much better (see below) and makes more sense from a theoretical perspective, too.

Paper: www.arxiv.org/abs/2507.18071

Intuitively, it makes much more sense to work at the sequence level rather than the token level when the rewards are assigned at the sequence level.
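
One way to read this: when a reward scores a whole sequence, a policy-gradient update can use one importance ratio per sequence instead of one per token. The sketch below assumes the sequence-level ratio is the length-normalized likelihood ratio (the geometric mean of the token ratios) combined with a PPO-style clipped surrogate; the log-probabilities, advantage, and clipping range are hypothetical values, and this is not presented as the linked paper's exact objective.

```python
import math


def token_ratios(logp_new: list[float], logp_old: list[float]) -> list[float]:
    # One importance ratio per token: pi_new(y_t | ...) / pi_old(y_t | ...).
    return [math.exp(n - o) for n, o in zip(logp_new, logp_old)]


def sequence_ratio(logp_new: list[float], logp_old: list[float]) -> float:
    # Length-normalized sequence ratio (pi_new(y) / pi_old(y)) ** (1 / |y|),
    # computed in log space; equals the geometric mean of the token ratios.
    diffs = [n - o for n, o in zip(logp_new, logp_old)]
    return math.exp(sum(diffs) / len(diffs))


def clipped_objective(ratio: float, advantage: float, eps: float = 0.2) -> float:
    # PPO-style clipped surrogate for a single ratio and advantage.
    clipped = min(max(ratio, 1.0 - eps), 1.0 + eps)
    return min(ratio * advantage, clipped * advantage)


# Hypothetical per-token log-probs for one sampled response.
logp_old = [-1.0, -2.0, -0.5]
logp_new = [-0.9, -2.2, -0.5]
advantage = 1.0  # a single advantage derived from the sequence-level reward

tok = token_ratios(logp_new, logp_old)
seq = sequence_ratio(logp_new, logp_old)

# Token level: the sequence's advantage is copied to every token,
# and each token gets its own ratio and its own clipping decision.
token_level = sum(clipped_objective(r, advantage) for r in tok) / len(tok)
# Sequence level: one ratio, one advantage, one clipping decision.
sequence_level = clipped_objective(seq, advantage)
print([round(r, 3) for r in tok], round(seq, 3), round(token_level, 3), round(sequence_level, 3))
```

The design difference is where the off-policy correction and clipping happen: the token-level version corrects and clips each token separately even though the reward says nothing about individual tokens, while the sequence-level version does both once, on the unit the reward actually scores.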
