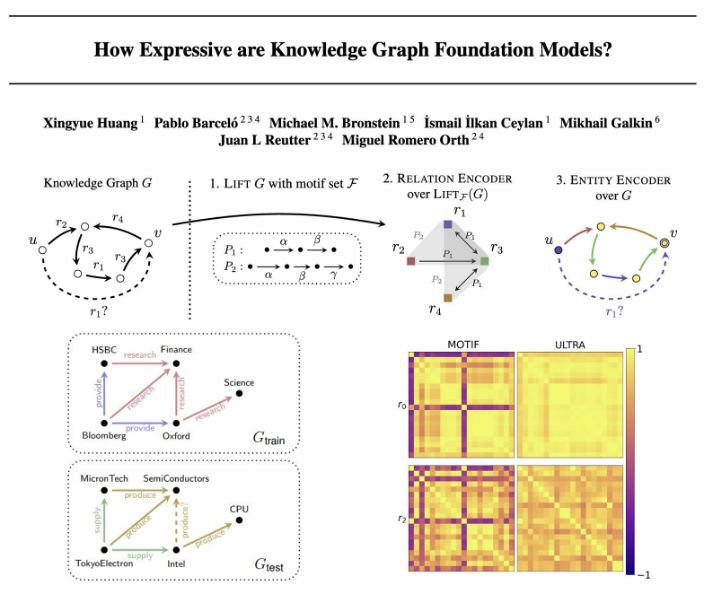

With Pablo Barcelo, Ismail Ceylan, @mmbronstein.bsky.social, @mgalkin.bsky.social, Juan Reutter, Miguel Romero!

nature.com/articles/s41...

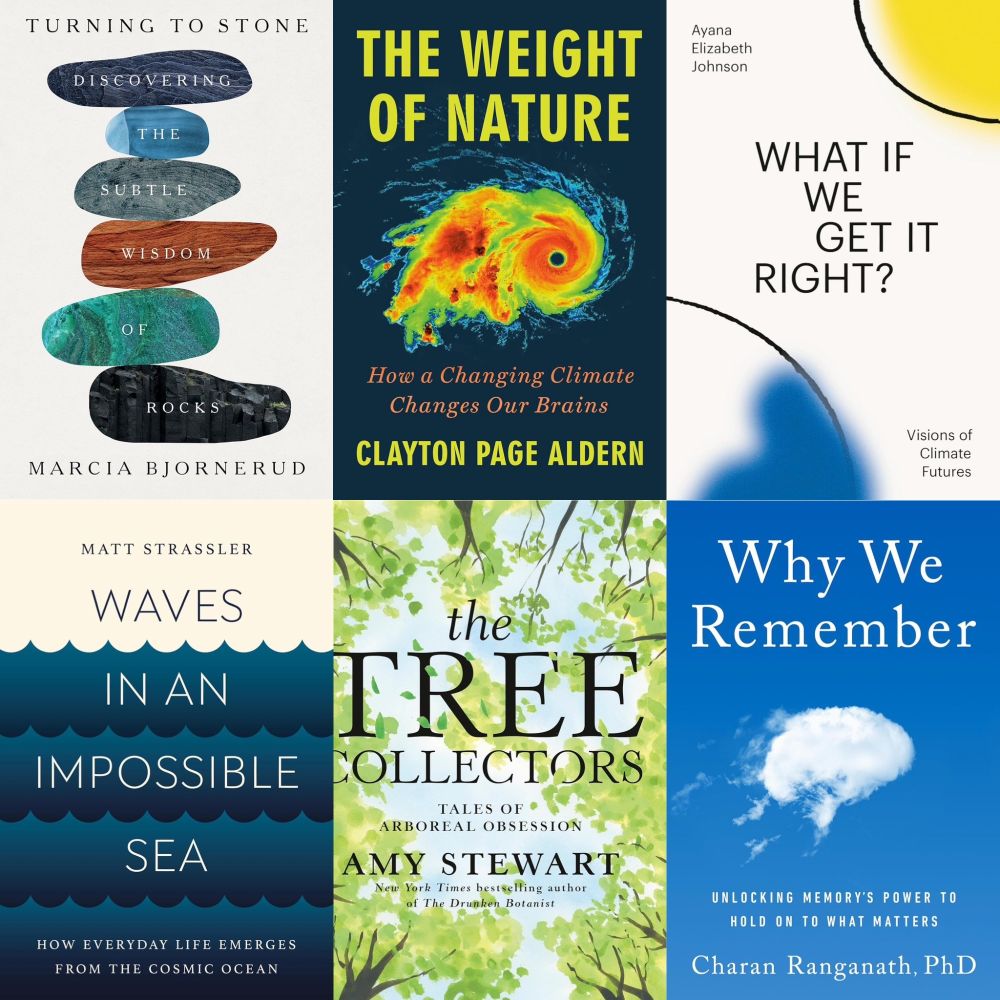

🧠 #compneuro

youtu.be/UGO_Ehywuxc

Please consider donating to Sesame Workshop to ensure the residents of 123 Sesame Street are still around to teach kids of all needs and backgrounds.

A 🧵 of our results below (each paper is linked):

Apple introduces TarFlow, a new Transformer-based variant of Masked Autoregressive Flows.

SOTA on likelihood estimation for images, quality and diversity comparable to diffusion models.

arxiv.org/abs/2412.06329

(E.g., where does that “log” come from? Are there other possible formulas?)

Yet there's an intuitive & almost inevitable way to arrive at this expression.

Just make your neural network weights noisy (like 🧠?) and reap the benefits of robustness to corruptions with no loss on clean data.

🌟Spotlight paper at #NeurIPS2024 led by Trung Trinh & w/ Markus Heinonen and @samikaski.bsky.social

trungtrinh44.github.io/DAMP/
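
To make "noisy weights" concrete, here is a minimal sketch of a linear layer whose weights are perturbed on every forward pass. The post does not say how the noise is applied, so the multiplicative Gaussian form, the function name `noisy_linear`, and the `noise_std` parameter are all assumptions for illustration, not the authors' implementation.

```python
import random

def noisy_linear(x, weights, bias, noise_std, rng):
    """Linear layer y = W x + b where each weight is scaled by (1 + eps),
    eps ~ N(0, noise_std^2), freshly sampled on every call.
    Hypothetical helper: the multiplicative form is an assumption."""
    out = []
    for row, b in zip(weights, bias):
        acc = b
        for w, xi in zip(row, x):
            # Perturb the weight, not the input.
            acc += w * (1.0 + rng.gauss(0.0, noise_std)) * xi
        out.append(acc)
    return out

rng = random.Random(0)
W = [[0.5, -1.0], [2.0, 0.25]]
b = [0.1, -0.2]
x = [1.0, 3.0]

clean = noisy_linear(x, W, b, noise_std=0.0, rng=rng)  # no noise: plain W x + b
noisy = noisy_linear(x, W, b, noise_std=0.1, rng=rng)  # perturbed forward pass
```

In a training loop one would presumably use the noisy pass to compute the loss and switch the noise off (`noise_std=0.0`) at evaluation time.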

📽️ Watch here: www.youtube.com/watch?v=bMXq...

Big thanks to @giffmana.ai for this excellent content! 🙌

We trained a model to play four games, and the performance in each increases by "external search" (MCTS using a learned world model) and "internal search" where the model outputs the whole plan on its own!

probnum25.github.io

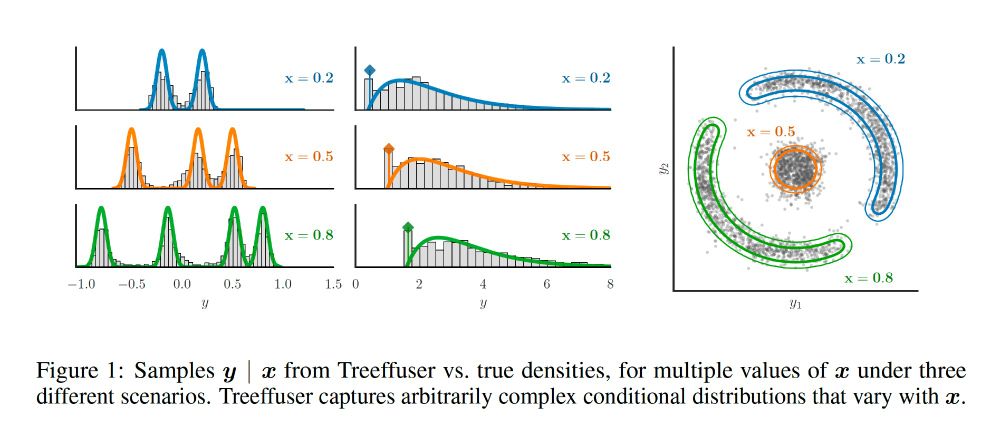

paper: arxiv.org/abs/2406.07658

repo: github.com/blei-lab/tre...

🧵(1/8)

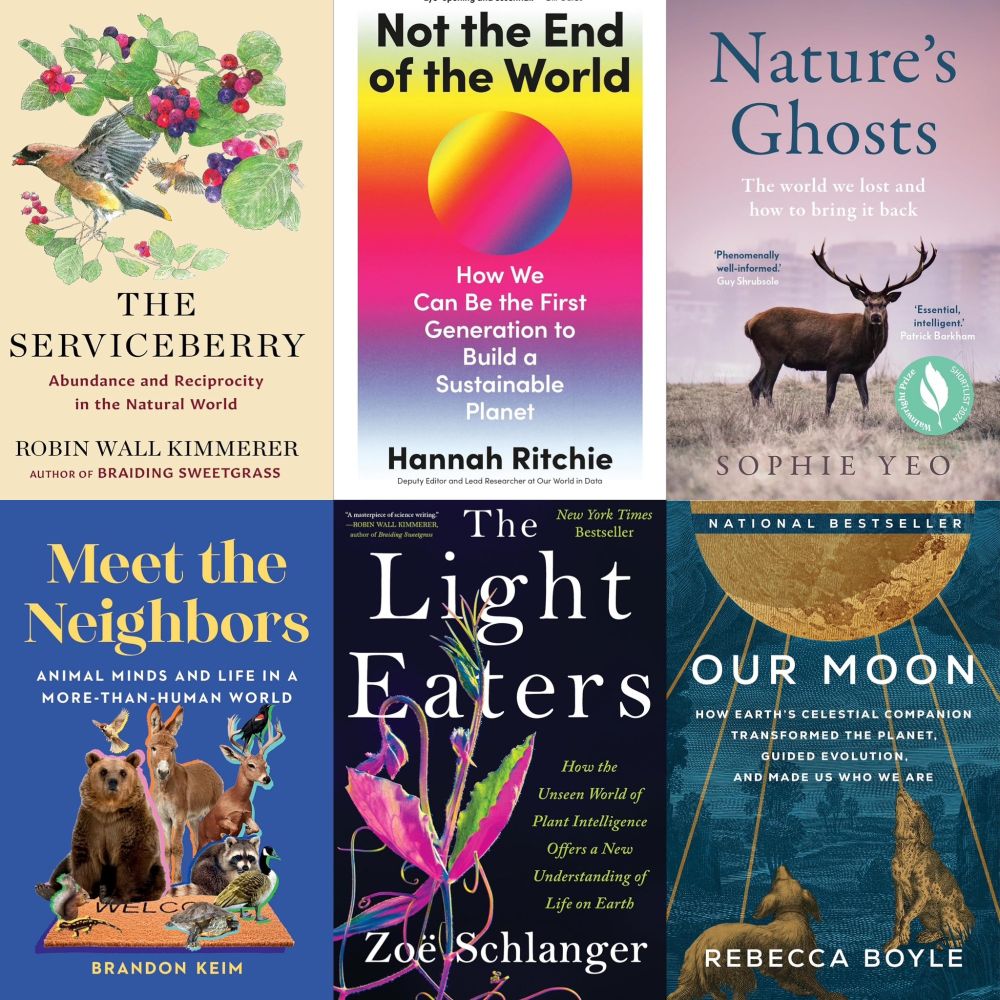

Introducing: ✨🎁📚 The 2024 Holiday Gift Guide to Nature & Science Books ✨🎁📚

Please share: Let's make this go viral in time for Black Friday / holiday shopping!

Say 𝐱 is a noisy version of 𝐮 with 𝐞 ∼ 𝒩(𝟎, σ² 𝐈)

𝐱 = 𝐮 + 𝐞

MMSE estimate of 𝐮 is 𝔼[𝐮|𝐱] & would seem to require P(𝐮|𝐱). Yet Tweedie says P(𝐱) is all you need

1/3
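
For reference, Tweedie's formula in this setting reads 𝔼[𝐮|𝐱] = 𝐱 + σ² ∇ log P(𝐱): the denoiser needs only the score of the noisy marginal. Below is a small scalar sanity check. The Gaussian prior 𝐮 ∼ 𝒩(0, τ²), the values of `tau2` and `sigma2`, and the helper names are assumptions chosen because the posterior mean is then known in closed form; they are not from the thread.

```python
import random

def gaussian_score(x, total_var):
    """d/dx log p(x) for the marginal p(x) = N(0, total_var)."""
    return -x / total_var

def tweedie_denoise(x, sigma2, score):
    """Tweedie's formula: E[u | x] = x + sigma^2 * d/dx log p(x)."""
    return x + sigma2 * score(x)

# Assumed toy setup: u ~ N(0, tau2), e ~ N(0, sigma2), x = u + e,
# so the marginal of x is N(0, tau2 + sigma2).
tau2, sigma2 = 4.0, 1.0
rng = random.Random(0)

def marginal_score(t):
    return gaussian_score(t, tau2 + sigma2)

n = 20000
se_raw = 0.0      # squared error when using x itself as the estimate of u
se_tweedie = 0.0  # squared error of the Tweedie estimate
max_gap = 0.0     # largest deviation from the closed-form posterior mean
for _ in range(n):
    u = rng.gauss(0.0, tau2 ** 0.5)
    x = u + rng.gauss(0.0, sigma2 ** 0.5)
    est = tweedie_denoise(x, sigma2, marginal_score)
    closed_form = x * tau2 / (tau2 + sigma2)  # known posterior mean for this prior
    max_gap = max(max_gap, abs(est - closed_form))
    se_raw += (x - u) ** 2
    se_tweedie += (est - u) ** 2

mse_raw = se_raw / n
mse_tweedie = se_tweedie / n
```

Here the Tweedie estimate coincides with the closed-form posterior mean, and its empirical MSE comes out below that of the raw observation. In practice the score of P(𝐱) is not known analytically and would have to be estimated.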

-NeurIPS2024 Communication Chairs

We’re looking for candidates across data science and AI, including science, health, medicine, the humanities, engineering, policy, and ethics.

Spread the word and apply!

ai.jhu.edu/postdoctoral...

These are self-contained notebooks in which you have to implement famous algorithms from the literature (k-NN, SVM, DT, etc), with a custom dataset that I (painstakingly) made!

But then I found the paper "Mechanistic?" by @nsaphra.bsky.social and @sarah-nlp.bsky.social, which clarified things.

arxiv.org/abs/2407.11072

TL;DR — An attacker can convince your favorite LLM to suggest vulnerable code with just a minor change to the prompt!

fleetwood.dev/posts/you-co...
