Newsletter: https://mindfulmodeler.substack.com/

Website: https://christophmolnar.com/

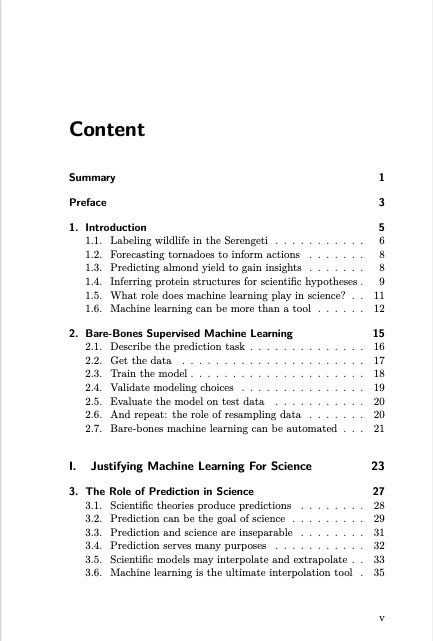

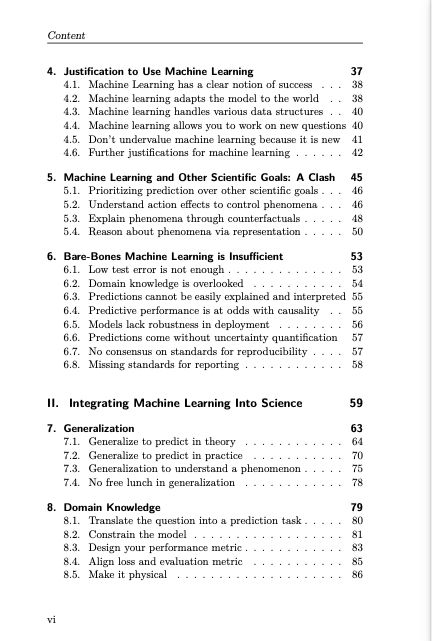

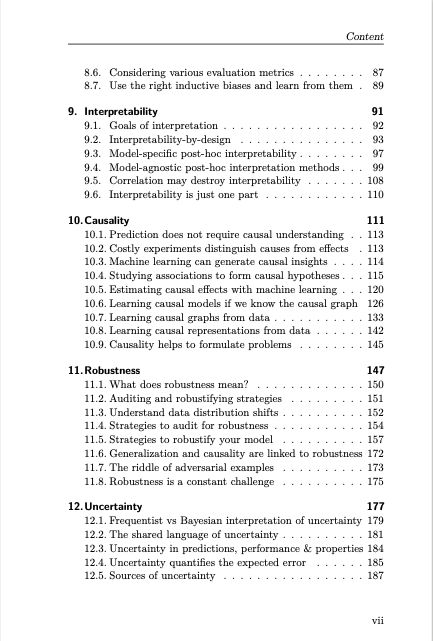

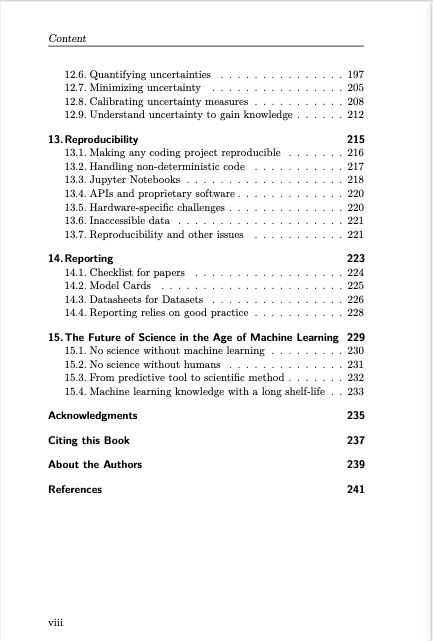

Timo and I recently published a book, and even if you are not a scientist, you'll find useful overviews of topics like causality and robustness.

The best part is that you can read it for free: ml-science-book.com

This paper might be of interest to you. Papers by @gunnark.bsky.social are always worth checking out.

Read more:

The book remains free to read for everyone. But you can also buy the ebook or paperback.

They are often discussed in research papers, but I have yet to see them being used somewhere in an actual process or product.

So sometimes it's 20 cents for saving you 20 minutes of work.

Other times it's $1 for wasting 10 minutes.

As always, it was more work than anticipated—especially moving the entire book project from Bookdown to Quarto, which took a bit of effort.

My most recent post on Mindful Modeler dives into the wisdom of the crowds and prediction markets.

Read the full story here:

Here's a summary of the journey, challenges, & key insights from my winning solution (water supply forecasting)

The paper showed that attribution methods, like LIME and LRP, compute Shapley values (with some adaptations).

The paper also introduced estimation methods for Shapley values, like KernelSHAP, which today is deprecated.

Read more here:

How I sometimes feel working on "traditional" machine learning topics instead of generative AI stuff 😂

They throw all their knowledge about how to make good predictions overboard and just claim things like "AI will replace radiologists in a few years," or announce when they expect AGI.

They are the most impressive, cherry-picked examples. That includes cherry-picking prompts and themes that produced better results.

But as a user, you want good results for every prompt/theme relevant to your use case.

Modeling Mindsets is a short read to broaden your perspective on data modeling.

christophmolnar.com/books/modeli...

*Hat not included.

• Use AI only for small and specific stuff, like grammar fixes or making suggestions for factual corrections.

• Never let an LLM change voice and tone.

• I review any changes made by AI.

Can't we have like an anti-knee arthroscopy movement or whatever instead?

While the norms and best practices are evolving, citing them seems like the wrong way to go.

(even wilder when some people add "ChatGPT" as their co-authors)

I say normalized because many univs & scholarly associations recommend it as an element of proper scholarship.

But it doesn't make sense when you consider what a citation means. 1/

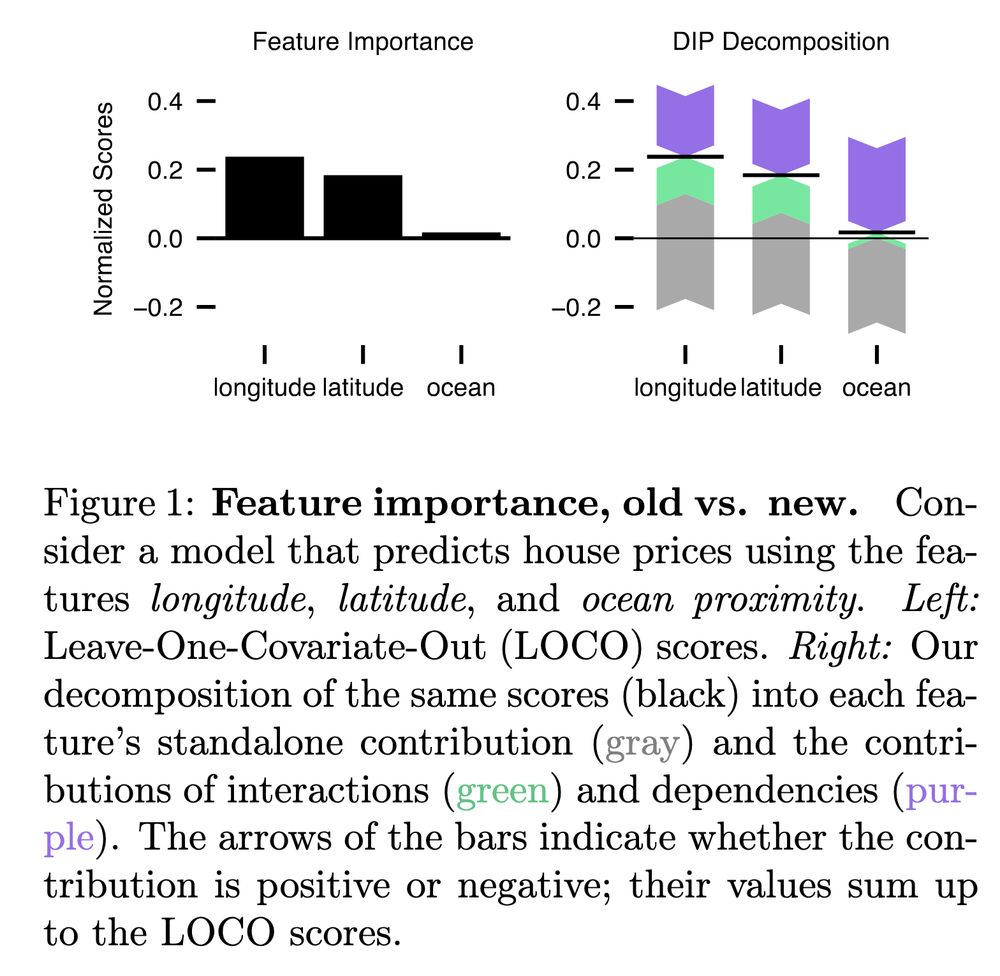

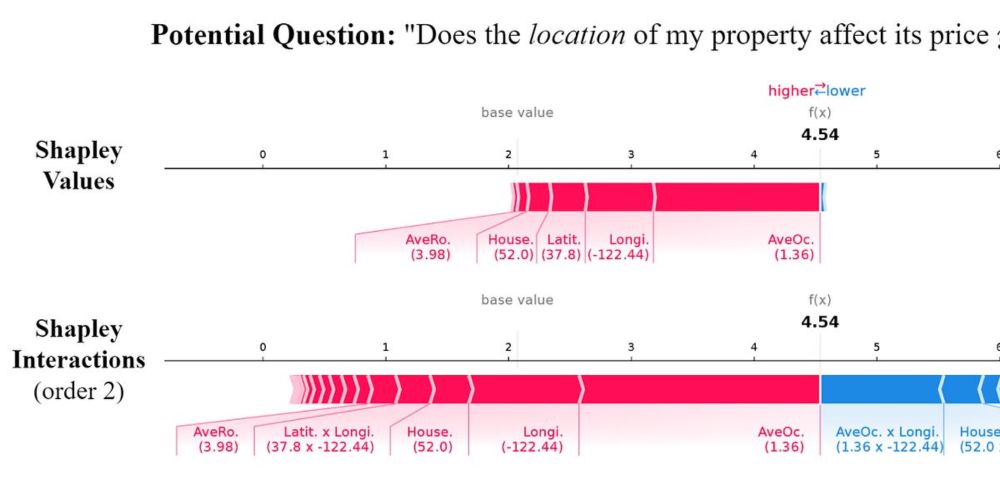

This is a guest post by Julia, Max, Fabian and Hubert on my newsletter Mindful Modeler.

I also learned a lot from this post and definitely recommend checking out the shapiq package.

mindfulmodeler.substack.com/p/what-are-s...

Excluding books that are dedicated "AI experiments" and where the book is more about the experiment.

Also excluding AI-assisted books where generative AI played a minor role.

pandas.DataFrame.rolling
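
For anyone who also has to look this one up every time, here is a minimal sketch of what `DataFrame.rolling` does. The `flow` column, the dates, and the values are made up for illustration and are not taken from any of the projects mentioned in this feed.

```python
import pandas as pd

# Hypothetical daily measurements; column name and values are invented.
df = pd.DataFrame(
    {"flow": [10.0, 12.0, 11.0, 15.0, 14.0]},
    index=pd.date_range("2024-01-01", periods=5, freq="D"),
)

# 3-row rolling mean: the first two rows are NaN because the window is not full yet.
rolling_mean = df.rolling(window=3).mean()

# min_periods=1 returns a value as soon as one observation is in the window.
rolling_mean_partial = df.rolling(window=3, min_periods=1).mean()

# Show both variants side by side.
print(rolling_mean.join(rolling_mean_partial, rsuffix="_partial"))
```

The window here counts rows; whether a full window or a partial one (`min_periods`) is the better choice depends on how you want to treat the start of the series.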

(and yes, I'm reading my own book here as a reference for another project, feeling like an imposter because I don't have everything memorized 😂)
