Previously at AI2, Harvard

mattf1n.github.io

📄 arxiv.org/abs/2510.14086 1/

LLM architect: the uhh model’s outputs lie on a high-dimensional ellipse

Gordon: on a high-dimensional ellipse?

Architect: correct, a high-dimensional ellipse

Gordon: fuck me. Ok thank you darling

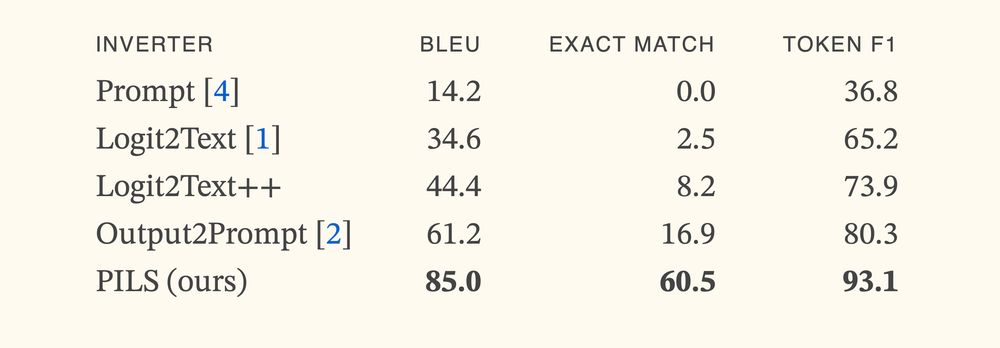

We trained a prompt stealing model that gets >3x SoTA accuracy.

The secret is representing LLM outputs *correctly*

🚲 Demo/blog: mattf1n.github.io/pils

📄: arxiv.org/abs/2506.17090

🤖: huggingface.co/dill-lab/pi...

🧑‍💻: github.com/dill-lab/PILS

Forced backronyms like this are counterproductive.

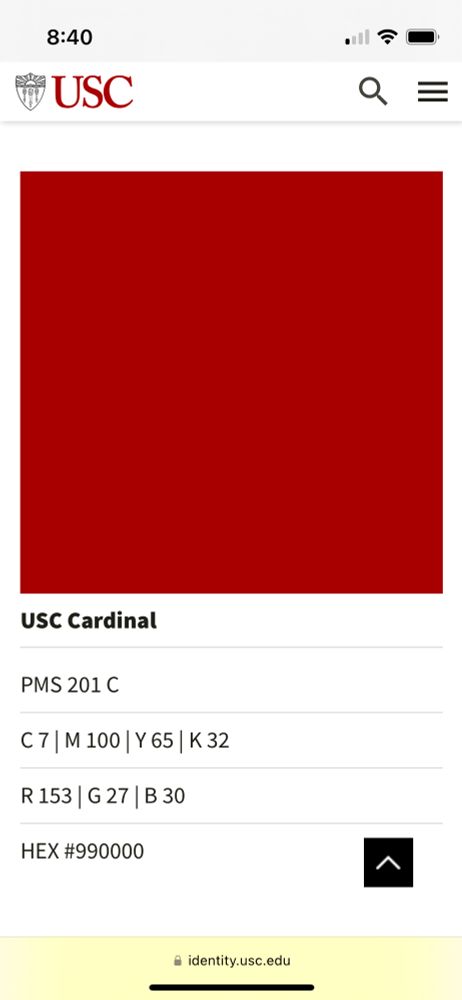

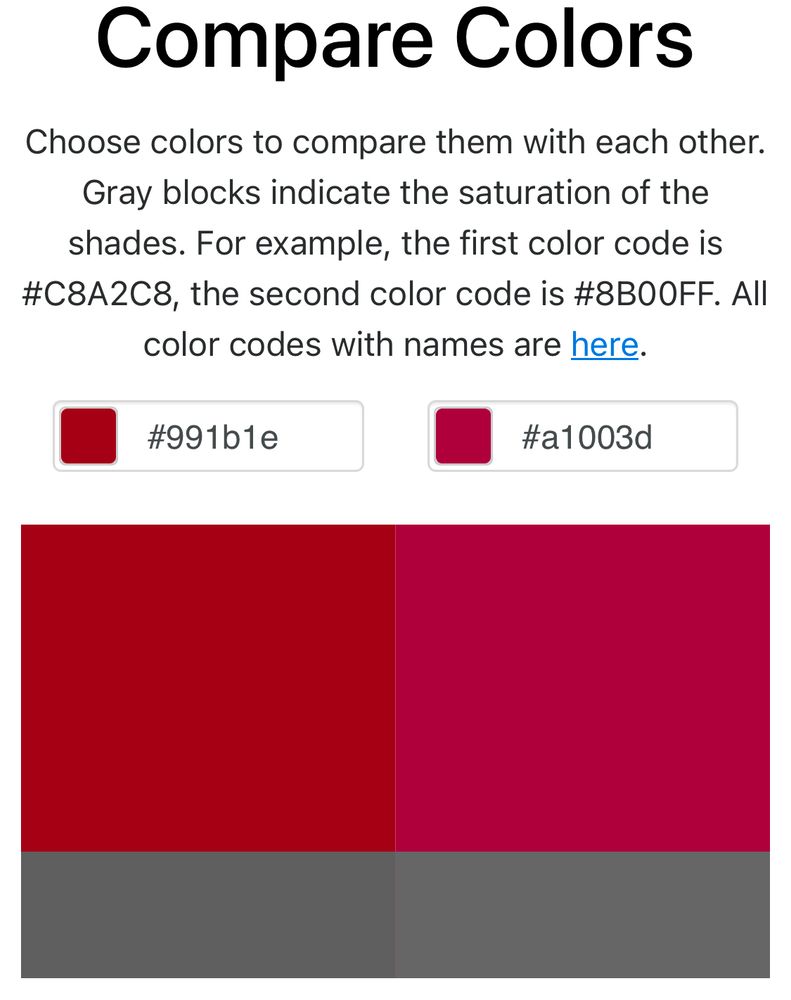

PMS 201 C is #9D2235

CMYK: 7, 100, 65, 32 is #A1003D

RGB: 153, 27, 30 is #991B1E

HEX: #990000

Is this normal? The CMYK is especially egregious.
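For anyone who wants to check the arithmetic, here is a minimal sketch. It assumes the naive, profile-free CMYK formula (each channel is 255 × (1 − ink) × (1 − K)); real print workflows go through ICC color profiles, so this is only one plausible way the hex values could have been derived. The function names are made up for illustration.

```python
def hex_to_rgb(hex_code):
    """Split a #RRGGBB string into three 0..255 integers."""
    h = hex_code.lstrip("#")
    return tuple(int(h[i:i + 2], 16) for i in (0, 2, 4))


def rgb_to_hex(r, g, b):
    """Format three 0..255 integers as an uppercase #RRGGBB string."""
    return "#{:02X}{:02X}{:02X}".format(r, g, b)


def cmyk_to_rgb(c, m, y, k):
    """Naive CMYK -> RGB with inks given as percentages (0..100).

    Assumption: no color profile, just channel = 255 * (1 - ink) * (1 - K).
    """
    def channel(ink):
        return round(255 * (1 - ink / 100) * (1 - k / 100))

    return channel(c), channel(m), channel(y)


print(hex_to_rgb("#991B1E"))                     # (153, 27, 30)
print(hex_to_rgb("#990000"))                     # (153, 0, 0)
print(rgb_to_hex(*cmyk_to_rgb(7, 100, 65, 32)))  # #A1003D
```

Under that naive formula the CMYK spec lands on #A1003D, well away from #990000.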

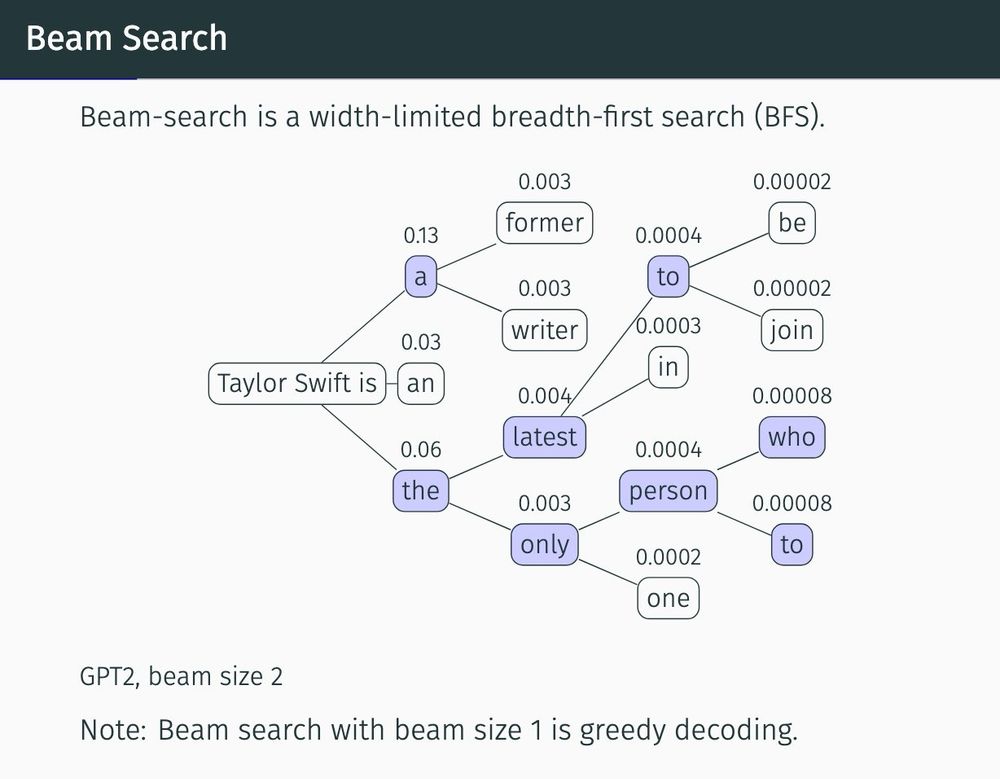

You can still spend the day going through our tutorial reading list:

cmu-l3.github.io/neurips2024-...

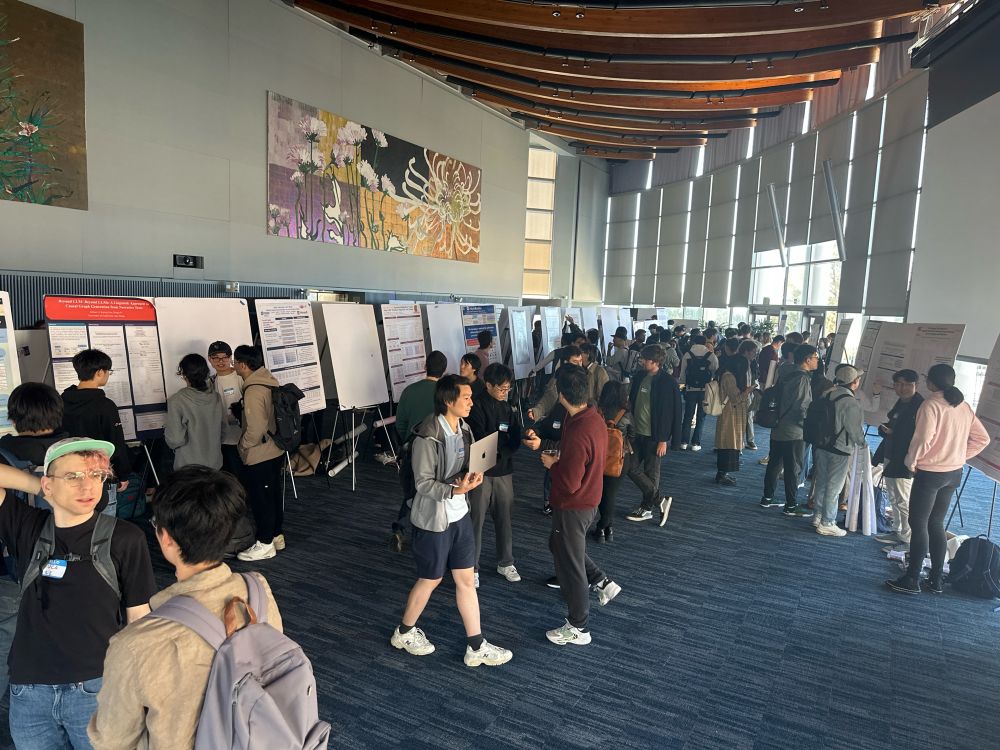

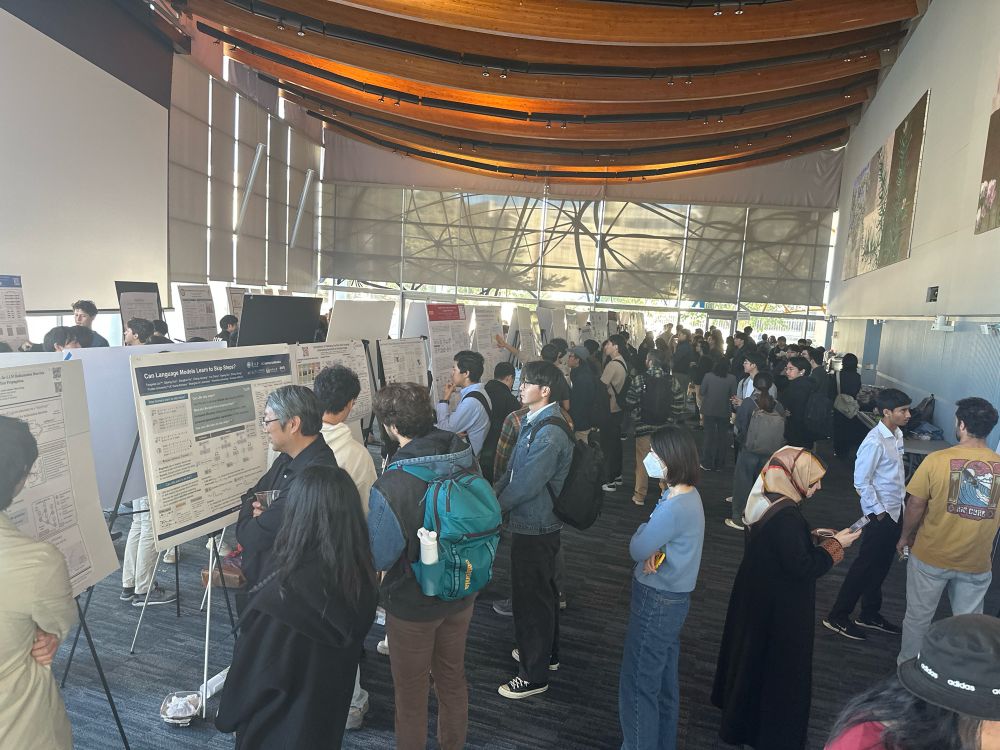

Tuesday December 10, 1:30-4:00pm @ West Exhibition Hall C, NeurIPS

Our website: cmu-l3.github.io/neurips2024-...

Happy to have helped a bit with this release (Tulu 3 recipe used here)! OLMo-2 13B actually beats Tulu 3 8B on these evals, making it a SOTA fully open LM!!!

(*on the benchmarks we looked at, see tweet for more)

405B is being planned 🤞🤡

simons.berkeley.edu/talks/sean-w...

It was a sneak-preview subset of our NeurIPS tutorial:

cmu-l3.github.io/neurips2024-...

- X of thought

- Chain of Y

- "[topic] a comprehensive survey" where [topic] is exclusively post-2022 papers

- Claims of reasoning/world model/planning based on private defns of one

arxiv.org/abs/2408.11443

The idea is that stochastic variants of BPE/MaxMatch produce very biased tokenization distributions, which is probably bad for modeling.

#NLP
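A toy illustration of what "biased" can look like, not the paper's code. It assumes a MaxMatch-dropout-style sampler: at each position, try vocabulary matches longest first, drop each with probability `p`, and always accept the shortest one. The vocabulary, the word, and the function name are all made up for the example, and every single character is assumed to be in the vocabulary.

```python
from fractions import Fraction


def maxmatch_dropout_dist(word, vocab, p):
    """Exact probability of every segmentation under the toy sampler."""
    dist = {}

    def walk(pos, tokens, prob):
        if pos == len(word):
            key = tuple(tokens)
            dist[key] = dist.get(key, 0) + prob
            return
        # Vocabulary matches starting at pos, longest first.
        cands = [word[pos:end] for end in range(len(word), pos, -1)
                 if word[pos:end] in vocab]
        skipped = Fraction(1)  # probability that all longer matches were dropped
        for i, tok in enumerate(cands):
            is_last = i == len(cands) - 1
            accept = 1 if is_last else 1 - p  # shortest match is never dropped
            walk(pos + len(tok), tokens + [tok], prob * skipped * accept)
            skipped *= p

    walk(0, [], Fraction(1))
    return dist


vocab = {"a", "b", "c", "ab", "bc", "abc"}
for seg, prob in maxmatch_dropout_dist("abc", vocab, Fraction(1, 2)).items():
    print(seg, prob)
```

With `p = 1/2` the four possible segmentations of "abc" get probabilities 1/2, 1/4, 1/8 and 1/8, while a uniform sampler would give each 1/4.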
