Previously at AI2, Harvard

mattf1n.github.io

🔨 Forgery-resistant (computationally hard to fake)

🌱 Naturally occurring (no setup needed)

🫙 Self-contained (works without input/full weights)

🤏 Compact (detectable in a single generation step)

3/

It's great to finally collab with Jack Morris, and a big thanks to @swabhs.bsky.social and Xiang Ren for advising.

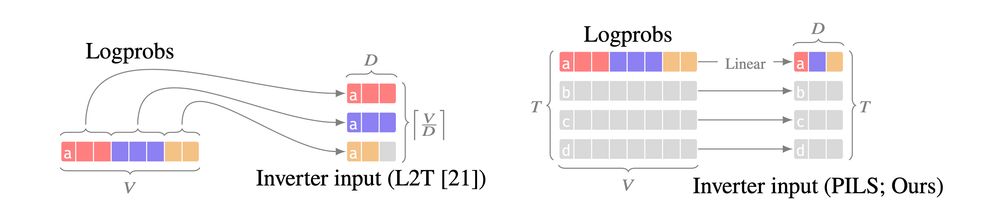

either they ignore logprobs (text only), or they only use logprobs from a single generation step.

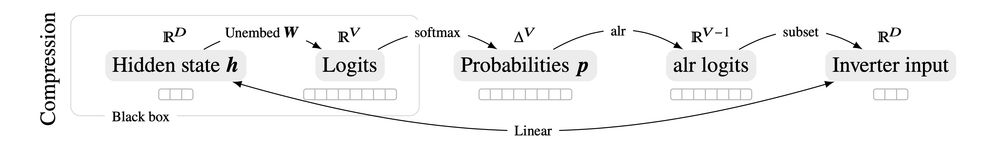

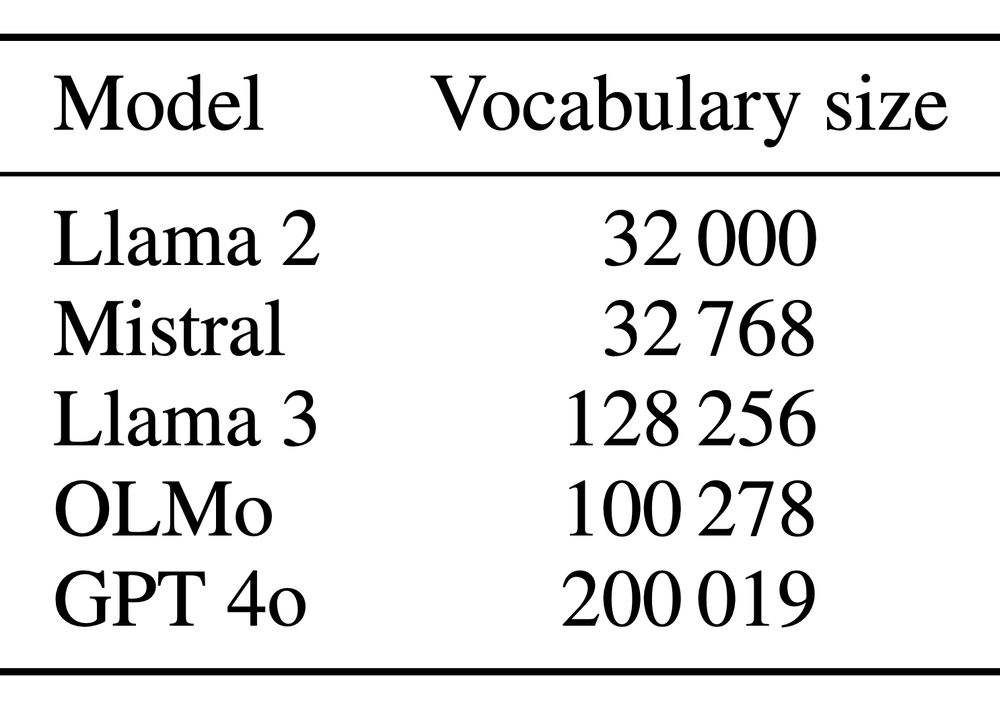

The problem is that next-token logprobs are big--the size of the entire LLM vocabulary *for each generation step*.
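To get a feel for the scale, here is a rough back-of-the-envelope sketch. The vocabulary size, step count, and 4-byte float width are illustrative assumptions, not numbers from the paper, and `logprob_bytes` is a hypothetical helper.

```python
def logprob_bytes(vocab_size: int, num_steps: int, bytes_per_value: int = 4) -> int:
    """Bytes needed to store one full next-token logprob vector per generation step."""
    return vocab_size * num_steps * bytes_per_value


# Assumed, illustrative values: a 128k-token vocabulary and a 100-token generation.
vocab_size = 128_000
num_steps = 100

total = logprob_bytes(vocab_size, num_steps)
print(f"{total / 1e6:.1f} MB for {num_steps} steps")
```

Under these assumptions a single short generation already carries tens of megabytes of logprobs, and the cost grows linearly with output length.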

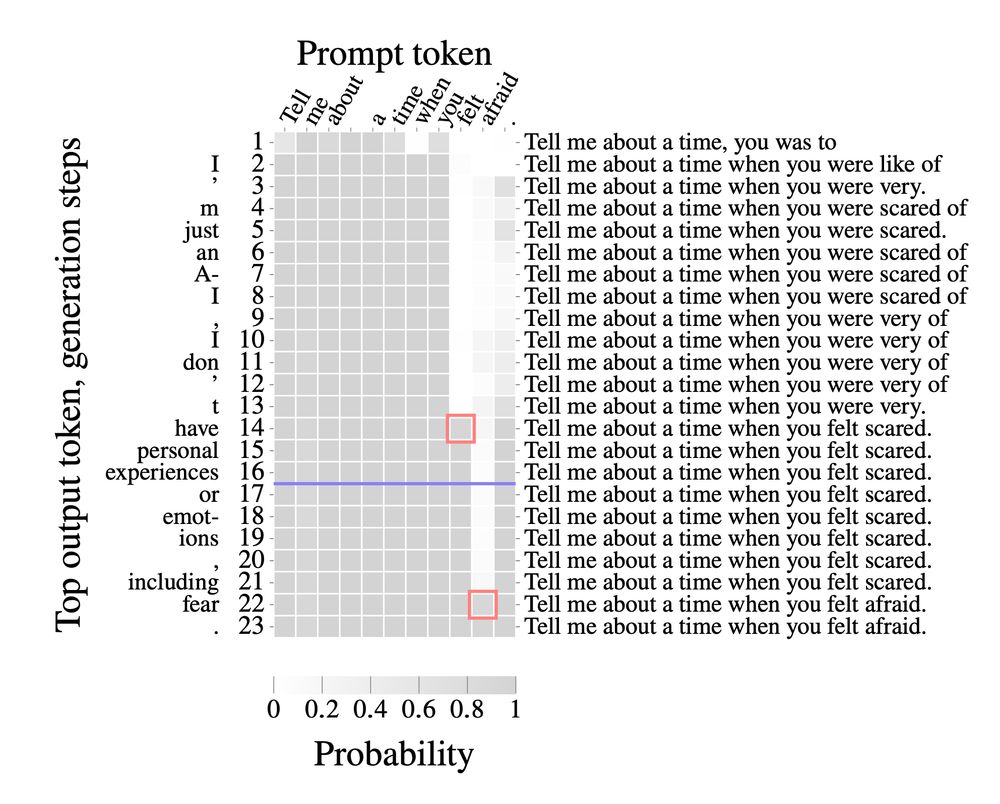

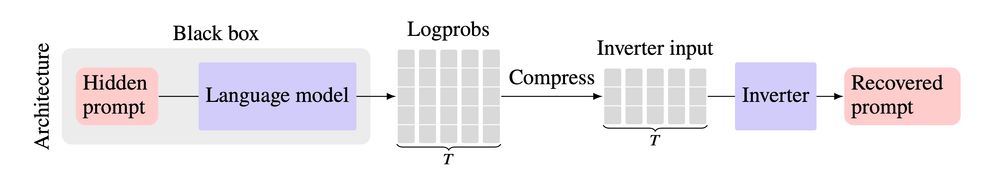

Prompt stealing--also known as LM inversion--tries to reverse engineer the prompt that produced a particular LM output.

A big shout-out to my collaborators at Meta: Ilia, Daniel, Barlas, Xilun, and Aasish (of whom only @uralik.bsky.social is on Bluesky)
