phd @ mila/udem, prev. @ uwaterloo

averyryoo.github.io 🇨🇦🇰🇷

How do we build neural decoders that are:

⚡️ fast enough for real-time use

🎯 accurate across diverse tasks

🌍 generalizable to new sessions, subjects, and even species?

We present POSSM, a hybrid SSM architecture that optimizes for all three of these axes!

🧵1/7

🧠🤖

We propose a theory of how learning curriculum affects generalization through neural population dimensionality. The learning curriculum is a determining factor of neural dimensionality: where you start determines where you end up.

🧠📈

A 🧵:

tinyurl.com/yr8tawj3

shop.elsevier.com/books/neural...

Step 2: Directly target underlying mechanism.

Step 3: Improve LLMs independent of scale. Profit.

In our ACL 2025 paper we look at Step 1 in terms of training dynamics.

Project: mirandrom.github.io/zsl

Paper: arxiv.org/pdf/2506.05447

Introducing Self-Refining Training for Amortized DFT: a variational method that predicts ground-state solutions across geometries and generates its own training data!

📜 arxiv.org/abs/2506.01225

💻 github.com/majhas/self-...

Multi-agent reinforcement learning (MARL) often assumes that agents know when other agents cooperate with them. But for humans, this isn’t always the case. For example, Plains Indigenous groups used to leave resources for others to use at effigies called Manitokan.

1/8

@amiithinks.bsky.social!

My research group is recruiting MSc and PhD students at the University of Alberta in Canada. Research topics include generative modeling, representation learning, interpretability, inverse problems, and neuroAI.

Excited to share our #ICLR2025 Spotlight paper introducing POYO+ 🧠

poyo-plus.github.io

🧵

We're hiring a full-time machine learning specialist for this work.

Please share widely!

#NeuroAI 🧠📈 🧪

Join the IVADO Research Regroupement - AI and Neuroscience (R1) to develop foundational models in the field of neuroscience.

More info: ivado.ca/2025/04/08/s...

#JobOffer #AI #Neuroscience #Research #MachineLearning

@cosynemeeting.bsky.social

#COSYNE2025 workshop on “Agent-Based Models in Neuroscience: Complex Planning, Embodiment, and Beyond” are now online: neuro-agent-models.github.io

🧠🤖

neurofm-workshop.github.io

#Cosyne2025 @cosynemeeting.bsky.social

🧠🤖 🧠📈

1/3

But, we can still make this an awesome meeting as usual, y'all. Let's pull together and make it happen!

🧠📈

#Cosyne2025

We also made a starter pack with (most of) our speakers: go.bsky.app/Ss6RaEF

🚀 Introducing SuperDiff 🦹♀️ – a principled method for efficiently combining multiple pre-trained diffusion models solely during inference!

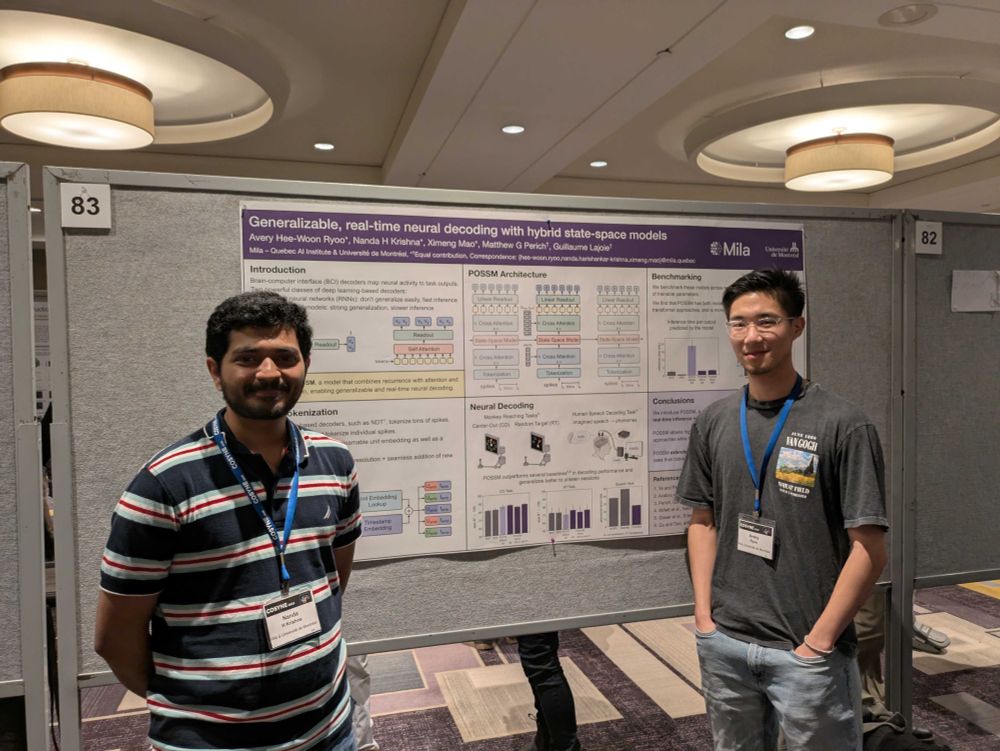

I'll be at Cosyne 2025 (@cosynemeeting.bsky.social) to present our work on generalizable real-time decoding for BCIs 🧠🦾

Really looking forward to seeing everyone in Montréal 🇨🇦! Stay tuned for more details in the new year🤘

drive.google.com/file/d/1eLa3...
