You'll be working on Multimodal Diffusions for science. Apply here: google.com/about/career...

📣Call for: Full workshop papers (5-8 pages) and Tiny papers (2-4 pages)

📅Submission deadline: 7 February 2026 AoE

🌐Learn more: genai-in-genomics.github.io

(1/7)

arxiv.org/abs/2511.11497

'A Recursive Theory of Variational State Estimation: The Dynamic Programming Approach'

- Filip Tronarp

arxiv.org/abs/2510.07559

The main problem we solve in it is to construct importance weights for Markov chain Monte Carlo. We achieve it via a method we call harmonization by coupling.

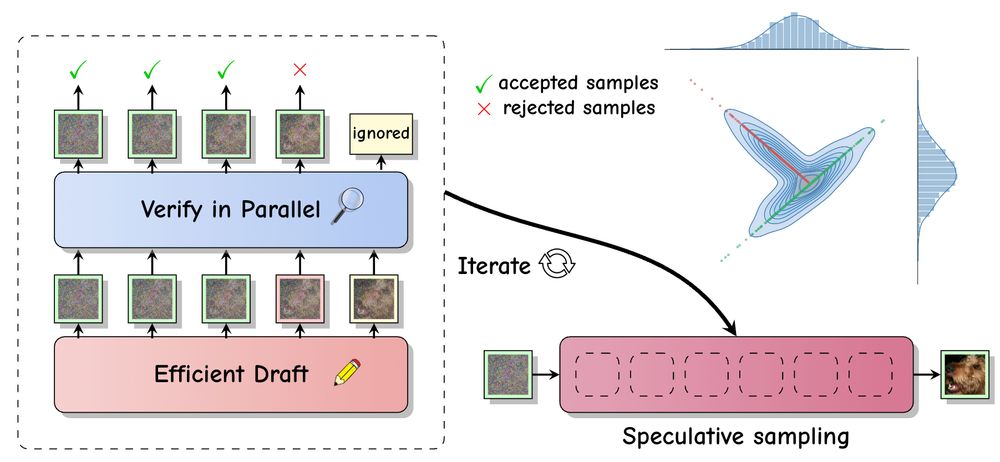

We speed up sampling of masked diffusion models by ~2x by using speculative sampling and a hybrid non-causal / causal transformer

arxiv.org/abs/2510.03929

w/ @vdebortoli.bsky.social, Jiaxin Shi, @arnauddoucet.bsky.social

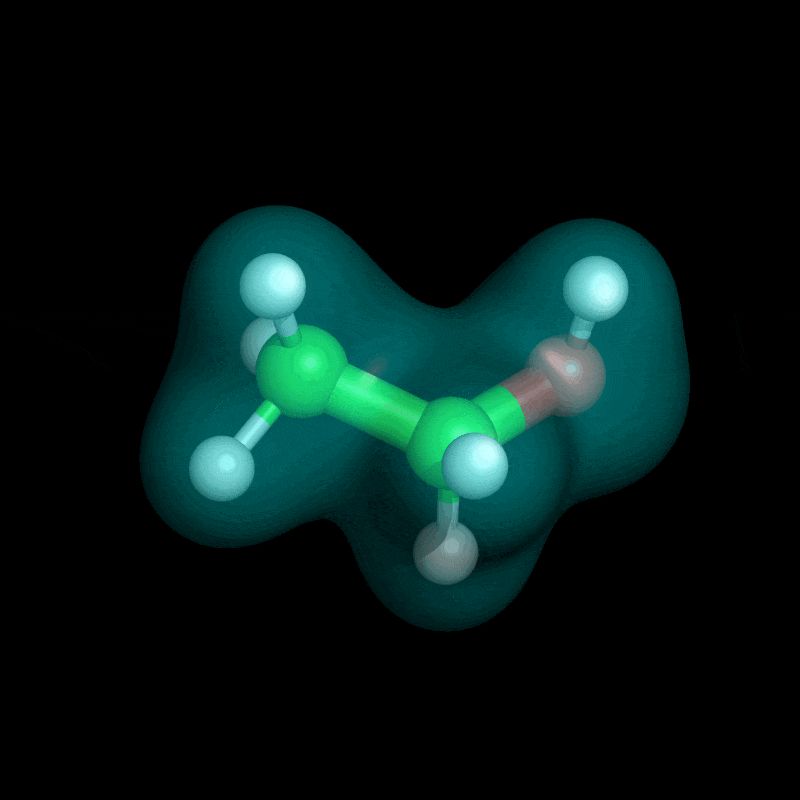

Introducing Self-Refining Training for Amortized DFT: a variational method that predicts ground-state solutions across geometries and generates its own training data!

📜 arxiv.org/abs/2506.01225

💻 github.com/majhas/self-...

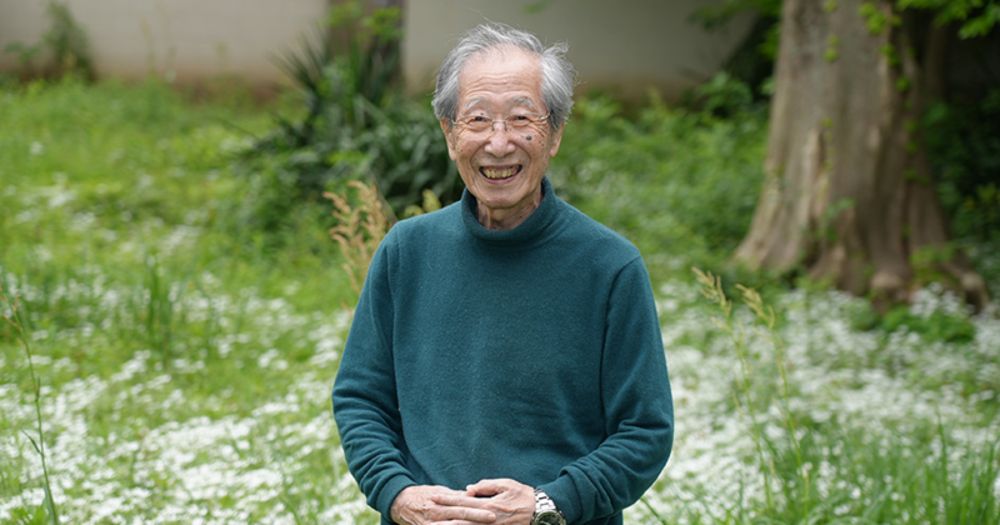

www.riken.jp/pr/news/2025...

👥Mentor-led projects, expert talks, tutorials, socials, and a networking night

✍️Application form: logml.ai

🔬Projects: www.logml.ai/projects.html

📅Apply by 6th April 2025

✉️Questions? logml.committee@gmail.com

#MachineLearning #SummerSchool #LOGML #Geometry

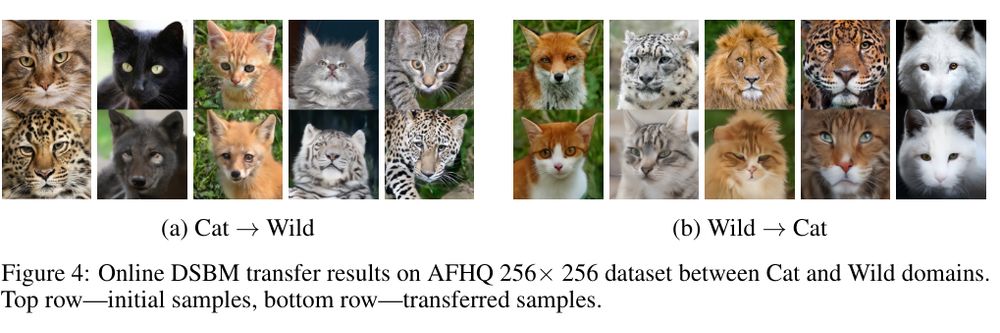

- Spotlight at #ICLR2025!🥳

- Stable Diffusion XL pipeline on HuggingFace huggingface.co/superdiff/su... made by Viktor Ohanesian

- New results for molecules in the camera-ready arxiv.org/abs/2412.17762

Let's celebrate with a prompt guessing game in the thread👇

academic.oup.com/brain/articl...

This week, with the agreement of the publisher, I uploaded the published version on arXiv.

Fewer typos, more references, and additional sections, including PAC-Bayes Bernstein.

arxiv.org/abs/2110.11216

Fewer, larger denoising steps using distributional losses; learn the posterior distribution of clean samples given the noisy versions.

arxiv.org/pdf/2502.02483

@vdebortoli.bsky.social, Galashov, Guntupalli, Zhou, @sirbayes.bsky.social, @arnauddoucet.bsky.social

🔗 website: sites.google.com/view/fpiwork...

🔥 Call for papers: sites.google.com/view/fpiwork...

more details in thread below👇 🧵

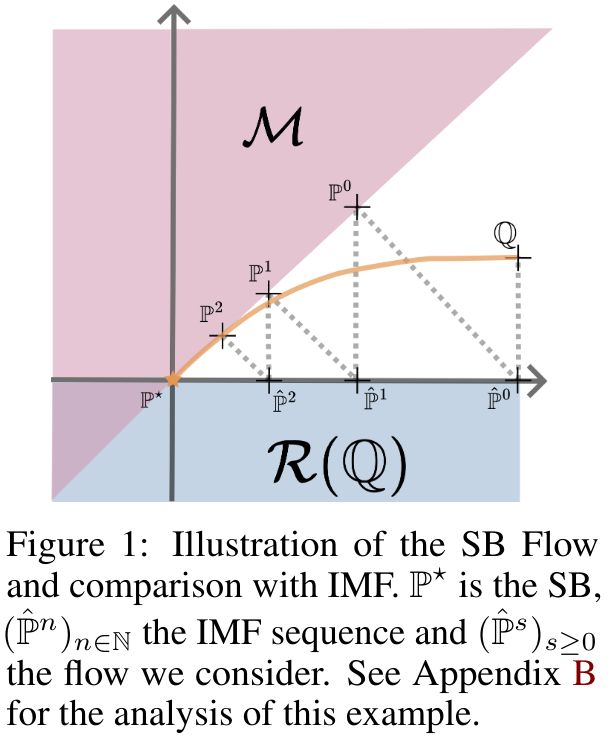

It will take me some time to digest this article fully, but it's important to follow the authors' advice and read the appendices, as the examples are helpful and well-illustrated.

📄 arxiv.org/abs/2409.09347

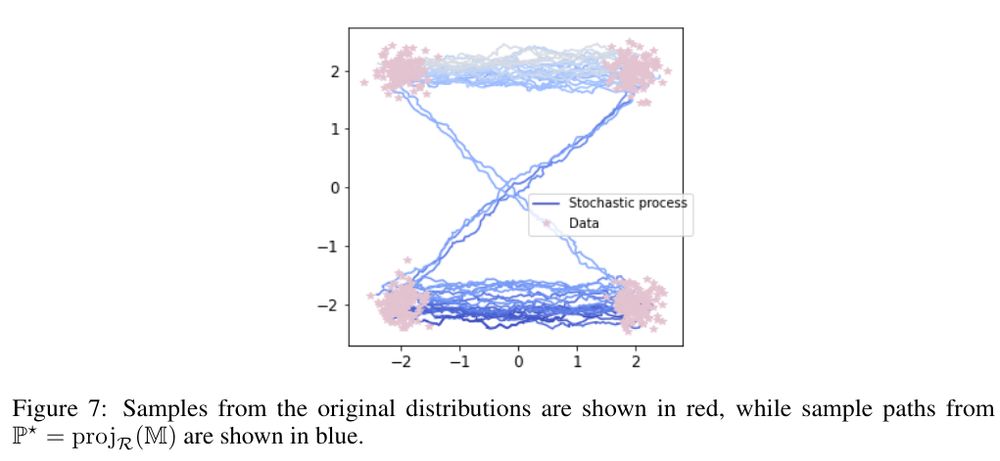

drive.google.com/file/d/1eLa3...

alexxthiery.github.io/jobs/2024_di...

for fans of: mean estimation, online learning with log loss, optimal portfolios, hypothesis testing with E-values, etc.

dig in:

arxiv.org/abs/2412.02640
