Wanna meet and chat? Book a meeting here: https://zcal.co/shivam-raval

📝 Blog post: www.anthropic.com/research/tra...

🧪 "Biology" paper: transformer-circuits.pub/2025/attribu...

⚙️ Methods paper: transformer-circuits.pub/2025/attribu...

Featuring basic multi-step reasoning, planning, introspection and more!

One discusses a review ethics violation from last year. ieeevis.org/blog/vis-202...

The other describes ongoing efforts to revise the organizational structure of VIS. ieeevis.org/blog/vis-202...

See the ARBOR discussion board for a thread for each project underway.

github.com/ArborProjec...

github.com/ARBORproject...

(ARBOR = Analysis of Reasoning Behavior through Open Research)

dsthoughts.baulab.info

I'd be interested in your thoughts.

Also updated the Tailwind CSS color palette cheat sheet 👀 and added a button to switch between the old v3 and new v4 Tailwind color palettes.

#buildinpublic

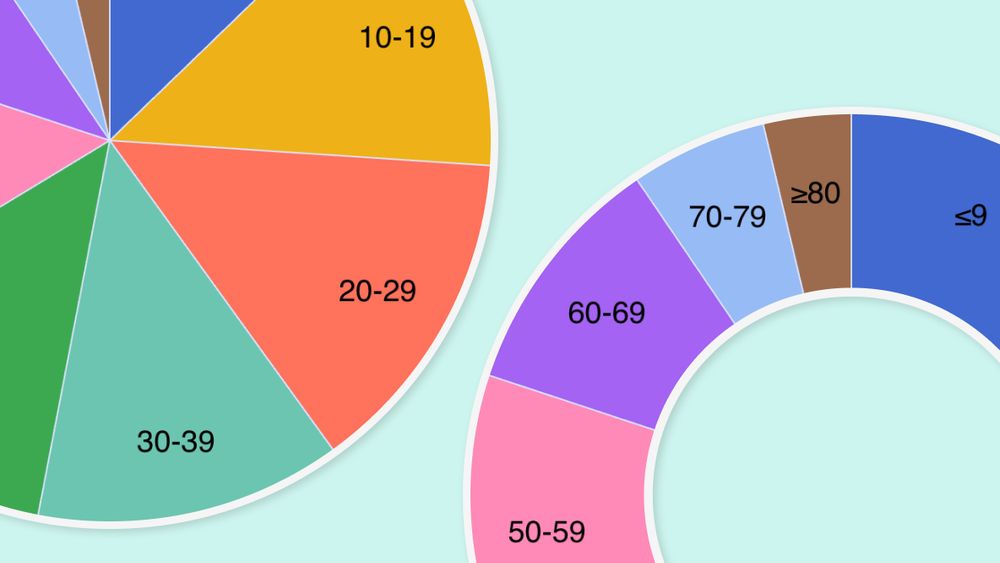

Readings are a mix of NLP, CSS-y, and ML work on how machines (with a focus on LLMs) "behave" within sociotechnical systems and on how they can be used to study human behavior.

Syllabus: manoelhortaribeiro.github.io/teaching/spr...

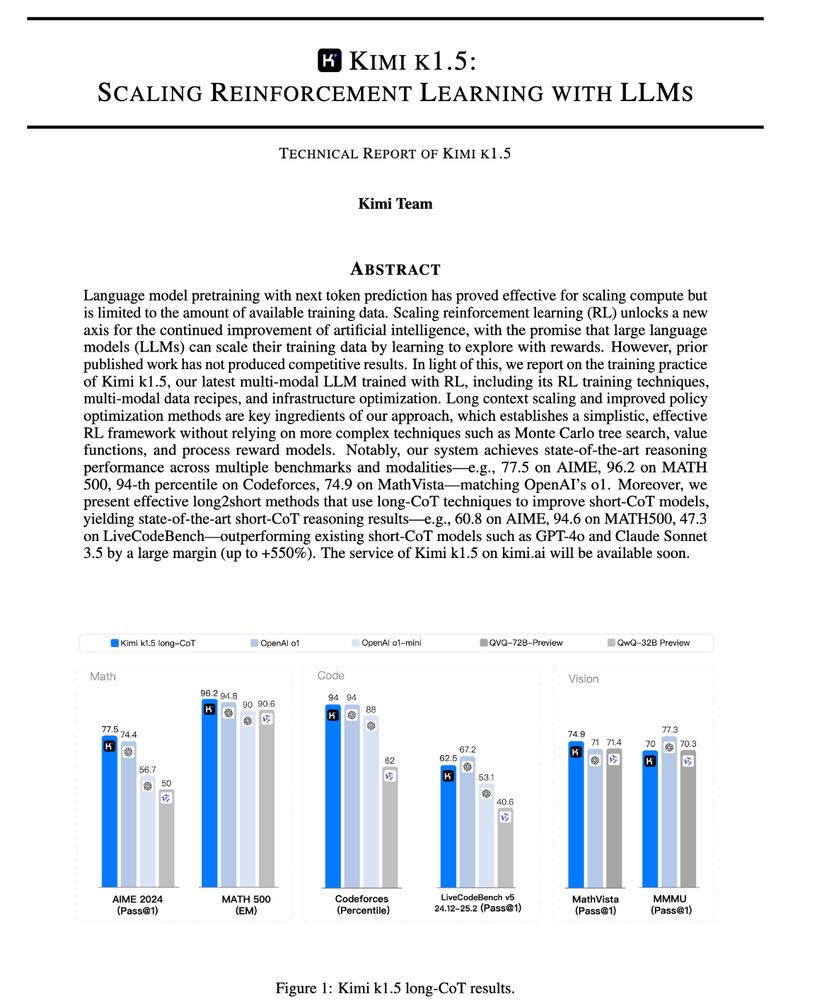

kimi 1.5 report: https://buff.ly/4jqgCOa

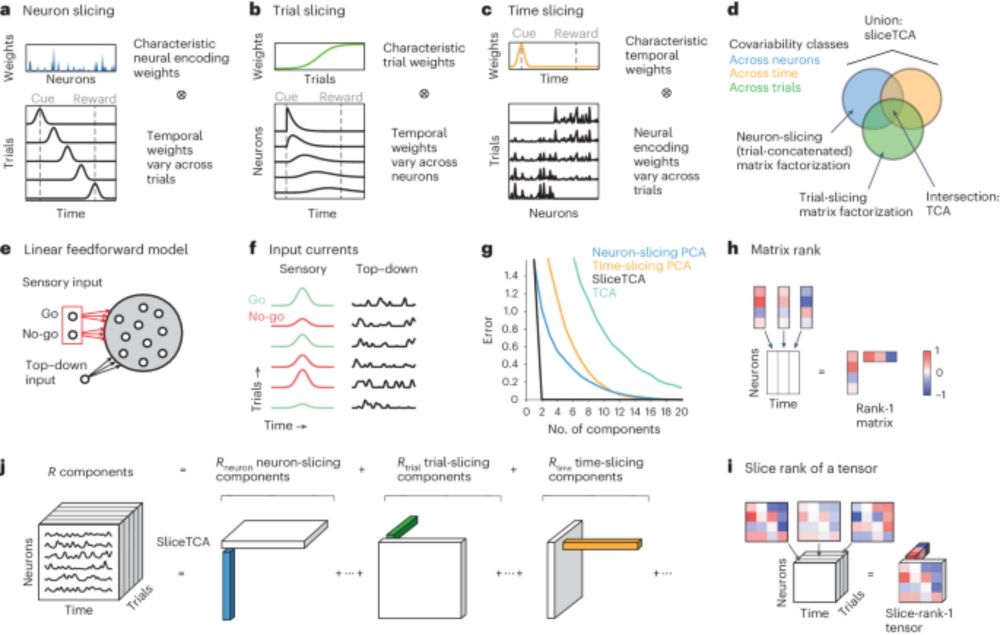

www.nature.com/articles/s41...

It wasn't certain whether it would survive its closest approach to the sun on January 13th, but it did and delivered a spectacular show!

#comet #C2024G3 🔭

huggingface.co/blog/ethics-...

by @alanjeffares.bsky.social @aliciacurth.bsky.social

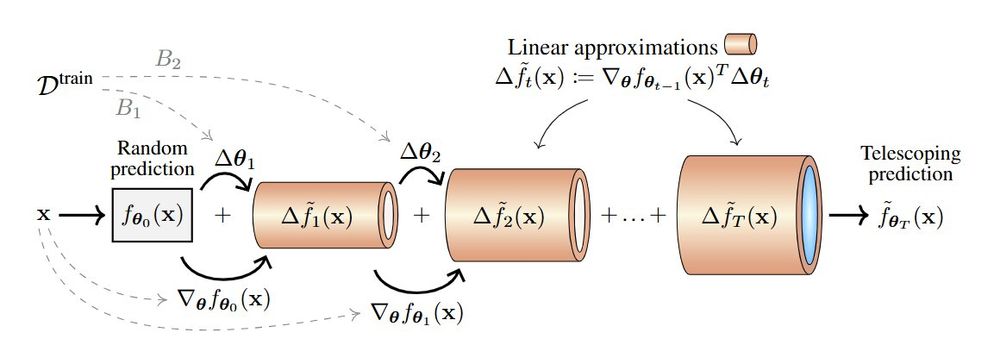

Shows that tracking 1st-order approximations to the training dynamics provides insights into many phenomena (e.g., double descent, grokking).

arxiv.org/abs/2411.00247

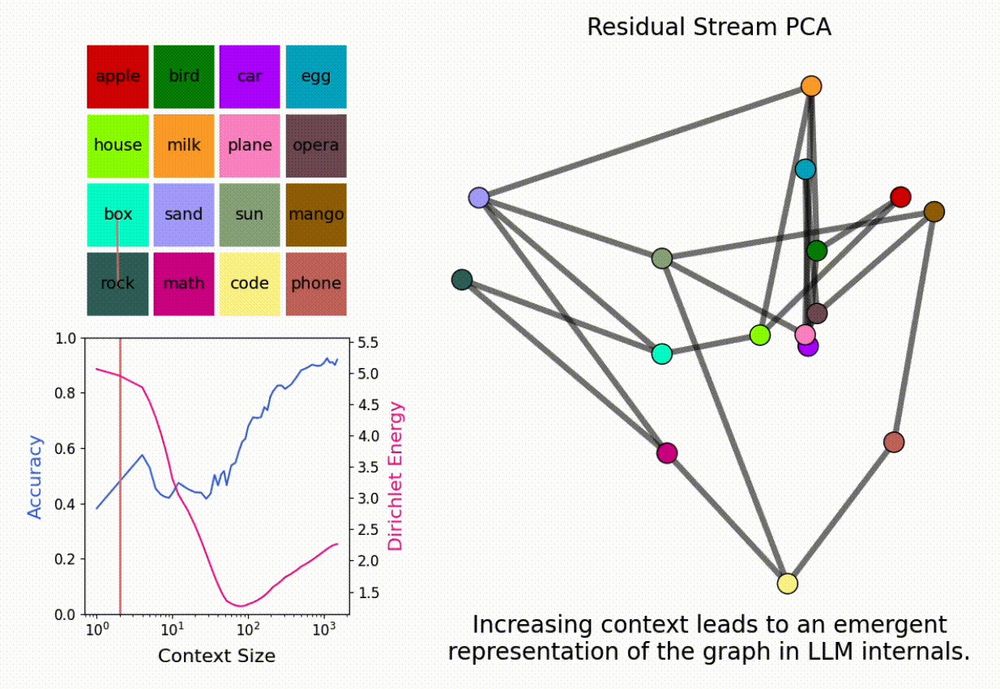

Interested in inference-time scaling? In-context Learning? Mech Interp?

LMs can solve novel in-context tasks given sufficient examples (longer contexts). Why? Because they dynamically form *in-context representations*!

1/N

Plus it's got a cute furry mascot! 😘

bsky.app/profile/did:...

#ResearchIntegrity

#PredatoryPublisher

#PredatoryPublishing

#EditorialIndependence

#SciRetraction

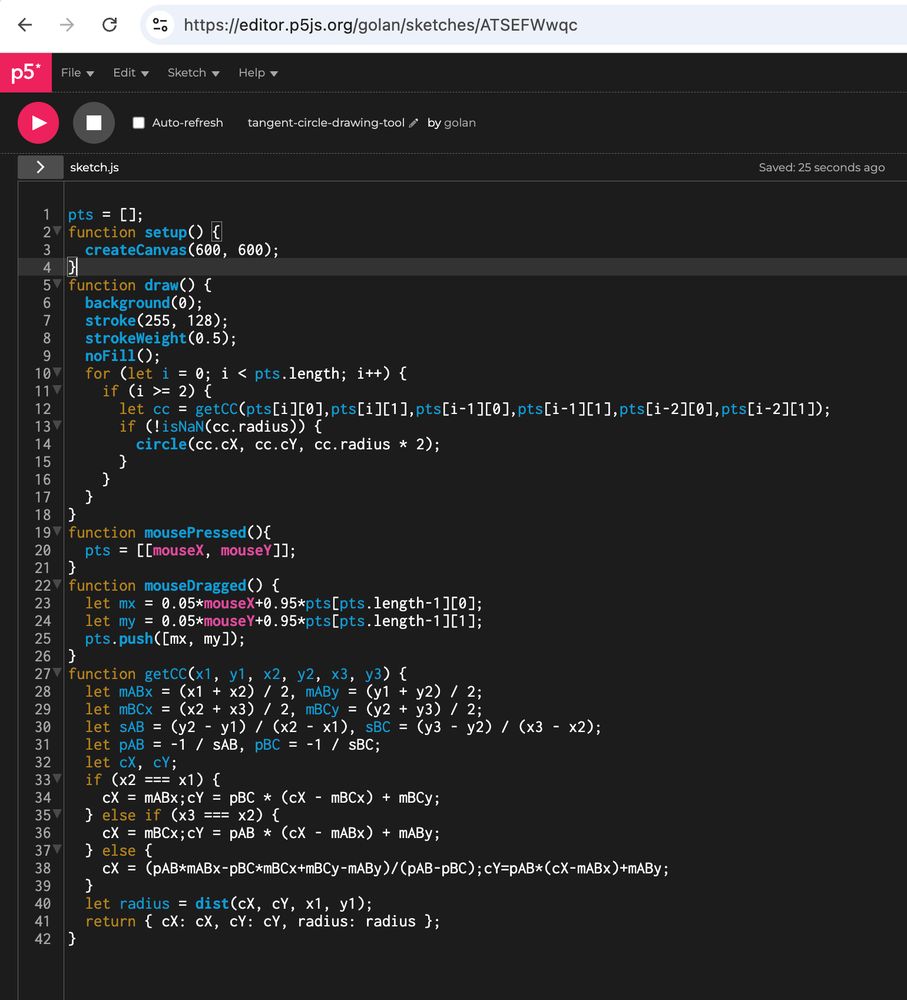

#genuary #genuary2025 #genuary3