Ass. Prof. Sapienza (Rome) | Author: Alice in a differentiable wonderland (https://www.sscardapane.it/alice-book/)

And a special thanks to @edoardo-ponti.bsky.social for the academic visit that made this work possible!

Kudos to the team 👏

Antonio A. Gargiulo, @mariasofiab.bsky.social, @sscardapane.bsky.social, Fabrizio Silvestri, Emanuele Rodolà.

1. Perform a low-rank approximation of layer-wise task vectors.

2. Minimize task interference by orthogonalizing inter-task singular vectors.

🧵(1/6)
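
The two steps above can be sketched for a single layer with NumPy. This is only an illustration under assumptions, not the released code: the helper names `orthogonalize` and `merge_layer`, the rank `k`, and the choice of the polar factor (computed via SVD) to orthogonalize the concatenated singular vectors are all picked for this example.

```python
import numpy as np

def orthogonalize(M):
    # Closest matrix with orthonormal columns (polar factor), computed via SVD.
    # Assumes M has at least as many rows as columns.
    P, _, Qt = np.linalg.svd(M, full_matrices=False)
    return P @ Qt

def merge_layer(pretrained, finetuned, k):
    """Hypothetical helper: merge one layer from several fine-tuned models."""
    Us, Ss, Vs = [], [], []
    for W in finetuned:
        delta = W - pretrained  # layer-wise task vector
        U, S, Vt = np.linalg.svd(delta, full_matrices=False)
        # Step 1: keep only the top-k singular components of each task.
        Us.append(U[:, :k])
        Ss.append(S[:k])
        Vs.append(Vt[:k, :].T)
    # Step 2: orthogonalize singular vectors across tasks to reduce interference.
    U = orthogonalize(np.concatenate(Us, axis=1))
    V = orthogonalize(np.concatenate(Vs, axis=1))
    S = np.concatenate(Ss)
    return pretrained + U @ np.diag(S) @ V.T
```

With a single task and full rank the merge reduces to the fine-tuned weights, which is a quick sanity check for the sketch.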

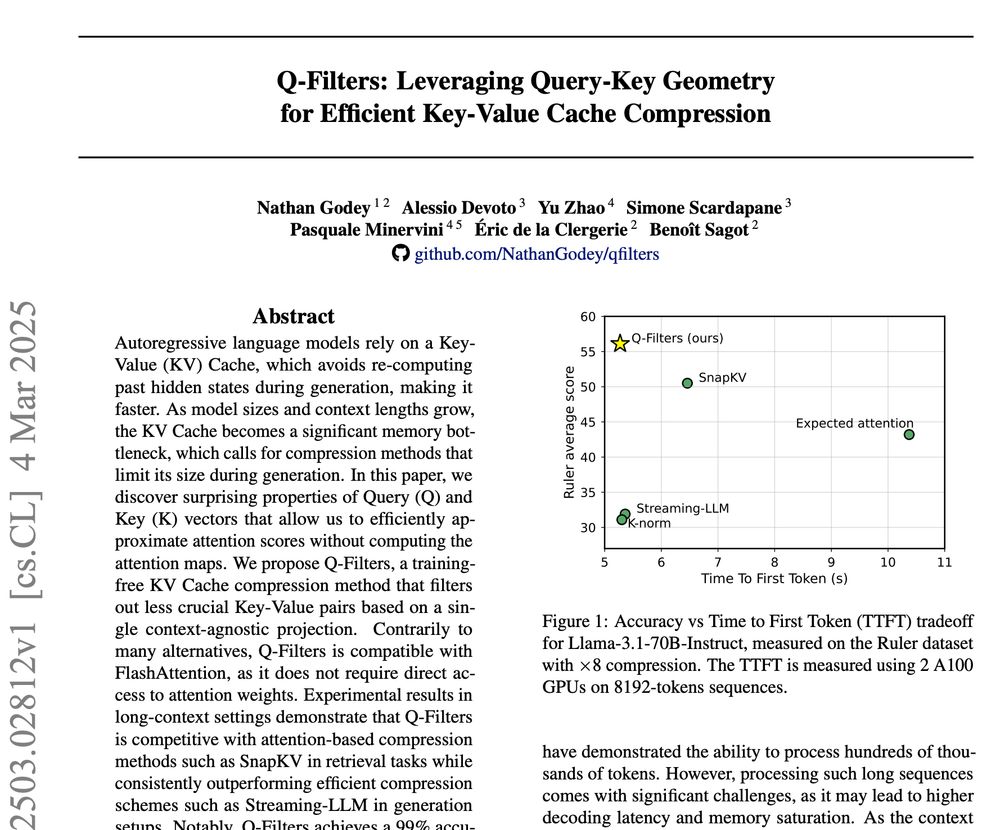

We introduce Q-Filters, a training-free method for efficient KV Cache compression!

It is compatible with FlashAttention and can compress the cache during generation, which is particularly useful for reasoning models ⚡

TLDR: we make Streaming-LLM smarter using the geometry of attention

...but it is also much better at retaining relevant KV pairs compared to fast alternatives (and can even beat slower algorithms such as SnapKV)

by @maclarke.bsky.social et al.

Studies co-occurrence of SAE features and how they can be understood as composite / ambiguous concepts.

www.lesswrong.com/posts/WNoqEi...

by @rupspace.bsky.social

Cool blog post "in defense" of weighted variants of ResNets (aka HighwayNets) - as a follow up to a previous post by @giffmana.ai.

rupeshks.cc/blog/skip.html
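
To make the distinction concrete, here is a minimal sketch contrasting a plain residual connection with a gated (Highway-style) one. The function names and the per-element gate are assumptions for illustration and are not taken from the blog post; `f` stands in for any layer and `gate` for any function producing gate logits.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def residual_block(x, f):
    # Plain ResNet-style skip connection: y = x + f(x)
    return [xi + fi for xi, fi in zip(x, f(x))]

def highway_block(x, f, gate):
    # Weighted variant: y = t * f(x) + (1 - t) * x, with t = sigmoid(gate(x))
    t = [sigmoid(g) for g in gate(x)]
    return [ti * fi + (1.0 - ti) * xi for xi, fi, ti in zip(x, f(x), t)]
```

When the gate saturates towards zero the block passes `x` through unchanged, and when it saturates towards one it returns `f(x)` alone.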

by @junhongshen1.bsky.social @lukezettlemoyer.bsky.social et al.

They use an LLM to predict a "complexity score" for each image token, which in turn decides the size of its VAE latent representation.

arxiv.org/abs/2501.03120

by Noah Hollmann et al.

A transformer for tabular data that takes an entire training set as input and provides predictions - trained on millions of synthetic datasets.

www.nature.com/articles/s41...

by @jwuphysics.bsky.social

Integrates a sparse dictionary step on the last layer of a CNN to obtain a set of interpretable features on multiple astronomical prediction tasks.

arxiv.org/abs/2501.00089

by @petar-v.bsky.social et al.

They show RoPE has distinct behavior for different rotation angles - high freq for position, low freq for semantics.

arxiv.org/abs/2410.06205
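
For reference, a minimal sketch of how standard RoPE assigns one rotation angle per pair of dimensions. The base of 10000 is the common default and is assumed here, as are the function names; the sketch only shows the frequency spread, not the paper's analysis.

```python
import math

def rope_angles(position, head_dim, base=10000.0):
    # One rotation angle per 2D pair; pair 0 rotates fastest (highest frequency).
    return [position * base ** (-2 * i / head_dim) for i in range(head_dim // 2)]

def apply_rope(x, position, base=10000.0):
    # Rotate each consecutive pair (x[2i], x[2i+1]) by its own angle.
    out = []
    for i, theta in enumerate(rope_angles(position, len(x), base)):
        a, b = x[2 * i], x[2 * i + 1]
        c, s = math.cos(theta), math.sin(theta)
        out += [a * c - b * s, a * s + b * c]
    return out
```

The first pairs change a lot between neighbouring positions, while the last pairs rotate so slowly that they stay almost constant over long ranges. The query-key dot product depends only on the relative offset between the two positions.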

by Liang et al.

Adding a simple masking operation to momentum-based optimizers can significantly boost their speed.

arxiv.org/abs/2411.16085
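
A minimal sketch of what such a mask could look like, assuming (this is not spelled out in the post) that it zeroes the components of the momentum update whose sign disagrees with the current gradient and rescales the surviving ones. The function name `cautious_update` and the `eps` constant are hypothetical.

```python
def cautious_update(momentum_update, grad, eps=1e-8):
    # Keep only the components where the update and the gradient agree in sign.
    mask = [1.0 if u * g > 0 else 0.0 for u, g in zip(momentum_update, grad)]
    # Rescale so the average magnitude is not shrunk by the masking.
    scale = len(mask) / (sum(mask) + eps)
    return [u * m * scale for u, m in zip(momentum_update, mask)]
```

The returned vector would replace the raw momentum update in the optimizer step; everything else in the optimizer stays unchanged.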

by @artidoro.bsky.social et al.

Trains a small encoder to dynamically aggregate bytes into tokens, which are input to a standard autoregressive model. Nice direction!

arxiv.org/abs/2412.09871

by @norabelrose.bsky.social @eleutherai.bsky.social

Analyzes training through the spectrum of the "training Jacobian" (∇ of trained weights wrt initial weights), identifying a large inactive subspace.

arxiv.org/abs/2412.07003

by Xu Owen He

Scales a MoE architecture up to millions of experts by implementing a fast retrieval method in the router, inspired by recent MoE scaling laws.

arxiv.org/abs/2407.04153

by Fifty et al.

Replaces the "closest codebook" operation in vector quantization with rotation and rescaling operations to improve the back-propagation of gradients.

arxiv.org/abs/2410.06424

for Vision Transformers*

by Li et al.

Shows that distilling attention patterns in ViTs is competitive with standard fine-tuning.

arxiv.org/abs/2411.09702

by Yu et al.

Identifies single weights in LLMs that destroy inference when deactivated. Tracks their mechanisms through the LLM and proposes quantization-specific techniques.

arxiv.org/abs/2411.07191

by @ekinakyurek.bsky.social et al.

Shows that test-time training (fine-tuning at inference time) strongly improves performance on the ARC dataset.

arxiv.org/abs/2411.07279

By myself, @sscardapane.bsky.social, @rgring.bsky.social and @lanalpa.bsky.social

📄 arxiv.org/abs/2501.07451

by Barrault et al.

Builds an autoregressive model in a "concept" space by wrapping the LLM in a pre-trained sentence embedder (also works with diffusion models).

arxiv.org/abs/2412.08821

Paper: arxiv.org/abs/2412.00081

Code: github.com/AntoAndGar/t...

#machinelearning

by @phillipisola.bsky.social et al.

An encoder to compress an image into a sequence of 1D tokens whose length can dynamically vary depending on the specific image.

arxiv.org/abs/2411.02393

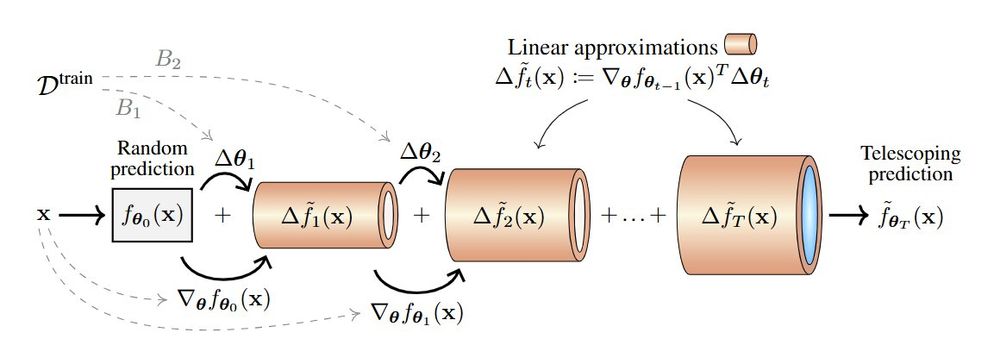

by @alanjeffares.bsky.social @aliciacurth.bsky.social

Shows that tracking 1st-order approximations to the training dynamics provides insights into many phenomena (e.g., double descent, grokking).

arxiv.org/abs/2411.00247

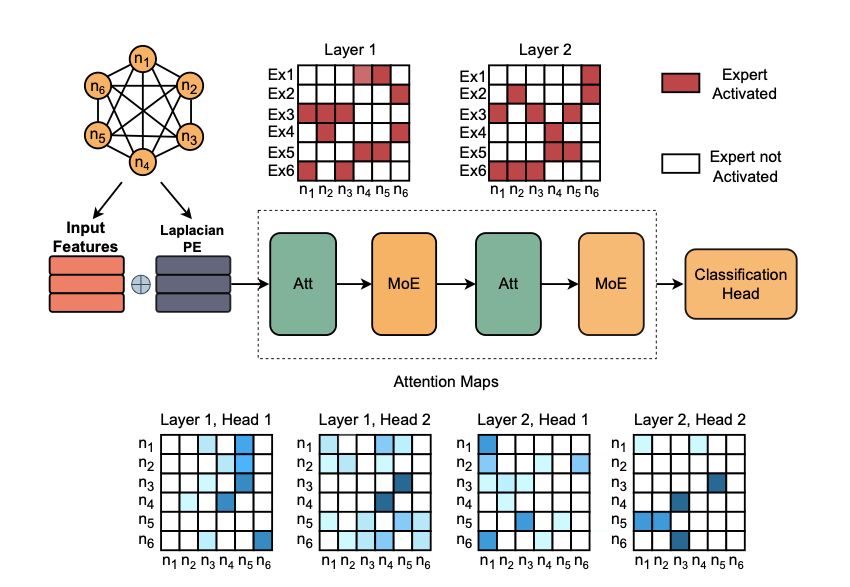

by @alessiodevoto.bsky.social @sgiagu.bsky.social et al.

We propose a MoE graph transformer for particle collision analysis, with many nice interpretability insights (e.g., expert specialization).

arxiv.org/abs/2501.03432
