🥷stealth-mode CEO

🔬prev visiting @ Cambridge | RS intern @ Amazon Search | RS intern @ Alexa.

🆓 time: 🎭 improv theater, 🤿 scuba diving, ⛰️ hiking

Kudos to the team 👏

Antonio A. Gargiulo, @mariasofiab.bsky.social, @sscardapane.bsky.social, Fabrizio Silvestri, Emanuele Rodolà.

1. Perform a low-rank approximation of layer-wise task vectors.

2. Minimize task interference by orthogonalizing inter-task singular vectors.

🧵(1/6)
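The two steps above can be sketched for a single matrix-shaped layer. This is a minimal NumPy sketch under stated assumptions, not the authors' implementation. A task matrix is taken to be the fine-tuned minus the pretrained weights of that layer. Each task keeps its top `k` singular triplets, with `k = min(m, n) // T` as an assumed budget so that the stacked bases fit in the layer. The stacked singular vectors are orthogonalized with the SVD-based polar factor, and the merged update is added back with a hypothetical scaling factor `alpha`. The helper names `low_rank`, `orthogonalize` and `merge_layer` are illustrative.

```python
import numpy as np


def low_rank(delta: np.ndarray, k: int):
    """Step 1: keep the top-k singular triplets of one layer's task matrix."""
    u, s, vt = np.linalg.svd(delta, full_matrices=False)
    return u[:, :k], s[:k], vt[:k, :]


def orthogonalize(m: np.ndarray) -> np.ndarray:
    """Nearest matrix with orthonormal columns: if m = P S Q^T, return P Q^T."""
    p, _, qt = np.linalg.svd(m, full_matrices=False)
    return p @ qt


def merge_layer(deltas: list[np.ndarray], k: int) -> np.ndarray:
    """Hypothetical helper: merge T task matrices of one layer (sketch of steps 1-2)."""
    rows, cols = deltas[0].shape
    if len(deltas) * k > min(rows, cols):
        raise ValueError("T * k must not exceed min(rows, cols)")
    us, ss, vts = zip(*(low_rank(d, k) for d in deltas))
    u = np.concatenate(us, axis=1)                    # (rows, T*k) left singular vectors
    v = np.concatenate([vt.T for vt in vts], axis=1)  # (cols, T*k) right singular vectors
    s = np.concatenate(ss)                            # (T*k,) singular values, kept as-is
    # Step 2: make the singular vectors of different tasks mutually orthogonal.
    u_perp, v_perp = orthogonalize(u), orthogonalize(v)
    return u_perp @ np.diag(s) @ v_perp.T


# Usage on synthetic weights: 4 "fine-tuned" copies of one 64x32 layer.
rng = np.random.default_rng(0)
pretrained = rng.normal(size=(64, 32))
finetuned = [pretrained + 0.1 * rng.normal(size=pretrained.shape) for _ in range(4)]
task_matrices = [w - pretrained for w in finetuned]
alpha = 1.0  # hypothetical scaling coefficient
k = min(pretrained.shape) // len(task_matrices)  # 8 components per task
merged = pretrained + alpha * merge_layer(task_matrices, k)
```

Replacing the stacked per-task bases with their nearest orthonormal counterparts is what decorrelates the singular vectors across tasks. With a single task at full rank the bases are already orthonormal, so the sketch simply returns the original task matrix.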

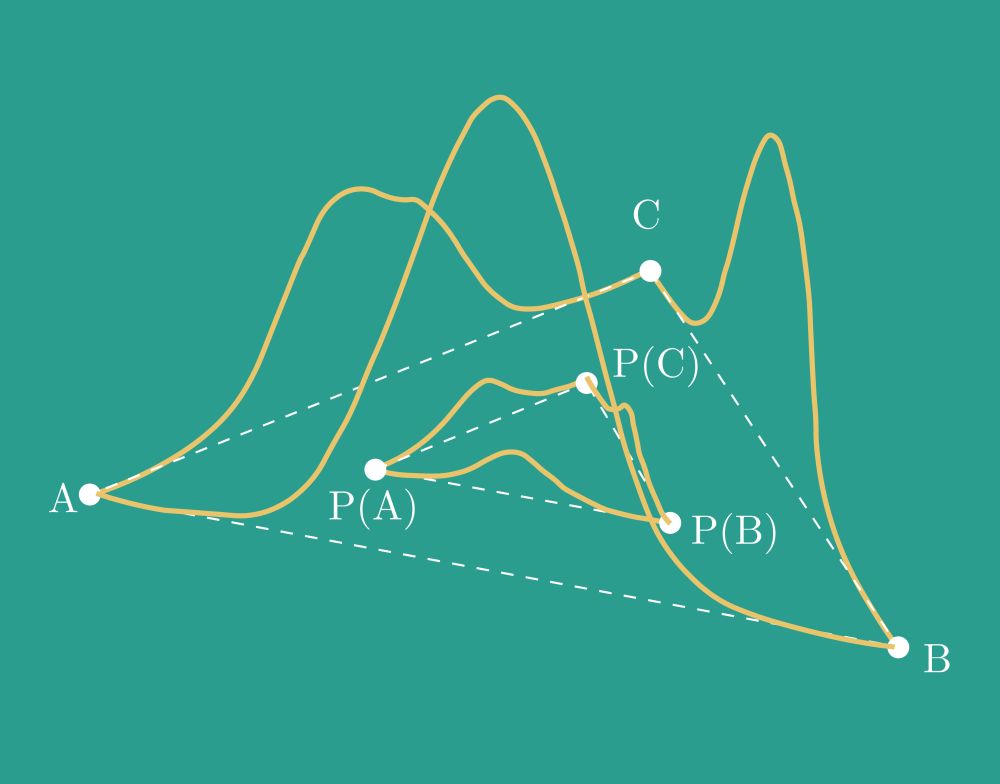

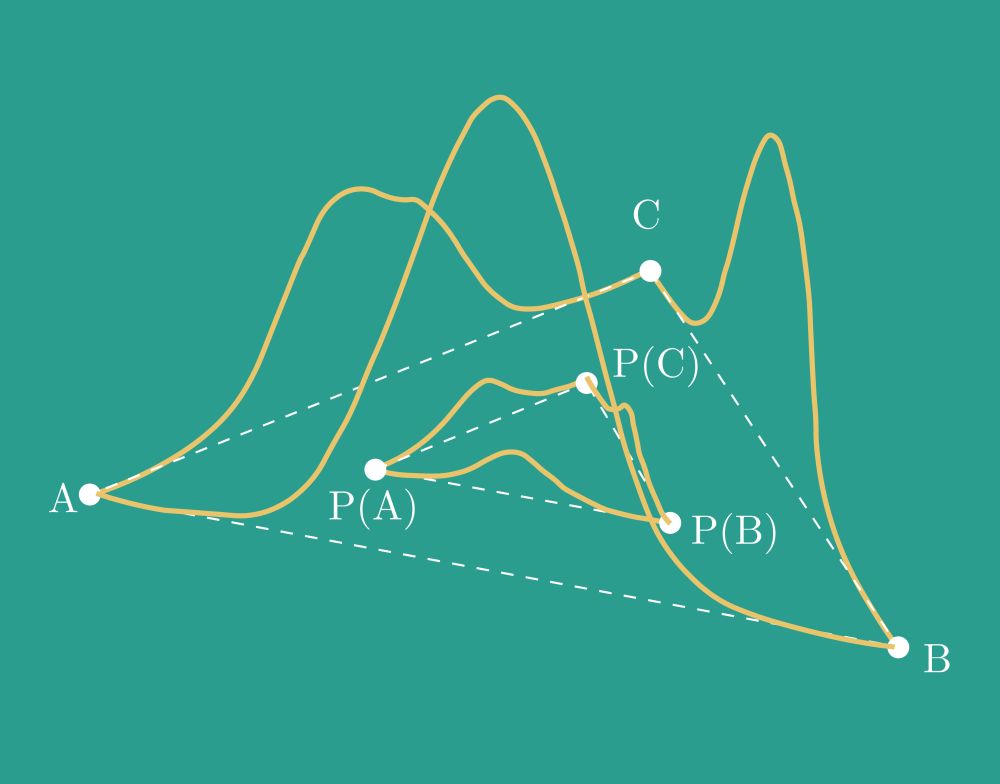

Say no more!

It just so happens that our new #NeurIPS24 paper covers exactly this!

Huh? No idea what I'm talking about? Read on!

(1/6)

💡idea: we consider task vectors at the layer level and reduce task interference by decorrelating the task-specific singular vectors of any matrix-structured layer.

🔬results: improvements by a large margin across all vision benchmarks.

We show that task vectors are inherently low-rank, and we propose a merging method that significantly improves over SOTA.

arxiv.org/abs/2412.00081
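One way to probe the low-rank claim on your own checkpoints is to count how many singular values of a layer's task matrix are needed to retain most of its energy. The sketch below is an illustration under assumptions: NumPy, a 95% threshold on the squared Frobenius norm (an arbitrary choice), and synthetic matrices standing in for real task vectors. `rank_for_energy` is a hypothetical helper, not code from the paper.

```python
import numpy as np


def rank_for_energy(delta: np.ndarray, energy: float = 0.95) -> int:
    """Smallest k whose top-k singular values hold `energy` of ||delta||_F^2."""
    s = np.linalg.svd(delta, compute_uv=False)   # singular values, descending
    cumulative = np.cumsum(s**2) / np.sum(s**2)  # energy fraction kept by the top-k
    k = int(np.searchsorted(cumulative, energy)) + 1
    return min(k, len(s))                        # guard against rounding at energy=1.0


rng = np.random.default_rng(0)
# Synthetic stand-ins: a rank-4 update plus tiny noise, and an unstructured update.
low_rank_update = rng.normal(size=(128, 4)) @ rng.normal(size=(4, 64))
low_rank_update += 1e-3 * rng.normal(size=low_rank_update.shape)
dense_update = rng.normal(size=(128, 64))

print(rank_for_energy(low_rank_update), "of", min(low_rank_update.shape))
print(rank_for_energy(dense_update), "of", min(dense_update.shape))
```

A task matrix that is low-rank in this sense needs only a small fraction of its singular values, while an unstructured matrix of the same shape needs many.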
