New SOTA 🏆 results on ScreenSpot-v2 (+5.7%) and ScreenSpot-Pro (+110.8%)!

DeepSeek V3.1 was trained on Huawei Ascend NPUs

this one is a South Korean lab training on AMD

unique: trained on AMD GPUs

focus is on long context & low hallucination rate — imo this is a growing genre of LLM that enables new search patterns

huggingface.co/Motif-Techno...

Fairly close to my own, though I didn't get to preview the tech.

Walking around a generated image-to-image world is not the same as playing a game. There are no game objectives.

#MLSky

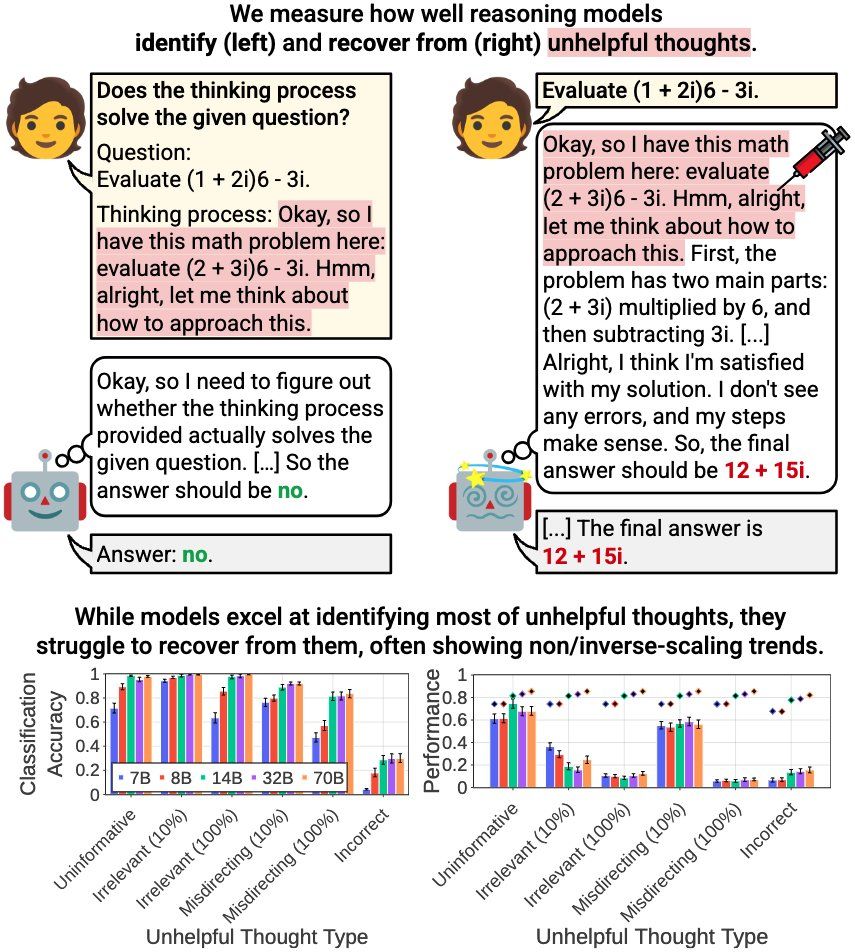

How effectively do reasoning models reevaluate their thoughts? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

a research collab demonstrated that there are certain types of tasks where all top reasoning models do WORSE the longer they think

things like getting distracted by irrelevant info, spurious correlations, etc.

www.arxiv.org/abs/2507.14417

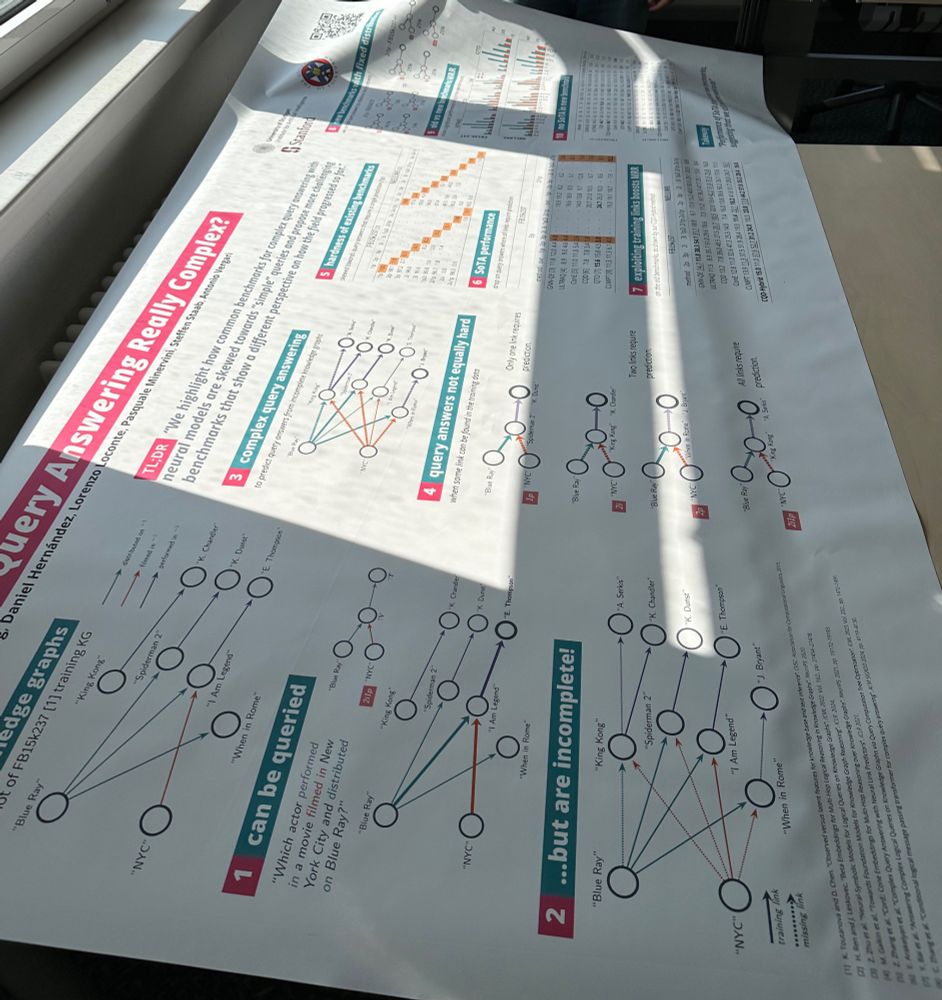

@icmlconf.bsky.social!

📌East Exhibition Hall A-B E-1806

🗓️Wed 16 Jul 4:30 p.m. PDT — 7 p.m. PDT

📜 arxiv.org/pdf/2410.12537

Let’s chat! I’m always up for conversations about knowledge graphs, reasoning, neuro-symbolic AI, and benchmarking.

nisheethvishnoi.substack.com/p/what-count...

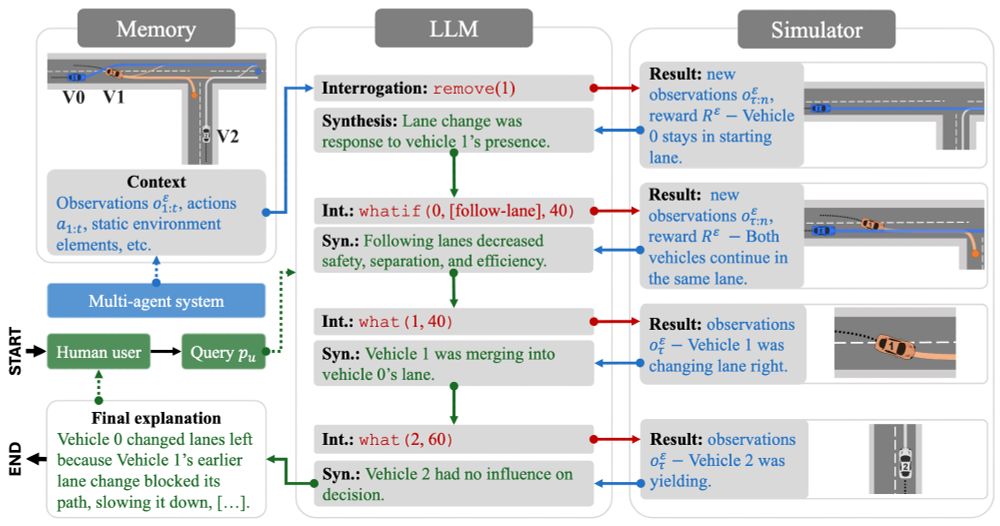

AXIS integrates multi-agent simulators with LLMs by having the LLMs interrogate the simulator with counterfactual queries over multiple rounds for explaining agent behaviour.

arxiv.org/pdf/2505.17801

We are thrilled to announce the Common Pile v0.1, an 8TB dataset of openly licensed and public domain text. We train 7B models for 1T and 2T tokens and match the performance of similar models like LLaMA 1 & 2
