In collaboration with @commoncrawl.bsky.social @mlcommons.org @jhu.edu we built a language identification (LID) benchmark on actual Common Crawl text covering 109 languages. Existing evaluations overestimate how well LID works on web data.

arxiv.org/abs/2601.18026

Jan 9th at 2 pm US Eastern Time

Our first talk is by @catherinearnett.bsky.social on tokenizers, their limitations, and how to improve them.

We are thrilled to announce the Common Pile v0.1, an 8TB dataset of openly licensed and public domain text. We train 7B models for 1T and 2T tokens and match the performance of similar models like LLaMA 1 & 2.

We are organising the 1st Workshop on Multilingual Data Quality Signals with @mlcommons.org and @eleutherai.bsky.social, held in tandem with @colmweb.org. Submit your research on multilingual data quality!

Submission deadline is 23 June, more info: wmdqs.org

arXiv: arxiv.org/abs/2502.02289

#NLProc #LLM #Evaluation

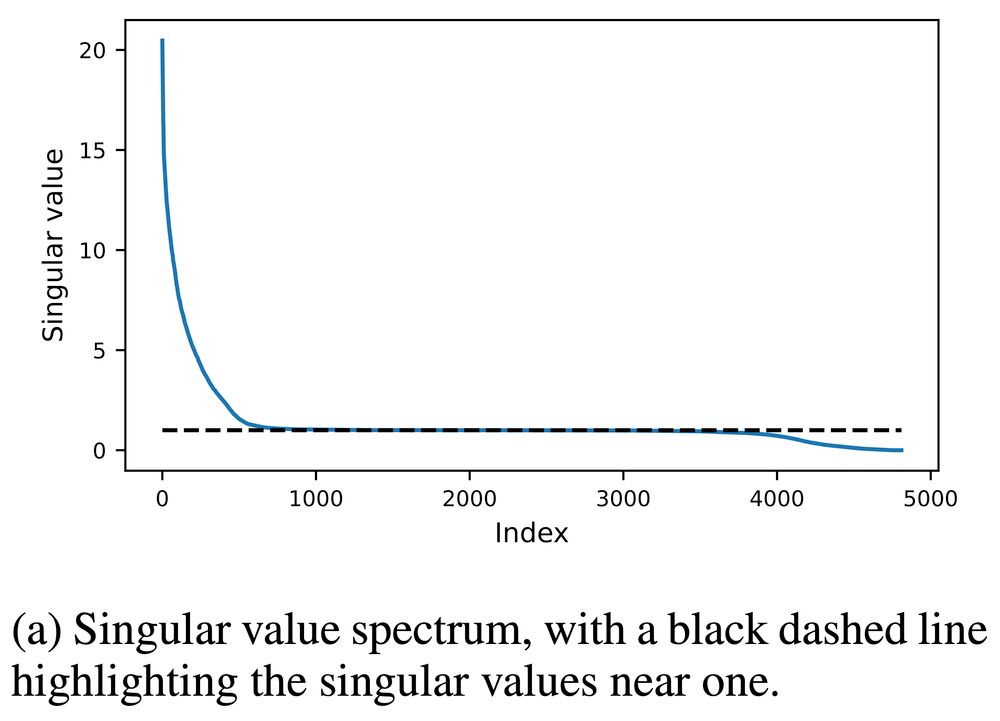

In this new paper, we answer this question by analyzing the training Jacobian, the matrix of derivatives of the final parameters with respect to the initial parameters.

https://arxiv.org/abs/2412.07003
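As a minimal sketch of what the training Jacobian measures, the Python below trains a hypothetical two-parameter linear regression (made-up data `X`, `Y`, learning rate `LR`, step count `STEPS`; not the paper's models or setup) with full-batch gradient descent, then estimates d theta_final / d theta_init by central finite differences. For this quadratic loss each step maps theta to (I - LR*H) theta + const with H = X^T X / n, so the exact Jacobian is (I - LR*H)^STEPS, which the sketch uses as a check.

```python
# Hypothetical toy setup: 2-parameter linear regression, full-batch gradient
# descent on a mean squared error loss. Not the paper's models or training setup.
X = [[1.0, 0.5], [0.3, 2.0], [1.5, -1.0]]
Y = [1.0, 2.0, 0.5]
LR = 0.1
STEPS = 10


def grad(theta):
    """Gradient of (1/2n) * sum_i (x_i . theta - y_i)^2 with respect to theta."""
    n = len(X)
    g = [0.0] * len(theta)
    for x, y in zip(X, Y):
        resid = sum(xi * ti for xi, ti in zip(x, theta)) - y
        for j, xj in enumerate(x):
            g[j] += resid * xj / n
    return g


def train(theta0):
    """Run STEPS of gradient descent from theta0 and return the final parameters."""
    theta = list(theta0)
    for _ in range(STEPS):
        g = grad(theta)
        theta = [t - LR * gj for t, gj in zip(theta, g)]
    return theta


def training_jacobian(theta0, h=1e-5):
    """J[i][j] = d theta_final[i] / d theta0[j], via central finite differences."""
    d = len(theta0)
    J = [[0.0] * d for _ in range(d)]
    for j in range(d):
        plus, minus = list(theta0), list(theta0)
        plus[j] += h
        minus[j] -= h
        fp, fm = train(plus), train(minus)
        for i in range(d):
            J[i][j] = (fp[i] - fm[i]) / (2 * h)
    return J


def analytic_jacobian():
    """Exact Jacobian for this quadratic loss: (I - LR * H)^STEPS, H = X^T X / n."""
    n, d = len(X), len(X[0])
    H = [[sum(x[a] * x[b] for x in X) / n for b in range(d)] for a in range(d)]
    M = [[(1.0 if a == b else 0.0) - LR * H[a][b] for b in range(d)] for a in range(d)]
    J = [[1.0 if a == b else 0.0 for b in range(d)] for a in range(d)]
    for _ in range(STEPS):
        J = [[sum(J[a][k] * M[k][b] for k in range(d)) for b in range(d)] for a in range(d)]
    return J


J_fd = training_jacobian([0.0, 0.0])
J_exact = analytic_jacobian()
for row_fd, row_ex in zip(J_fd, J_exact):
    print([round(v, 6) for v in row_fd], [round(v, 6) for v in row_ex])
```

Because the loss here is quadratic, the Jacobian is the same for every starting point. For real neural networks it generally depends on the initialization, so it has to be computed around a specific one.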

Is a linear representation a linear function (that preserves the origin) or an affine function (that does not)? This distinction matters in practice. arxiv.org/abs/2411.09003
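To make the distinction concrete, here is a minimal sketch with a hypothetical 2x2 matrix `A` and offset `b` (made-up values, not from the paper). A linear map f(x) = Ax sends 0 to 0 and satisfies f(x + y) = f(x) + f(y); an affine map g(x) = Ax + b does neither when b is nonzero, although differences g(x) - g(y) = A(x - y) still behave linearly.

```python
# Hypothetical 2-D maps for illustration only; values are not from the paper.
A = [[2.0, 1.0], [0.0, 3.0]]
b = [1.0, -1.0]


def linear(x):
    """f(x) = A x."""
    return [sum(A[i][j] * x[j] for j in range(2)) for i in range(2)]


def affine(x):
    """g(x) = A x + b."""
    return [v + bi for v, bi in zip(linear(x), b)]


def preserves_origin(f):
    """True if f maps the zero vector to the zero vector."""
    return all(abs(v) < 1e-12 for v in f([0.0, 0.0]))


def is_additive(f, x, y):
    """True if f(x + y) == f(x) + f(y) for this particular pair."""
    lhs = f([xi + yi for xi, yi in zip(x, y)])
    rhs = [u + v for u, v in zip(f(x), f(y))]
    return all(abs(p - q) < 1e-9 for p, q in zip(lhs, rhs))


x, y = [1.0, 2.0], [-0.5, 4.0]
print(preserves_origin(linear), preserves_origin(affine))  # prints: True False
print(is_additive(linear, x, y), is_additive(affine, x, y))  # prints: True False
# The offset b cancels in differences: affine(x) - affine(y) == A (x - y).
diff = [p - q for p, q in zip(affine(x), affine(y))]
print(diff, linear([xi - yi for xi, yi in zip(x, y)]))  # prints: [1.0, -6.0] [1.0, -6.0]
```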
