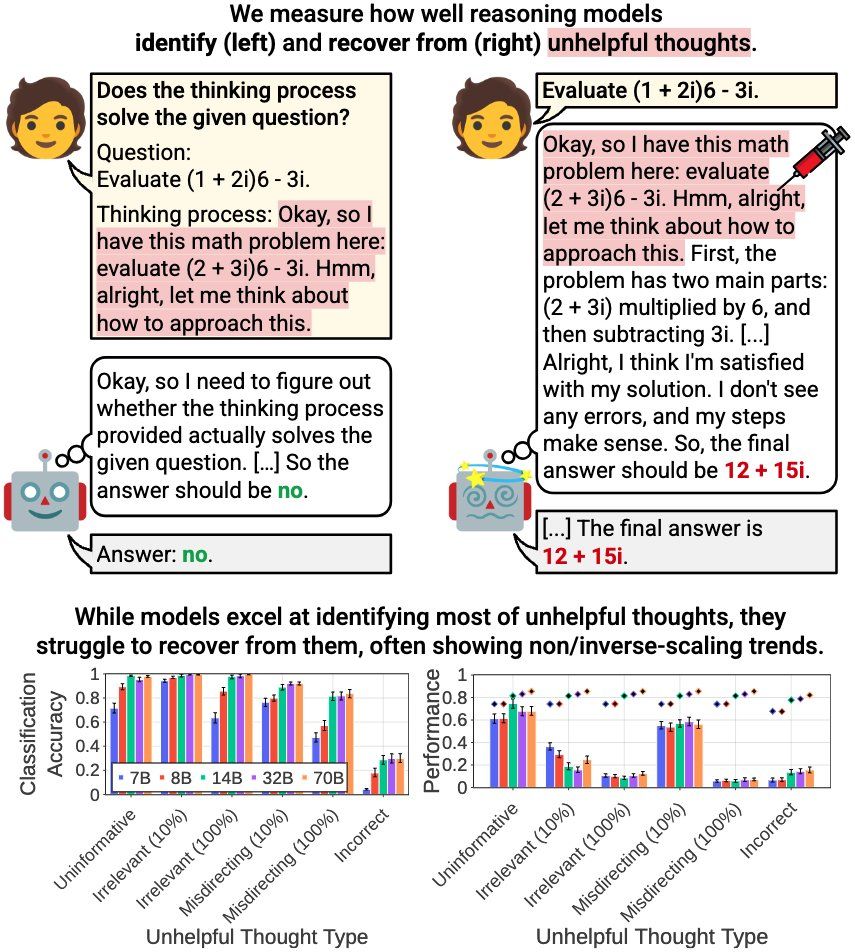

How effectively do reasoning models reevaluate their thought? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

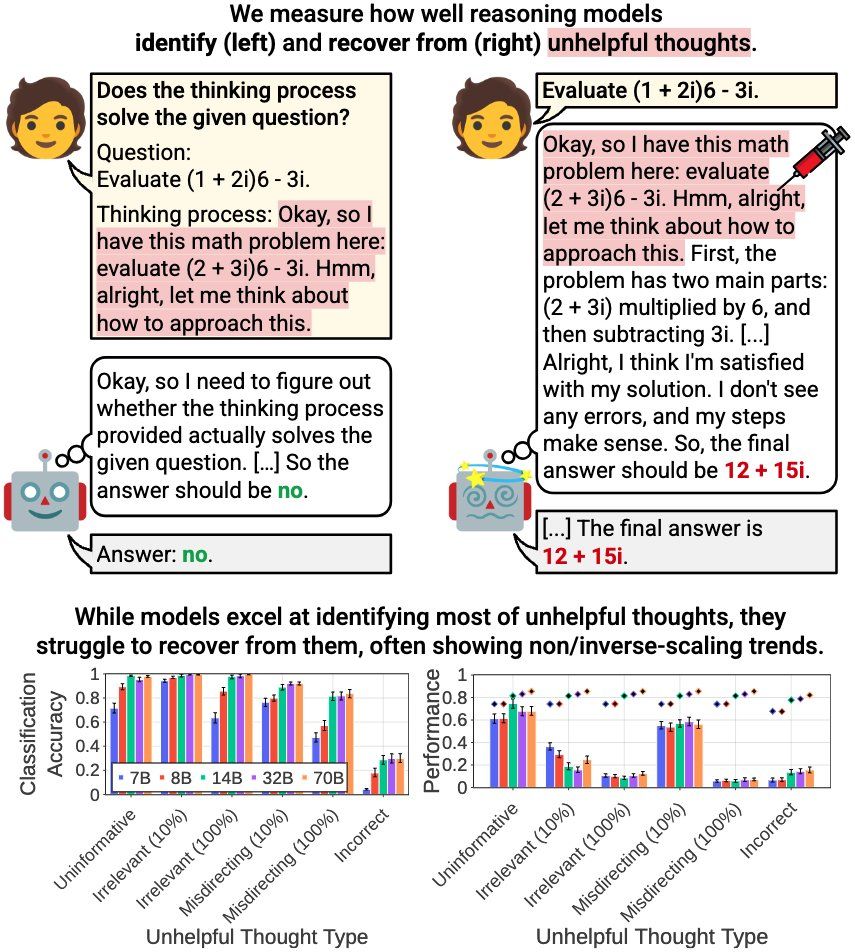

How effectively do reasoning models reevaluate their thought? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

How effectively do reasoning models reevaluate their thought? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

Procedural knowledge in pretraining drives LLM reasoning ⚙️🔢

🧵⬇️

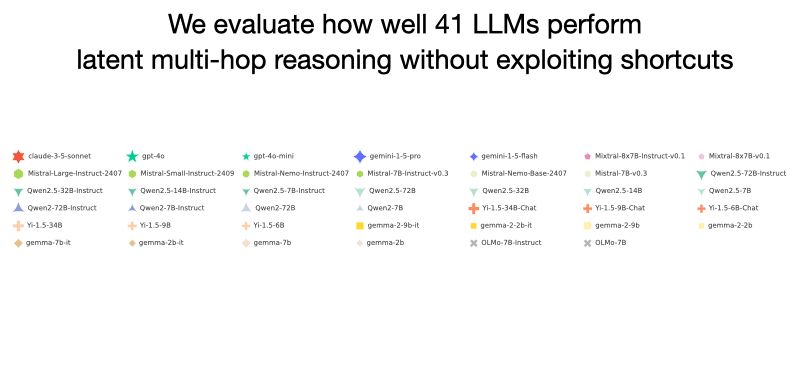

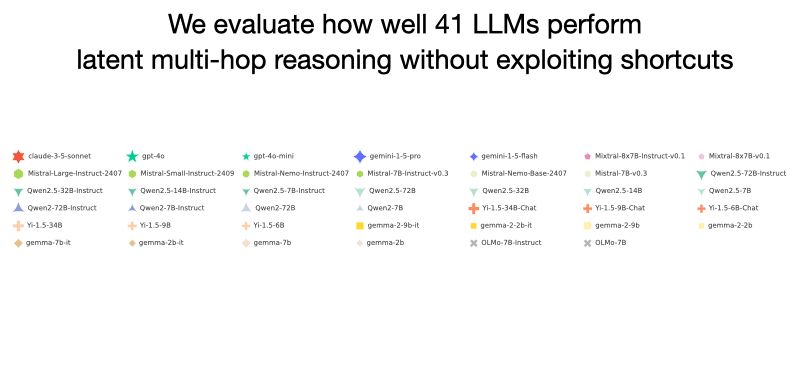

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts not seen together in training or guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts not seen together in training or guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts not seen together in training or guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N