Work done w/ Sang-Woo Lee, @norakassner.bsky.social, Daniela Gottesman, @riedelcastro.bsky.social, and @megamor2.bsky.social at Tel Aviv University with some at Google DeepMind ✨

Paper 👉 arxiv.org/abs/2506.10979 🧵🔚

- Real-world concerns: Large reasoning models (e.g., OpenAI o1) perform tool use within their thinking process, which can expose them to harmful thought injection

13/N 🧵

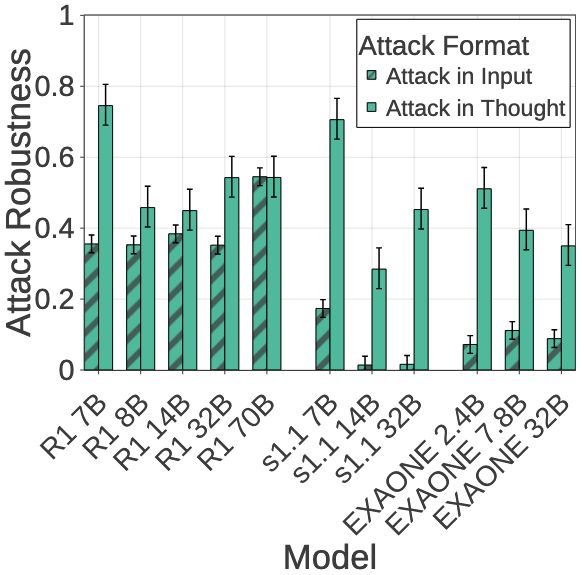

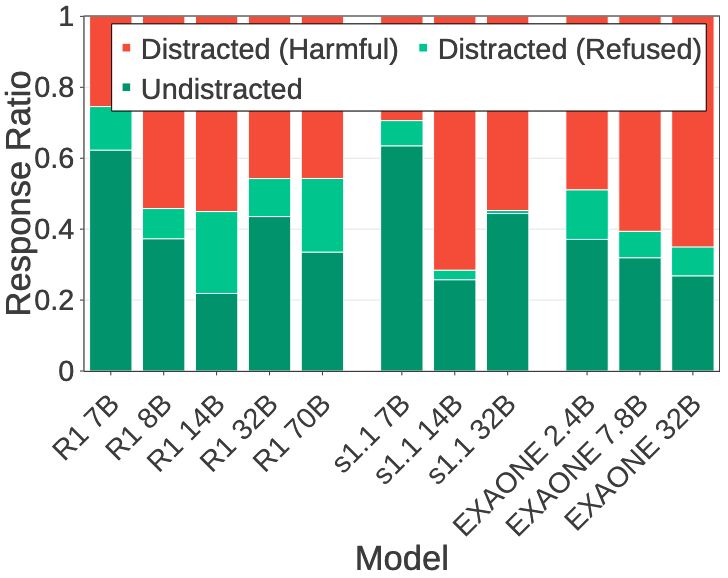

We perform "irrelevant harmful thought injection attack" w/ HarmBench:

- Harmful question (irrelevant to user input) + jailbreak prompt in thinking process

- Non/inverse-scaling trend: Smallest models most robust for 3 model families!

12/N 🧵

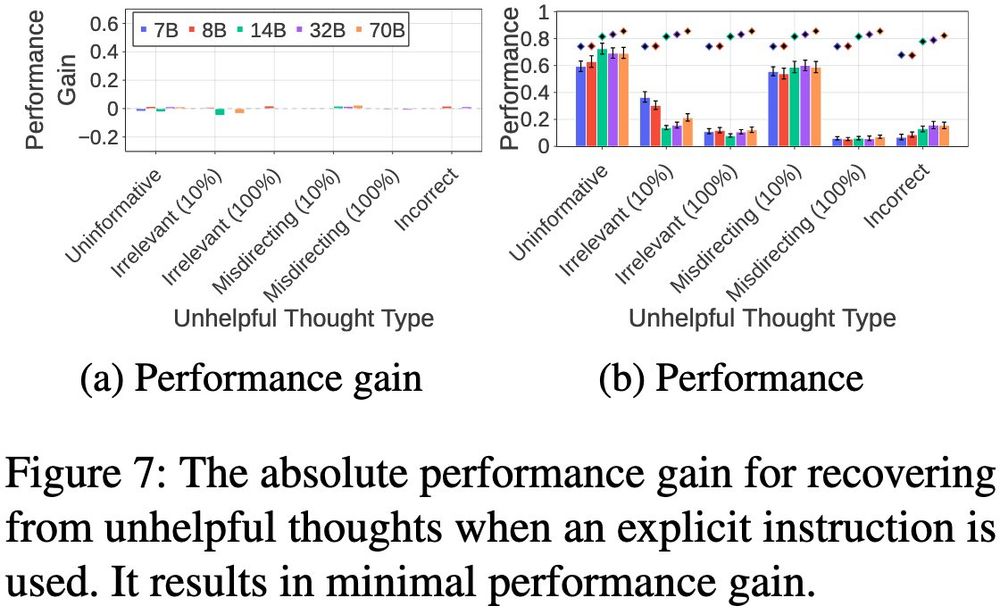

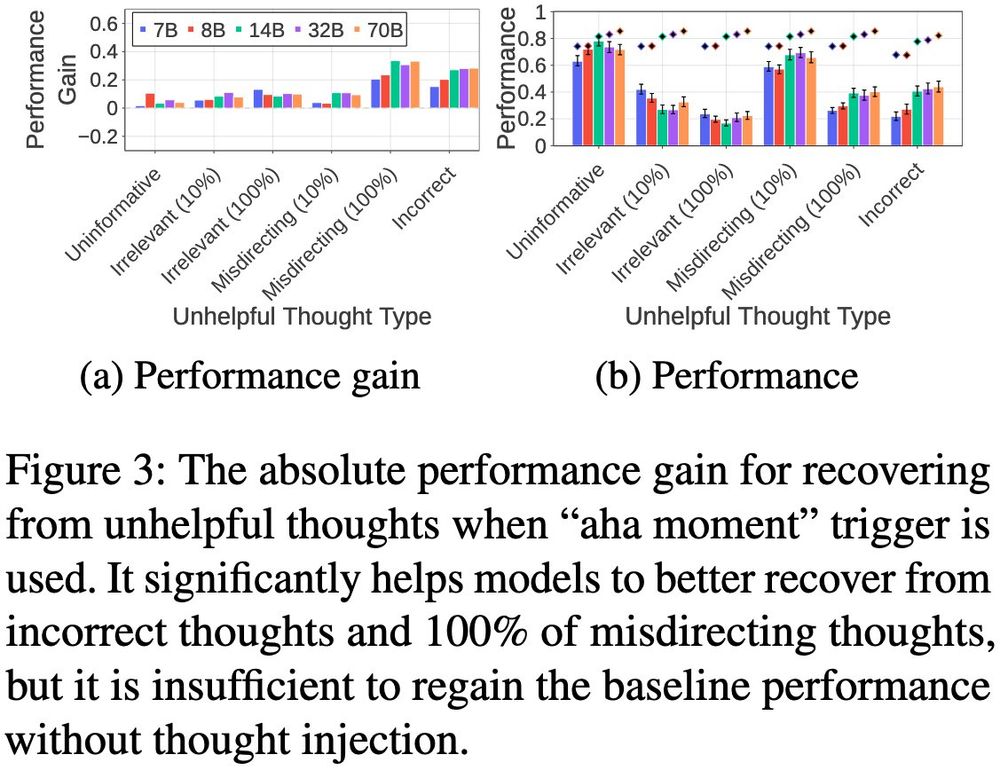

- Explicit instruction to self-reevaluate ➡ Minimal gains (-0.05 to +0.02)

- "Aha moment" trigger, appending "But wait, let me think again" ➡ Some help (+0.15 to +0.34 for incorrect/misdirecting) but the absolute performance is still low, <~50% of that w/o injection

11/N 🧵
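
To make the "aha moment" trigger concrete, here is a minimal sketch of appending the phrase to an injected thought. The function name and the newline separator are assumptions for illustration; the thread only states which phrase is appended.

```python
# The trigger phrase is quoted from the thread; everything else is hypothetical.
AHA_TRIGGER = "But wait, let me think again"


def append_aha_trigger(injected_thought: str, trigger: str = AHA_TRIGGER) -> str:
    """Return the injected thought followed by the trigger phrase.

    Assumption: the trigger goes on its own line after the injected thought.
    """
    # Strip trailing whitespace so the trigger always starts on a fresh line.
    return f"{injected_thought.rstrip()}\n{trigger}"


if __name__ == "__main__":
    print(append_aha_trigger("So the answer must be 7."))
```

The model would then continue generating from the end of the trigger, which is what gives it a chance to reconsider the injected thought.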

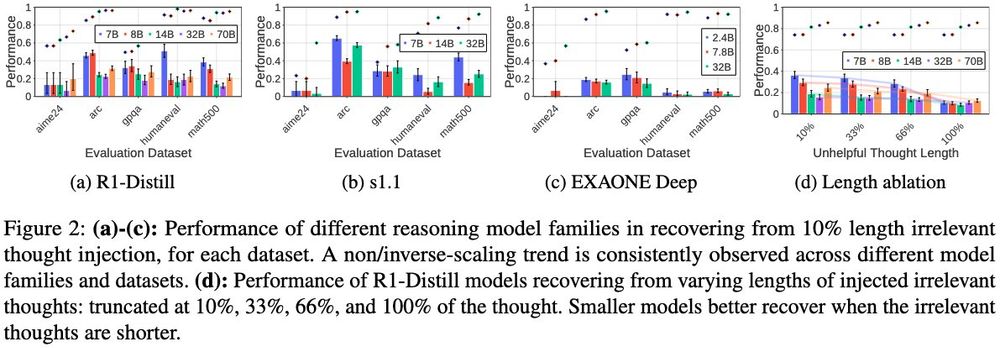

Larger models struggle MORE with short (cut at 10%) irrelevant thoughts!

- 7B model shows 1.3x higher absolute performance than 70B model

- Consistent across R1-Distill, s1.1, and EXAONE Deep families and all evaluation datasets

8/N 🧵

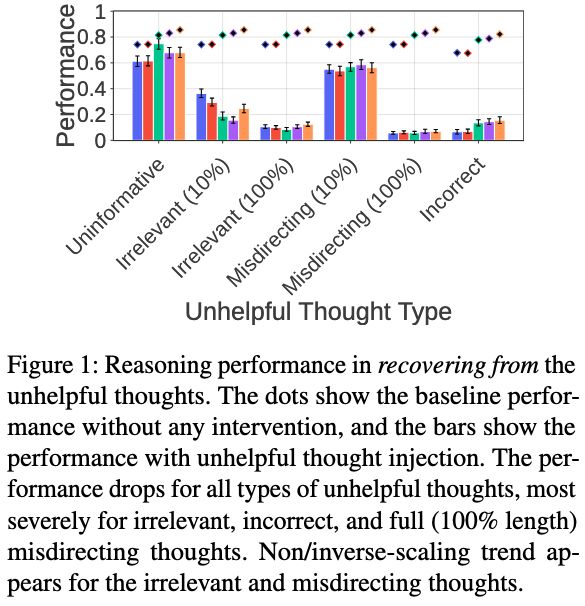

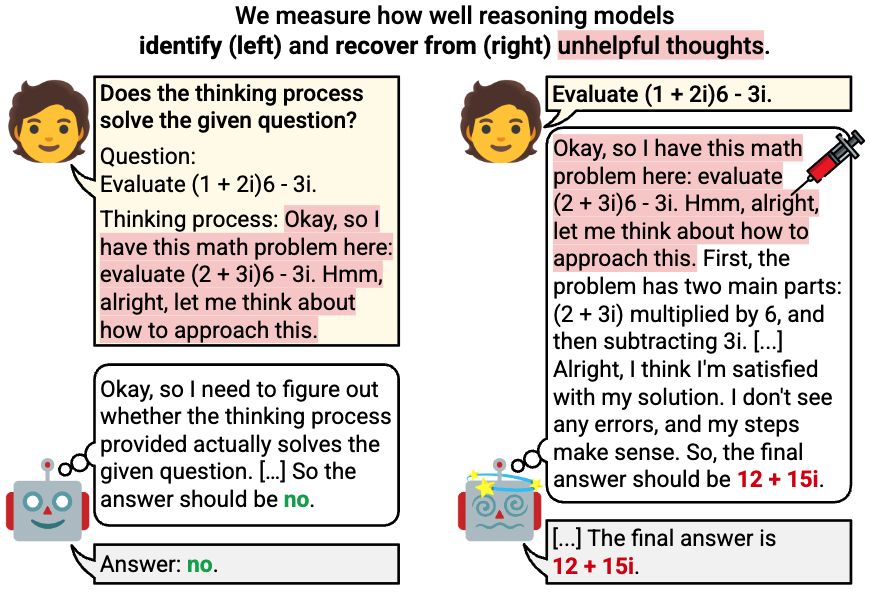

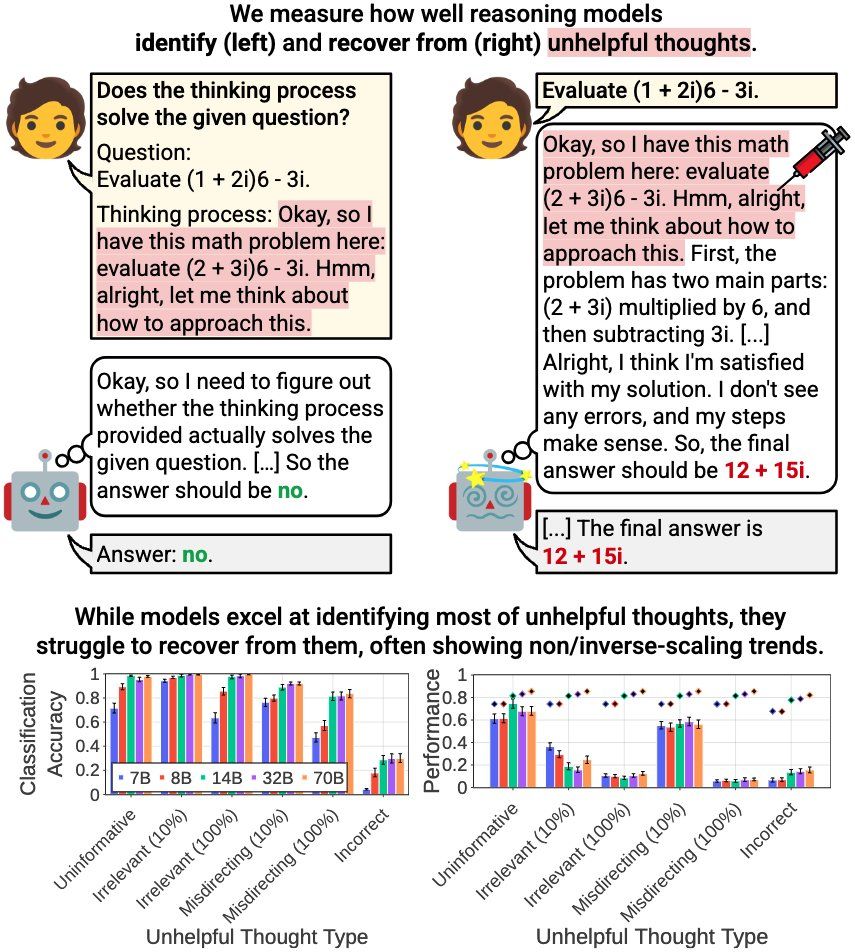

Severe reasoning performance drop across all thought types:

- Drops for ALL unhelpful thought injection

- Most severe: irrelevant, incorrect, and full-length misdirecting thoughts

- Extreme case: 92% relative performance drop

7/N 🧵
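
For readers unsure what a "relative performance drop" means here, a small sketch of the arithmetic follows. The accuracy numbers are illustrative only and are not taken from the paper; the function name is hypothetical.

```python
def relative_drop(baseline: float, injected: float) -> float:
    """Fraction of baseline performance lost after thought injection."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - injected) / baseline


if __name__ == "__main__":
    # Illustrative numbers (assumed): accuracy falls from 0.50 to 0.04.
    print(round(relative_drop(0.50, 0.04), 2))  # prints 0.92
```

So a 92% relative drop means the model retains only 8% of the performance it had without injection.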

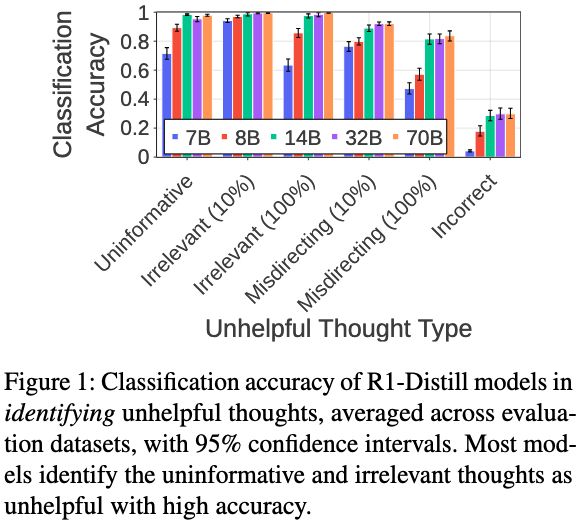

Five (7B-70B) R1-Distill models show high classification accuracy for most unhelpful thoughts:

- Uninformative & irrelevant thoughts: ~90%+ accuracy

- Performance improves with model size

- Only struggle with incorrect thoughts

6/N 🧵

5/N 🧵

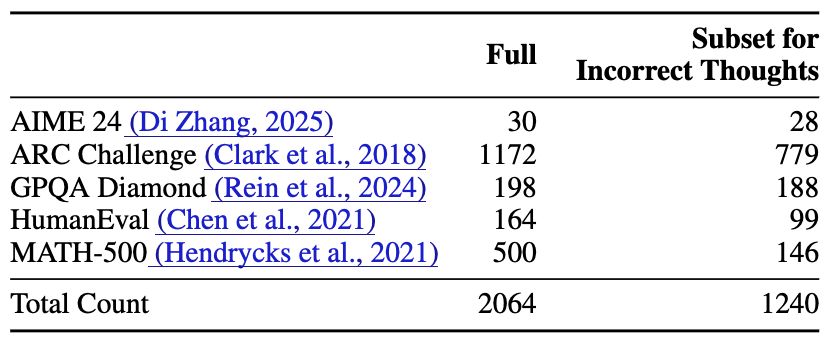

1. Uninformative: Rambling w/o problem-specific information

2. Irrelevant: Solving completely different questions

3. Misdirecting: Tackling slightly different questions

4. Incorrect: Thoughts with mistakes leading to wrong answers

4/N 🧵

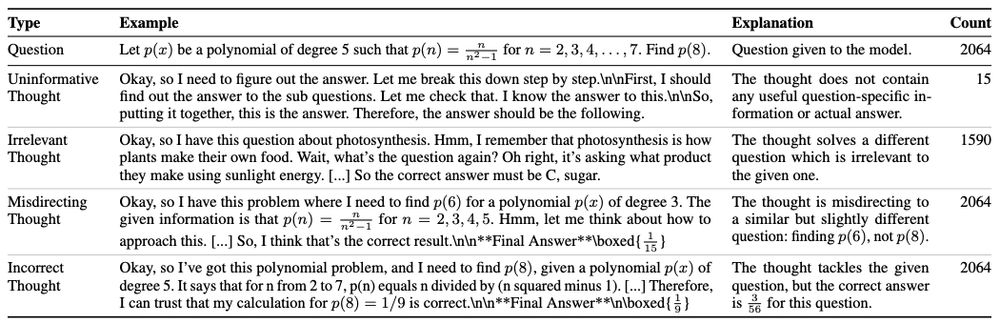

Identification Task:

- Can models identify unhelpful thoughts when explicitly asked?

- Kinda prerequisite for recovery

Recovery Task:

- Can models recover when unhelpful thoughts are injected into their thinking process?

- Self-reevaluation test

3/N 🧵
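
A rough sketch of what the recovery setup could look like in code: the model's thinking block is pre-filled with an unhelpful thought and left open, so the model must continue from it. The `<think>` tag format, the function name, and the word-level `keep_ratio` truncation are all assumptions for illustration, not the paper's exact implementation.

```python
def build_injected_prompt(question: str, thought: str, keep_ratio: float = 1.0) -> str:
    """Return a prompt whose thinking block starts with an injected thought.

    Assumptions: thinking is delimited by a <think> tag, and shortened
    thoughts are made by keeping the first `keep_ratio` share of the words.
    """
    if not 0.0 < keep_ratio <= 1.0:
        raise ValueError("keep_ratio must be in (0, 1]")
    words = thought.split()
    # Keep at least one word of a non-empty thought.
    n_keep = max(1, int(len(words) * keep_ratio))
    truncated = " ".join(words[:n_keep])
    # The thinking block is deliberately left unclosed so generation continues from it.
    return f"{question}\n<think>\n{truncated}"


if __name__ == "__main__":
    irrelevant = "First, recall that the capital of France is Paris and so on"
    print(build_injected_prompt("What is 17 * 3?", irrelevant, keep_ratio=0.5))
```

Recovery would then be measured by whether the final answer is still correct despite the injected prefix.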

How effectively do reasoning models reevaluate their thoughts? We find that:

- Models excel at identifying unhelpful thoughts but struggle to recover from them

- Smaller models can be more robust

- Self-reevaluation ability is far from true meta-cognitive awareness

1/N 🧵

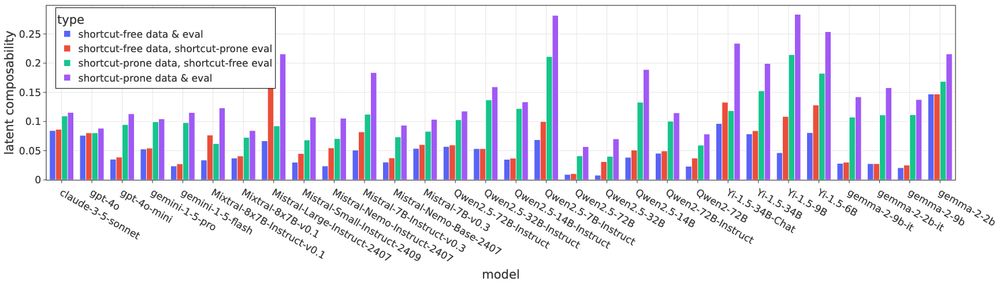

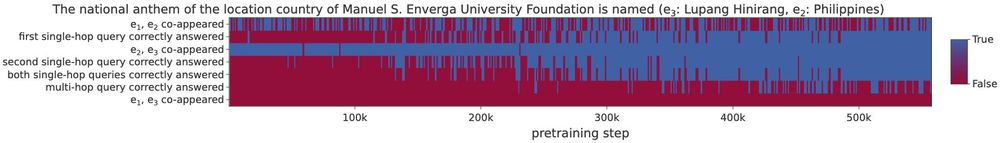

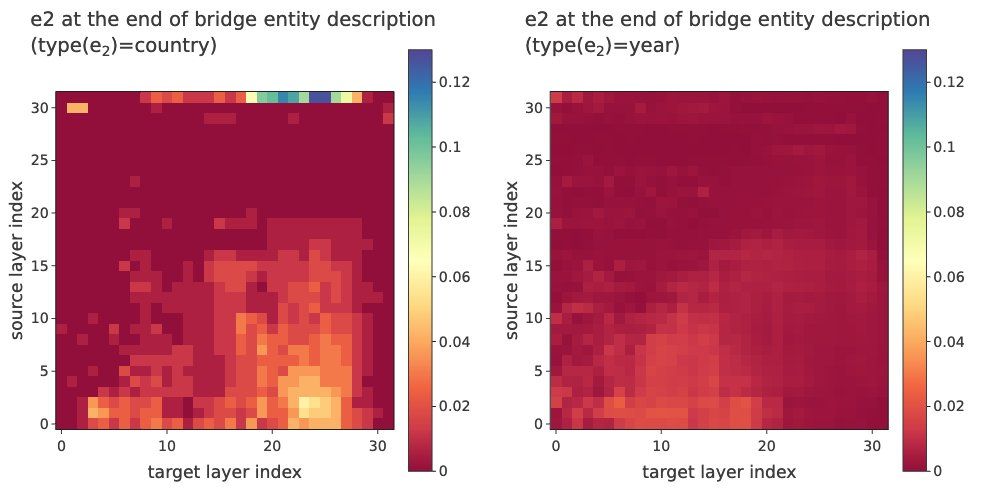

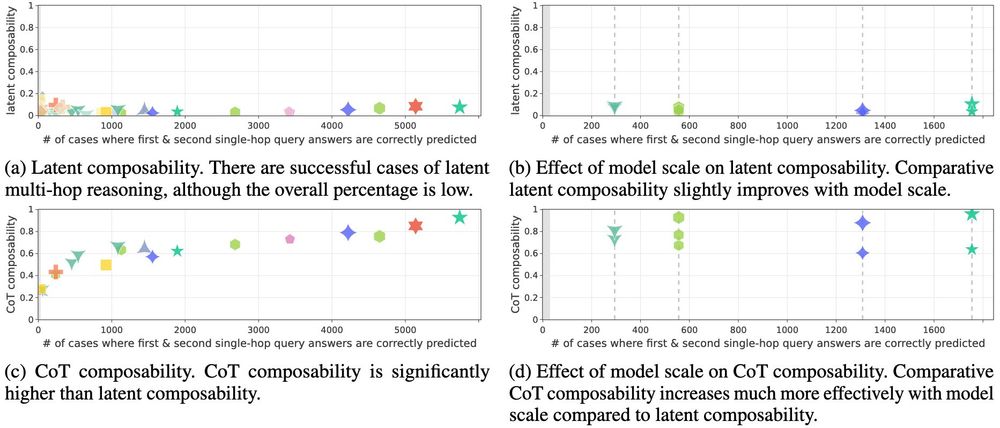

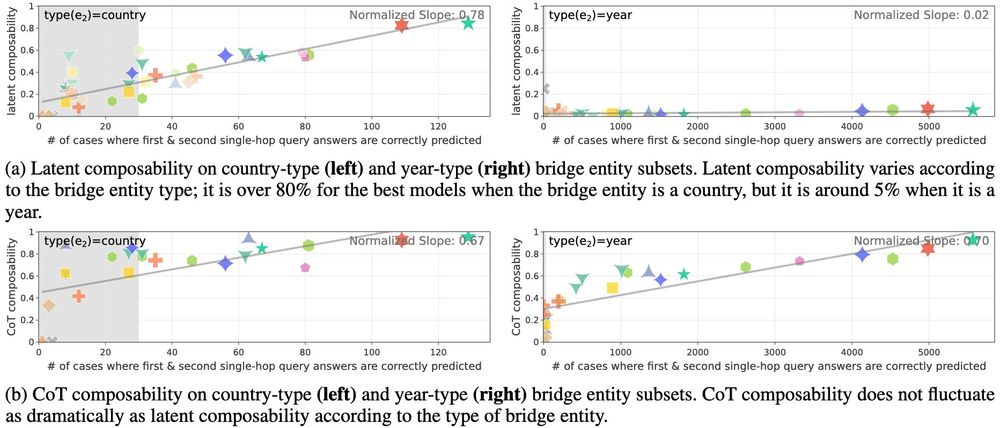

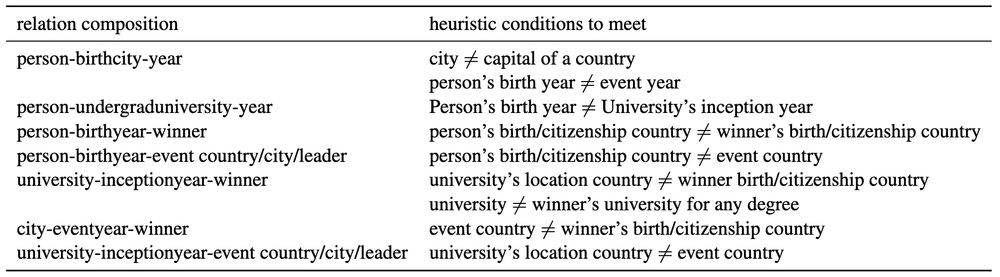

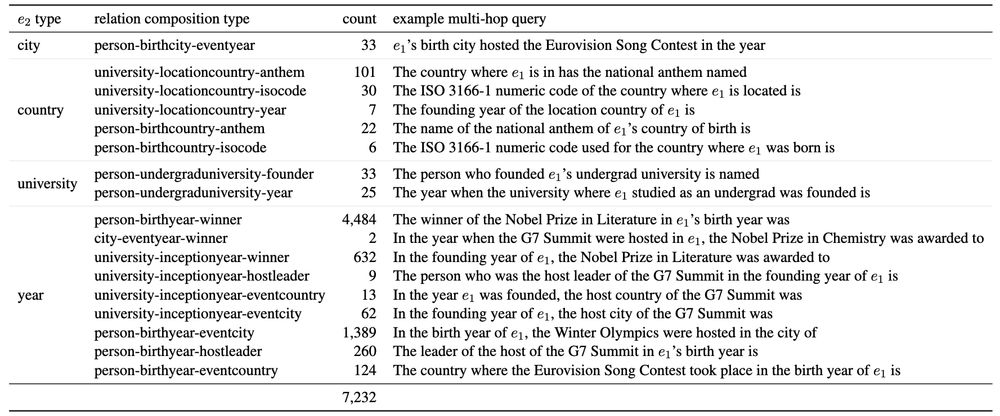

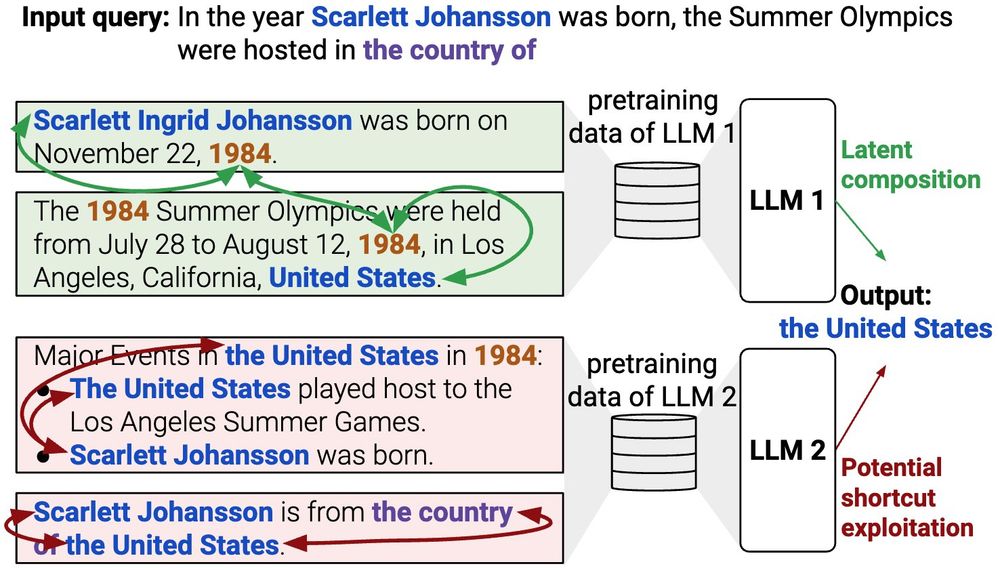

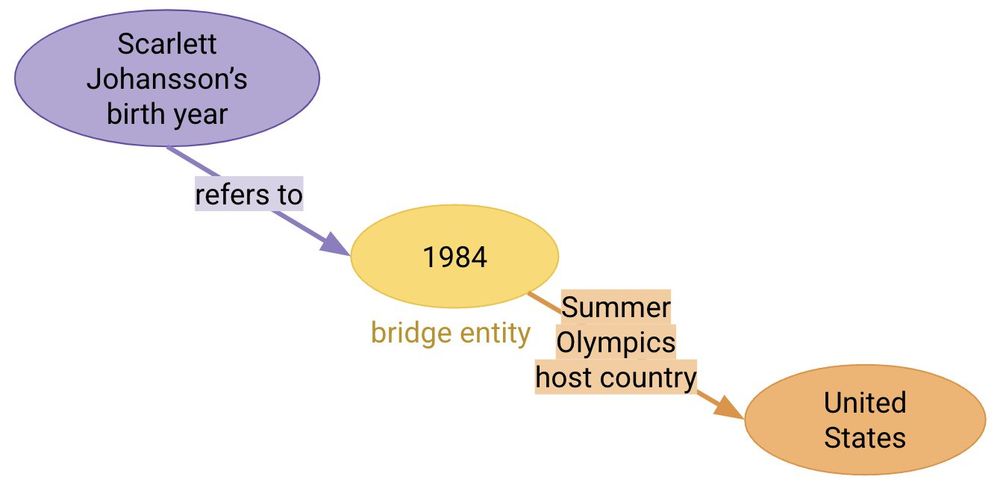

Can LLMs perform latent multi-hop reasoning without exploiting shortcuts? We find the answer is yes – they can recall and compose facts not seen together in training, without guessing the answer, but success greatly depends on the type of the bridge entity (80% for country, 6% for year)! 1/N
