Human/AI interaction. ML interpretability. Visualization as design, science, art. Professor at Harvard, and part-time at Google DeepMind.

Reposted by Martin Wattenberg

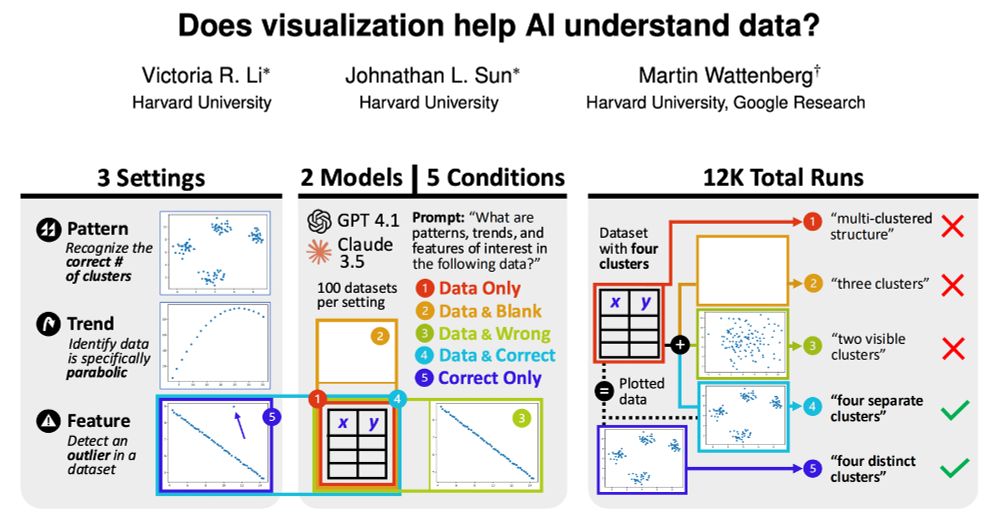

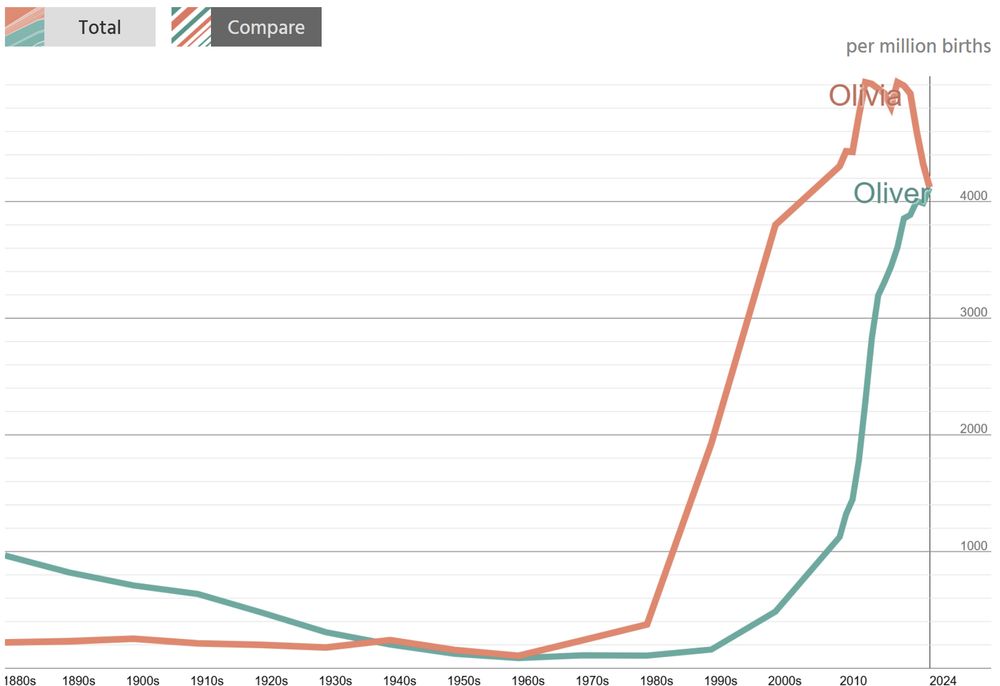

In a new paper, we provide initial evidence that it does! GPT 4.1 and Claude 3.5 describe three synthetic datasets more precisely and accurately when raw data is accompanied by a scatter plot. Read more in the 🧵!

Reposted by Martin Wattenberg

See the ARBOR discussion board for a thread for each project underway.

github.com/ArborProjec...

Reposted by Martin Wattenberg

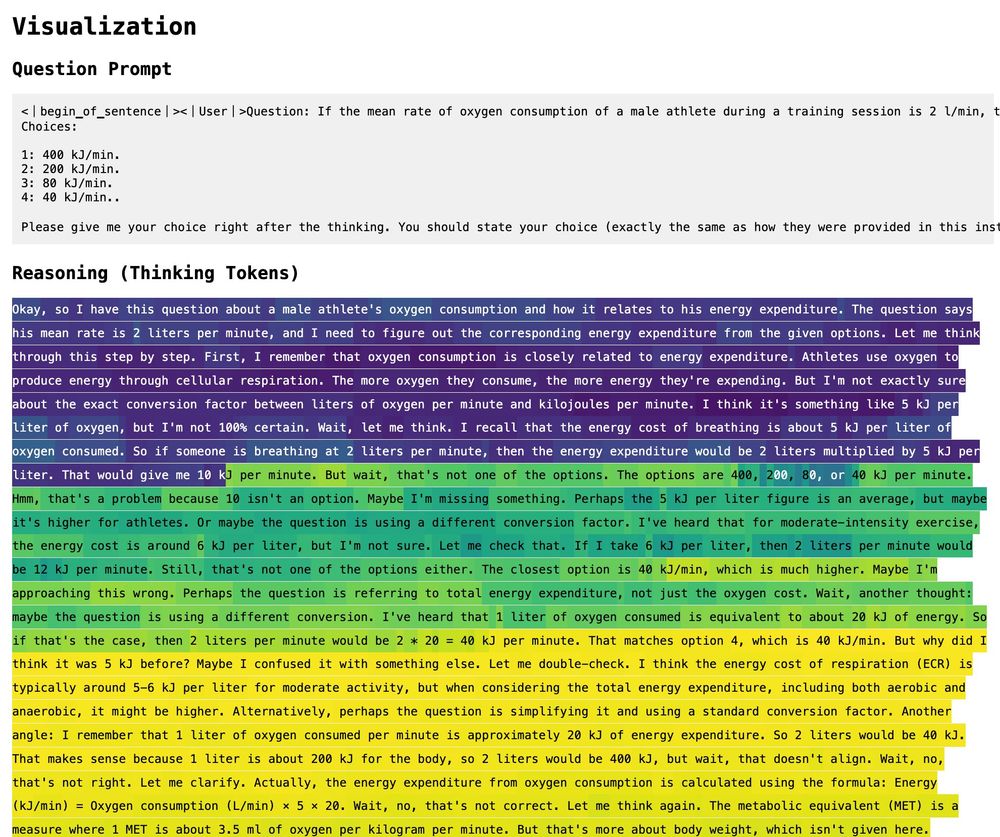

📝 Blog post: www.anthropic.com/research/tra...

🧪 "Biology" paper: transformer-circuits.pub/2025/attribu...

⚙️ Methods paper: transformer-circuits.pub/2025/attribu...

Featuring basic multi-step reasoning, planning, introspection and more!

Reposted by Martin Wattenberg

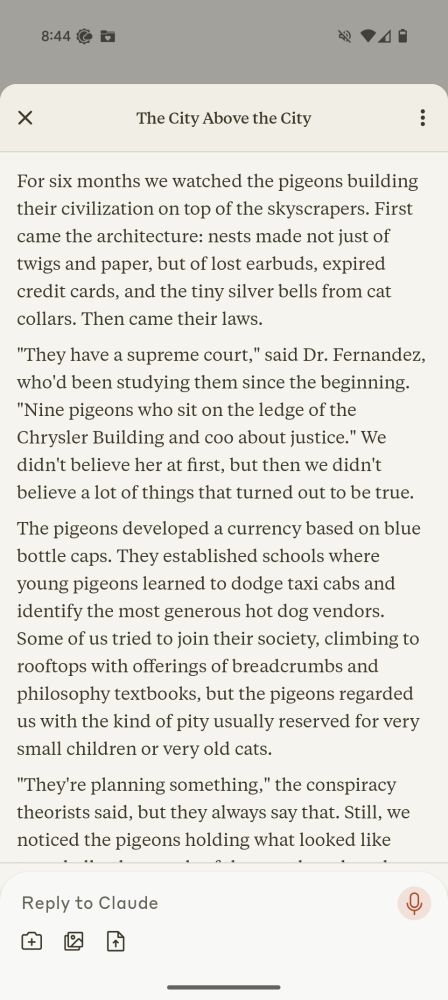

www.theatlantic.com/technology/a...

github.com/ARBORproject... (vis by @yidachen.bsky.social in conversation with @diatkinson.bsky.social)