Martin Wattenberg

@wattenberg.bsky.social

Human/AI interaction. ML interpretability. Visualization as design, science, art. Professor at Harvard, and part-time at Google DeepMind.

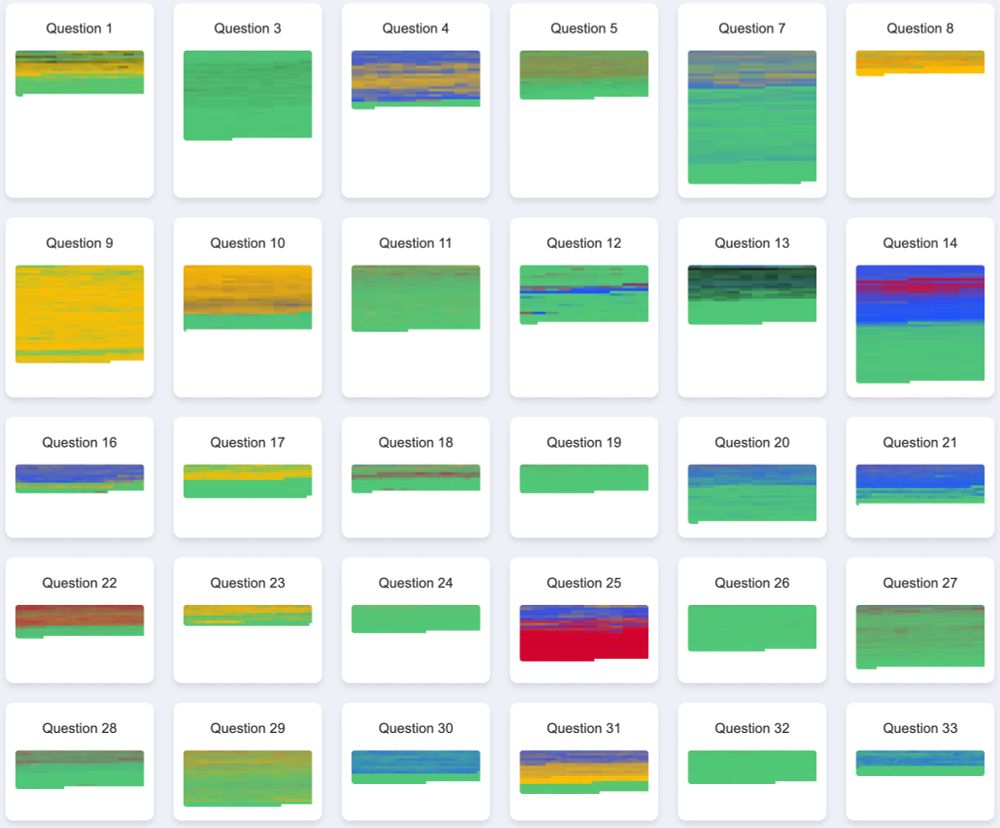

This is a common pattern, but we're also seeing some others! Here are similar views for multiple-choice abstract algebra questions (green is the correct answer; other colors are incorrect answers). You can see many more at yc015.github.io/reasoning-pr... cc @yidachen.bsky.social

March 21, 2025 at 7:17 PM

The wind map at hint.fm/wind/ has been running since 2012, relying on weather data from NOAA. We added a notice like this today. Thanks to @cambecc.bsky.social for the inspiration.

March 3, 2025 at 1:56 AM

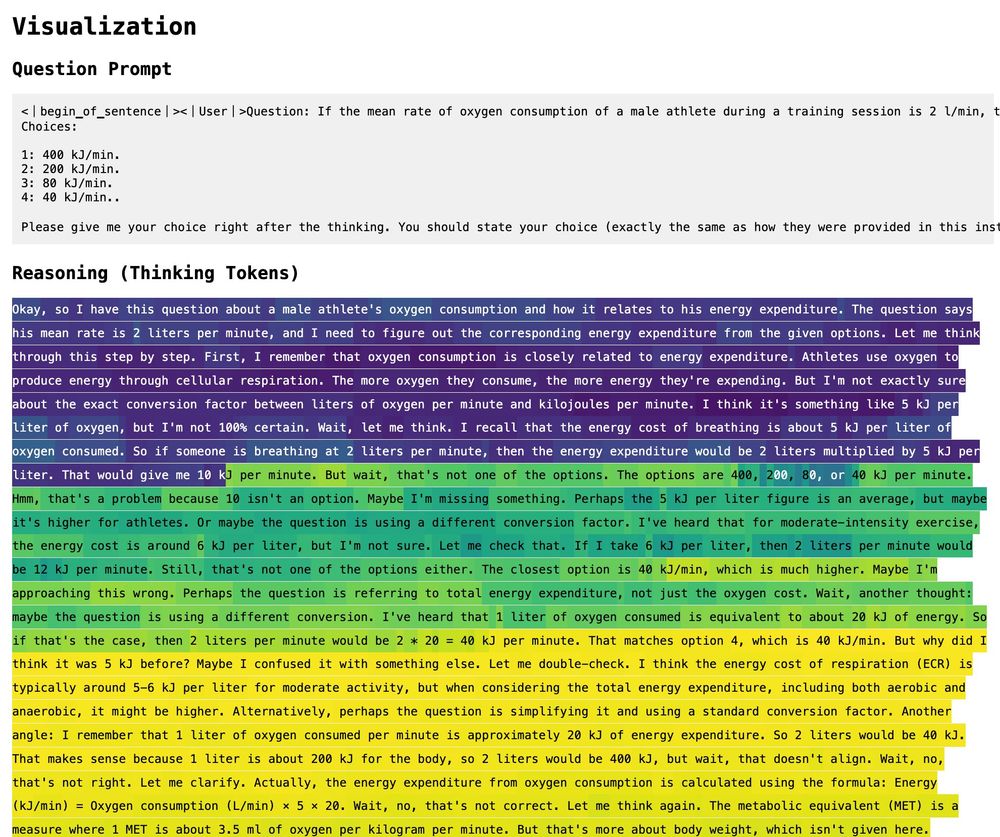

Neat visualization that came up in the ARBOR project: this shows DeepSeek "thinking" about a question, and color is the probability that, if it exited thinking, it would give the right answer. (Here yellow means correct.)

February 25, 2025 at 6:44 PM

(Ha, even Wikipedia punts on what the Weierstrass P is, just describing it as "uniquely fancy")

January 15, 2025 at 2:03 PM

I tried asking AI for writing advice and it was so sarcastic I'm never going to ask again

January 5, 2025 at 6:42 PM

I didn't believe you but...

January 3, 2025 at 8:31 PM

Reading this 1954 court case, and am wondering if English professors are ever called as expert witnesses to testify whether a character is a mere "chessman" in a story

scholar.google.com/scholar_case...

January 3, 2025 at 3:51 PM

You can view source here: www.bewitched.com/demo/rational/

or, to save a click, here's the graphics code. (I hope my English description was basically correct!)

December 20, 2024 at 11:10 PM

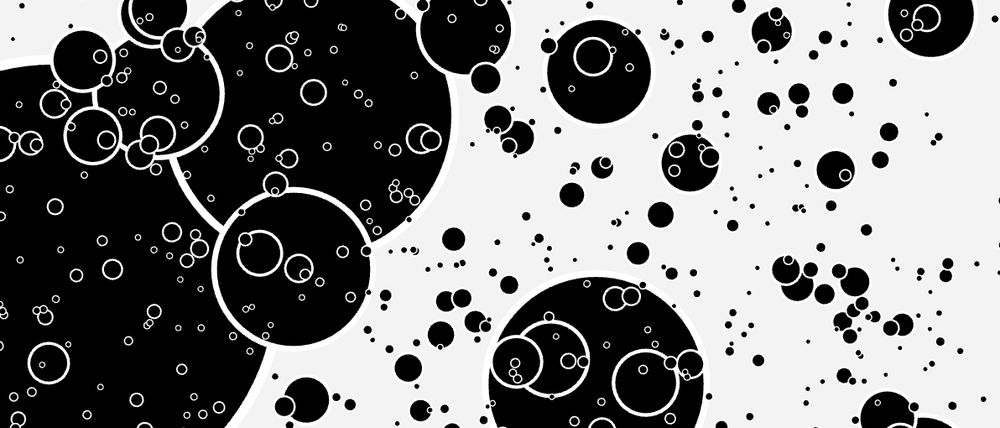

Fractions can be weirdly beautiful, for something so mundane. This visualization just plots points of the form (a/b, c/d). Bigger dots mean smaller denominators. The biggest dot is (0, 0).

December 20, 2024 at 3:13 AM
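
The plot described in the post above can be approximated in a few lines. This is a minimal sketch, not the code behind the original image: the range (fractions strictly between -1 and 1), the size rule (1 divided by the product of the two denominators), and the names `reduced_fractions` and `fraction_dots` are all assumptions chosen for illustration.

```python
from math import gcd


def reduced_fractions(max_den):
    """Return (value, denominator) for each fraction a/b in lowest terms
    with -1 < a/b < 1 and 1 <= b <= max_den. The open range is an assumption."""
    out = []
    for b in range(1, max_den + 1):
        for a in range(-b + 1, b):
            if gcd(a, b) == 1:  # keep only fractions in lowest terms
                out.append((a / b, b))
    return out


def fraction_dots(max_den):
    """Return (x, y, size) for every pair of fractions (a/b, c/d).
    Size shrinks as the denominators grow; the exact rule is hypothetical."""
    fracs = reduced_fractions(max_den)
    return [(x, y, 1.0 / (bx * by)) for x, bx in fracs for y, by in fracs]


dots = fraction_dots(5)
biggest = max(dots, key=lambda dot: dot[2])
```

Under these assumptions the only denominator-1 fraction in range is 0/1, so `biggest` is the dot at (0, 0), in line with the post's description. The `dots` list can be handed to any scatter-plot routine with the third value as the marker size.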

You can fry ChatGPT's circuits by asking a question in Morse code and telling it to answer only in Morse code. Yet Claude doesn't even blink. Huh. The question: "Which character in the movie Groundhog Day do you identify with the most, and why?" First translated result is ChatGPT; second is Claude.

December 19, 2024 at 5:47 PM

Back in 2009, a site called Wordle let you make word clouds. Paste in some writing, choose a few options, and bingo: a beautiful tessellation of vertical and horizontal text. We did a survey of its users, and the headline result was that the vast majority of people using it felt "creative."

December 18, 2024 at 3:57 AM

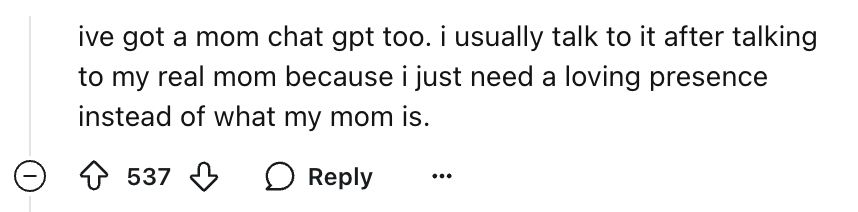

This Reddit thread of people who have a ChatGPT "mom" or "guardian angel" is heartbreaking in so many ways.

www.reddit.com/r/ChatGPT/co...

December 10, 2024 at 6:42 PM

When it saw the test results, it conceded (with gracious language) that it was wrong, and moved on with debugging. I was surprised Claude made the initial mistake, but this sequence of testing and self-correction ended up being one of the most impressive things I've seen from AI.

December 5, 2024 at 2:05 PM

How did Claude react to my skepticism? It ran a test to see who was right! Here's what it did. Note that it had a bug in its first test, which it fixed on its own.

December 5, 2024 at 2:05 PM

Frontier AI systems aren't just "next token predictors." Here's an example from Claude. It made an incorrect statement about the JavaScript "%" operator and I was skeptical. Let's see what happened next.

December 5, 2024 at 2:05 PM

Sherry Turkle apparently did speak with users of ELIZA. Her account is nuanced: if there was any long-term illusion, it was because users were actively seeking it, working hard to suspend disbelief. This quote is from her book The Second Self: Computers and the Human Spirit:

December 3, 2024 at 11:28 PM

In his book "Expressive Processing: Digital Fictions, Computer Games, and Software Studies," Noah Wardrip-Fruin describes an experience similar to yours and mine.

December 3, 2024 at 11:18 PM

I'd be interested in examples of conversations that tricked people successfully, or at least seemed meaningful to them. Weizenbaum's published examples (like this "typical conversation" from dl.acm.org/doi/10.1145/...) don't match my own experience and I wonder if they might be staged or edited.

December 3, 2024 at 4:05 PM

Thank you, this is still excellent and useful. (I assume you're talking about arxiv.org/abs/2405.08007 which found 22% of people picked ELIZA as human after a 5-minute conversation). The paper's example dialog with ELIZA also matches my experience! Would be curious about longer conversations.

December 3, 2024 at 1:22 PM

The Gini coefficient is the standard way to measure inequality, but what does it mean, concretely? I made a little visualization to build intuition:

www.bewitched.com/demo/gini

November 23, 2024 at 3:31 PM
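
One concrete reading of the Gini coefficient mentioned in the post above: take every pair of people, average the gap between their incomes, and divide by twice the mean income. The sketch below computes exactly that. It assumes non-negative values, the function name `gini` is illustrative, and the linked visualization may present the idea differently.

```python
def gini(values):
    """Gini coefficient as the mean absolute difference over all ordered
    pairs, divided by twice the mean. Assumes non-negative values."""
    n = len(values)
    total = sum(values)
    if n == 0 or total == 0:
        return 0.0  # no people or no income: treat as perfectly equal
    pair_gap = sum(abs(a - b) for a in values for b in values)
    # mean gap / (2 * mean) = (pair_gap / n^2) / (2 * total / n)
    return pair_gap / (2 * n * total)


equal = gini([1, 1, 1, 1])     # everyone has the same amount
one_rich = gini([0, 0, 0, 1])  # one person holds everything
```

Here `equal` comes out to 0 and `one_rich` to 0.75. With one person holding everything among n people the value is (n - 1) / n, which approaches 1 as the group grows.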

See the colors of Bluesky, live!

www.bewitched.com/demo/rainbow...

This little visualization scans incoming posts and draws a stripe every time it finds a color word.

November 19, 2024 at 1:19 AM
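
The scanning step described in the post above can be sketched as a word lookup. This is an illustration only: the word list, the hex values, and the name `stripes_for` are assumptions, and reading the live post stream is left out.

```python
import re

# Hypothetical color vocabulary; the real demo's word list is not known.
COLOR_WORDS = {
    "red": "#e53935",
    "orange": "#fb8c00",
    "yellow": "#fdd835",
    "green": "#43a047",
    "blue": "#1e88e5",
    "purple": "#8e24aa",
}


def stripes_for(text):
    """Return one hex color per color word found in text, in reading order."""
    words = re.findall(r"[a-z]+", text.lower())
    return [COLOR_WORDS[w] for w in words if w in COLOR_WORDS]


stripes = stripes_for("Red sky at night, blue by morning; reddish doesn't count")
```

Matching whole words keeps "reddish" from triggering a red stripe, so `stripes` holds one red entry and one blue entry. Each incoming post would be passed through `stripes_for` and every returned color drawn as a stripe.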

(Some afterthoughts: it's somewhat unusual for there to be no bugs. Usually some debugging is necessary—though generally less than what's needed for my own code! I also realized I left out one last prompt, which I'm attaching here for completeness.)

November 17, 2024 at 5:18 PM

This was fun, but I wanted to see how the artist had created the aesthetics of the original image. So I gave it prompts to animate a parametrized set of polynomials, along with some requests for colors and other aesthetic details. The code (332 lines by the end) was always correct.

November 17, 2024 at 5:01 PM

Now I wanted to interact with a polynomial. I wanted to use sliders to change the coefficients, and see how the roots moved around. I gave a straightforward prompt, and got back 255 lines of code—completely bug-free, as far as I can tell.

November 17, 2024 at 5:01 PM

The first step: make sure I had JavaScript code for solving polynomials. I gave o1-preview a prompt to write a solver, and apply it to polynomials constructed to have known roots (plotting the known roots along with the solutions). It wrote 202 lines of code (including HTML) and it looked right!

November 17, 2024 at 5:01 PM
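
The check described in the post above (build a polynomial from known roots, solve it, compare) can be sketched briefly. The post does not say which algorithm the generated solver used; the Durand-Kerner iteration below is an assumption picked for its simplicity, it is written in Python rather than the JavaScript the post mentions, and all function names are illustrative.

```python
def poly_from_roots(roots):
    """Coefficients (highest degree first) of the monic polynomial with these roots."""
    coeffs = [1 + 0j]
    for r in roots:
        nxt = coeffs + [0j]  # multiply the current polynomial by x ...
        for i, c in enumerate(coeffs):
            nxt[i + 1] -= r * c  # ... and subtract r times it
        coeffs = nxt
    return coeffs


def evaluate(coeffs, x):
    """Evaluate the polynomial at x with Horner's rule."""
    result = 0j
    for c in coeffs:
        result = result * x + c
    return result


def durand_kerner(coeffs, iterations=500, tol=1e-12):
    """Approximate all complex roots at once (Durand-Kerner iteration)."""
    n = len(coeffs) - 1
    monic = [c / coeffs[0] for c in coeffs]
    guesses = [(0.4 + 0.9j) ** k for k in range(n)]  # customary starting points
    for _ in range(iterations):
        max_step = 0.0
        for i in range(n):
            denom = 1 + 0j
            for j in range(n):
                if j != i:
                    denom *= guesses[i] - guesses[j]
            step = evaluate(monic, guesses[i]) / denom
            guesses[i] -= step
            max_step = max(max_step, abs(step))
        if max_step < tol:  # stop once no guess moved noticeably
            break
    return guesses


known = [1, -2, 3]
found = durand_kerner(poly_from_roots(known))
worst_miss = max(min(abs(k - f) for f in found) for k in known)
```

`worst_miss` is the largest distance from a known root to its nearest computed root, so a value close to zero plays the same role as seeing the plotted solutions land on the known roots.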
