Language Understanding and Reasoning Lab

Stony Brook University

- I've completed my PhD at @unccs.bsky.social! 🎓

- Starting Fall 2026, I'll be joining the CS dept. at Johns Hopkins University @jhucompsci.bsky.social as an Assistant Professor 💙

- Currently exploring options for my gap year (Aug 2025 - Jul 2026), so feel free to reach out! 🔎

@niranjanb.bsky.social @ajayp95.bsky.social

arXiv link hopefully coming soon!

@utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

Make sure to apply for your PhD with him -- he is an amazing advisor and person! 💙

Our work (REL-A.I.) introduces an evaluation framework that measures human reliance on LLMs and reveals how contextual features like anthropomorphism, subject, and user history can significantly influence user reliance behaviors.

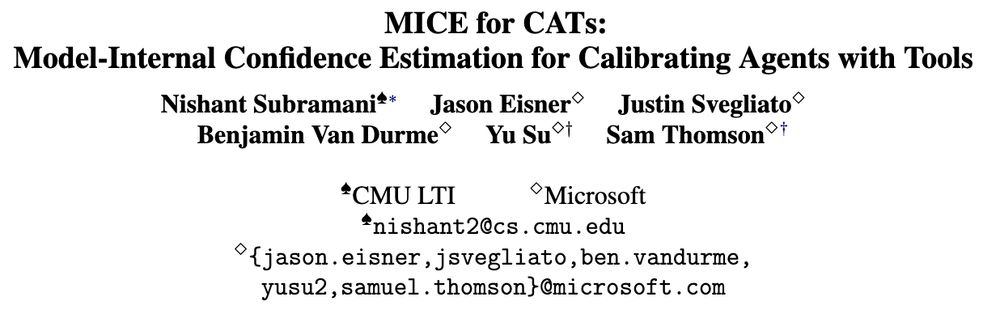

This was work done at @msftresearch.bsky.social last summer with Jason Eisner, Justin Svegliato, Ben Van Durme, Yu Su, and Sam Thomson.

1/🧵

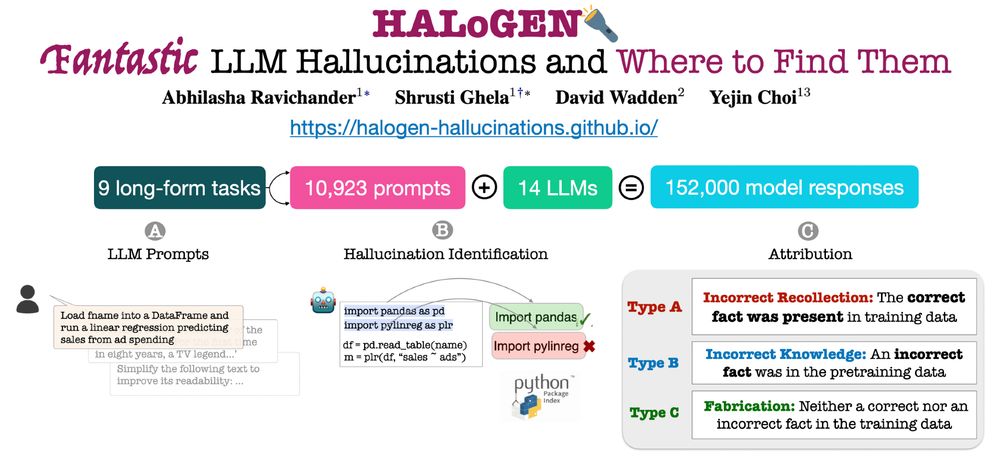

New work w/ Shrusti Ghela*, David Wadden, and Yejin Choi 💫

📝 Paper: arxiv.org/abs/2501.08292

🚀 Code/Data: github.com/AbhilashaRav...

🌐 Website: halogen-hallucinations.github.io 🧵 [1/n]

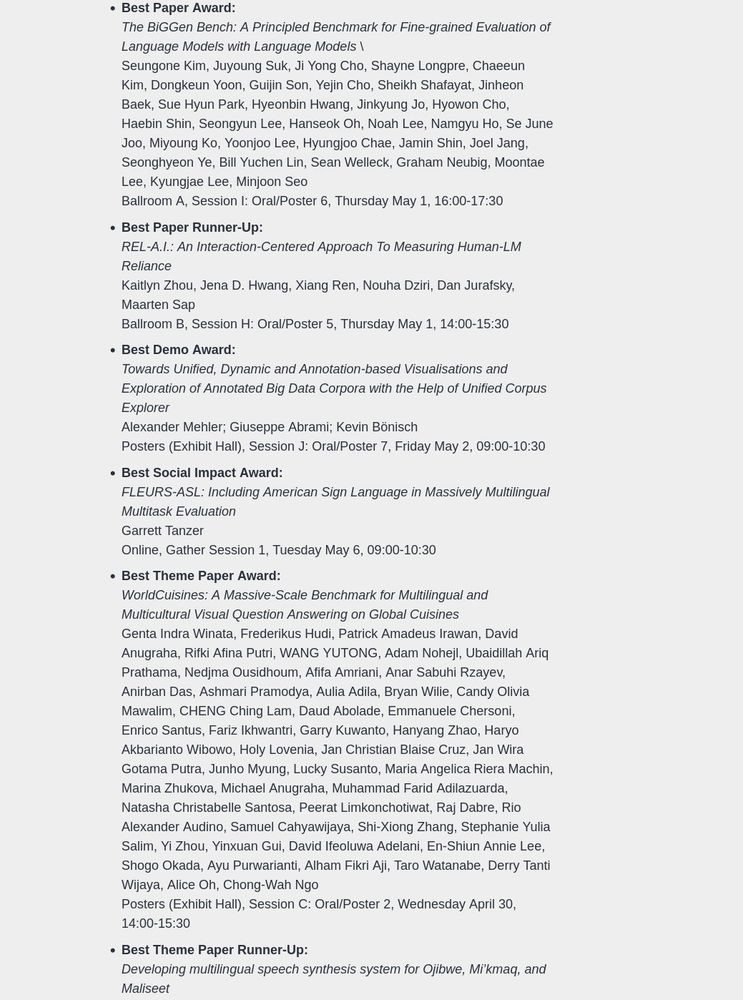

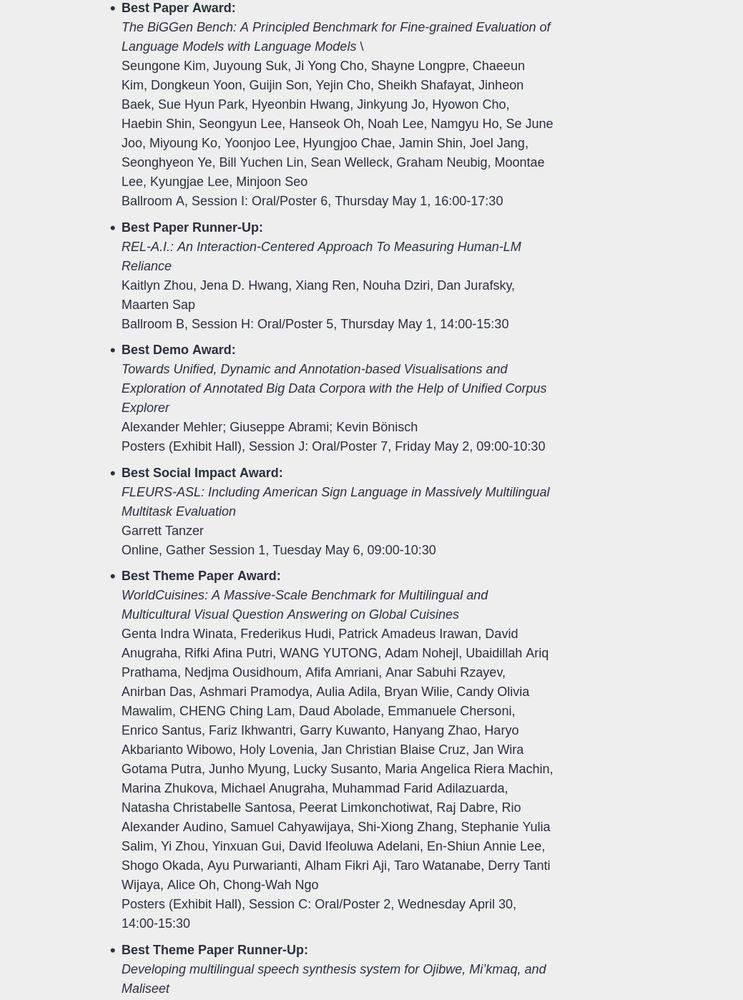

The Best Paper and Best Theme Paper winners will present at our closing session

2025.naacl.org/blog/best-pa...

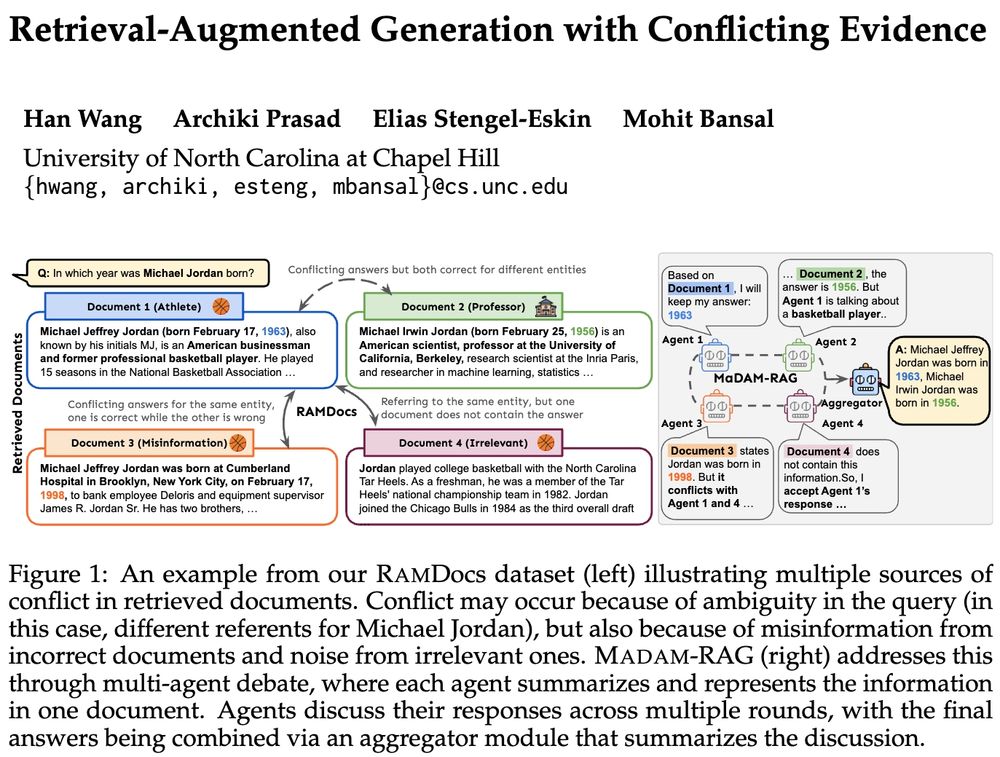

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

🎯 We demonstrate that ranking-based discriminator training can significantly reduce this gap, and improvements on one task often generalize to others!

🧵👇

Introducing OLMoTrace, a new feature in the Ai2 Playground that begins to shed some light. 🔦

See you in October in Montreal!

Students do different research, go on the job market, and recruit other students. Ping me and I'll add you!

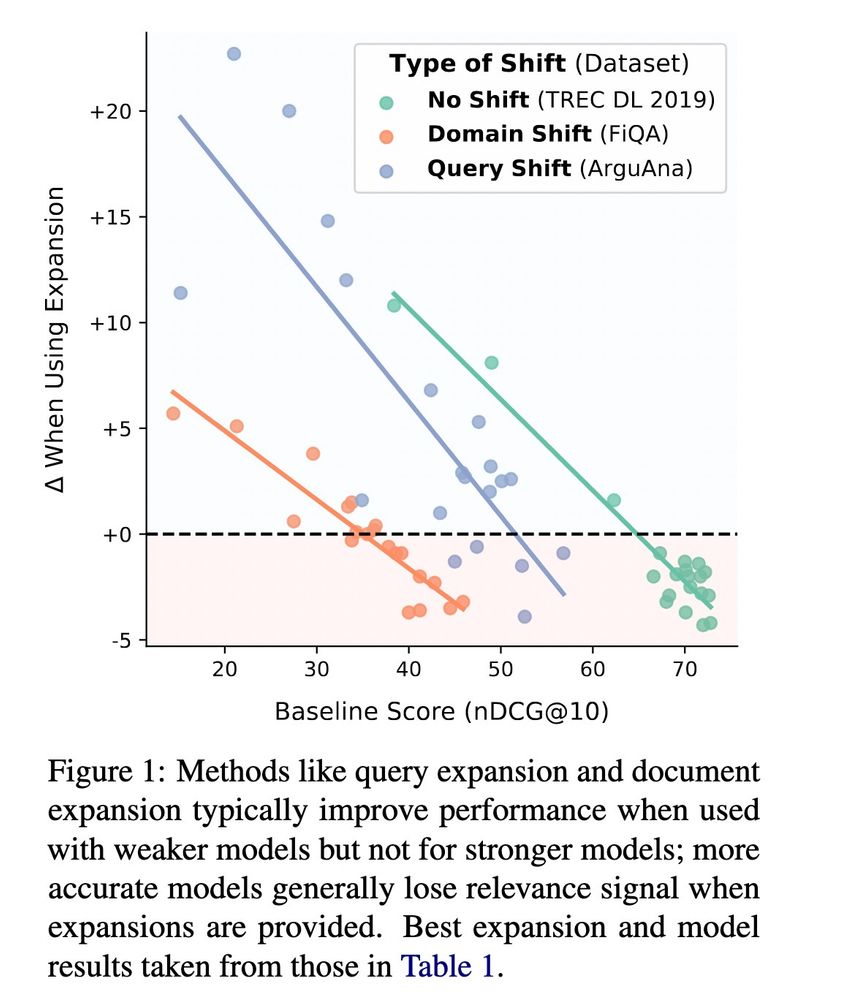

But do these approaches work for all IR models and for different types of distribution shifts? Turns out it's actually more 📉 🚨

📝 (arxiv soon): orionweller.github.io/assets/pdf/L...

❓ What would you like to be included?

🔌 Self-plugs are welcome!!

x.com/harsh3vedi/s...
