Previously postdoc at UW and AI2, working on Natural Language Processing

Recruiting PhD students!

🌐 https://lasharavichander.github.io/

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

@microsoft.com (will forever miss this team) and moved to Vancouver, Canada, where I'm starting my lab as an assistant professor at the gorgeous @sfu.ca 🏔️

I'm looking to hire 1-2 students starting in Fall 2026. Details in 🧵

Noam joined us from the Hebrew University of Jerusalem after five years as a journalist! Looking forward to working together and learning from her unique perspective🤗

@lasha.bsky.social on factuality and nuanced forms of misinformation.

Cheers from Germany!

Topics include, but aren’t limited to:

🔎Linguistic Interpretability

🌍Multilingual Evaluation

📖Computational Typology

Please share!

#NLProc #NLP

While we've all been worrying about tokenizers, lurking in the background has been the preprocessing *before* tokenization. Poems break standard HTML-to-text linearization systems, and we find that multimodal models aren't a solution.

KSoC: utah.peopleadmin.com/postings/190... (AI broadly)

Education + AI:

- utah.peopleadmin.com/postings/189...

- utah.peopleadmin.com/postings/190...

Computer Vision:

- utah.peopleadmin.com/postings/183...

I'm excited to share our preprint answering these questions:

"Epistemic Diversity and Knowledge Collapse in Large Language Models"

📄Paper: arxiv.org/pdf/2510.04226

💻Code: github.com/dwright37/ll...

1/10

I'm still so proud of our work (led by @lasha.bsky.social) on CondaQA, so we had to ask what would happen if we tried to create high-quality reasoning-over-text benchmarks now that LLMs are available. Turns out, we'd make an easier benchmark!

📍Findings Session 1 - Hall C

📅 Wed, November 5, 13:00 - 14:00

arxiv.org/abs/2505.22830

(And if you’re at COLM, come hear about it on Tuesday – sessions Spotlight 2 & Poster 2!)

1. Examples of statements of purpose (SOPs) for computer science PhD programs: cs-sop.org [1/4]

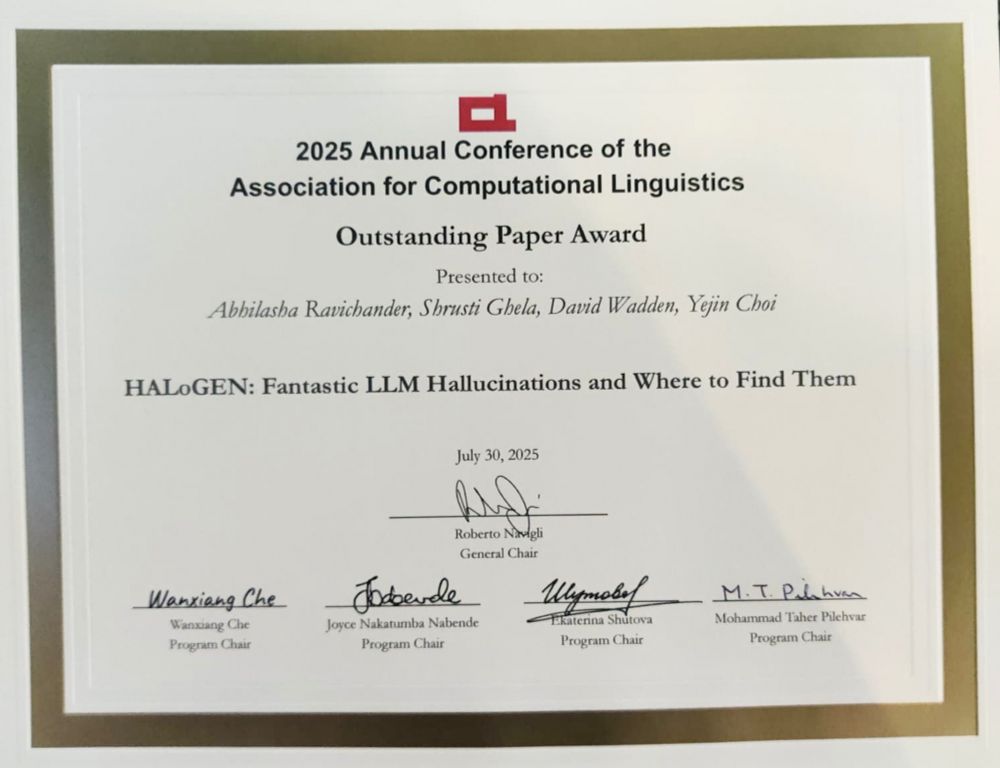

#ACL #LLMs #Hallucination #WiAIR #WomenInAI

Copenhagen friends, I'm here for a couple more days! Please stop by P1 to say bye 🥺

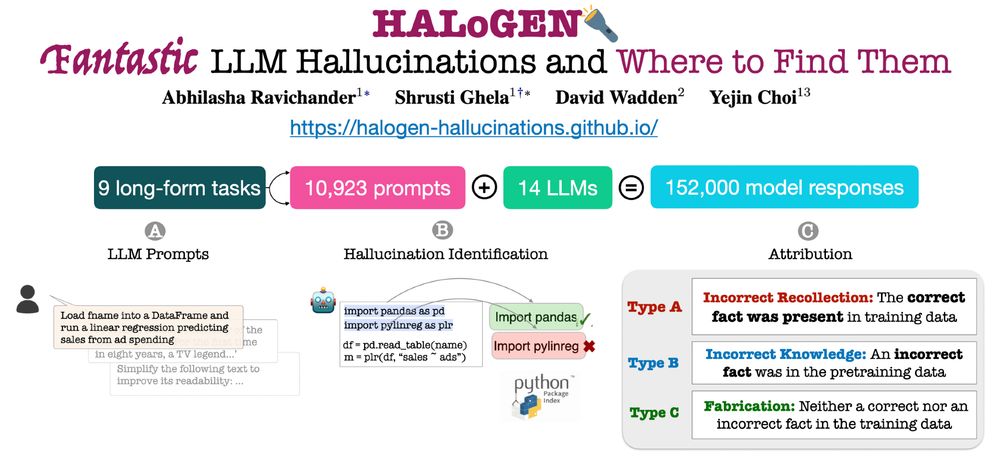

We talk to @lasha.bsky.social about LLM Hallucination, her award-winning HALoGEN benchmark, and how we can better evaluate hallucinations in language models.

👇 What’s inside:

1/

Joint work w/ Shrusti Ghela*, David Wadden, and Yejin Choi

bsky.app/profile/lash...

New work w/ Shrusti Ghela*, David Wadden, and Yejin Choi 💫

📝 Paper: arxiv.org/abs/2501.08292

🚀 Code/Data: github.com/AbhilashaRav...

🌐 Website: halogen-hallucinations.github.io 🧵 [1/n]

Please spread the word that I'm recruiting prospective PhD students: lucy3.notion.site/for-prospect...

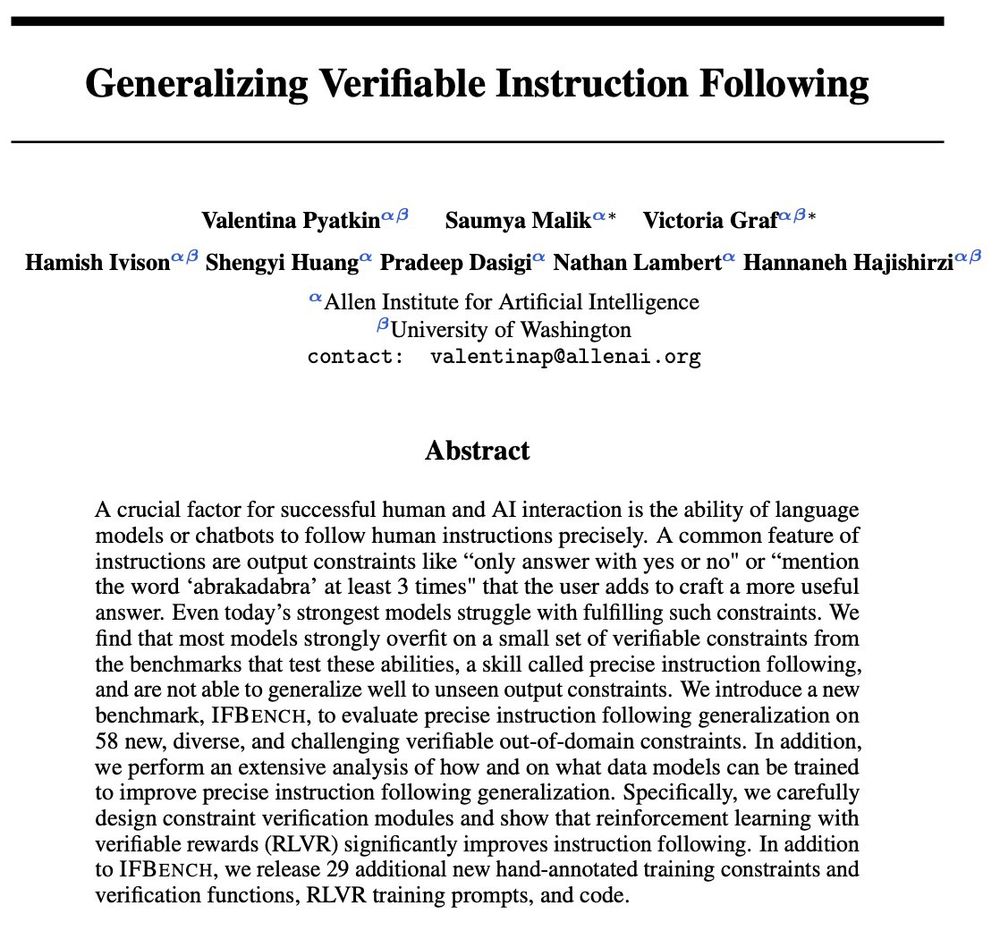

But the set of constraints and verifier functions is limited and most models overfit on IFEval.

We introduce IFBench to measure model generalization to unseen constraints.

(arxiv.org/abs/2505.22830)

I'm happy to announce that the preprint release of my first project is online! Developed with the amazing support of @lasha.bsky.social & @anamarasovic.bsky.social
