Postdoc at UW, working on Natural Language Processing

Recruiting PhD students!

🌐 https://lasharavichander.github.io/

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

If a recovered token is unpredictable from context, the remaining mechanism must be memorization. (2/5)

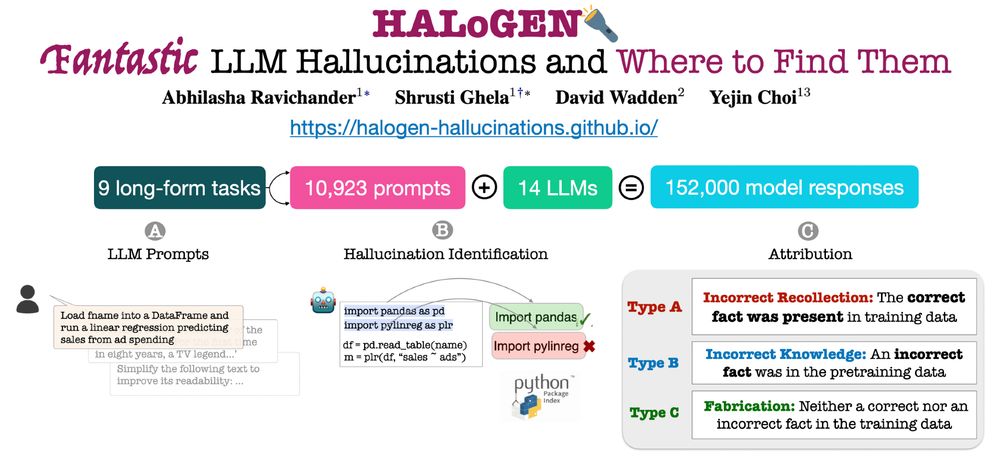

New work w/ Shrusti Ghela*, David Wadden, and Yejin Choi 💫

📝 Paper: arxiv.org/abs/2501.08292

🚀 Code/Data: github.com/AbhilashaRav...

🌐 Website: halogen-hallucinations.github.io 🧵 [1/n]

✍️ 10,923 prompts across 9 different long-form tasks

🧐 Automatic verifiers that break down AI model responses into individual facts, and check each fact against a reliable knowledge source [2/n]
