🌐 https://valentinapy.github.io

I am particularly excited about Olmo 3 models' precise instruction following abilities and their good generalization performance on IFBench!

Lucky to have been a part of the Olmo journey for three iterations already.

Best fully open 32B reasoning model & best 32B base model. 🧵

IssueBench, our attempt to fix this, is accepted at TACL, and I will be at #EMNLP2025 next week to talk about it!

New results 🧵

We just released IssueBench – the largest, most realistic benchmark of its kind – to answer this question more robustly than ever before.

Long 🧵 with spicy results 👇

Join if you are in Zurich and interested in hearing about IFBench and our latest Olmo and Tülu works at @ai2.bsky.social

We investigate if LMs capture these inferences from connectives when they cannot rely on world knowledge.

New paper w/ Daniel, Will, @jessyjli.bsky.social

@geomblog.bsky.social

) on "Testing LLMs in a sandbox isn't responsible. Focusing on community use and needs is."

Hope to see you there!

See you in San Diego! 🥳

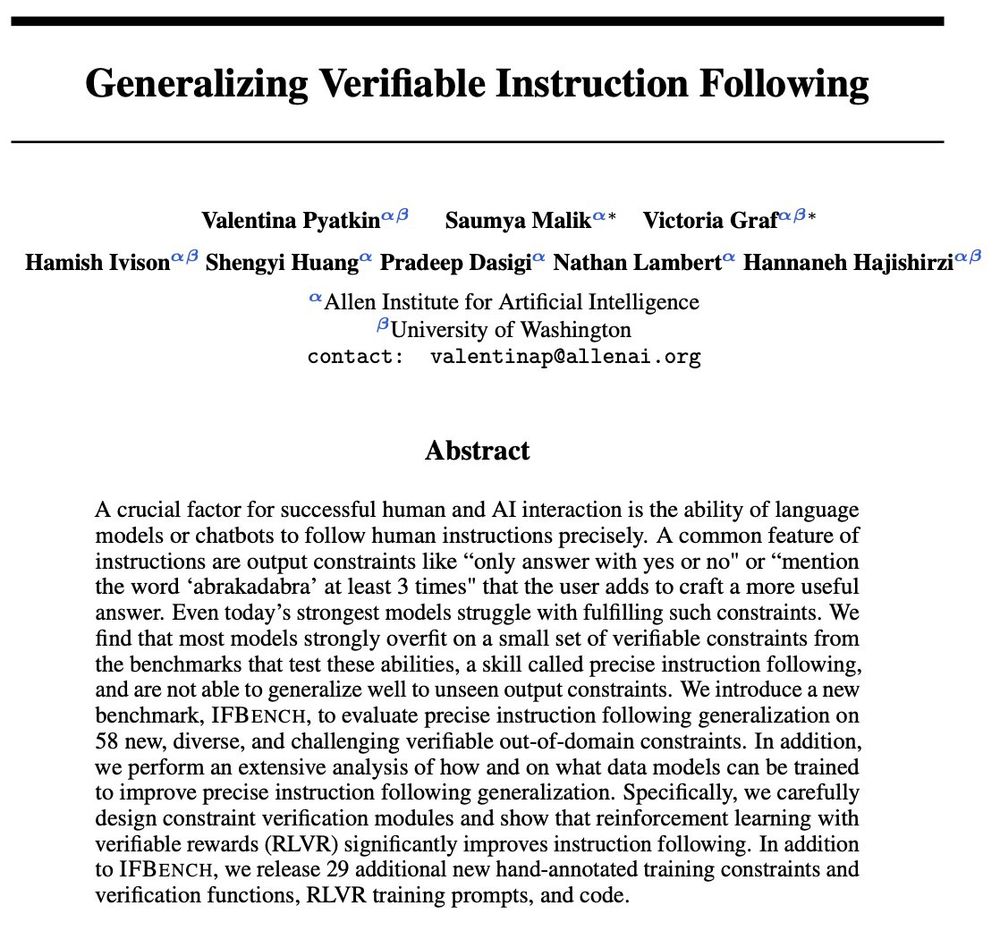

But the set of constraints and verifier functions is limited and most models overfit on IFEval.

We introduce IFBench to measure model generalization to unseen constraints.

On Sept 17, the #WiAIRpodcast speaks with @valentinapy.bsky.social (@ai2.bsky.social & University of Washington) about open science, post-training, mentorship, and visibility

#WiAIR #NLProc

@COLM_conf

we are soliciting opinion abstracts to encourage new perspectives and opinions on responsible language modeling, 1-2 of which will be selected to be presented at the workshop.

Please use the google form below to submit your opinion abstract ⬇️

@pietrolesci.bsky.social who did a fantastic job!

#ACL2025

Would love to chat about all things pragmatics 🧠, redefining "helpfulness"🤔 and enabling better cross-cultural capabilities 🗺️ 🫶

Presenting our work on culturally offensive nonverbal gestures 👇

🕛Wed @ Poster Session 4

📍Hall 4/5, 11:00-12:30

🤞means luck in US but deeply offensive in Vietnam 🚨

📣 We introduce MC-SIGNS, a test bed to evaluate how LLMs/VLMs/T2I handle such nonverbal behavior!

📜: arxiv.org/abs/2502.17710

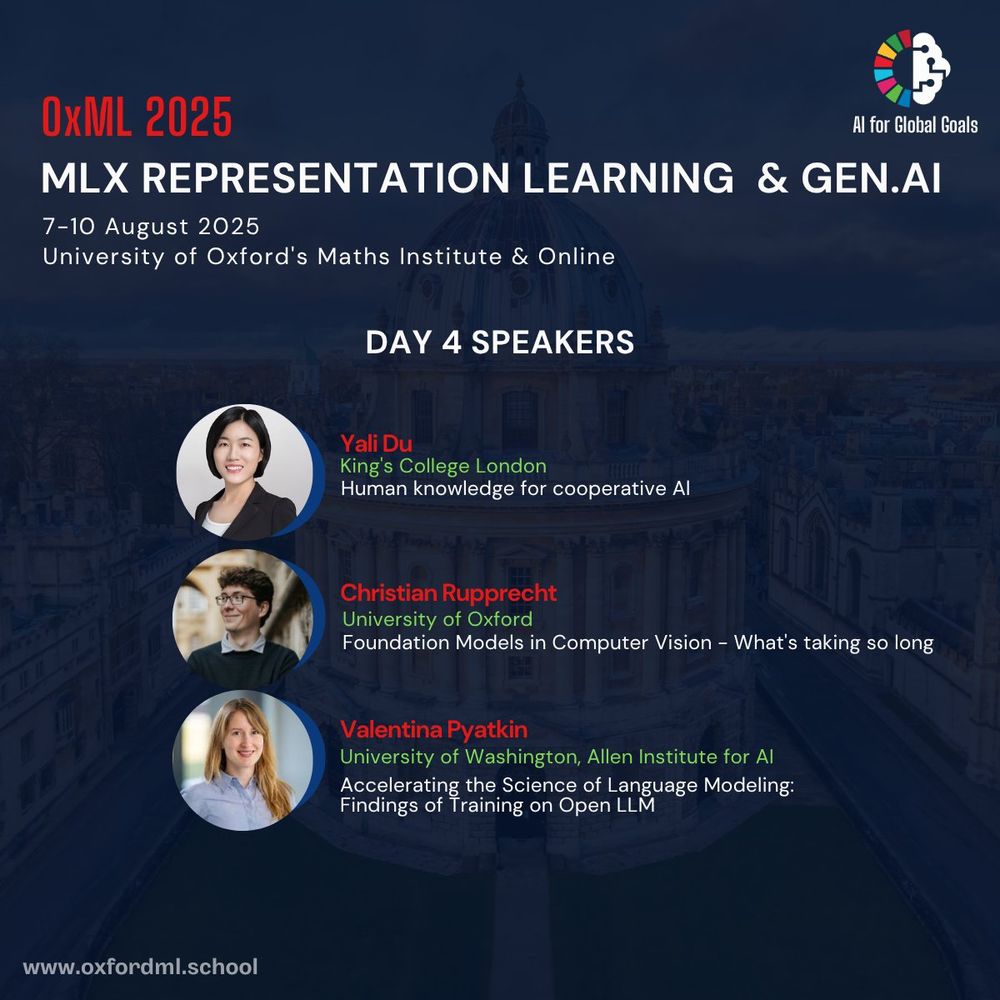

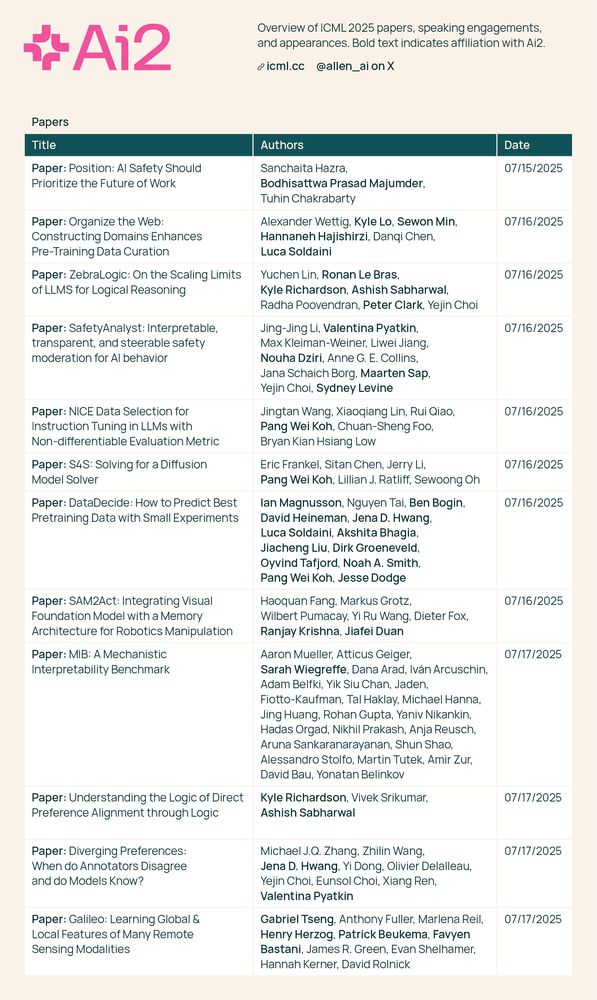

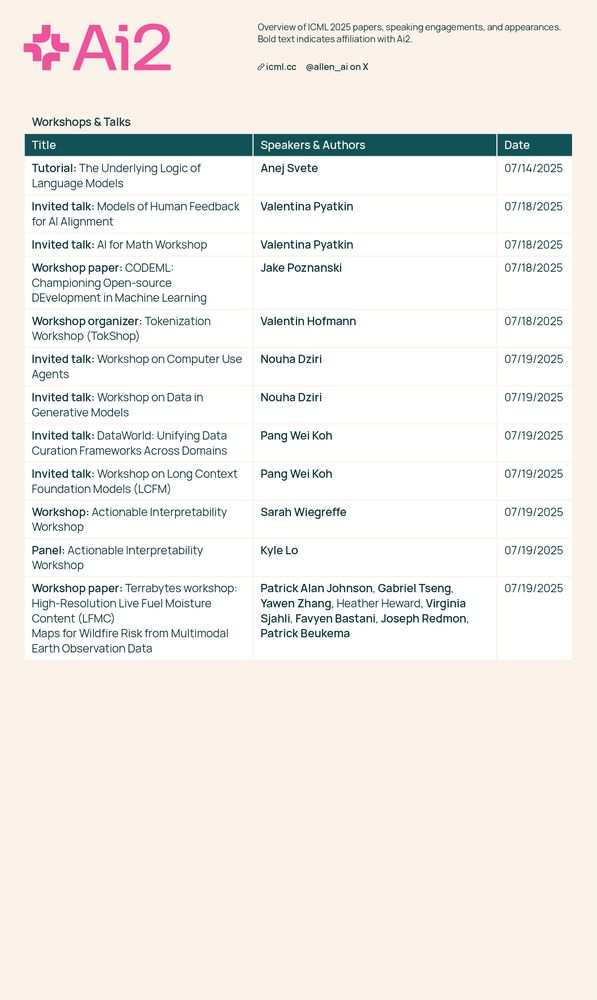

You can find me at the following:

- giving an invited talk at the "Models of Human Feedback for AI Alignment" workshop

- giving an invited talk at the "AI for Math" workshop

I'll also present these two papers ⤵️

Wild gap from o3 > Gemini 2.5 Pro of like 30 points.
