🌐 https://valentinapy.github.io

Join if you are in Zurich and interested in hearing about IFBench and our latest Olmo and Tülu work at @ai2.bsky.social

@geomblog.bsky.social

) on "Testing LLMs in a sandbox isn't responsible. Focusing on community use and needs is."

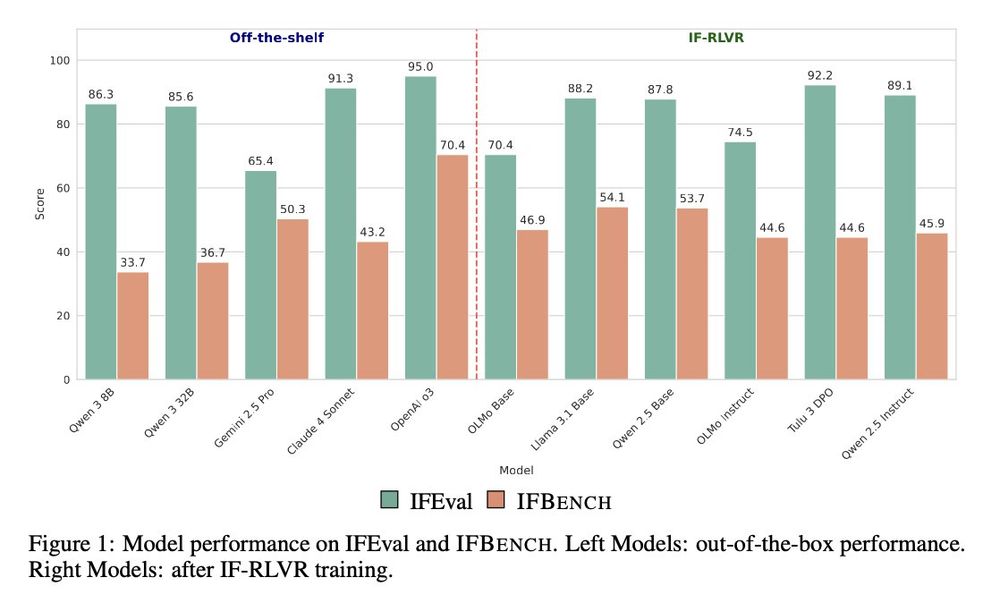

We find that IF-RLVR generalization works best on base models and when you train on multiple constraints per instruction!

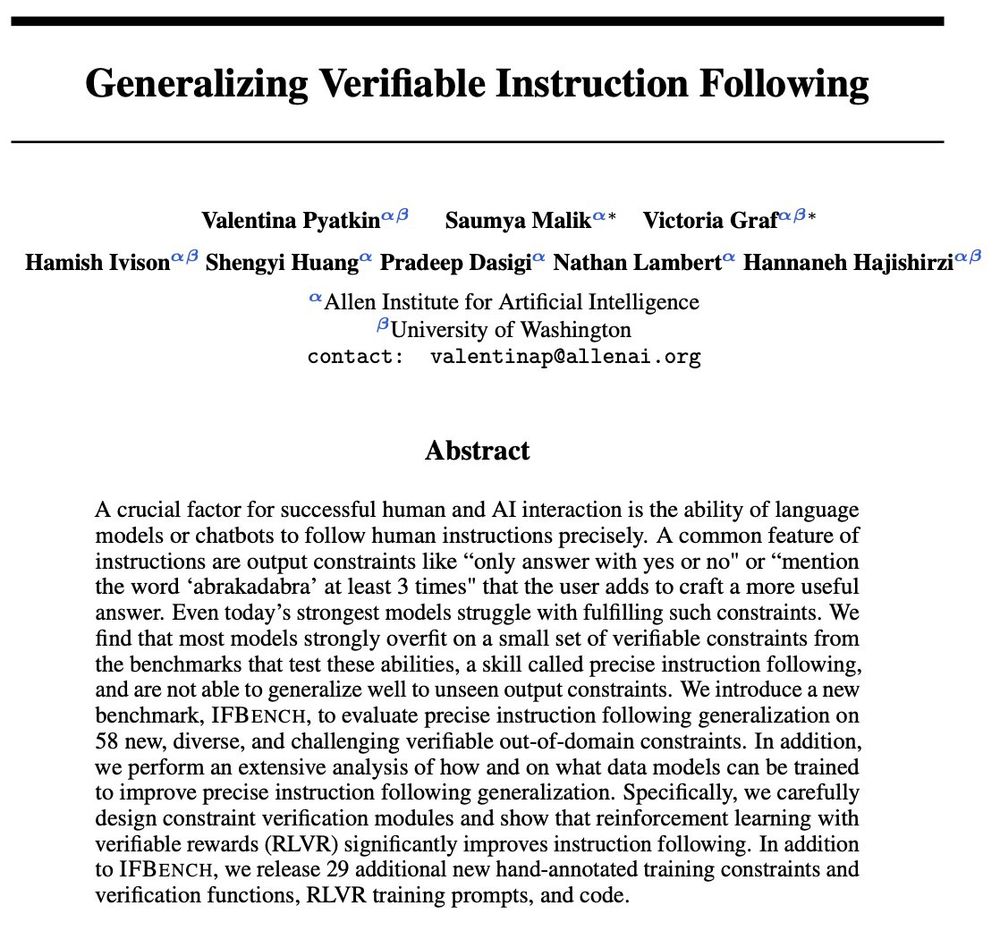

But the set of constraints and verifier functions is limited and most models overfit on IFEval.

We introduce IFBench to measure model generalization to unseen constraints.
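
To make "constraints and verifier functions" concrete, here is a minimal sketch of how several checkable constraints on one instruction could be turned into a reward. The constraint names (`max_words`, `contains_keyword`, `min_bullets`) and the fraction-satisfied scoring are illustrative assumptions, not the IFEval or IFBench implementation.

```python
import re
from typing import Callable, List

# A verifier takes a model response and returns True if the constraint holds.
Verifier = Callable[[str], bool]


def max_words(limit: int) -> Verifier:
    """Hypothetical constraint: the response has at most `limit` words."""
    return lambda response: len(response.split()) <= limit


def contains_keyword(keyword: str) -> Verifier:
    """Hypothetical constraint: the response mentions `keyword` (case-insensitive)."""
    return lambda response: keyword.lower() in response.lower()


def min_bullets(count: int) -> Verifier:
    """Hypothetical constraint: at least `count` lines start with '-' or '*'."""
    pattern = re.compile(r"^\s*[-*] ", re.MULTILINE)
    return lambda response: len(pattern.findall(response)) >= count


def verifiable_reward(response: str, verifiers: List[Verifier]) -> float:
    """Fraction of constraints satisfied (an assumed scoring rule)."""
    if not verifiers:
        return 0.0
    passed = sum(1 for verify in verifiers if verify(response))
    return passed / len(verifiers)


# One instruction carrying multiple constraints at once.
constraints = [max_words(20), contains_keyword("olmo"), min_bullets(3)]
response = "- Olmo is open\n- Tulu is a recipe"
reward = verifiable_reward(response, constraints)
```

Here the response meets the word limit and keyword constraints but has only two bullets, so two of the three checks pass. A stricter variant would give reward only when every constraint is satisfied.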

🤖 ML track: algorithms, math, computation

📚 Socio-technical track: policy, ethics, human participant research

@colmweb.org

SoLaR is a collaborative forum for researchers working on responsible development, deployment and use of language models.

We welcome both technical and sociotechnical submissions, deadline July 5th!

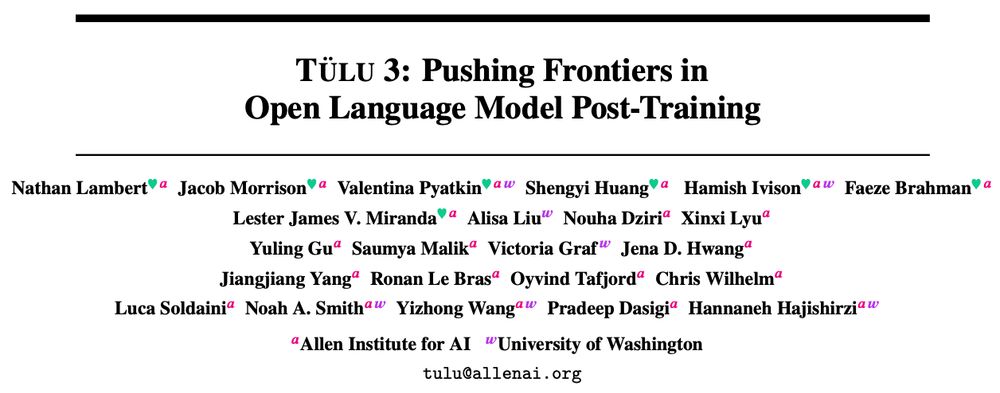

Some of my personal highlights:

💡 We significantly scaled up our preference data!

💡 RL with Verifiable Rewards to improve targeted skills like math and precise instruction following

💡 evaluation toolkit for post-training (including new unseen evals!)

Come join us on Saturday, Dec. 14th in Vancouver

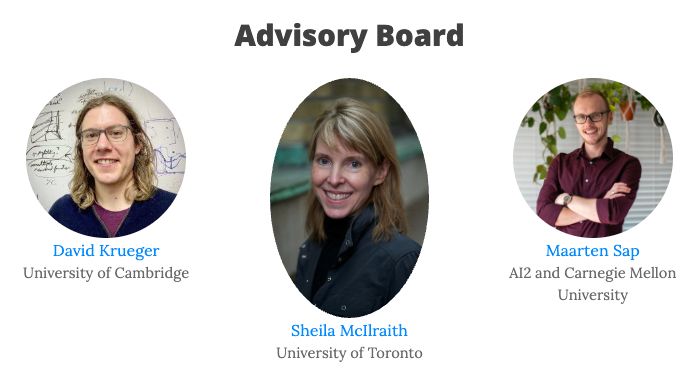

@peterhenderson.bsky.social, Been Kim, @zicokolter.bsky.social, Rida Qadri, Hannah Rose Kirk

We show these models can be applied to improve evaluations by identifying divisive examples in LLM-as-Judge benchmarks like WildBench.

[5/6]

For instance, LLM-judges prefer complying responses when annotators disagree on whether refusing is appropriate.

[4/6]

We find reward models fail to distinguish between diverging and high-agreement preferences, decisively identifying a preferred response even when annotators disagree.

[3/6]
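
As a rough illustration of the failure described above, the sketch below flags examples where annotators are split but a reward model still assigns a large score gap. The vote format (`"A"`/`"B"` labels), the function names, and both thresholds are assumptions made for this example; this is not the method from the preprint.

```python
from typing import List


def agreement(votes: List[str]) -> float:
    """Fraction of annotators who picked the majority response ('A' or 'B')."""
    if not votes:
        raise ValueError("need at least one annotator vote")
    majority = max(votes.count("A"), votes.count("B"))
    return majority / len(votes)


def overconfident_on_disagreement(
    votes: List[str],
    reward_a: float,
    reward_b: float,
    agreement_threshold: float = 0.7,  # assumed cutoff for "diverging"
    margin_threshold: float = 1.0,     # assumed cutoff for "decisive"
) -> bool:
    """True when annotators diverge but the reward gap is still large."""
    diverging = agreement(votes) < agreement_threshold
    decisive = abs(reward_a - reward_b) >= margin_threshold
    return diverging and decisive


# Annotators split 2-2, yet the (hypothetical) reward scores differ by 2.5.
flagged = overconfident_on_disagreement(["A", "B", "A", "B"], 3.0, 0.5)
```

Examples flagged this way are candidates for the kind of divisive items one might want to treat separately in an evaluation set.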

Michael explored these questions in a new ✨preprint✨ from his @ai2.bsky.social internship with me!

Our new work "Promptly Predicting Structures: The Return of Inference" shows that they do not, and we show how to fix it.

Paper: arxiv.org/abs/2401.06877

Code: github.com/utahnlp/prom...
