https://yanaiela.github.io/

Submit your big picture ideas, consolidation work, PhD thesis distillations, etc. by March 5th!

www.bigpictureworkshop.com

w/ Allyson Ettinger, @norakassner.bsky.social, @sebruder.bsky.social

Applicant: I have written 3 papers

Interviewer: where?

Applicant: arXiv

Interviewer: hired!

Nearly 50% of Computers & Society papers might be censored, vs 3% of Computer Vision ‼️

@arxiv.bsky.social has recently decided to prohibit any 'position' paper from being submitted to its CS servers.

Why? Because of "AI slop," and allegedly higher rates of LLM-generated content in review papers than in non-review papers.

What I've found so far targets folks who don't know what LLMs are, mostly setting expectations and explaining what LLMs can and cannot do.

I'm past that. I'm looking for best practices: something like design patterns for vibe coding.

Interested in doing a postdoc with me?

Apply to the prestigious Azrieli program!

Link below 👇

DMs are open (email is good too!)

In our new work (w/ @tuhinchakr.bsky.social, Diego Garcia-Olano, @byron.bsky.social) we provide a systematic attempt at measuring AI "slop" in text!

arxiv.org/abs/2509.19163

🧵 (1/7)

Let me know if you're interested!

(and hopefully we'll be back for another iteration of the Big Picture next year w/ Allyson Ettinger, @norakassner.bsky.social, @sebruder.bsky.social)

This is unfortunate for both parties! A simple email can save the sender a lot of time, and it's also one of my favorite kinds of email to receive!

docs.google.com/document/d/1...

Come join our dynamic group in beautiful Lausanne!

@pietrolesci.bsky.social who did a fantastic job!

#ACL2025

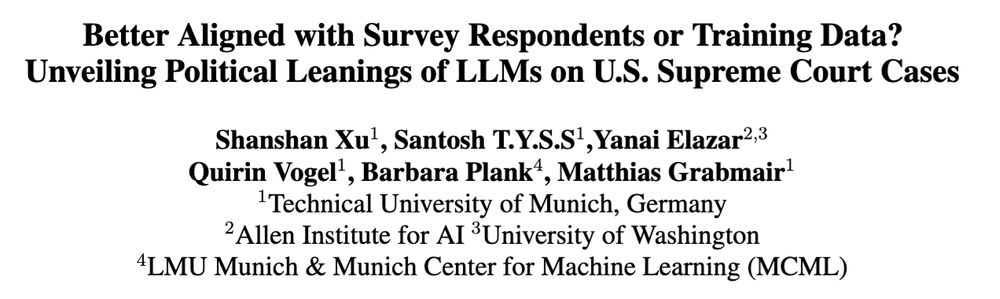

@yanai.bsky.social @barbaraplank.bsky.social on LLMs' memorization of the distribution of political leanings in their pretraining data! Catch us at the L2M2 workshop @l2m2workshop.bsky.social #ACL2025 tmrw

📆 Aug 1, 14:00–15:30 📑 arxiv.org/pdf/2502.18282

A single talk (done right) is challenging enough.

Our new paper introduces the Linear Representation Transferability Hypothesis. We find that the internal representations of different-sized models can be translated into one another using a simple linear (affine) map.
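
To make "a simple linear (affine) map" concrete: the idea is that a hidden state from the smaller model, h_small, can be mapped into the larger model's space as h_large ≈ h_small W + b. Below is a minimal sketch of fitting such a map, assuming you already have paired hidden states from both models on the same inputs. It uses ordinary least squares, which is our illustrative choice and not necessarily the paper's fitting procedure; the function names and the synthetic data are hypothetical.

```python
import numpy as np

def fit_affine_map(src: np.ndarray, tgt: np.ndarray):
    """Least-squares fit of tgt ≈ src @ W + b.

    src: (n, d_small) hidden states from the smaller model (hypothetical data)
    tgt: (n, d_large) hidden states from the larger model on the same inputs
    """
    # Append a column of ones so the bias b is estimated jointly with W.
    ones = np.ones((src.shape[0], 1))
    src_aug = np.hstack([src, ones])
    coef, *_ = np.linalg.lstsq(src_aug, tgt, rcond=None)
    W, b = coef[:-1], coef[-1]  # last row of coef is the bias
    return W, b

def apply_affine_map(src: np.ndarray, W: np.ndarray, b: np.ndarray) -> np.ndarray:
    """Translate small-model states into the large model's space."""
    return src @ W + b

# Synthetic sanity check: the targets are an exact affine image of the sources,
# so least squares should recover W and b up to floating-point error.
rng = np.random.default_rng(0)
n, d_small, d_large = 200, 16, 32
src = rng.normal(size=(n, d_small))
true_W = rng.normal(size=(d_small, d_large))
true_b = rng.normal(size=d_large)
tgt = src @ true_W + true_b

W, b = fit_affine_map(src, tgt)
err = np.abs(apply_affine_map(src, W, b) - tgt).max()
print(f"max reconstruction error: {err:.2e}")
```

With real model activations the map will not be exact, so a held-out reconstruction error (or downstream behavior after mapping) is the more informative measure than the in-sample error printed here.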

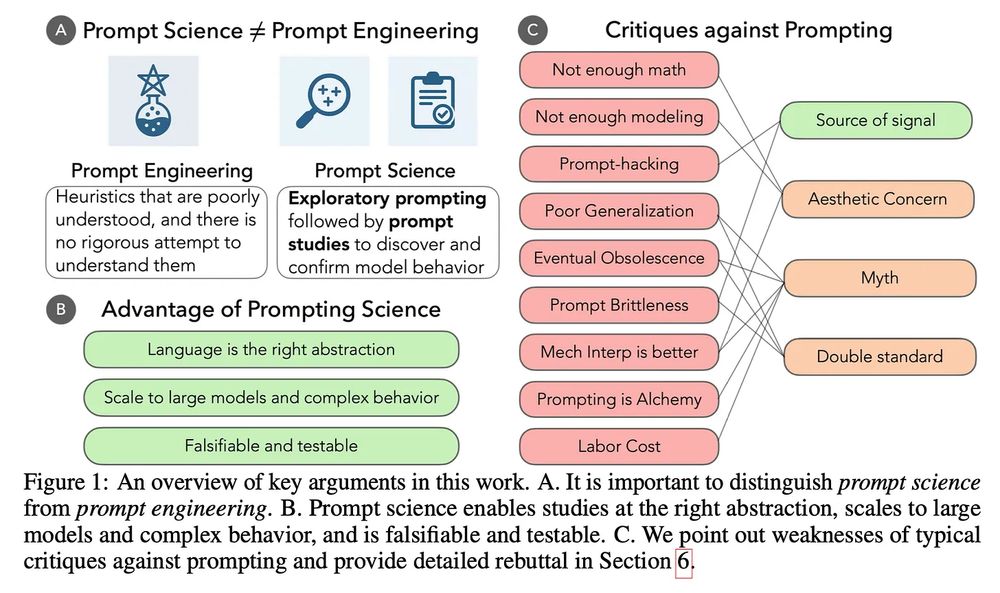

This is holding us back. 🧵 and new paper with @ari-holtzman.bsky.social.

Wasn't the entire premise of this website to allow uploading of papers w/o the official peer review process??