https://yanaiela.github.io/

Interested in doing a postdoc with me?

Apply to the prestigious Azrieli program!

Link below 👇

DMs are open (email is good too!)

In our new work (w/ @tuhinchakr.bsky.social, Diego Garcia-Olano, @byron.bsky.social ) we provide a systematic attempt at measuring AI "slop" in text!

arxiv.org/abs/2509.19163

🧵 (1/7)

Let me know if you're interested!

(and hopefully we'll be back for another iteration of the Big Picture next year w/ Allyson Ettinger, @norakassner.bsky.social, @sebruder.bsky.social)

This is unfortunate for both parties! A simple email can save the sender a lot of time, and it is also one of my favorite kinds of email to receive!

docs.google.com/document/d/1...

Come join our dynamic group in beautiful Lausanne!

@pietrolesci.bsky.social who did a fantastic job!

#ACL2025

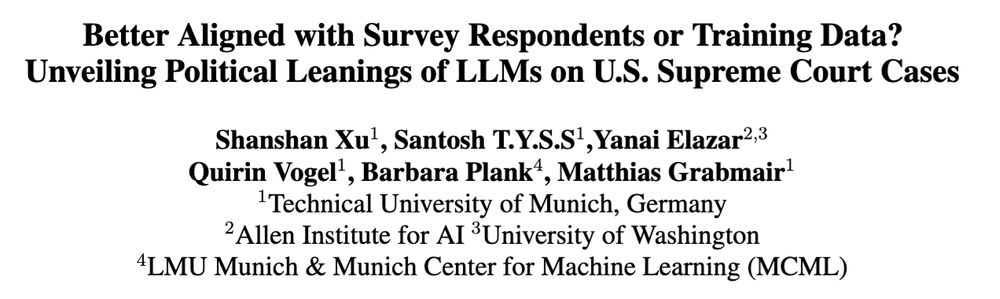

@yanai.bsky.social @barbaraplank.bsky.social on LLMs' memorization of the distributions of political leanings in their pretraining data! Catch us at the L2M2 workshop @l2m2workshop.bsky.social #ACL2025 tmrw

📆 Aug 1, 14:00–15:30 📑 arxiv.org/pdf/2502.18282

A single talk (done right) is challenging enough.

Our new paper introduces the Linear Representation Transferability Hypothesis. We find that the internal representations of different-sized models can be translated into one another using a simple linear (affine) map.
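
To make "translated with an affine map" concrete: a minimal sketch, assuming we already have hidden states from a smaller and a larger model for the same inputs, fits a map `src @ W + b ≈ tgt` by ordinary least squares and scores it with R². The function names and the synthetic data are hypothetical stand-ins, and this is not the paper's exact training or evaluation procedure.

```python
import numpy as np


def fit_affine_map(src, tgt):
    """Least-squares affine map (W, b) with src @ W + b ~= tgt.

    src: (n, d_src) hidden states from the smaller model (hypothetical data)
    tgt: (n, d_tgt) hidden states from the larger model on the same inputs
    """
    # Append a column of ones so the bias b is fitted jointly with W.
    src_aug = np.hstack([src, np.ones((src.shape[0], 1))])
    # Solve min_theta ||src_aug @ theta - tgt||_F^2.
    theta, *_ = np.linalg.lstsq(src_aug, tgt, rcond=None)
    return theta[:-1], theta[-1]  # W: (d_src, d_tgt), b: (d_tgt,)


def apply_affine_map(src, W, b):
    return src @ W + b


# Synthetic stand-in data; real use would extract hidden states from two
# models at matching token positions.
rng = np.random.default_rng(0)
n, d_small, d_large = 500, 16, 32
small = rng.normal(size=(n, d_small))
true_W = rng.normal(size=(d_small, d_large))
true_b = rng.normal(size=d_large)
large = small @ true_W + true_b + 0.01 * rng.normal(size=(n, d_large))

W, b = fit_affine_map(small, large)
pred = apply_affine_map(small, W, b)

# Fraction of target variance explained (R^2) as a simple transfer score.
r2 = 1 - ((large - pred) ** 2).sum() / ((large - large.mean(axis=0)) ** 2).sum()
print(f"R^2 = {r2:.4f}")
```

Because the synthetic targets are built from an affine map plus small noise, R² here lands close to 1; with real hidden states, how close it gets is exactly the empirical question.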

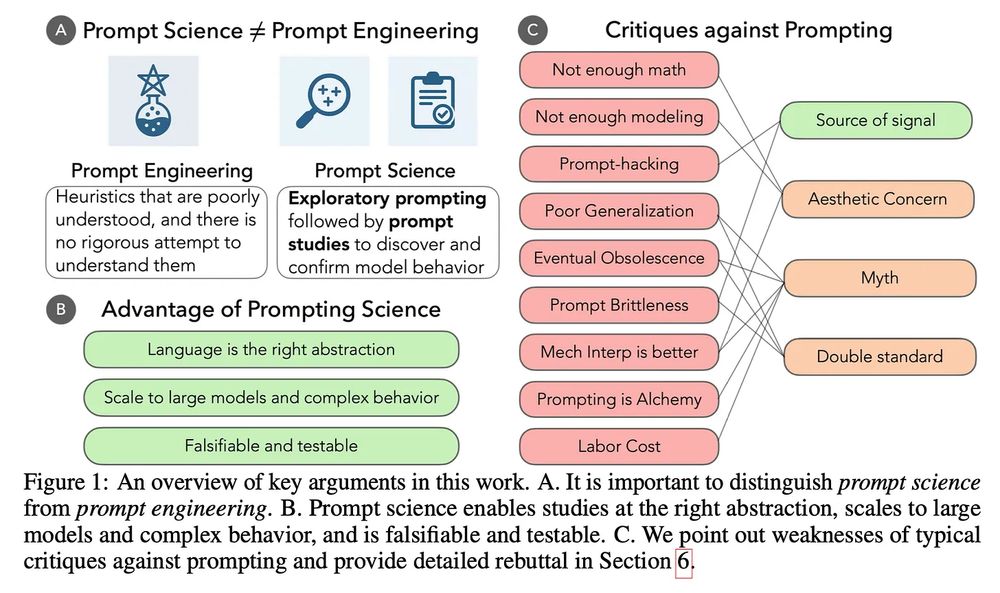

This is holding us back. 🧵 and a new paper with @ari-holtzman.bsky.social.

Wasn't the entire premise of this website to allow uploading of papers w/o the official peer review process??

I really like this paper as a survey of the current literature on what CoT is, but more importantly on what it's not.

It also serves as a cautionary tale about the (apparently quite common) misuse of CoT as an interpretability method.

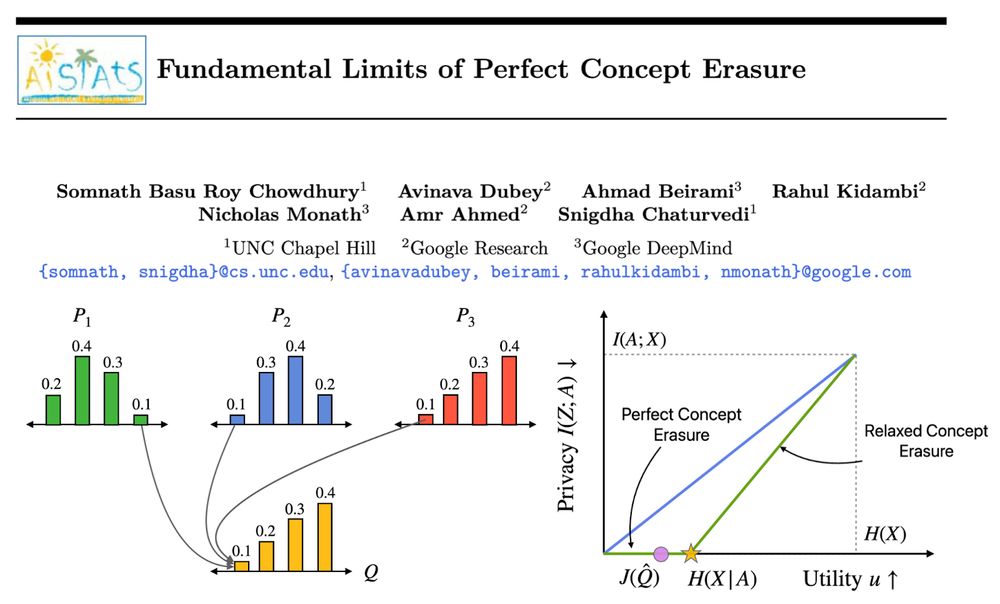

Our method, Perfect Erasure Functions (PEF), erases concepts perfectly from LLM representations. We analytically derive PEF w/o parameter estimation. PEFs achieve a Pareto-optimal erasure-utility tradeoff, backed by theoretical guarantees. #AISTATS2025 🧵

🖇️To present my paper "Superlatives in Context", showing how the interpretation of superlatives is very context-dependent and often implicit, and how LLMs handle such semantic underspecification

🖇️And we will present RewardBench on Friday

Reach out if you want to chat!

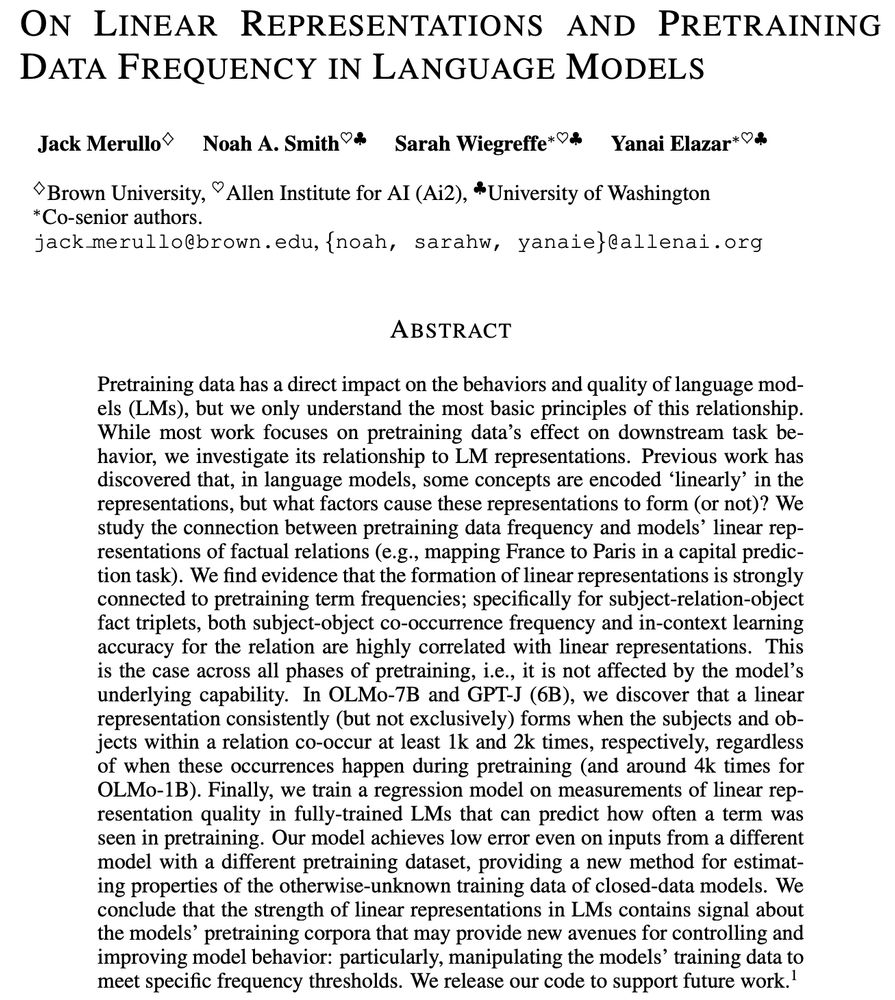

"On Linear Representations and Pretraining Data Frequency in Language Models":

We provide an explanation for when & why linear representations form in large (or small) language models.

Led by @jackmerullo.bsky.social, w/ @nlpnoah.bsky.social & @sarah-nlp.bsky.social

Let me know if you want to meet and/or hang out 🥳
