Kanishka Misra 🌊

@kanishka.bsky.social

Assistant Professor of Linguistics, and Harrington Fellow at UT Austin. Works on computational understanding of language, concepts, and generalization.

🕸️👁️: https://kanishka.website

Pinned

News🗞️

I will return to UT Austin as an Assistant Professor of Linguistics this fall, and join its vibrant community of Computational Linguists, NLPers, and Cognitive Scientists!🤘

Excited to develop ideas about linguistic and conceptual generalization (recruitment details soon!)

Nice to see the practice of using minimal pairs being formalized!

New work to appear @ TACL!

Language models (LMs) are remarkably good at generating novel well-formed sentences, leading to claims that they have mastered grammar.

Yet they often assign higher probability to ungrammatical strings than to grammatical strings.

How can both things be true? 🧵👇

November 11, 2025 at 12:33 PM

Reposted by Kanishka Misra 🌊

if you’ve had Szechuan peppercorns do you experience them more as

a) tingly (like a vibration) or

b) numbing (like super weak lidocaine)

~And~ are you colorblind

(we’d need to correct for base rates but let’s just try to crank the anecdata knob first; @elenatenenbaum.bsky.social this is for you)

November 10, 2025 at 4:02 AM

Reposted by Kanishka Misra 🌊

honored to have given a plenary address at the Society for Language Development annual symposium titled "Whence insights? The value of delineating human and machine CogSci". It's a synthesis of a few years of thoughts, recently concretized with @aditya-yedetore.bsky.social and @kanishka.bsky.social

November 9, 2025 at 1:27 PM

Reposted by Kanishka Misra 🌊

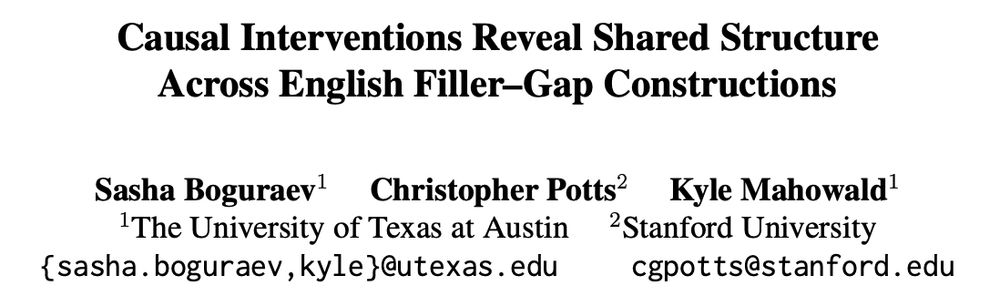

Delighted Sasha's (first year PhD!) work using mech interp to study complex syntax constructions won an Outstanding Paper Award at EMNLP!

Also delighted the ACL community continues to recognize unabashedly linguistic topics like filler-gaps... and the huge potential for LMs to inform such topics!

November 7, 2025 at 6:22 PM

Never did I imagine I'd hear "Dhoom Machale" at an election victory party in the US! LFG!

November 5, 2025 at 4:44 AM

I’ll be in Boston attending BUCLD this week — I won’t be presenting but I’ll be cheering on @najoung.bsky.social who will present at the prestigious SLD symposium about the awesome work by her group, including our work on LMs as hypotheses generators for language acquisition!

🤠👻

November 5, 2025 at 1:40 AM

Reposted by Kanishka Misra 🌊

N-gram novelty is widely used as a measure of creativity and generalization. But if LLMs produce highly n-gram novel expressions that don’t make sense or sound awkward, should they still be called creative? In a new paper, we investigate how n-gram novelty relates to creativity.

November 4, 2025 at 3:08 PM

Reposted by Kanishka Misra 🌊

The psychology program at NYU Abu Dhabi has open rank open area positions in cognition-perception-cogneuro. Wonderful colleagues, excellent research infrastructure (incl MRI, MEG, EEG), close ties to NYU New York. Superfast growing part of the research world

apply.interfolio.com/175417

#neuroskyence

November 2, 2025 at 2:11 PM

Reposted by Kanishka Misra 🌊

🧠 New at #NeurIPS2025!

🎵 We're far from the shallow now🎵

TL;DR: We introduce the first "reasoning embedding" and uncover its unique spatio-temporal pattern in the brain.

🔗 arxiv.org/abs/2510.228...

October 30, 2025 at 10:25 PM

Reposted by Kanishka Misra 🌊

Introducing Global PIQA, a new multilingual benchmark for 100+ languages. This benchmark is the outcome of this year’s MRL shared task, in collaboration with 300+ researchers from 65 countries. This dataset evaluates physical commonsense reasoning in culturally relevant contexts.

October 29, 2025 at 3:50 PM

Reposted by Kanishka Misra 🌊

Our #NeurIPS2025 paper shows that even comparable monolingual tokenizers have different compression rates across languages. But by getting rid of whitespace tokenization and using a custom vocab size for each language, we can reduce token premiums. Preprint out now!

October 28, 2025 at 3:11 PM

Reposted by Kanishka Misra 🌊

Check out UT Linguistics' very own Austin German's new article on interaction and variation in Z Sign in @glossa-linguistics.bsky.social

3 new #DiamondOpenAccess papers out in @glossa-linguistics.bsky.social. See glossa-journal.org/articles/ Powered by the @janewayolh.bsky.social platform, copy-edited & typeset by @siliconchips.bsky.social, & financially supported by the scholar-owned consortial library model of @openlibhums.org

October 27, 2025 at 6:45 PM

Reposted by Kanishka Misra 🌊

I will be recruiting PhD students via Georgetown Linguistics this application cycle! Come join us in the PICoL (pronounced “pickle”) lab. We focus on psycholinguistics and cognitive modeling using LLMs. See the linked flyer for more details: bit.ly/3L3vcyA

October 21, 2025 at 9:52 PM

If I spill the tea—“Did you know Sue, Max’s gf, was a tennis champ?”—but then if you reply “They’re dating?!” I’d be a bit puzzled, since that’s not the main point! Humans can track what’s ‘at issue’ in conversation. How sensitive are LMs to this distinction?

New paper w/ @sangheekim.bsky.social!

October 21, 2025 at 2:02 PM

Reposted by Kanishka Misra 🌊

Finally out in TACL:

🌎EWoK (Elements of World Knowledge)🌎: A cognition-inspired framework for evaluating basic world knowledge in language models

tl;dr: LLMs learn basic social concepts way easier than physical & spatial concepts

Paper: direct.mit.edu/tacl/article...

Website: ewok-core.github.io

October 20, 2025 at 5:36 PM

"Although I hate leafy vegetables, I prefer daxes to blickets." Can you tell if daxes are leafy vegetables? LMs can't seem to! 📷

We investigate if LMs capture these inferences from connectives when they cannot rely on world knowledge.

New paper w/ Daniel, Will, @jessyjli.bsky.social

October 16, 2025 at 3:27 PM

Reposted by Kanishka Misra 🌊

Curious whether people think that if (when?) ‘superhuman AI’ arrives, the building blocks of its performance will be human-recognizable concepts that have been applied and combined in novel ways to achieve ‘superhuman’ performance. Or will it be completely uninterpretable?

October 11, 2025 at 6:17 PM

Reposted by Kanishka Misra 🌊

We're hiring! UT Linguistics invites applications for a position in computational linguistics to begin next academic year 2026-27 (rank of tenure-track Assistant Professor or Associate Professor with tenure). apply.interfolio.com/175156

October 8, 2025 at 6:12 PM

Happening now! Poster 42!

October 8, 2025 at 3:12 PM

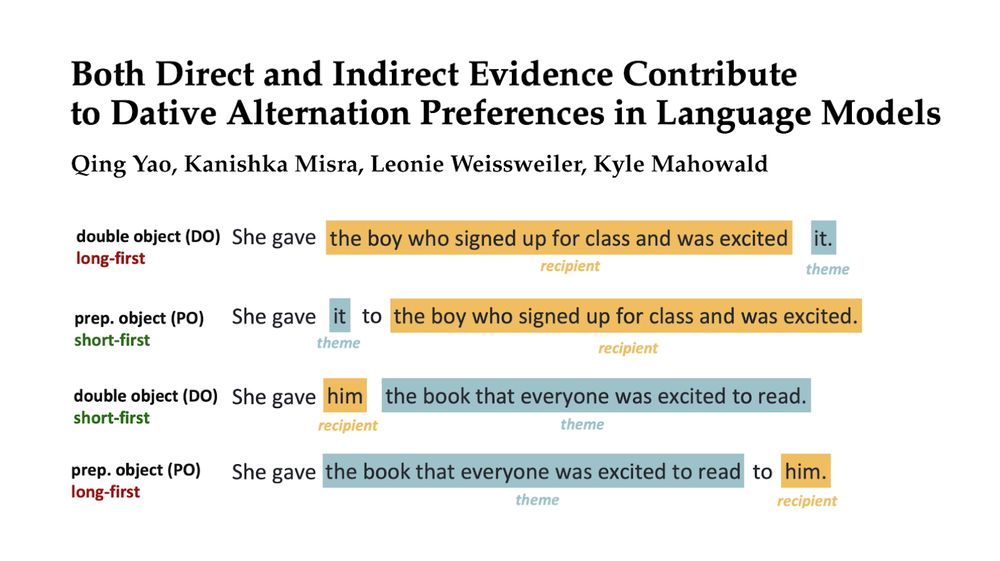

Catch Qing’s poster in the morning poster session today!!

I’ll also be there, talk to me about UT Ling’s new comp ling job/methods to study linguistic generalization/and how LMs *might* inform language science!

LMs learn argument-based preferences for dative constructions (preferring recipient first when it’s shorter), consistent with humans. Is this from memorizing preferences in training? New paper w/ @kanishka.bsky.social, @weissweiler.bsky.social, @kmahowald.bsky.social

arxiv.org/abs/2503.20850

October 8, 2025 at 2:06 PM

Reposted by Kanishka Misra 🌊

UT Austin Linguistics is hiring in computational linguistics!

Asst or Assoc.

We have a thriving group sites.utexas.edu/compling/ and a long proud history in the space. (For instance, fun fact, Jeff Elman was a UT Austin Linguistics Ph.D.)

faculty.utexas.edu/career/170793

🤘

UT Austin Computational Linguistics Research Group – Humans processing computers processing humans processing language

sites.utexas.edu

October 7, 2025 at 8:53 PM

Come join us at the city of ACL!

Very happy to chat about my experience as a new faculty member at UT Ling; come find me at #COLM2025 if you’re interested!!

UT Austin Linguistics is hiring in computational linguistics!

Asst or Assoc.

We have a thriving group sites.utexas.edu/compling/ and a long proud history in the space. (For instance, fun fact, Jeff Elman was a UT Austin Linguistics Ph.D.)

faculty.utexas.edu/career/170793

🤘

October 7, 2025 at 11:28 PM

Reposted by Kanishka Misra 🌊

I will be giving a short talk on this work at the COLM Interplay workshop on Friday (also to appear at EMNLP)!

Will be in Montreal all week and excited to chat about LM interpretability + its interaction with human cognition and ling theory.

A key hypothesis in the history of linguistics is that different constructions share underlying structure. We take advantage of recent advances in mechanistic interpretability to test this hypothesis in Language Models.

New work with @kmahowald.bsky.social and @cgpotts.bsky.social!

🧵👇!

October 6, 2025 at 12:05 PM

Reposted by Kanishka Misra 🌊

On my way to #COLM2025 🍁

Check out jessyli.com/colm2025

QUDsim: Discourse templates in LLM stories arxiv.org/abs/2504.09373

EvalAgent: retrieval-based eval targeting implicit criteria arxiv.org/abs/2504.15219

RoboInstruct: code generation for robotics with simulators arxiv.org/abs/2405.20179

October 6, 2025 at 3:50 PM

Reposted by Kanishka Misra 🌊

I’m at #COLM2025 from Wed with:

@siyuansong.bsky.social Tue am introspection arxiv.org/abs/2503.07513

@qyao.bsky.social Wed am controlled rearing: arxiv.org/abs/2503.20850

@sashaboguraev.bsky.social INTERPLAY ling interp: arxiv.org/abs/2505.16002

I’ll talk at INTERPLAY too. Come say hi!

Language Models Fail to Introspect About Their Knowledge of Language

There has been recent interest in whether large language models (LLMs) can introspect about their own internal states. Such abilities would make LLMs more interpretable, and also validate the use of s...

arxiv.org

October 6, 2025 at 3:57 PM
