Maria Ryskina

@mryskina.bsky.social

Postdoc @vectorinstitute.ai | organizer @queerinai.com | previously MIT, CMU LTI | 🐀 rodent enthusiast | she/they

🌐 https://ryskina.github.io/

Pinned

Maria Ryskina

@mryskina.bsky.social

· Oct 4

Interested in language models, brains, and concepts? Check out our COLM 2025 🔦 Spotlight paper!

(And if you’re at COLM, come hear about it on Tuesday – sessions Spotlight 2 & Poster 2)!

Reposted by Maria Ryskina

The recording of my keynote from #COLM2025 is now available!

Gillian Hadfield - Alignment is social: lessons from human alignment for AI

Current approaches conceptualize the alignment challenge as one of eliciting individual human preferences and training models to choose outputs that satisfy those preferences. To the extent…

www.youtube.com

November 6, 2025 at 9:35 PM

Reposted by Maria Ryskina

the only kind of Rat Race I'm down for

November 6, 2025 at 2:43 PM

Reposted by Maria Ryskina

Canadian researchers should be aware that there is a motion before the Parliamentary Standing Committee on Science and Research to force Tricouncils to hand over disaggregated peer review data on all applications:

Applicant names, profiles, demographics

Reviewer names, profiles, comments, and scores

October 30, 2025 at 8:33 PM

Reposted by Maria Ryskina

Finally out in TACL:

🌎EWoK (Elements of World Knowledge)🌎: A cognition-inspired framework for evaluating basic world knowledge in language models

tl;dr: LLMs learn basic social concepts way easier than physical&spatial concepts

Paper: direct.mit.edu/tacl/article...

Website: ewok-core.github.io

October 20, 2025 at 5:36 PM

Reposted by Maria Ryskina

🚀 Excited to share a major update to our “Mixture of Cognitive Reasoners” (MiCRo) paper!

We ask: What benefits can we unlock by designing language models whose inner structure mirrors the brain’s functional specialization?

More below 🧠👇

cognitive-reasoners.epfl.ch

October 20, 2025 at 12:10 PM

Reposted by Maria Ryskina

I'm on the job market looking for CS/ischool faculty and related positions! I'm broadly interested in doing research with policymakers and communities impacted by AI to inform and develop mitigations to harms and risks. If you've included any of my work in syllabi or policy docs please let me know!

October 16, 2025 at 11:19 PM

Reposted by Maria Ryskina

Grateful to keynote at #COLM2025. Here's what we're missing about AI alignment: Humans don’t cooperate just by aggregating preferences; we build social processes and institutions to generate norms that make it safe to trade with strangers. AI needs to play by these same systems, not replace them.

October 15, 2025 at 11:00 PM

Reposted by Maria Ryskina

Inspired to share some papers that I found at #COLM2025!

"Register Always Matters: Analysis of LLM Pretraining Data Through the Lens of Language Variation" by Amanda Myntti et al. arxiv.org/abs/2504.01542

October 14, 2025 at 6:16 PM

Reposted by Maria Ryskina

👀

Over the past year, my lab has been working on fleshing out theory + applications of the Platonic Representation Hypothesis.

Today I want to share two new works on this topic:

Eliciting higher alignment: arxiv.org/abs/2510.02425

Unpaired learning of unified reps: arxiv.org/abs/2510.08492

1/9

October 13, 2025 at 5:43 PM

Reposted by Maria Ryskina

We are launching our Graduate School Application Financial Aid Program (www.queerinai.com/grad-app-aid) for 2025-2026. We’ll give up to $750 per person to LGBTQIA+ STEM scholars applying to graduate programs. Apply at openreview.net/group?id=Que.... 1/5

Grad App Aid — Queer in AI

www.queerinai.com

October 9, 2025 at 12:37 AM

Reposted by Maria Ryskina

Keynote at #COLM2025: Nicholas Carlini from Anthropic

"Are language models worth it?"

Explains that the prior decade of his work on adversarial images, while it taught us a lot, isn't very applied; it's unlikely anyone is actually altering images of cats in scary ways.

October 9, 2025 at 1:12 PM

Reposted by Maria Ryskina

Outstanding paper 🏆 1: Fast Controlled Generation from Language Models with Adaptive Weighted Rejection Sampling

openreview.net/forum?id=3Bm...

October 7, 2025 at 1:23 PM

Reposted by Maria Ryskina

How can an imitative model like an LLM outperform the experts it is trained on? Our new COLM paper outlines three types of transcendence and shows that each one relies on a different aspect of data diversity. arxiv.org/abs/2508.17669

August 29, 2025 at 9:46 PM

Reposted by Maria Ryskina

Check out @mryskina.bsky.social's talk and poster at COLM on Tuesday—we present a method to identify 'semantically consistent' brain regions (responding to concepts across modalities) and show that more semantically consistent brain regions are better predicted by LLMs.

Interested in language models, brains, and concepts? Check out our COLM 2025 🔦 Spotlight paper!

(And if you’re at COLM, come hear about it on Tuesday – sessions Spotlight 2 & Poster 2)!

October 4, 2025 at 12:43 PM

Also, if you're at COLM, come to Gillian's keynote and find out what our lab is working on!

Keynote spotlight #4: the second day of COLM will close with @ghadfield.bsky.social from JHU talking about human society alignment, and lessons for AI alignment

October 4, 2025 at 2:36 AM

Interested in language models, brains, and concepts? Check out our COLM 2025 🔦 Spotlight paper!

(And if you’re at COLM, come hear about it on Tuesday – sessions Spotlight 2 & Poster 2)!

October 4, 2025 at 2:15 AM

Reposted by Maria Ryskina

Attending COLM next week in Montreal? 🇨🇦 Join us on Thursday for a 2-part social! ✨ 5:30-6:30 at the conference venue and 7:00-10:00 offsite! 🌈 Sign up here: forms.gle/oiMK3TLP8ZZc...

October 1, 2025 at 2:40 PM

Reposted by Maria Ryskina

Hi all, we are organizing this fun affinity workshop at EurIPS in Copenhagen. Feel free to present any of your previously published work or submit abstracts / mixed-media materials!

Hey everyone! 👋 We're so excited to be organizing a fun Queer in AI Workshop, co-located with EurIPS in Copenhagen on December 5!

We are seeking submissions, with a deadline of October 15. #NeurIPS2025

September 9, 2025 at 9:34 AM

Reposted by Maria Ryskina

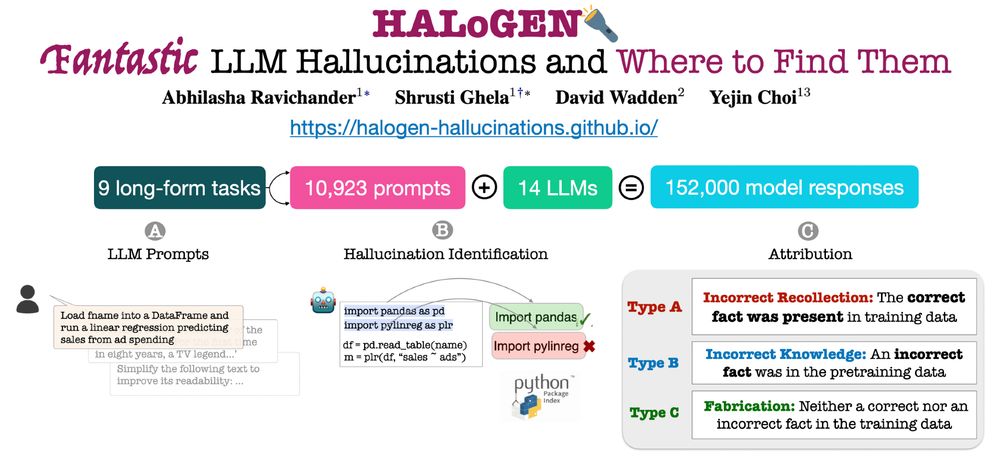

Super super thrilled that HALoGEN, our study of LLM hallucinations and their potential origins in training data, received an ✨Outstanding Paper Award✨ at ACL!

Joint work w/ Shrusti Ghela*, David Wadden, and Yejin Choi

bsky.app/profile/lash...

We are launching HALoGEN💡, a way to systematically study *when* and *why* LLMs still hallucinate.

New work w/ Shrusti Ghela*, David Wadden, and Yejin Choi 💫

📝 Paper: arxiv.org/abs/2501.08292

🚀 Code/Data: github.com/AbhilashaRav...

🌐 Website: halogen-hallucinations.github.io 🧵 [1/n]

July 30, 2025 at 7:53 PM

Reposted by Maria Ryskina

Looking forward to attending #cogsci2025 (Jul 29 - Aug 3)! I’m especially excited to meet students who will be applying to PhD programs in Computational Ling/CogSci in the coming cycle.

Please reach out if you want to meet up and chat! Email is the best way, but DM also works if you must!

quick🧵:

July 28, 2025 at 9:20 PM

Join Abhilasha's lab, she is an awesome researcher and mentor! I can attest, being her collaborator was great fun 🤩

📣 Life update: Thrilled to announce that I’ll be starting as faculty at the Max Planck Institute for Software Systems this Fall!

I’ll be recruiting PhD students in the upcoming cycle, as well as research interns throughout the year: lasharavichander.github.io/contact.html

July 24, 2025 at 1:25 PM

Come join us in Vancouver for our ICML social!

(I'll be at ICML July 15-18, any poster/talk recs welcome!)

1/ 💻 Queer in AI is hosting a social at #ICML2025 in Vancouver on 📅 July 16, and you’re invited! Let’s network, enjoy food and drinks, and celebrate our community. Details below…

July 11, 2025 at 3:47 PM
