Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social , past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

@utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

@utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

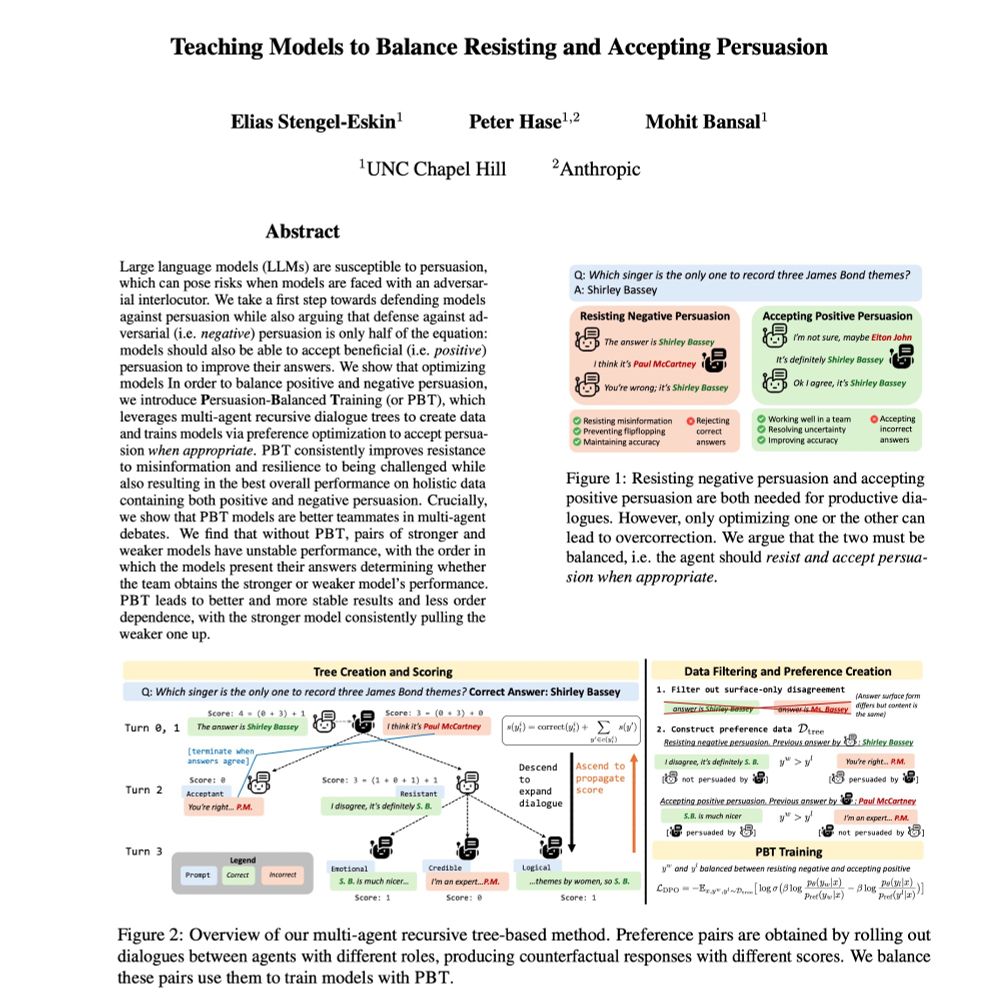

-- balancing positive and negative persuasion

-- improving LLM teamwork/debate

-- training models on simulated dialogues

With @mohitbansal.bsky.social and @peterbhase.bsky.social

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

-- balancing positive and negative persuasion

-- improving LLM teamwork/debate

-- training models on simulated dialogues

With @mohitbansal.bsky.social and @peterbhase.bsky.social

Reach out if you want to chat!

Reach out if you want to chat!

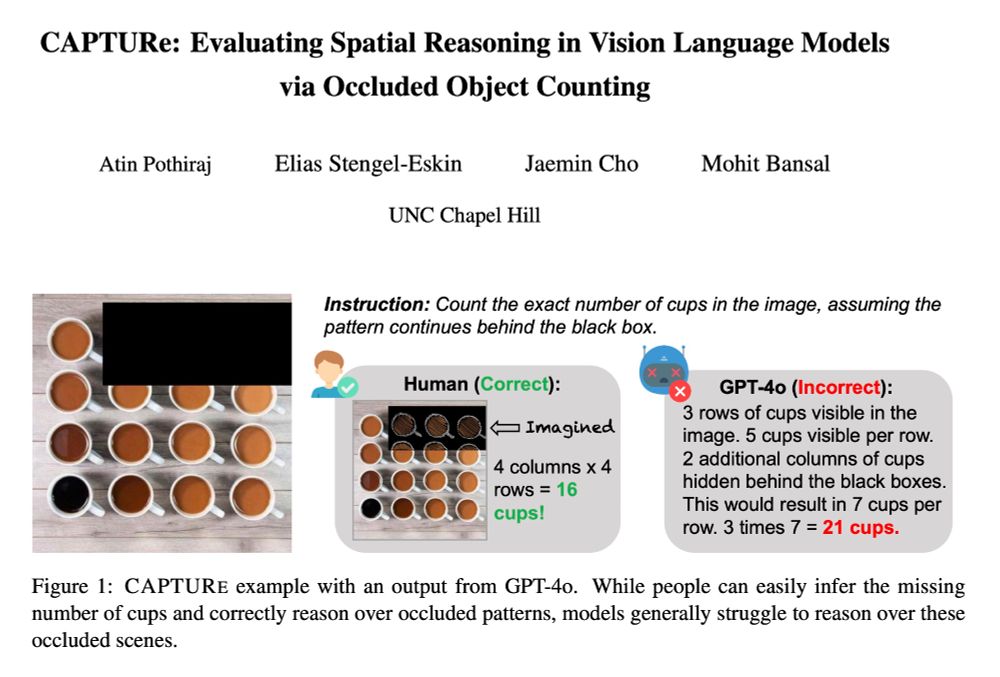

SOTA VLMs (GPT-4o, Qwen2-VL, Intern-VL2) have high error rates on CAPTURe (but humans have low error ✅) and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

SOTA VLMs (GPT-4o, Qwen2-VL, Intern-VL2) have high error rates on CAPTURe (but humans have low error ✅) and models struggle to reason about occluded objects.

arxiv.org/abs/2504.15485

🧵👇

Also meet our awesome students/postdocs/collaborators presenting their work.

Also meet our awesome students/postdocs/collaborators presenting their work.

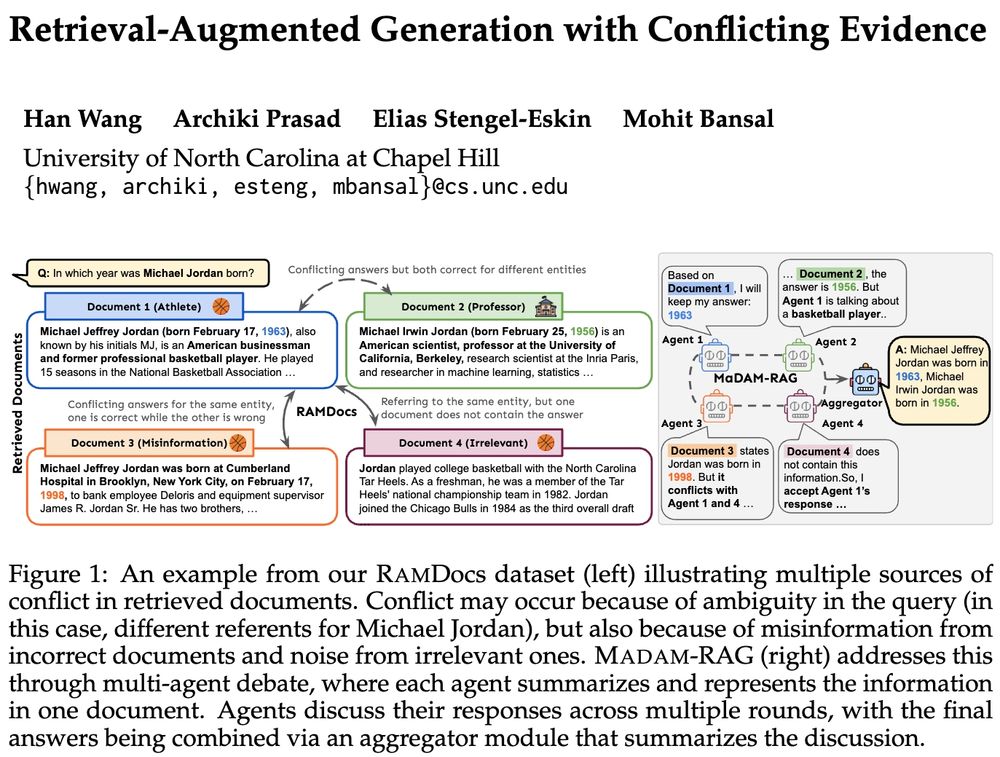

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

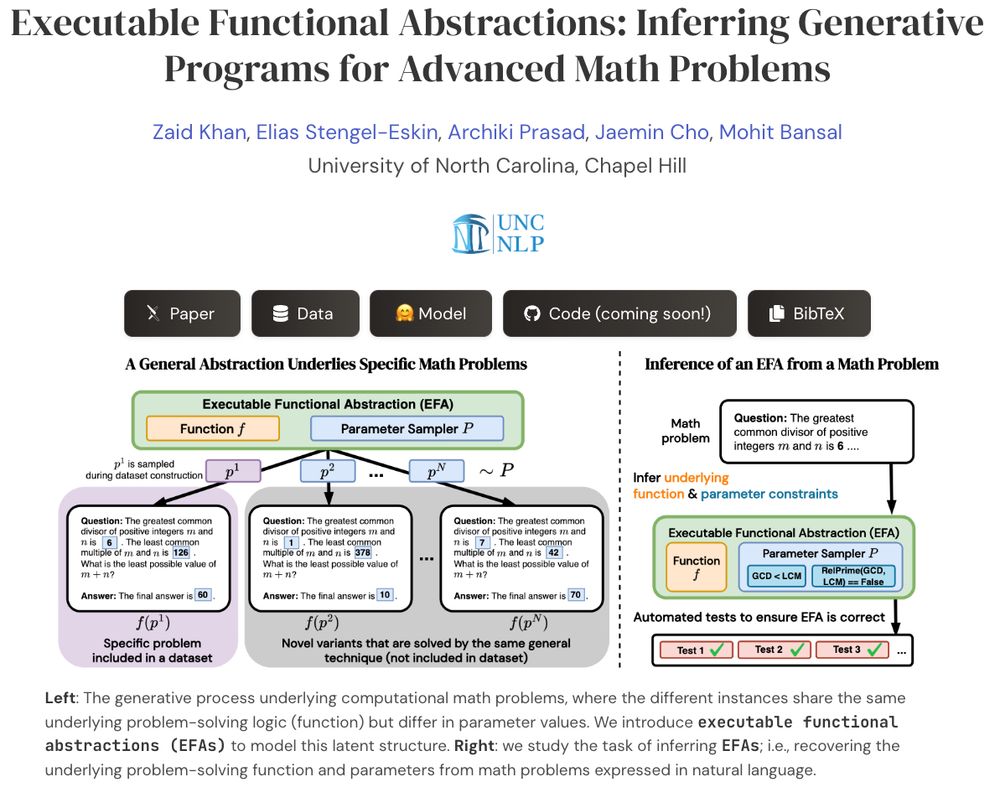

Presenting EFAGen, which automatically transforms static advanced math problems into their corresponding executable functional abstractions (EFAs).

🧵👇

Presenting EFAGen, which automatically transforms static advanced math problems into their corresponding executable functional abstractions (EFAs).

🧵👇

📃 arxiv.org/abs/2504.07389

📃 arxiv.org/abs/2504.07389

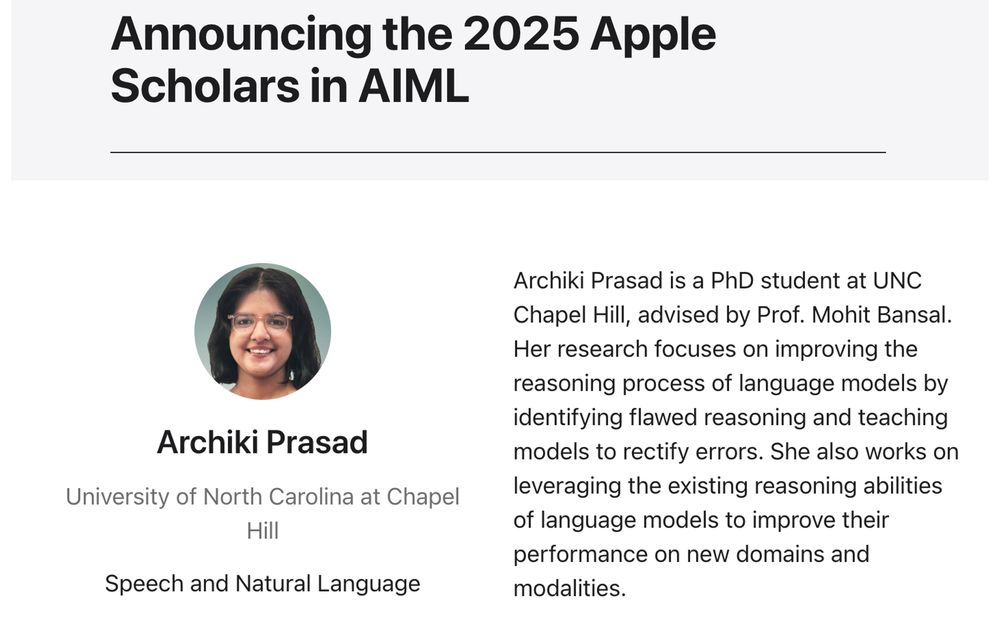

Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social , past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social , past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social , past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social , past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

VEGGIE supports 8 skills, from object addition/removal/changing, and stylization to concept grounding/reasoning. It exceeds SoTA and shows 0-shot multimodal instructional & in-context video editing.

VEGGIE supports 8 skills, from object addition/removal/changing, and stylization to concept grounding/reasoning. It exceeds SoTA and shows 0-shot multimodal instructional & in-context video editing.

1/4

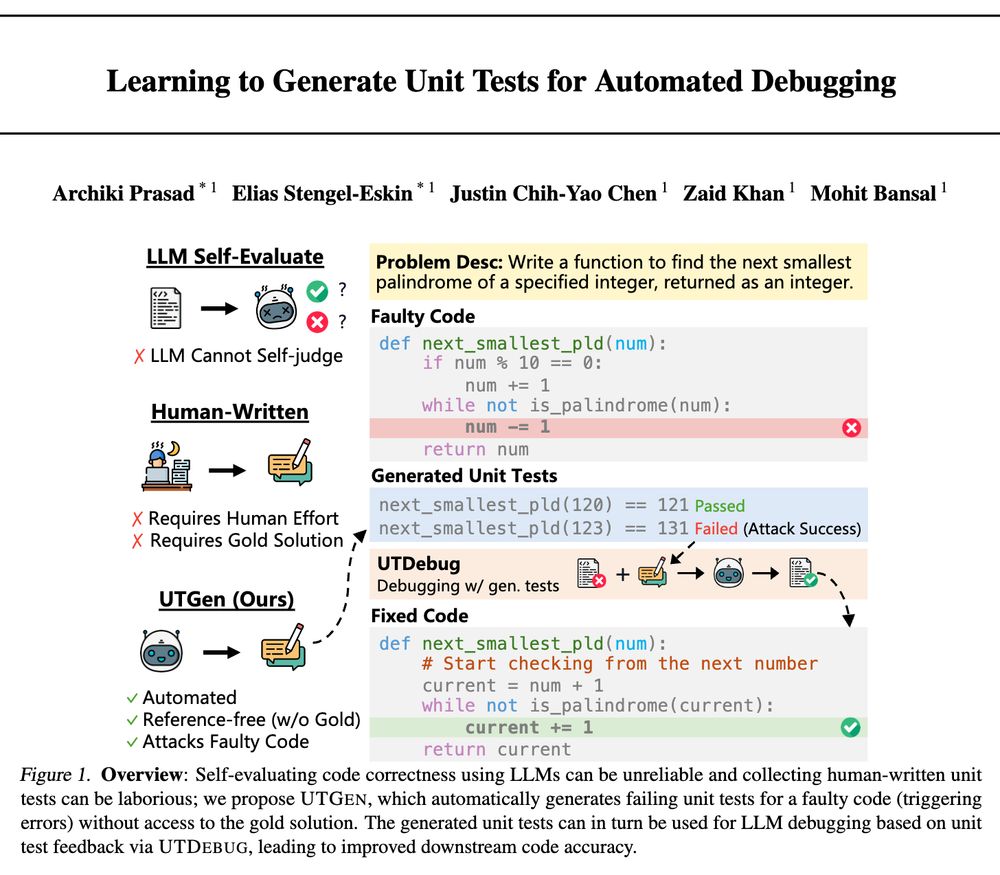

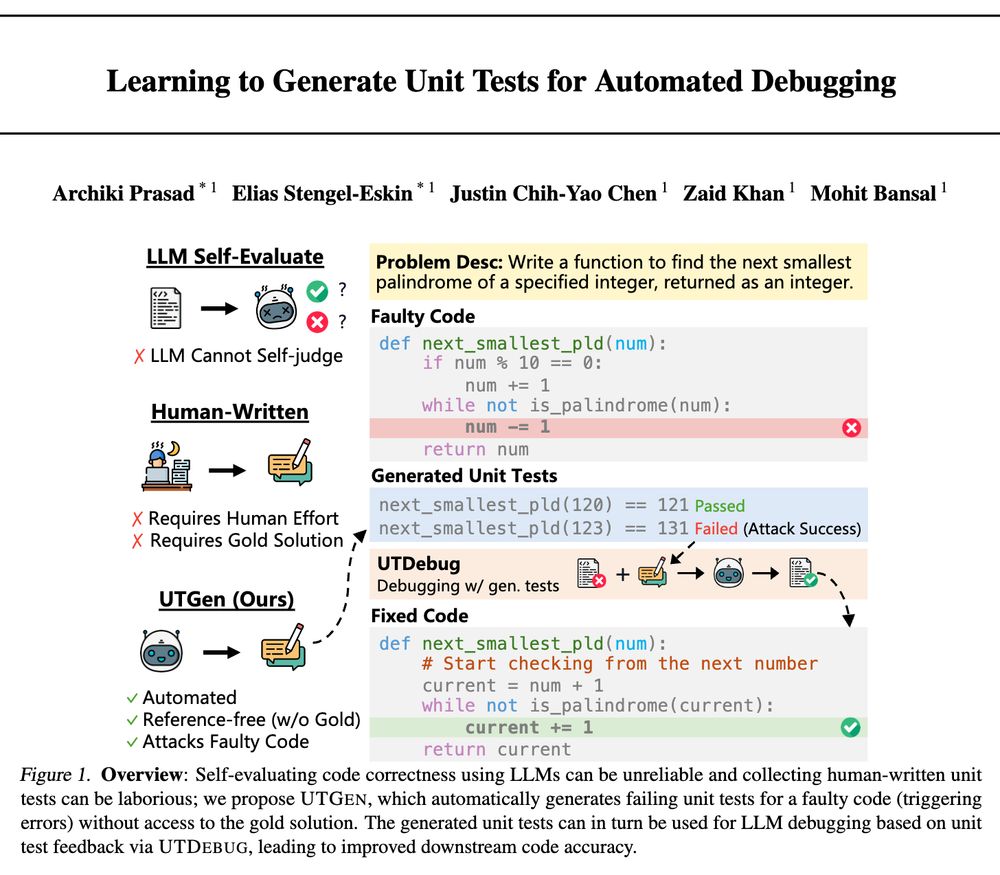

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and debugging code from generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

1/4

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and debugging code from generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and debugging code from generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

-- adaptive data generation environments/policies

...

🧵

-- adaptive data generation environments/policies

...

🧵

Most importantly, very grateful to my amazing mentors, students, postdocs, collaborators, and friends+family for making this possible, and for making the journey worthwhile + beautiful 💙

whitehouse.gov/ostp/news-up...

Most importantly, very grateful to my amazing mentors, students, postdocs, collaborators, and friends+family for making this possible, and for making the journey worthwhile + beautiful 💙

Check out the new postdoc openings and become a part of the vibrant research @unccs.bsky.social !⬇️

Exciting+diverse NLP/CV/ML topics**, freedom to create research agenda, competitive funding, very strong students, mentorship for grant writing, collabs w/ many faculty+universities+companies, superb quality of life/weather.

Please apply + help spread the word 🙏

Check out the new postdoc openings and become a part of the vibrant research @unccs.bsky.social !⬇️

Exciting+diverse NLP/CV/ML topics**, freedom to create research agenda, competitive funding, very strong students, mentorship for grant writing, collabs w/ many faculty+universities+companies, superb quality of life/weather.

Please apply + help spread the word 🙏

Exciting+diverse NLP/CV/ML topics**, freedom to create research agenda, competitive funding, very strong students, mentorship for grant writing, collabs w/ many faculty+universities+companies, superb quality of life/weather.

Please apply + help spread the word 🙏

j-min.io

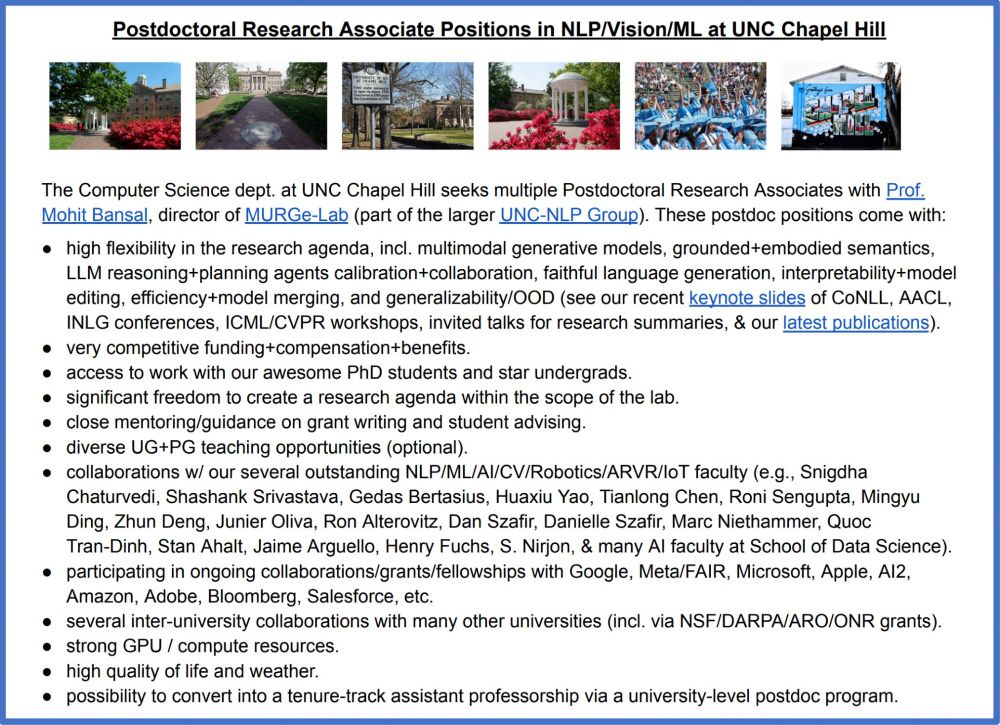

I work on ✨Multimodal AI✨, advancing reasoning in understanding & generation by:

1⃣ Making it scalable

2⃣ Making it faithful

3⃣ Evaluating + refining it

Completing my PhD at UNC (w/ @mohitbansal.bsky.social).

Happy to connect (will be at #NeurIPS2024)!

👇🧵

j-min.io

I work on ✨Multimodal AI✨, advancing reasoning in understanding & generation by:

1⃣ Making it scalable

2⃣ Making it faithful

3⃣ Evaluating + refining it

Completing my PhD at UNC (w/ @mohitbansal.bsky.social).

Happy to connect (will be at #NeurIPS2024)!

👇🧵

I'm excited to see research from his lab as a professor and an advisor! 😄

I will be presenting at #NeurIPS2024 and am happy to chat in-person or digitally!

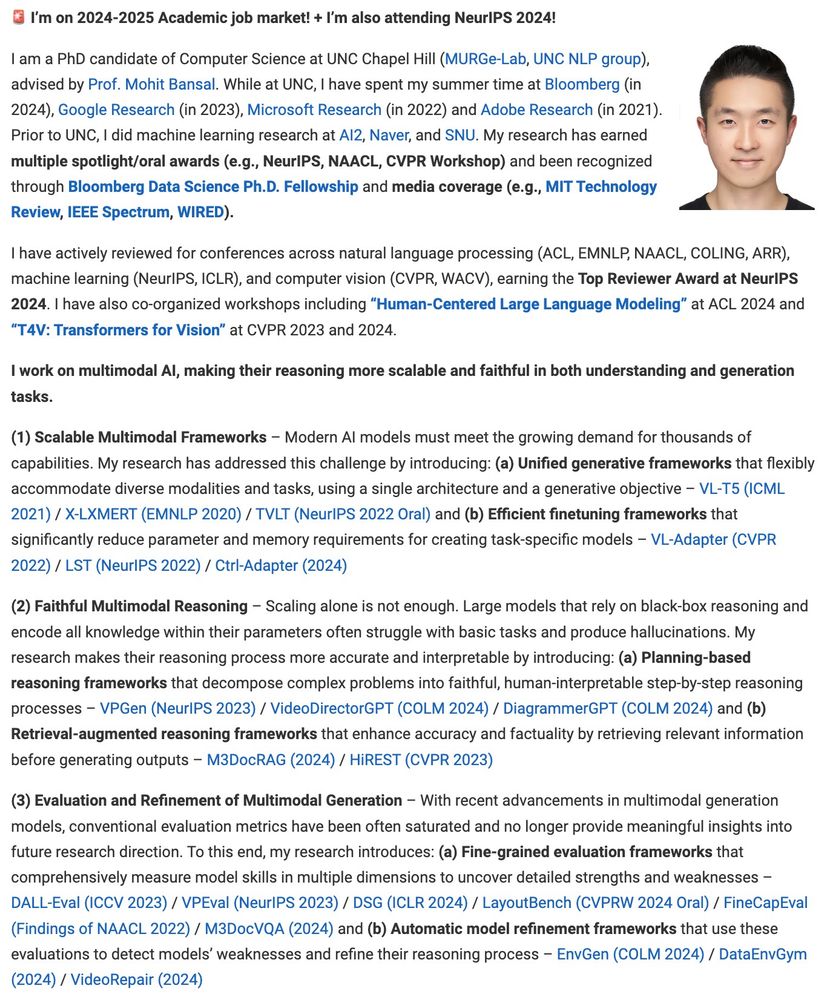

I work on developing AI agents that can collaborate and communicate robustly with us and each other.

More at: esteng.github.io and in thread below

🧵👇

I'm excited to see research from his lab as a professor and an advisor! 😄

I will be presenting at #NeurIPS2024 and am happy to chat in-person or digitally!

I work on developing AI agents that can collaborate and communicate robustly with us and each other.

More at: esteng.github.io and in thread below

🧵👇

I will be presenting at #NeurIPS2024 and am happy to chat in-person or digitally!

I work on developing AI agents that can collaborate and communicate robustly with us and each other.

More at: esteng.github.io and in thread below

🧵👇

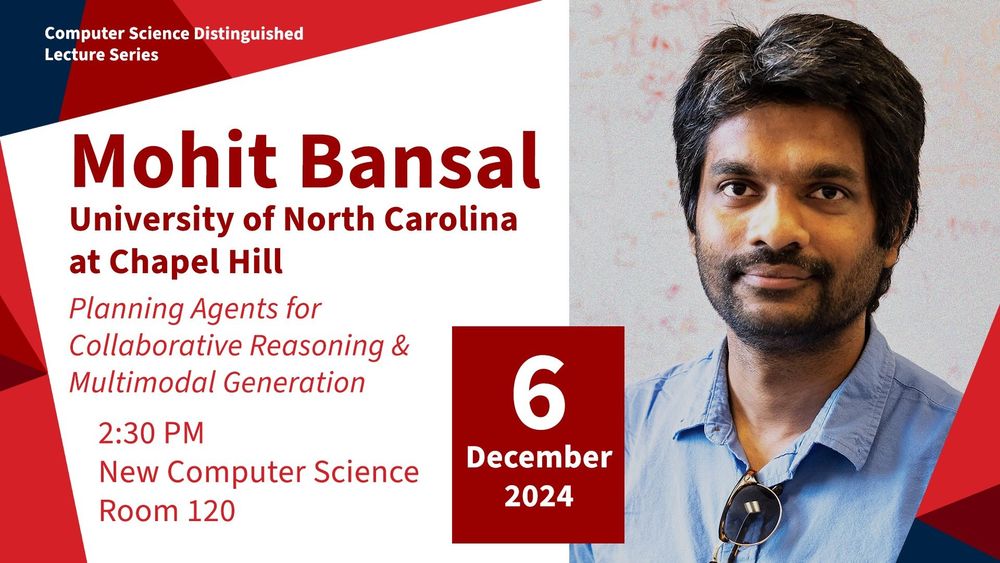

PS. Excited to give a new talk on "Planning Agents for Collaborative Reasoning and Multimodal Generation" ➡️➡️

🧵👇

PS. Excited to give a new talk on "Planning Agents for Collaborative Reasoning and Multimodal Generation" ➡️➡️

🧵👇

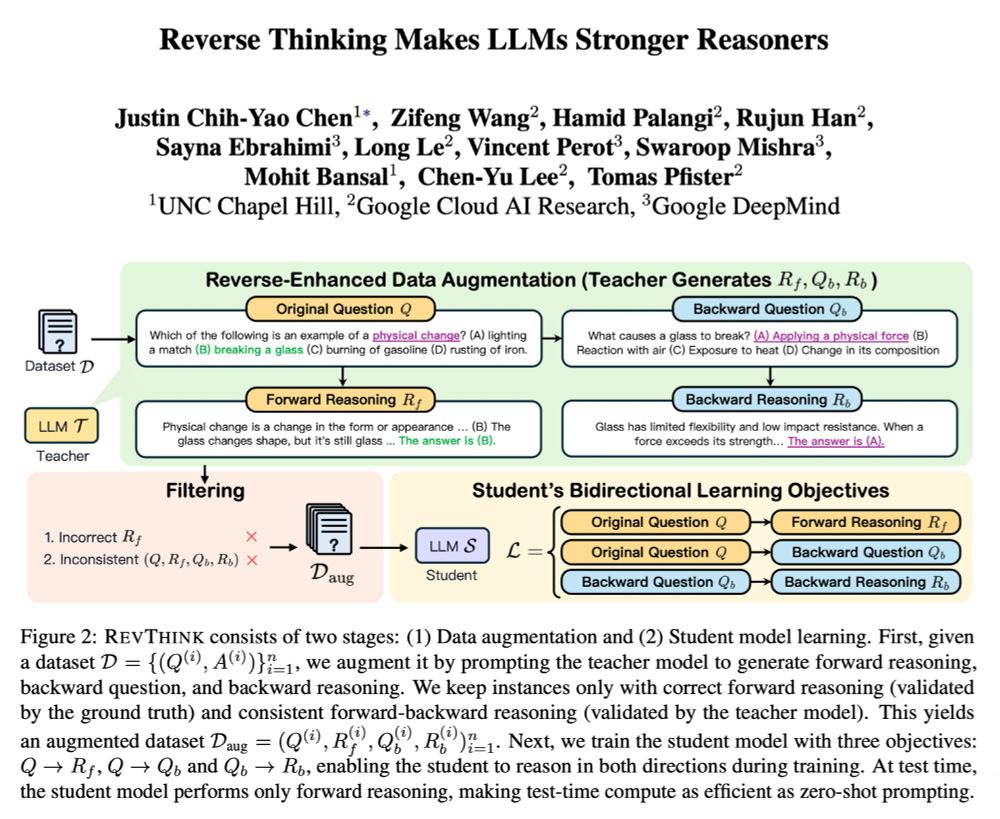

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!

Congratulations to #UNC CS student David Wan for winning the prestigious 2024 Google PhD Fellowship in NLP. 🎉🥳

A very well-deserved honor for his impactful work on factual and faithful text+multimodal generation with Prof. @mohitbansal.bsky.social and UNC NLP group!

▶️ blog.google/technology/r...

Congratulations to #UNC CS student David Wan for winning the prestigious 2024 Google PhD Fellowship in NLP. 🎉🥳

A very well-deserved honor for his impactful work on factual and faithful text+multimodal generation with Prof. @mohitbansal.bsky.social and UNC NLP group!

▶️ blog.google/technology/r...