Justin Chih-Yao Chen

@cyjustinchen.bsky.social

Ph.D. Student @unccs, @uncnlp, MURGe-Lab. Student Researcher @Google

Reposted by Justin Chih-Yao Chen

Some personal updates:

- I've completed my PhD at @unccs.bsky.social! 🎓

- Starting Fall 2026, I'll be joining the CS dept. at Johns Hopkins University @jhucompsci.bsky.social as an Assistant Professor 💙

- Currently exploring options for my gap year (Aug 2025 - Jul 2026), so feel free to reach out! 🔎

May 20, 2025 at 5:58 PM

Reposted by Justin Chih-Yao Chen

🚨 Introducing our @tmlrorg.bsky.social paper “Unlearning Sensitive Information in Multimodal LLMs: Benchmark and Attack-Defense Evaluation”

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

May 7, 2025 at 6:55 PM

Reposted by Justin Chih-Yao Chen

Extremely excited to announce that I will be joining @utaustin.bsky.social Computer Science in August 2025 as an Assistant Professor! 🎉

May 5, 2025 at 8:28 PM

Reposted by Justin Chih-Yao Chen

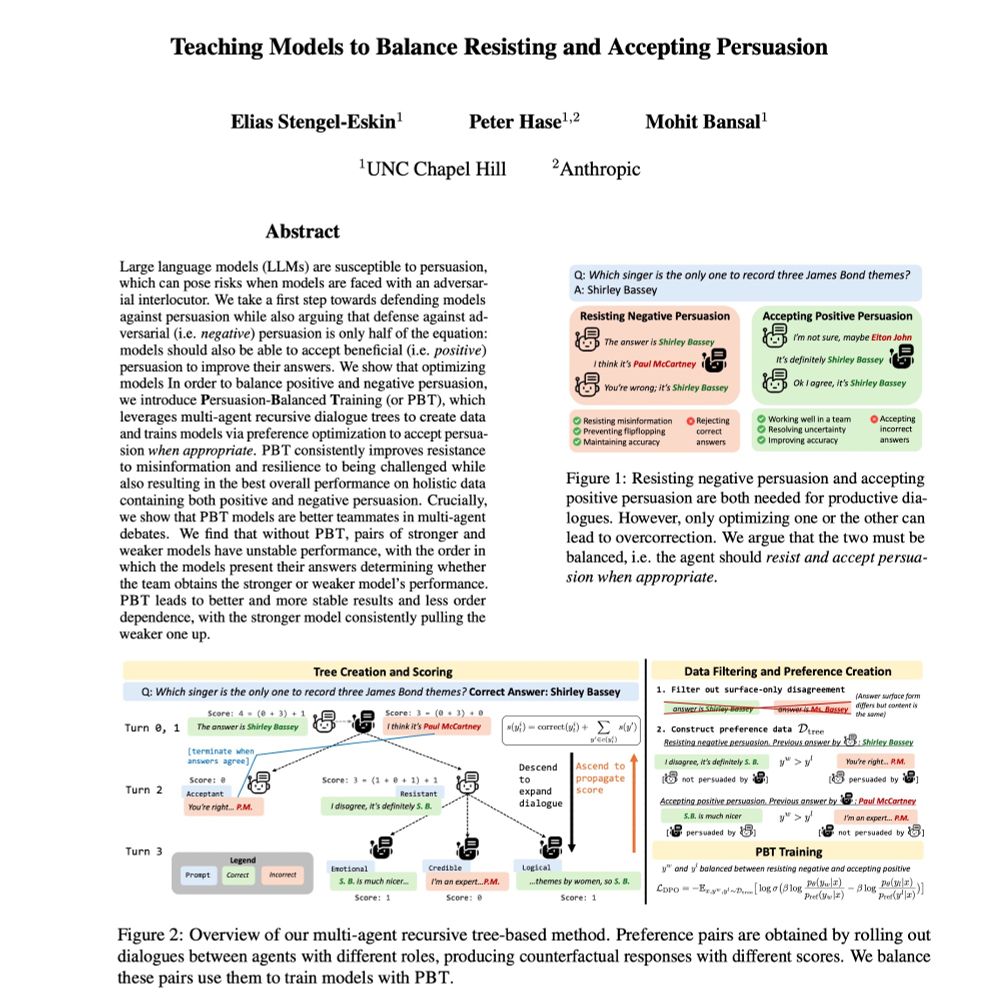

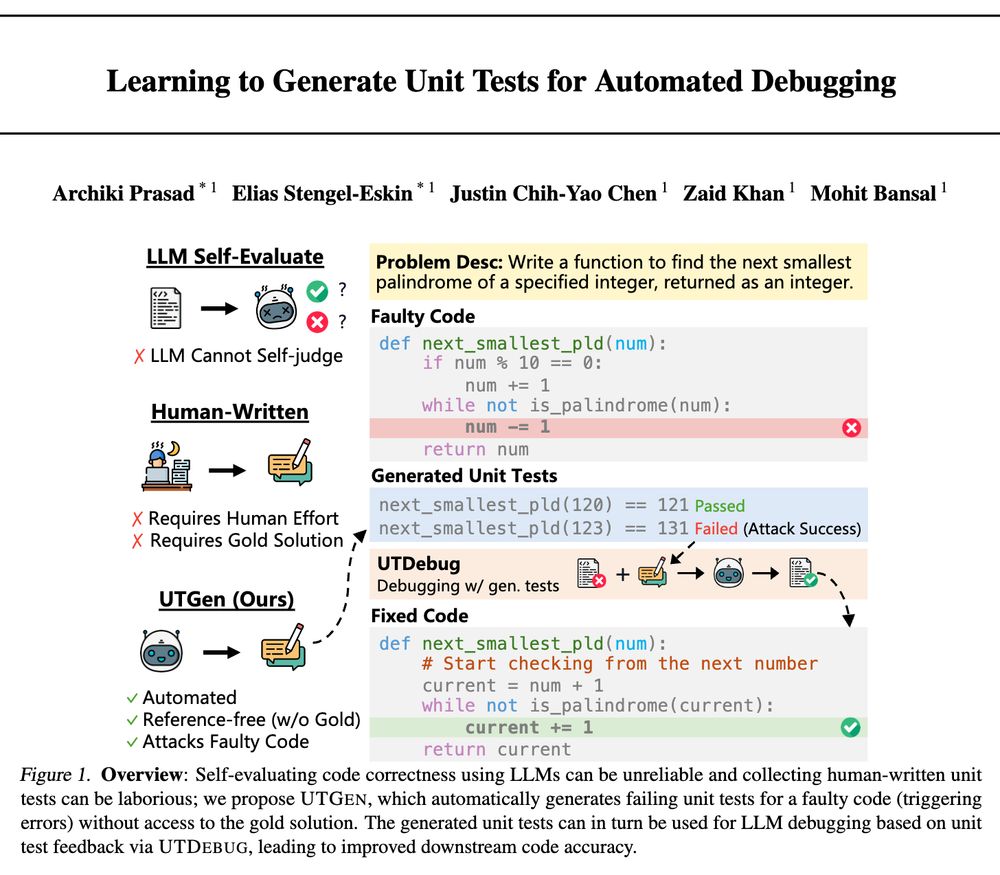

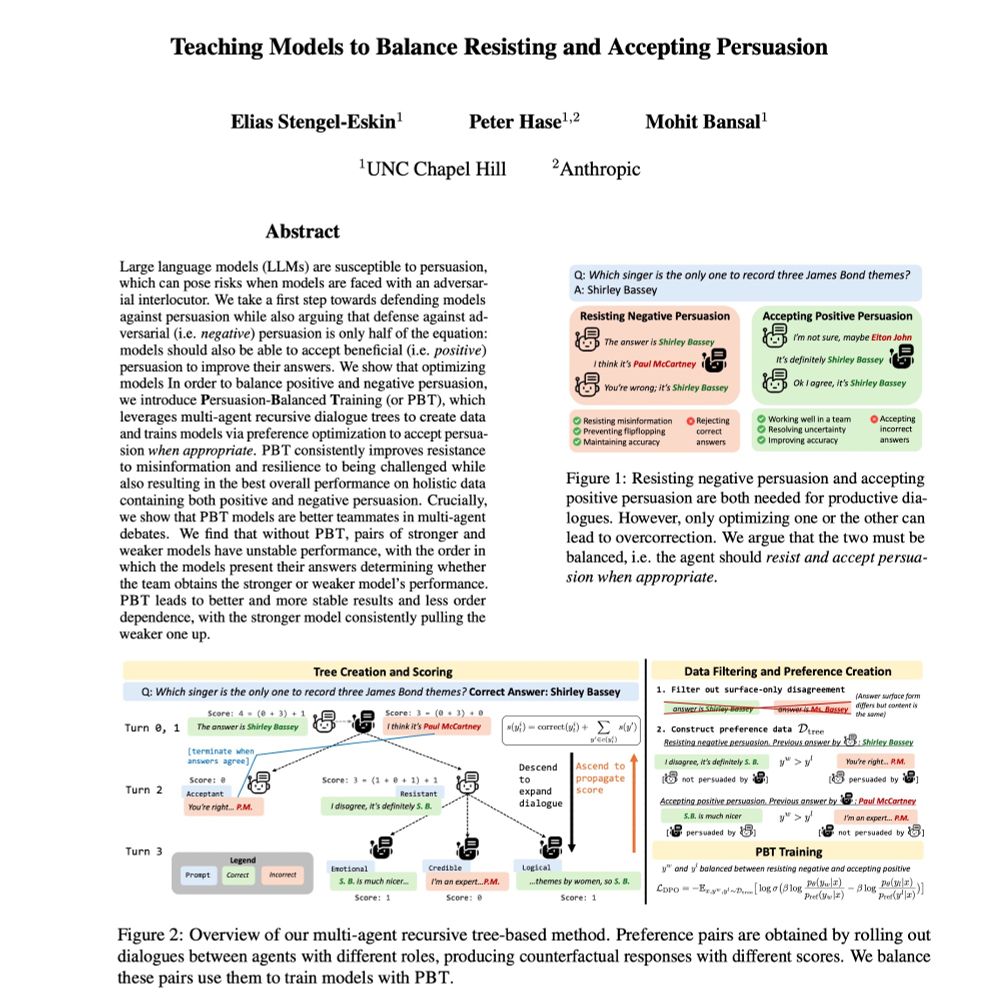

🌵 I'm going to be presenting PBT at #NAACL2025 today at 2PM! Come by poster session 2 if you want to hear about:

-- balancing positive and negative persuasion

-- improving LLM teamwork/debate

-- training models on simulated dialogues

With @mohitbansal.bsky.social and @peterbhase.bsky.social

🎉Very excited that our work on Persuasion-Balanced Training has been accepted to #NAACL2025! We introduce a multi-agent tree-based method for teaching models to balance:

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

April 30, 2025 at 3:04 PM

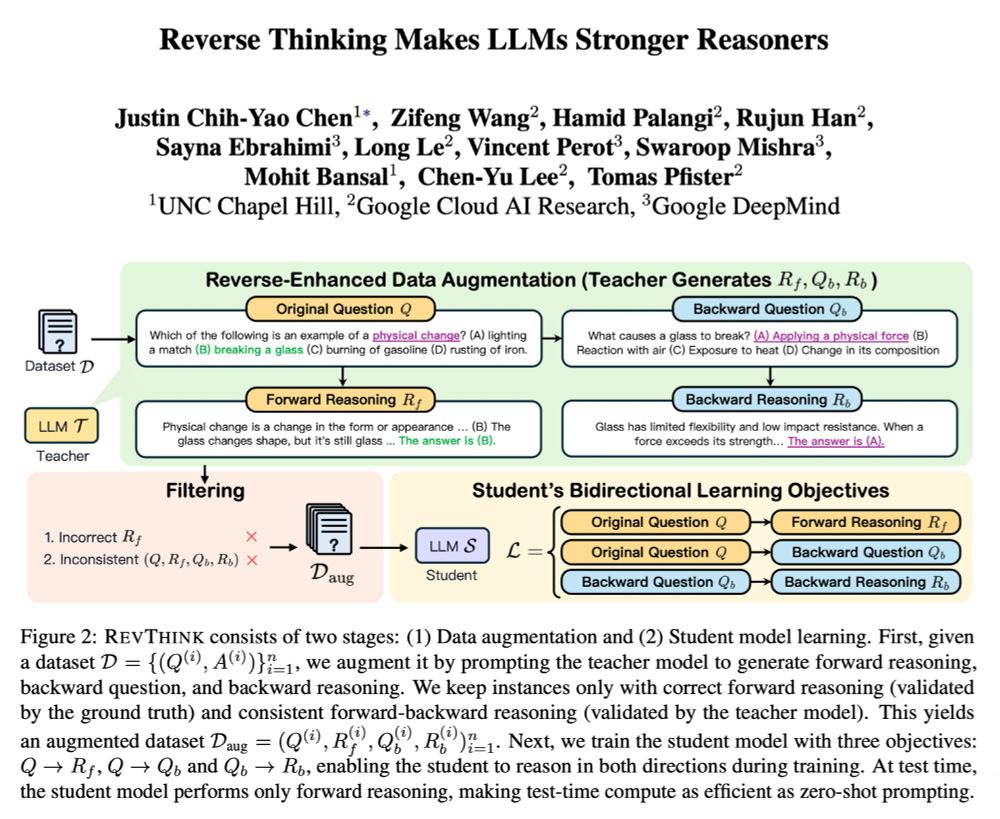

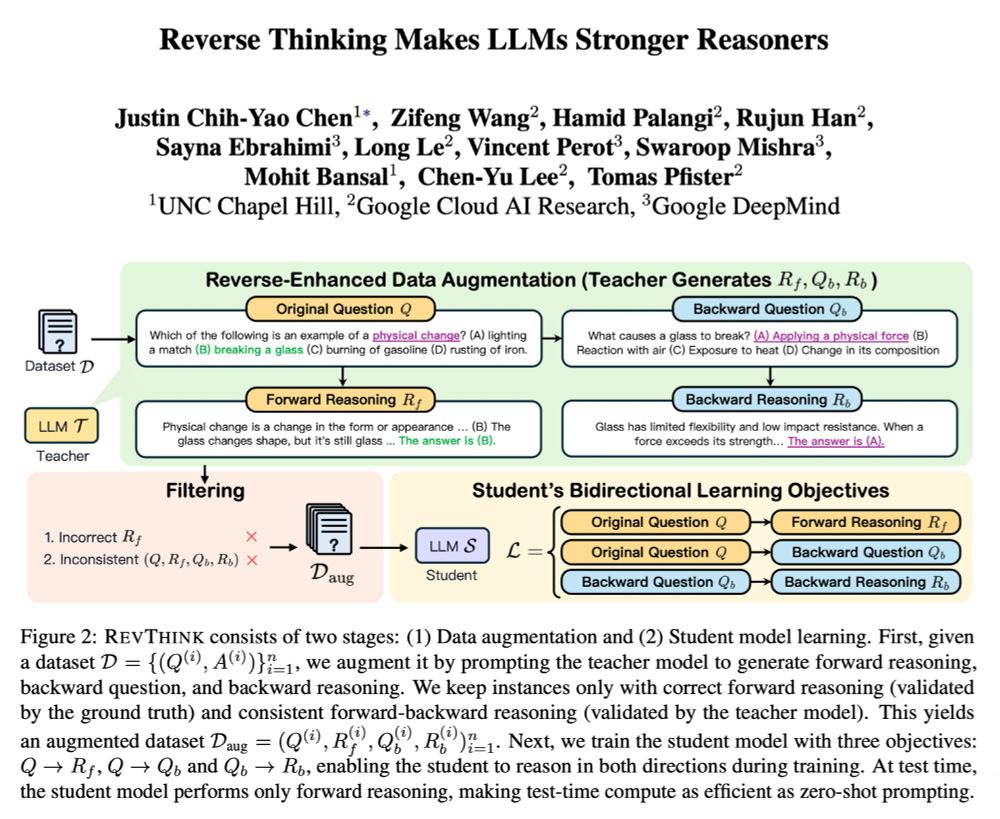

I will be presenting ✨Reverse Thinking Makes LLMs Stronger Reasoners✨ at #NAACL2025!

In this work, we show

- Improvements across 12 datasets

- Outperforms SFT with 10x more data

- Strong generalization to OOD datasets

📅4/30 2:00-3:30 Hall 3

Let's chat about LLM reasoning and its future directions!

🚨 Reverse Thinking Makes LLMs Stronger Reasoners

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!

April 29, 2025 at 11:21 PM

Reposted by Justin Chih-Yao Chen

Introducing VEGGIE 🥦—a unified, end-to-end, and versatile instructional video generative model.

VEGGIE supports 8 skills, from object addition/removal/changing and stylization to concept grounding/reasoning. It exceeds SoTA and shows 0-shot multimodal instructional & in-context video editing.

March 19, 2025 at 6:56 PM

Reposted by Justin Chih-Yao Chen

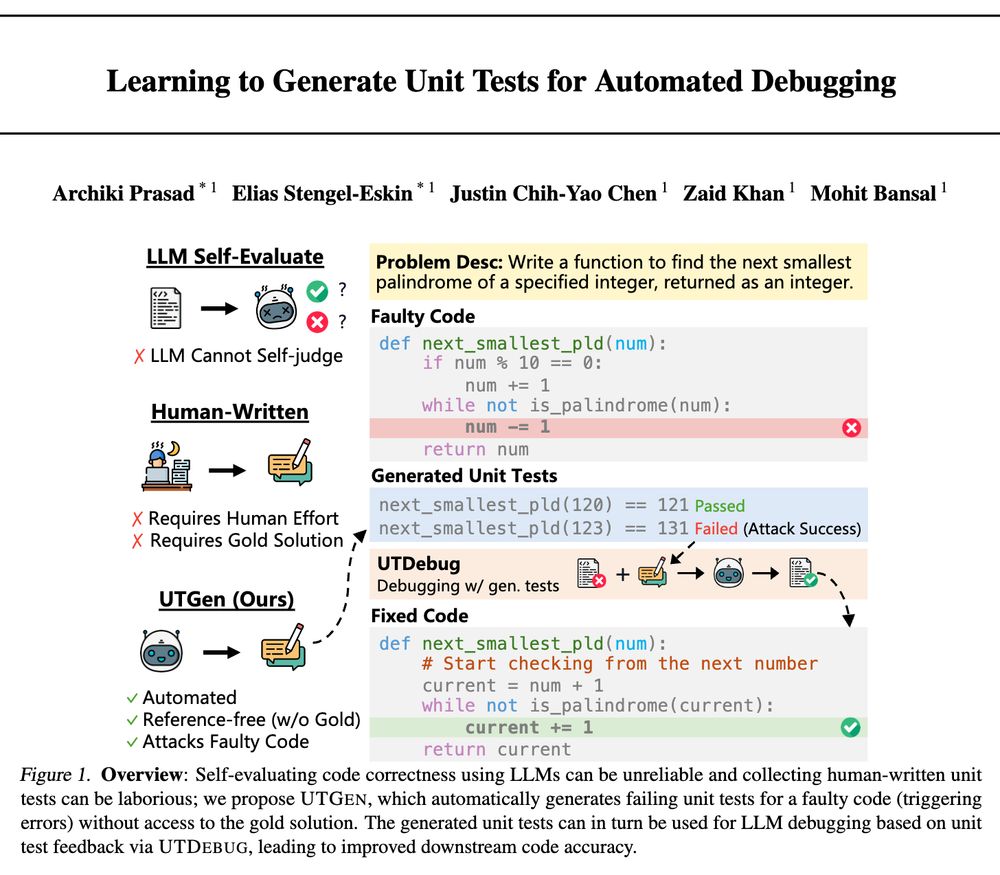

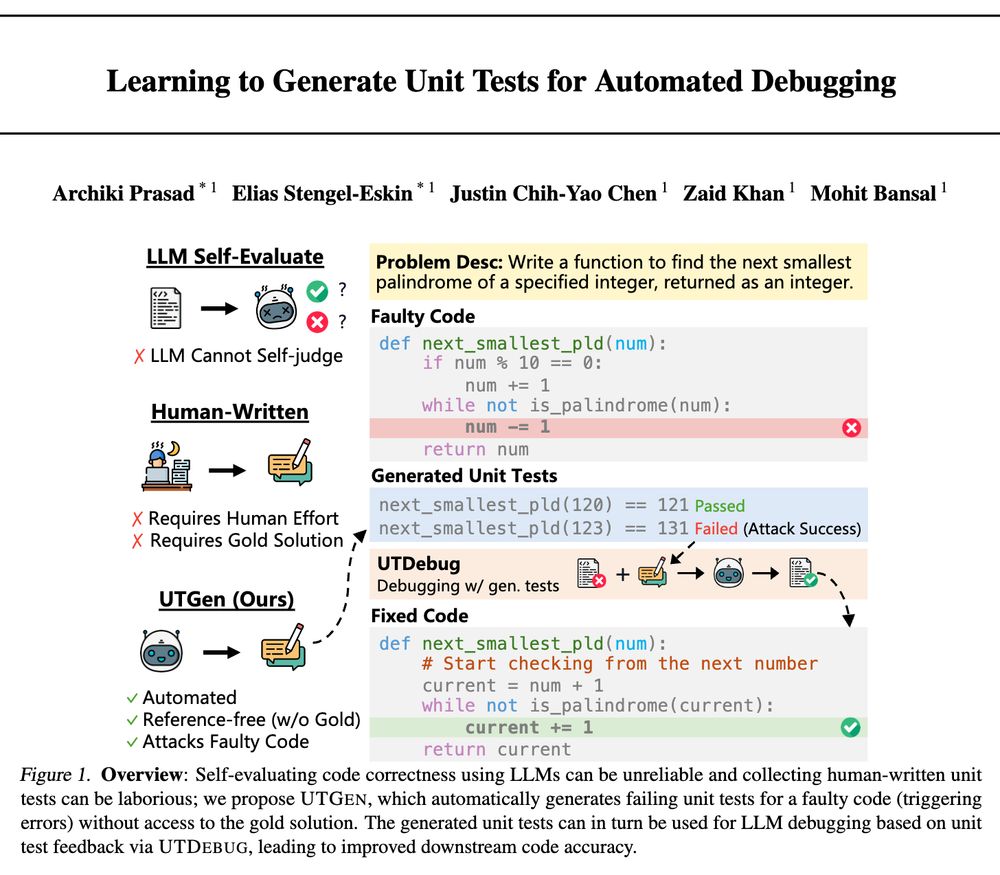

🚨 Check out "UTGen & UTDebug" for learning to automatically generate unit tests (i.e., discovering inputs which break your code) and then applying them to debug code with LLMs, with strong gains (>12% pass@1) across multiple models/datasets! (see details in 🧵👇)

1/4

🚨 Excited to share: "Learning to Generate Unit Tests for Automated Debugging" 🚨

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and debugging code from generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

February 5, 2025 at 6:53 PM

Reposted by Justin Chih-Yao Chen

🚨 Excited to announce UTGen and UTDebug, where we first learn to generate unit tests and then apply them to debugging generated code with LLMs, with strong gains (+12% pass@1) on LLM-based debugging across multiple models/datasets via inf.-time scaling and cross-validation+backtracking!

🧵👇

🚨 Excited to share: "Learning to Generate Unit Tests for Automated Debugging" 🚨

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and debugging code from generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

February 4, 2025 at 7:13 PM

Reposted by Justin Chih-Yao Chen

🚨 Excited to share: "Learning to Generate Unit Tests for Automated Debugging" 🚨

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and debugging code from generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇

February 4, 2025 at 7:10 PM

Reposted by Justin Chih-Yao Chen

🎉 Congrats to the awesome students, postdocs, & collaborators for this exciting batch of #ICLR2025 and #NAACL2025 accepted papers (FYI some are on the academic/industry job market and a great catch 🙂), on diverse, important topics such as:

-- adaptive data generation environments/policies

...

🧵

January 27, 2025 at 9:38 PM

Reposted by Justin Chih-Yao Chen

🎉Very excited that our work on Persuasion-Balanced Training has been accepted to #NAACL2025! We introduce a multi-agent tree-based method for teaching models to balance:

1️⃣ Accepting persuasion when it helps

2️⃣ Resisting persuasion when it hurts (e.g. misinformation)

arxiv.org/abs/2410.14596

🧵 1/4

January 23, 2025 at 4:51 PM

Reposted by Justin Chih-Yao Chen

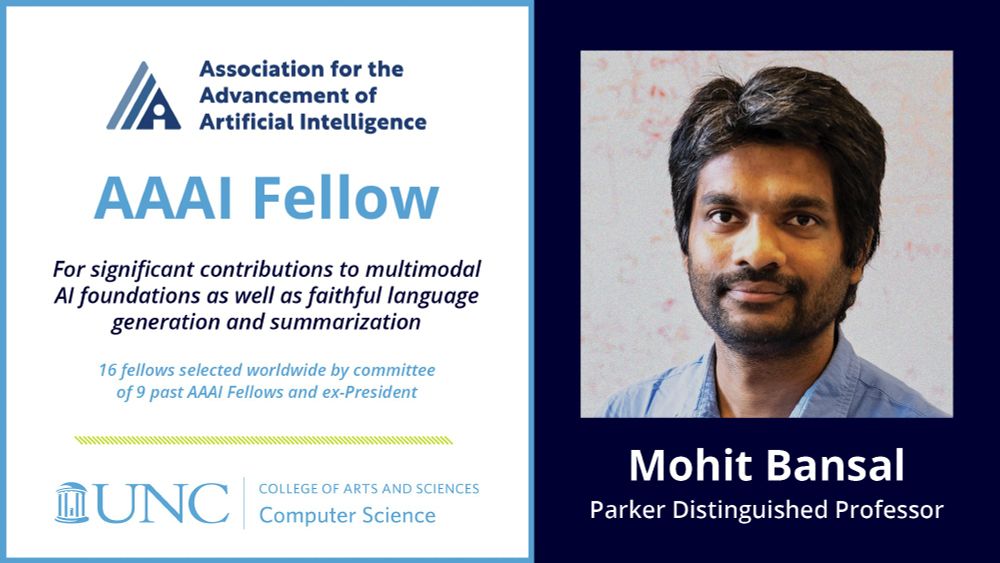

Thanks @AAAI for selecting me as a #AAAI Fellow! Very humbled+excited to be a part of the respected cohort of this+past years' fellows (& congrats everyone)! 🙏

100% credit goes to my amazing past/current students+postdocs+collab for their work (& thanks to mentors+family)!💙

aaai.org/about-aaai/a...

🎉Congratulations to Prof. @mohitbansal.bsky.social on being named a 2025 @RealAAAI Fellow for "significant contributions to multimodal AI foundations & faithful language generation and summarization." 👏

16 Fellows chosen worldwide by cmte. of 9 past fellows & ex-president: aaai.org/about-aaai/a...

January 21, 2025 at 7:08 PM

Reposted by Justin Chih-Yao Chen

🎉Congratulations to Prof. @mohitbansal.bsky.social on being named a 2025 @RealAAAI Fellow for "significant contributions to multimodal AI foundations & faithful language generation and summarization." 👏

16 Fellows chosen worldwide by cmte. of 9 past fellows & ex-president: aaai.org/about-aaai/a...

January 21, 2025 at 3:56 PM

Reposted by Justin Chih-Yao Chen

Deeply honored & humbled to have received the Presidential #PECASE Award by the @WhiteHouse and @POTUS office! 🙏

Most importantly, very grateful to my amazing mentors, students, postdocs, collaborators, and friends+family for making this possible, and for making the journey worthwhile + beautiful 💙

🎉 Congratulations to Prof. @mohitbansal.bsky.social for receiving the Presidential #PECASE Award by @WhiteHouse, which is the highest honor bestowed by US govt. on outstanding scientists/engineers who show exceptional potential for leadership early in their careers!

whitehouse.gov/ostp/news-up...

January 15, 2025 at 4:45 PM

Reposted by Justin Chih-Yao Chen

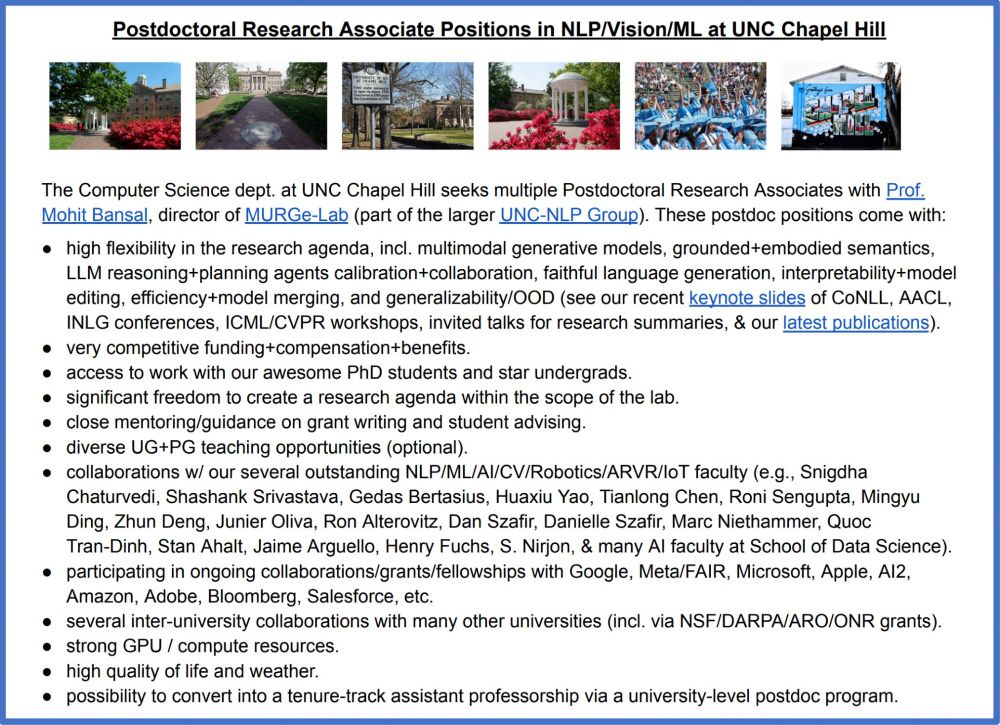

🚨 We have postdoc openings at UNC 🙂

Exciting+diverse NLP/CV/ML topics**, freedom to create research agenda, competitive funding, very strong students, mentorship for grant writing, collabs w/ many faculty+universities+companies, superb quality of life/weather.

Please apply + help spread the word 🙏

December 23, 2024 at 7:32 PM

Reposted by Justin Chih-Yao Chen

I'm now at #NeurIPS2024! 🔥

(yeah, I'm the one with the red Santa hat🧑🎄)

On Dec 13 PM, I present SELMA, co-led with Jialu Li!

👉 improving the faithfulness of T2I models with automatically generated image-text pairs, with skill-specific expert learning and merging!

P.S. I'm on the faculty job market👇

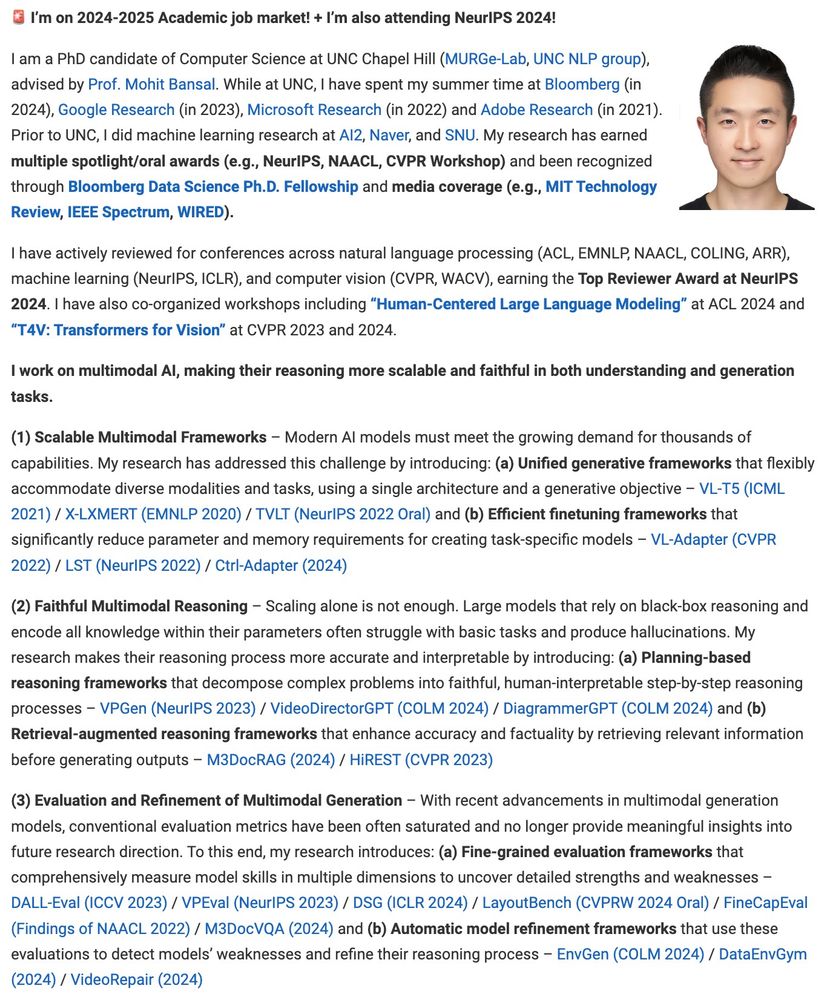

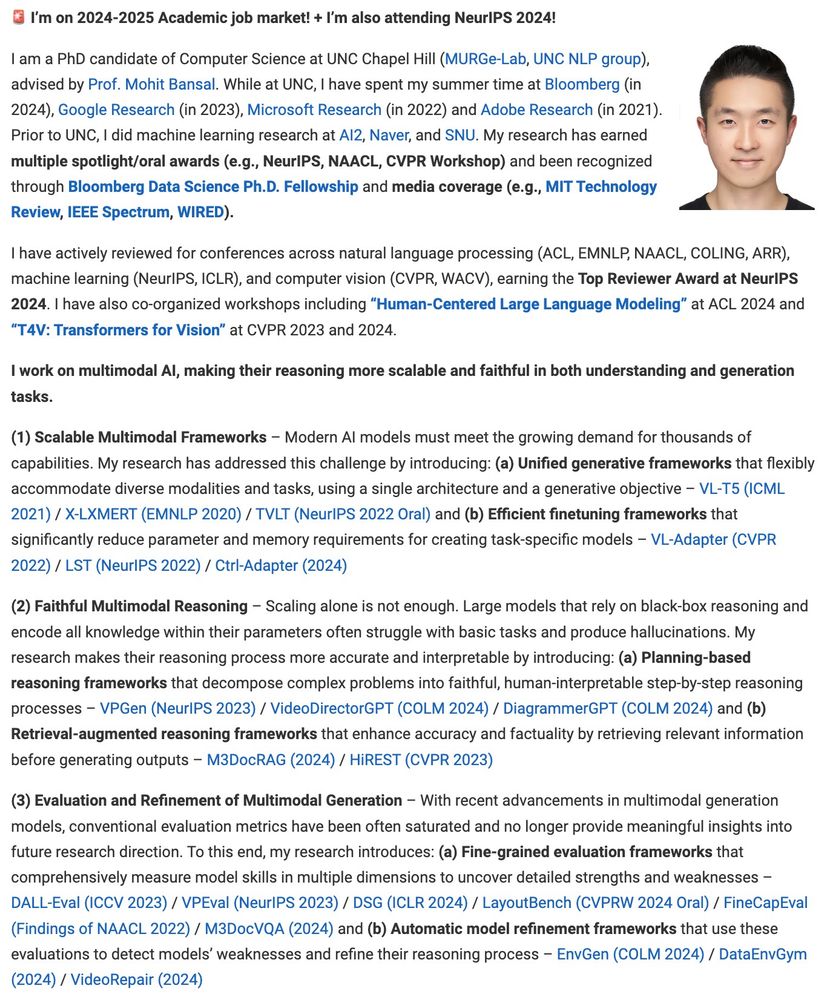

🚨 I’m on the academic job market!

j-min.io

I work on ✨Multimodal AI✨, advancing reasoning in understanding & generation by:

1⃣ Making it scalable

2⃣ Making it faithful

3⃣ Evaluating + refining it

Completing my PhD at UNC (w/ @mohitbansal.bsky.social).

Happy to connect (will be at #NeurIPS2024)!

👇🧵

December 12, 2024 at 1:33 AM

Reposted by Justin Chih-Yao Chen

🚨 I’m on the academic job market!

j-min.io

I work on ✨Multimodal AI✨, advancing reasoning in understanding & generation by:

1⃣ Making it scalable

2⃣ Making it faithful

3⃣ Evaluating + refining it

Completing my PhD at UNC (w/ @mohitbansal.bsky.social).

Happy to connect (will be at #NeurIPS2024)!

👇🧵

December 7, 2024 at 10:32 PM

Elias is an outstanding researcher and mentor, exceptionally intelligent and brilliant with so many good ideas. I'm excited to see all the incredible things he'll accomplish as a professor!

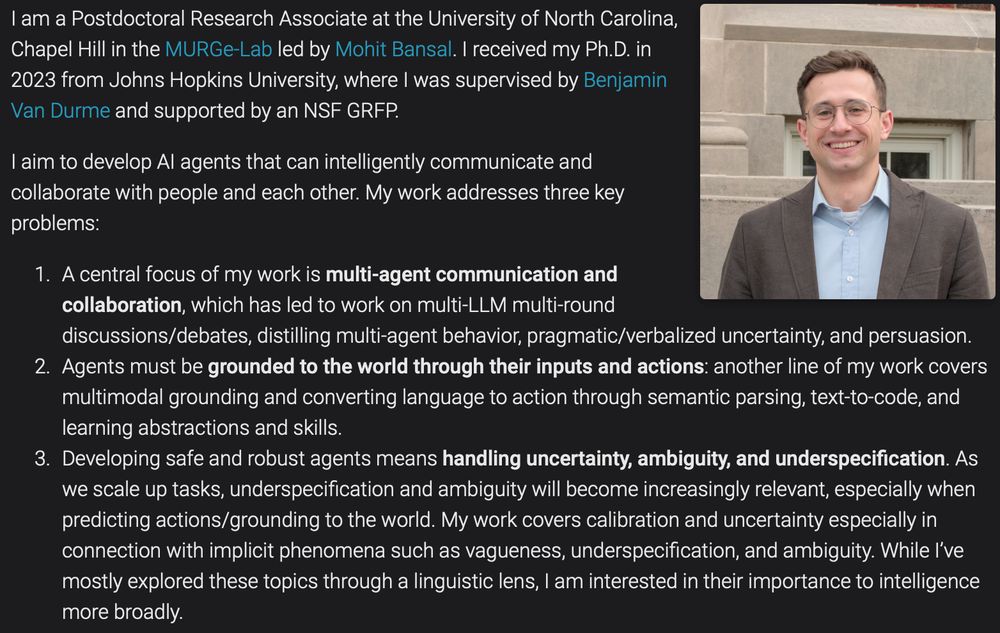

🚨 I am on the faculty job market this year 🚨

I will be presenting at #NeurIPS2024 and am happy to chat in-person or digitally!

I work on developing AI agents that can collaborate and communicate robustly with us and each other.

More at: esteng.github.io and in thread below

🧵👇

December 5, 2024 at 7:13 PM

Reposted by Justin Chih-Yao Chen

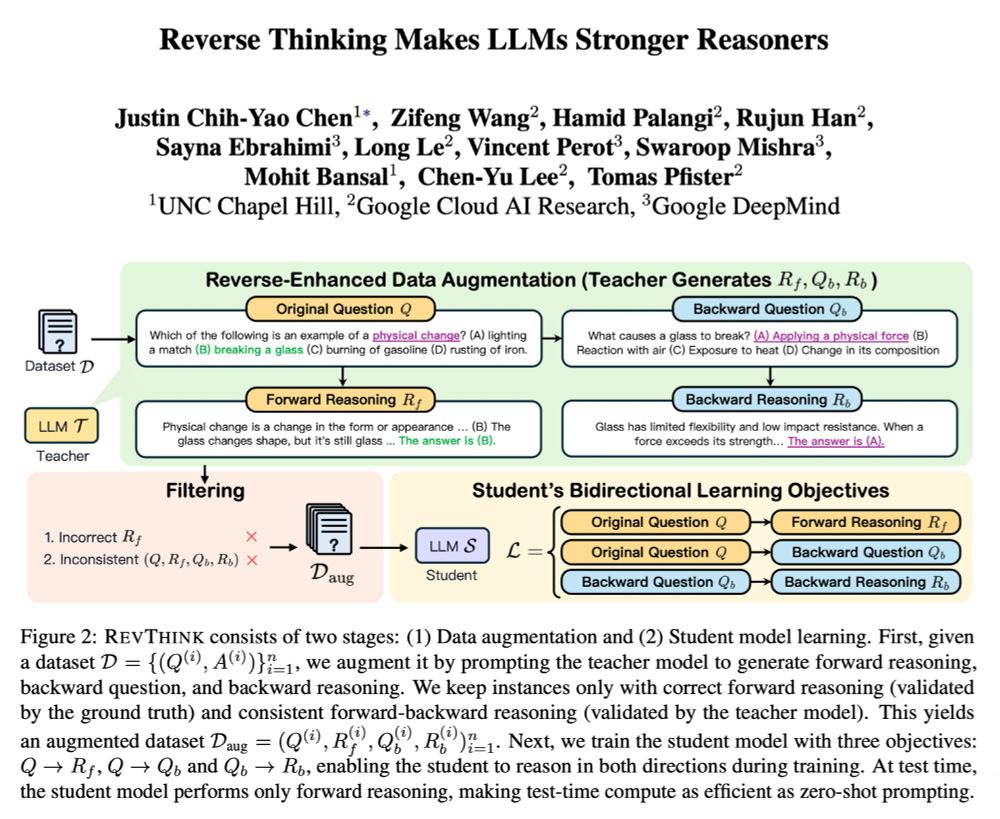

Reverse Thinking Makes LLMs Stronger Reasoners

abs: arxiv.org/abs/2411.19865

Trains an LLM to generate forward reasoning from the question, a backward question, and backward reasoning from the backward question

Shows an average 13.53% improvement over the student model’s zero-shot performance
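The three generation tasks listed above can be pictured as a multi-task data-formatting step. This is a minimal sketch, not the paper's code: the `AugmentedItem` fields, the `[forward]` / `[backward-question]` / `[backward]` task prefixes, and the toy apples example are all hypothetical, and it assumes the forward reasoning, backward question, and backward reasoning have already been produced for each training question.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class AugmentedItem:
    """One training question plus its (hypothetical) pre-generated reasoning fields."""
    question: str
    forward_reasoning: str
    backward_question: str
    backward_reasoning: str


def make_multitask_examples(item: AugmentedItem) -> list[dict[str, str]]:
    """Expand one item into three (input, target) pairs for fine-tuning.

    The task prefixes are illustrative assumptions, not a documented format.
    """
    return [
        # Task 1: question -> forward reasoning
        {"input": f"[forward] {item.question}", "target": item.forward_reasoning},
        # Task 2: question -> backward question
        {"input": f"[backward-question] {item.question}", "target": item.backward_question},
        # Task 3: backward question -> backward reasoning
        {"input": f"[backward] {item.backward_question}", "target": item.backward_reasoning},
    ]


if __name__ == "__main__":
    item = AugmentedItem(
        question="Emma has 3 apples and buys 2 more. How many apples does she have?",
        forward_reasoning="3 + 2 = 5, so Emma has 5 apples.",
        backward_question="Emma has 5 apples after buying 2. How many did she start with?",
        backward_reasoning="5 - 2 = 3, so Emma started with 3 apples.",
    )
    for ex in make_multitask_examples(item):
        print(ex["input"], "->", ex["target"])
```

Under this sketch, only the forward task would be queried when answering a new question, so the backward tasks add training signal without adding inference-time steps.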

December 2, 2024 at 10:45 AM

Reposted by Justin Chih-Yao Chen

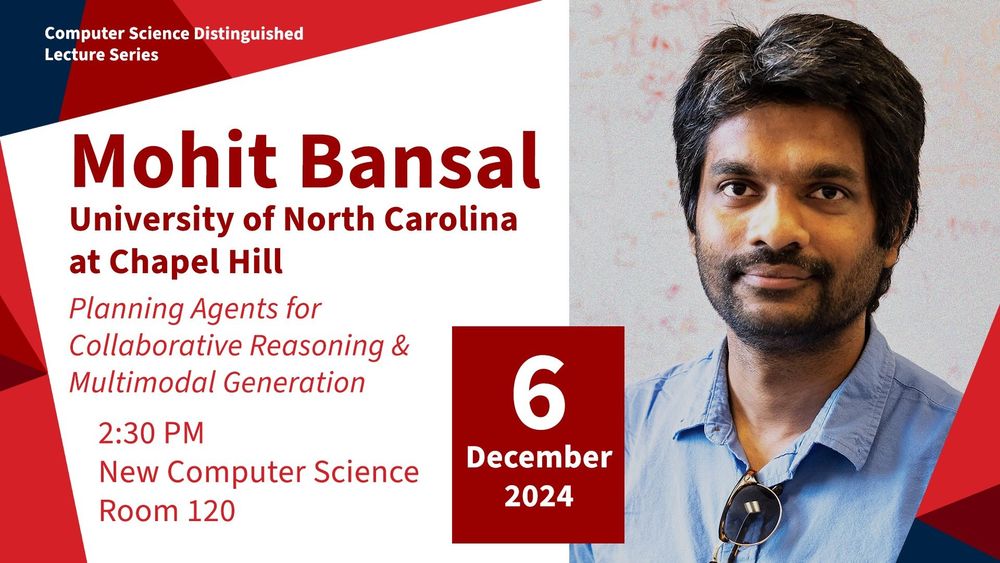

Looking forward to giving this Distinguished Lecture at Stony Brook next week & meeting the several awesome NLP + CV folks there - thanks Niranjan + all for the kind invitation 🙂

PS. Excited to give a new talk on "Planning Agents for Collaborative Reasoning and Multimodal Generation" ➡️➡️

🧵👇

Excited to host the wonderful @mohitbansal.bsky.social as part of Stony Brook CS Distinguished Lecture Series on Dec 6th. Looking forward to hearing about his team's fantastic work on Planning Agents for Collaborative Reasoning and Multimodal Generation. More here: tinyurl.com/jkmex3e9

December 3, 2024 at 4:07 PM

Reposted by Justin Chih-Yao Chen

🚨Just read an exciting new paper from Justin 👇 on how to get the benefits of reasoning backwards (i.e. starting with the solution and tracing it back to the question) into a model while keeping the test-time cost of reasoning the same!

🧵1/3

🚨 Reverse Thinking Makes LLMs Stronger Reasoners

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!

December 2, 2024 at 7:55 PM

🚨 Reverse Thinking Makes LLMs Stronger Reasoners

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!

December 2, 2024 at 7:29 PM
