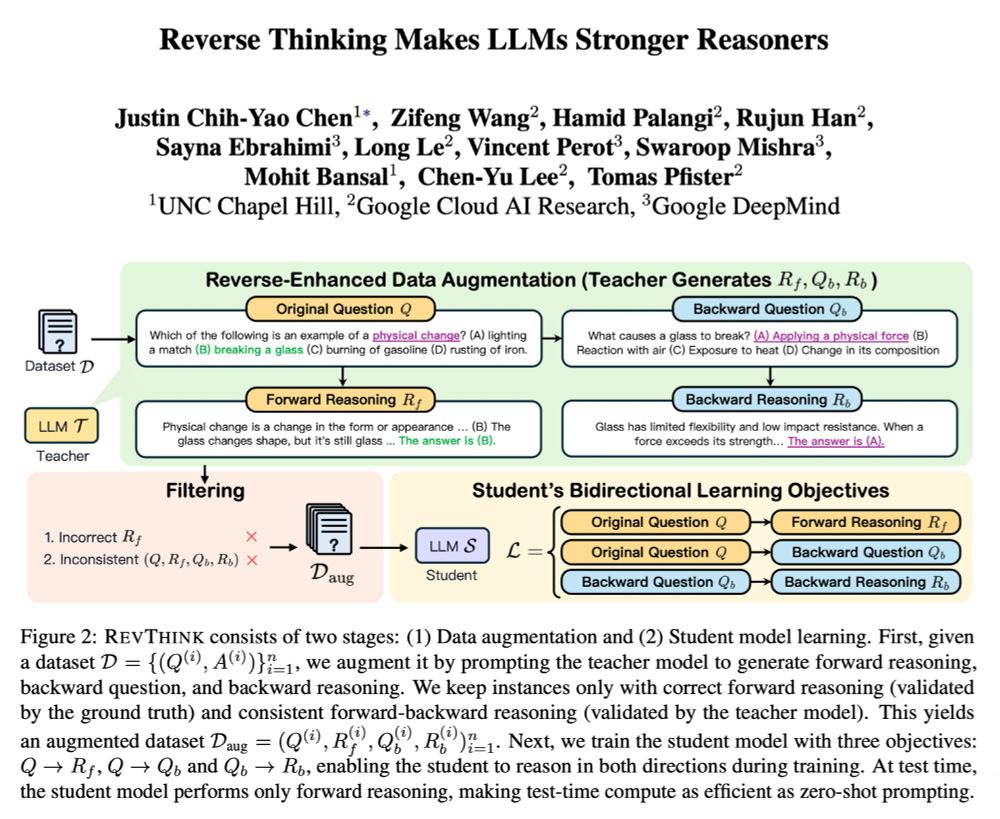

1. Given Q, generate forward reasoning

2. Given Q, generate backward Q

3. Given backward Q, generate backward reasoning

The student learns bidirectional reasoning during training. At test time, the student only does forward reasoning (as efficient as zero-shot prompting).
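The three training tasks above can be sketched as a small data-preparation step. This is only an illustration, not RevThink's actual code: the `Sample` class, the bracketed task tags, and the toy apples example are all hypothetical, and the sketch assumes the forward reasoning, backward question, and backward reasoning have already been generated for each question.

```python
from dataclasses import dataclass


@dataclass
class Sample:
    """One augmented training example (field names are hypothetical)."""
    question: str
    forward_reasoning: str
    backward_question: str
    backward_reasoning: str


def build_training_pairs(sample: Sample) -> list[tuple[str, str]]:
    """Return (input, target) pairs for the three training tasks.

    The bracketed task tags are an assumption made for this sketch;
    any prompt format that distinguishes the tasks would do.
    """
    return [
        # 1. Given Q, generate forward reasoning
        (f"[forward] {sample.question}", sample.forward_reasoning),
        # 2. Given Q, generate backward Q
        (f"[backward-question] {sample.question}", sample.backward_question),
        # 3. Given backward Q, generate backward reasoning
        (f"[backward] {sample.backward_question}", sample.backward_reasoning),
    ]


def build_test_prompt(question: str) -> str:
    """At test time only the forward task is used, so cost matches zero-shot."""
    return f"[forward] {question}"


# Toy example (made-up data, for illustration only).
sample = Sample(
    question="Emma has 2 apples and Jack has 3. How many do they have together?",
    forward_reasoning="2 + 3 = 5. The answer is 5.",
    backward_question="Emma and Jack have 5 apples together. Emma has 2. How many does Jack have?",
    backward_reasoning="5 - 2 = 3. The answer is 3.",
)
pairs = build_training_pairs(sample)
test_prompt = build_test_prompt(sample.question)
```

Each question contributes three training pairs, while inference issues a single forward prompt per question, which is why the test-time cost stays the same as zero-shot prompting.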

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!