https://yui010206.github.io/

We present UnLOK-VQA, a benchmark to evaluate unlearning in vision-and-language models, where both images and text may encode sensitive or private information.

Check out our 3 papers:

☕️CREMA: Video-language + any modality reasoning

🛡️SAFREE: A training-free concept guard for any visual diffusion models

🧭SRDF: Human-level VL-navigation via self-refined data loop

feel free to DM me to grab a coffee&citywalk together 😉

Also meet our awesome students/postdocs/collaborators presenting their work.

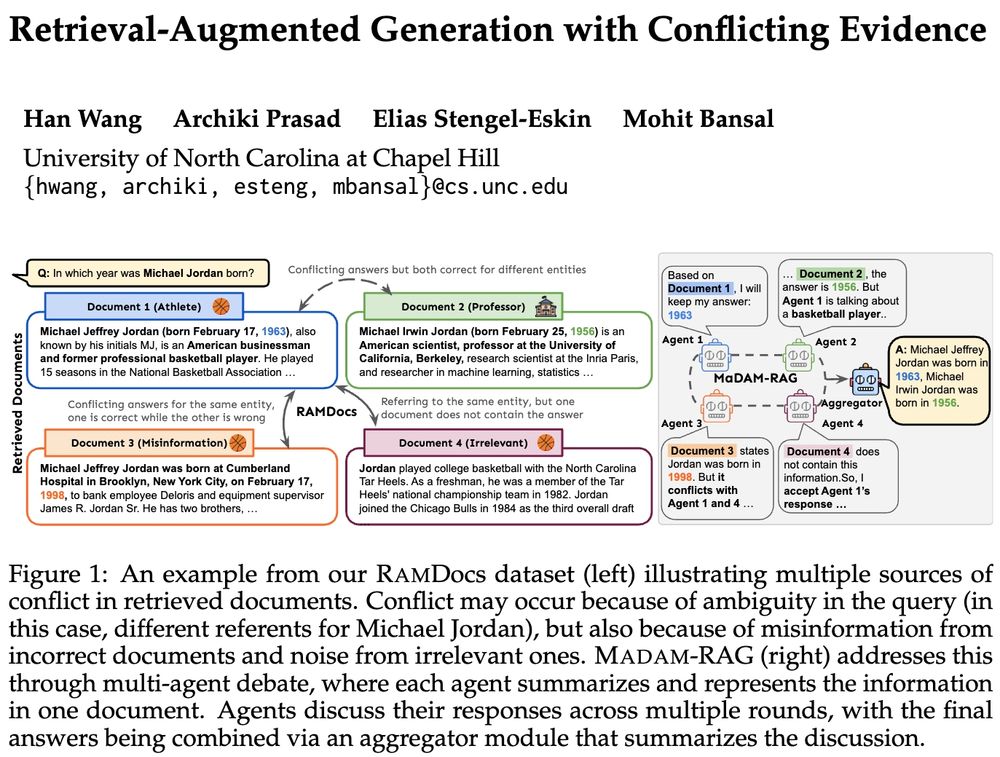

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

📃 arxiv.org/abs/2504.07389

VEGGIE supports 8 skills, from object addition/removal/changing, and stylization to concept grounding/reasoning. It exceeds SoTA and shows 0-shot multimodal instructional & in-context video editing.

-- adaptive data generation environments/policies

...

🧵

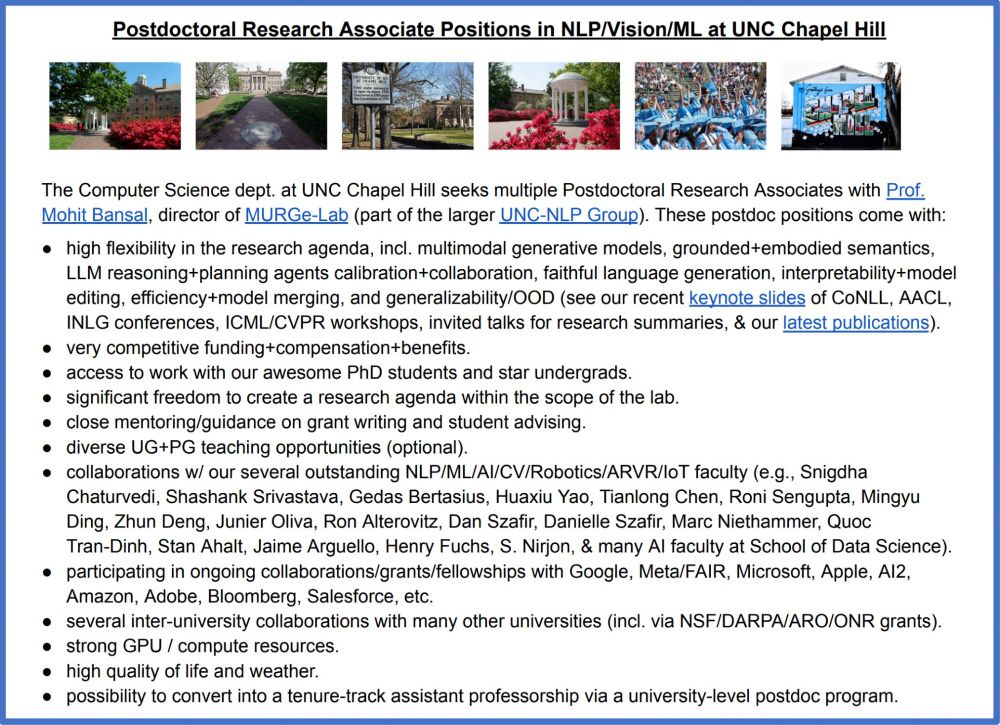

Exciting+diverse NLP/CV/ML topics**, freedom to create research agenda, competitive funding, very strong students, mentorship for grant writing, collabs w/ many faculty+universities+companies, superb quality of life/weather.

Please apply + help spread the word 🙏

j-min.io

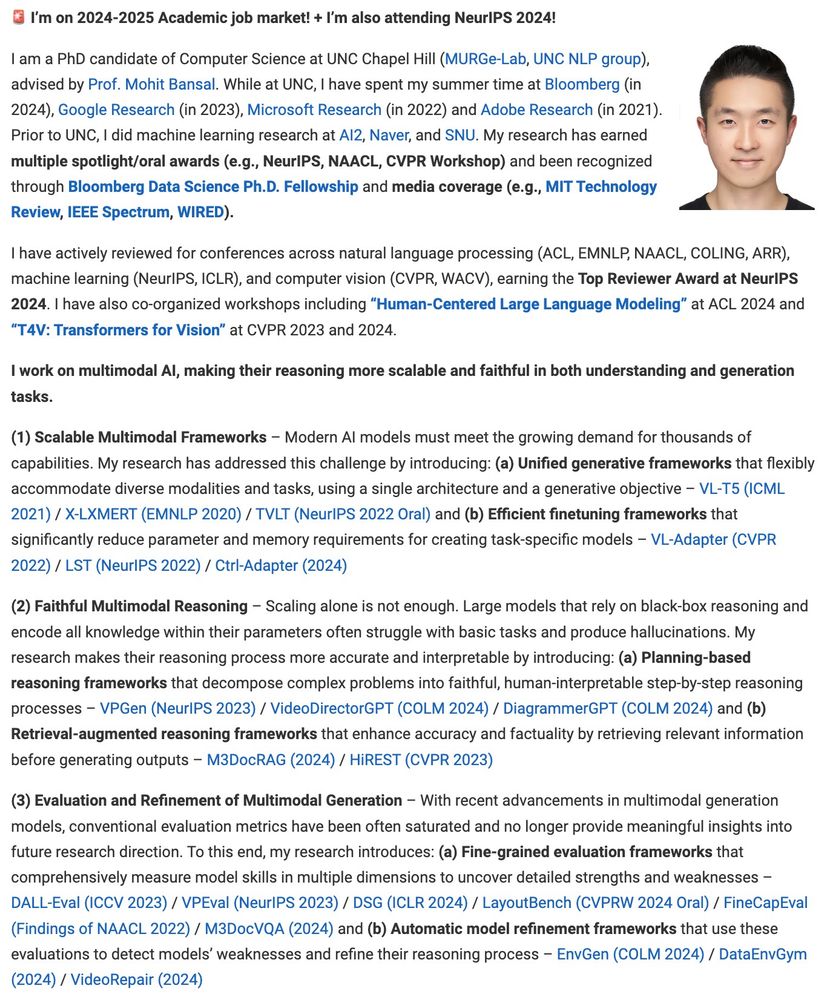

I work on ✨Multimodal AI✨, advancing reasoning in understanding & generation by:

1⃣ Making it scalable

2⃣ Making it faithful

3⃣ Evaluating + refining it

Completing my PhD at UNC (w/ @mohitbansal.bsky.social).

Happy to connect (will be at #NeurIPS2024)!

👇🧵

I will be presenting at #NeurIPS2024 and am happy to chat in-person or digitally!

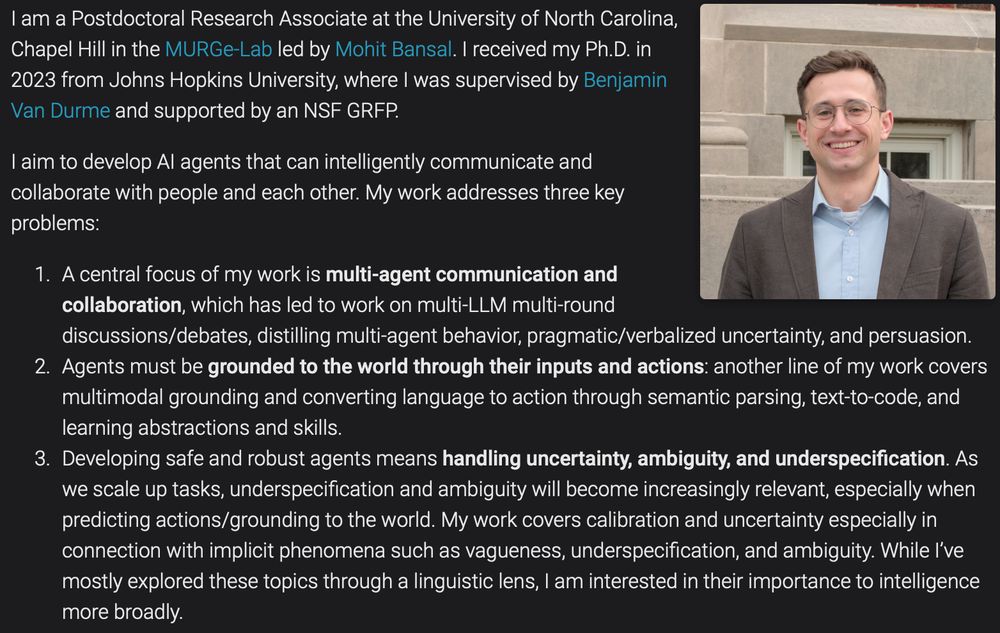

I work on developing AI agents that can collaborate and communicate robustly with us and each other.

More at: esteng.github.io and in thread below

🧵👇

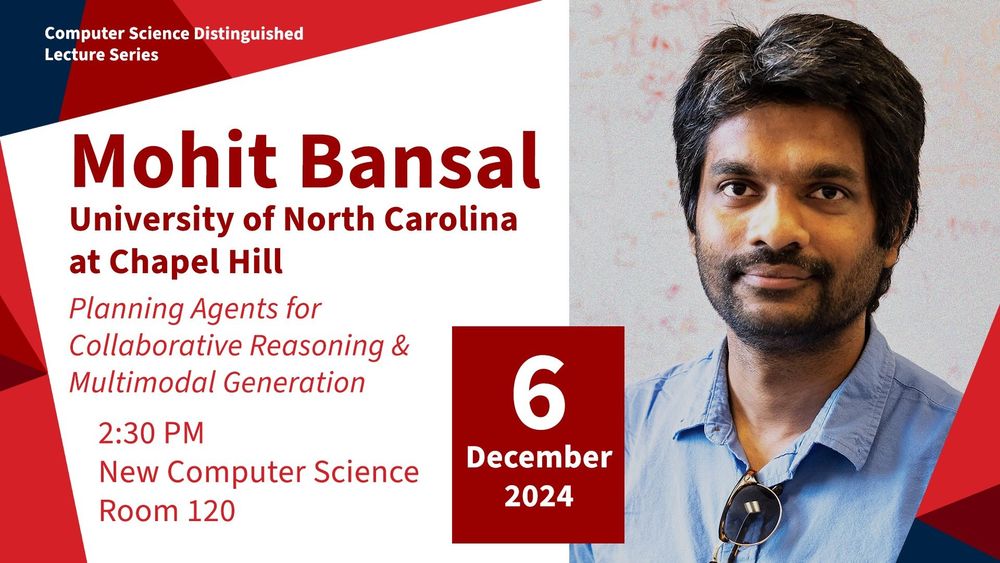

PS. Excited to give a new talk on "Planning Agents for Collaborative Reasoning and Multimodal Generation" ➡️➡️

🧵👇

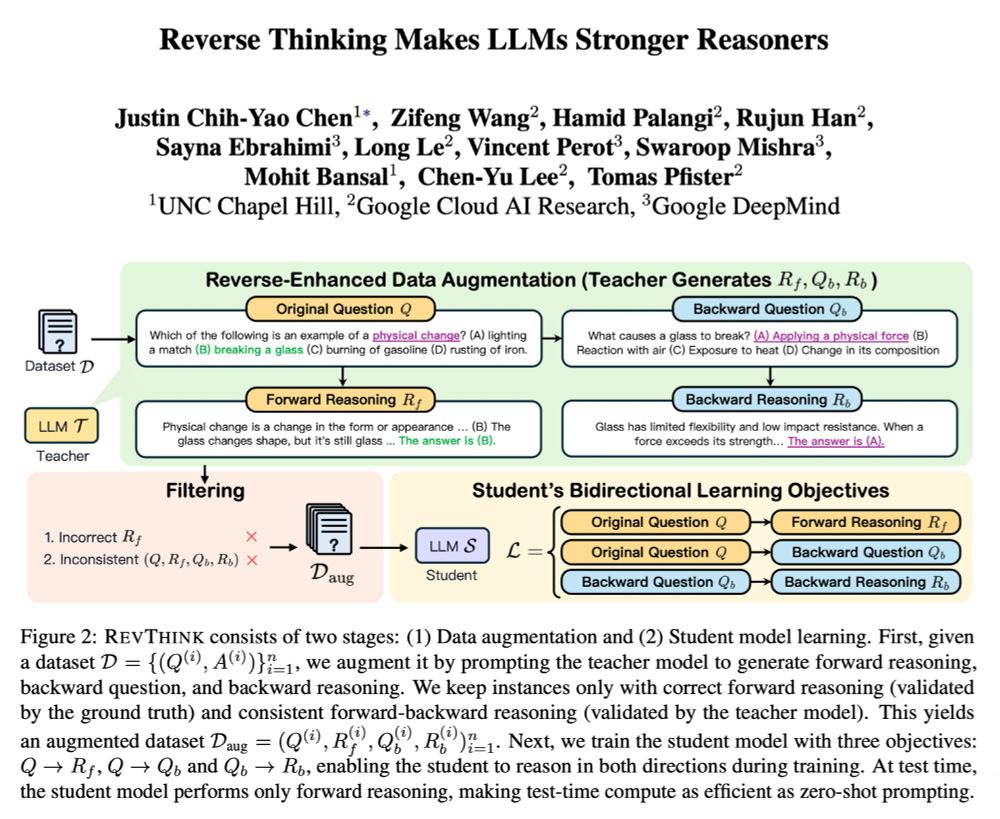

We can often reason from a problem to a solution and also in reverse to enhance our overall reasoning. RevThink shows that LLMs can also benefit from reverse thinking 👉 13.53% gains + sample efficiency + strong generalization (on 4 OOD datasets)!
