➡️ As document support becomes imbalanced, baselines ignore under-supported correct answers but MADAM-RAG maintains stable performance

➡️ As misinformation 📈, baselines degrade sharply (−46%) but MADAM-RAG remains more robust

Increasing debate rounds in MADAM-RAG improves performance by allowing agents to refine answers via debate.

The aggregator provides even greater gains, especially in early rounds, aligning conflicting views & suppressing misinfo.

MADAM-RAG consistently outperforms concatenated-prompt and Astute RAG baselines across all three datasets and model backbones.

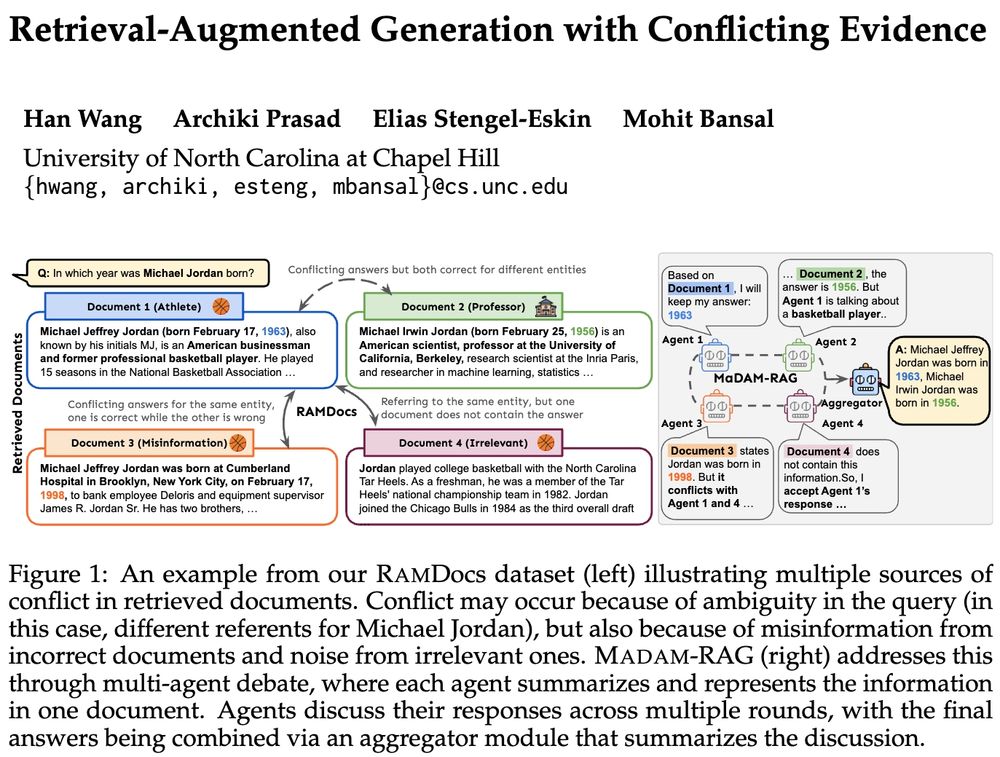

1️⃣ Independent LLM agents - generate intermediate response conditioned on a single doc

2️⃣ Centralized aggregator

3️⃣ Iterative multi-round debate
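The three components above can be sketched roughly as follows. This is a minimal illustration, not the authors' implementation: `debate`, `toy_agent`, and `toy_aggregator` are hypothetical names, the toy functions stand in for LLM calls, and stopping early when no agent changes its answer is an assumption.

```python
from typing import Callable, List, Optional

# Hypothetical signatures (assumptions, not from the paper):
#   agent(query, doc, feedback) -> answer based on ONE document
#   aggregator(query, responses) -> combined summary/answer
Agent = Callable[[str, str, str], str]
Aggregator = Callable[[str, List[str]], str]


def debate(query: str, docs: List[str], agent: Agent,
           aggregator: Aggregator, max_rounds: int = 3) -> str:
    summary = ""
    previous: Optional[List[str]] = None
    for _ in range(max_rounds):
        # 1) one independent agent per document, each seeing last round's summary
        responses = [agent(query, doc, summary) for doc in docs]
        # 2) centralized aggregator combines the per-document responses
        summary = aggregator(query, responses)
        # 3) iterate; assumed stopping rule: no agent changed its answer
        if responses == previous:
            break
        previous = responses
    return summary


def toy_agent(query: str, doc: str, feedback: str) -> str:
    # Stand-in for an LLM call: report what this single document claims.
    return doc.split(":", 1)[1].strip()


def toy_aggregator(query: str, responses: List[str]) -> str:
    # Stand-in for an LLM call: keep every distinct answer, sorted.
    return "; ".join(sorted(set(responses)))


docs = ["doc1: Paris", "doc2: Paris", "doc3: Lyon"]
print(debate("toy query", docs, toy_agent, toy_aggregator))
```

Because each agent sees only one document, an answer backed by a single source still reaches the aggregator instead of being drowned out in one concatenated prompt.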

➡️ Ambiguous queries w/ multiple valid ans.

➡️ Imbalanced document support (some answers backed by many sources, others by fewer)

➡️ Docs w/ misinformation (plausible but wrong claims) or noisy/irrelevant content

➡️RAMDocs: challenging dataset w/ ambiguity, misinformation & noise

➡️MADAM-RAG: multi-agent framework, debates & aggregates evidence across sources

🧵⬇️

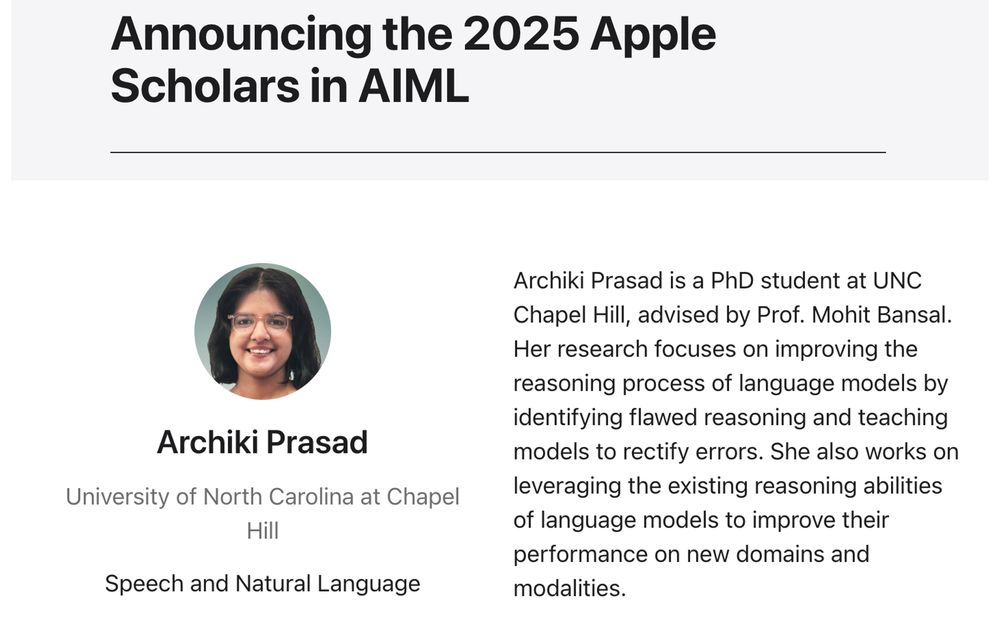

Huge shoutout to my advisor @mohitbansal.bsky.social, & many thanks to my lab mates @unccs.bsky.social, past collaborators + internship advisors for their support ☺️🙏

machinelearning.apple.com/updates/appl...

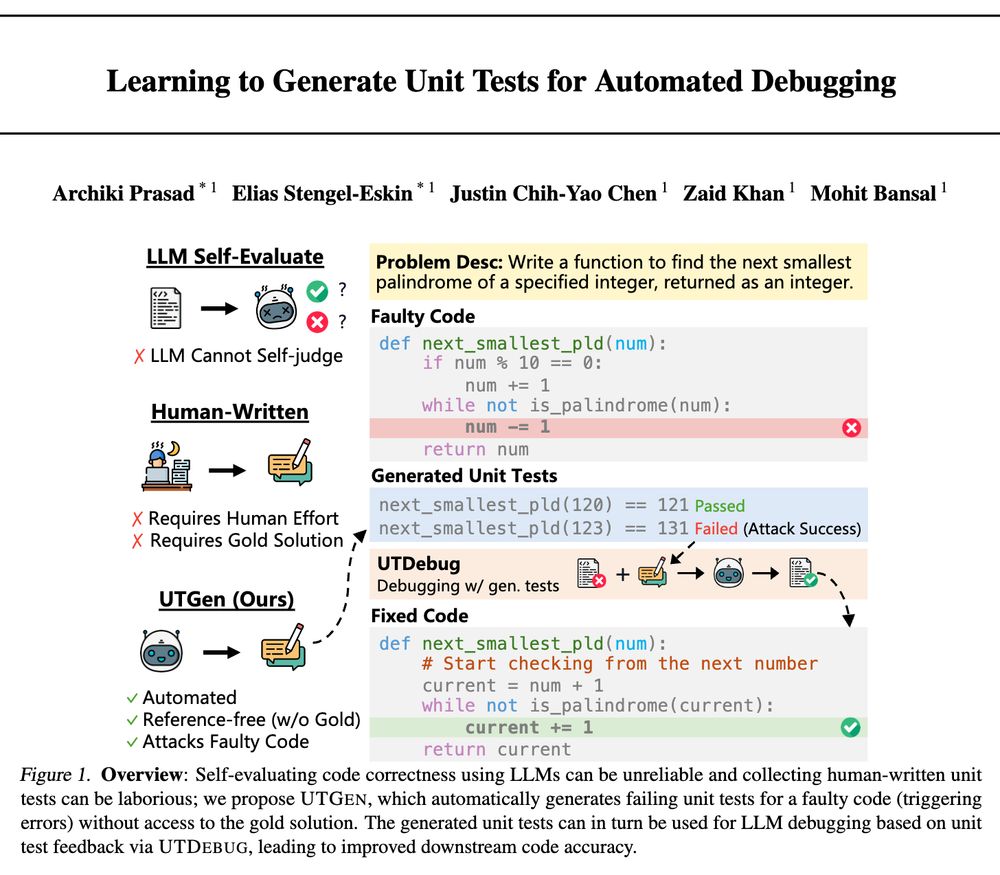

For partially correct code with subtle errors (our MBPP+Fix hard split) debugging with UTGen improves over baselines by >12.35% on Qwen 2.5!

1⃣Test-time scaling (self-consistency over multiple samples) for increasing output acc.

2⃣Validation & Backtracking: Generating multiple UTs to perform validation, accept edits only when the overall pass rate increases & backtrack otherwise
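A rough sketch of the two ideas above, under stated assumptions: `majority_output`, `pass_rate`, and `debug_with_backtracking` are hypothetical helpers, programs are plain Python callables, and `propose_edit` stands in for an LLM proposing a fix. It is not the UTDebug implementation.

```python
from collections import Counter
from typing import Callable, List, Tuple

UnitTest = Tuple[tuple, object]  # (input args, expected output)


def majority_output(samples: List[object]) -> object:
    """Self-consistency: keep the most frequent of several sampled outputs."""
    return Counter(samples).most_common(1)[0][0]


def pass_rate(program: Callable, tests: List[UnitTest]) -> float:
    """Fraction of unit tests the program passes; a crash counts as a failure."""
    passed = 0
    for args, expected in tests:
        try:
            if program(*args) == expected:
                passed += 1
        except Exception:
            pass
    return passed / len(tests) if tests else 0.0


def debug_with_backtracking(program: Callable,
                            propose_edit: Callable[[Callable], Callable],
                            tests: List[UnitTest],
                            max_steps: int = 3):
    best, best_rate = program, pass_rate(program, tests)
    for _ in range(max_steps):
        candidate = propose_edit(best)
        rate = pass_rate(candidate, tests)
        if rate > best_rate:
            # accept the edit only when the overall pass rate increases
            best, best_rate = candidate, rate
        # otherwise backtrack: drop the candidate and keep the previous best
    return best, best_rate
```

Validating against several generated tests, rather than one, makes a single noisy test less likely to push the debugger toward a worse program.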

We find that UTGen models balance output acc and attack rate and result in 7.59% more failing/error-revealing unit tests with correct outputs on Qwen-2.5.

Given coding problems and their solutions, we 1⃣ perturb the code to simulate errors, 2⃣ find challenging UT inputs, 3⃣ generate CoT rationales deducing the correct UT output for challenging UT inputs.
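To make steps 1⃣ and 2⃣ concrete, here is a toy sketch of picking error-revealing inputs. Everything in it is an assumption for illustration: the perturbation is hand-written, `error_revealing_inputs` is a hypothetical helper, and the expected output is taken by running the reference solution, whereas the thread describes generating CoT rationales for the output in step 3⃣.

```python
from typing import Callable, List, Tuple


def error_revealing_inputs(reference: Callable, perturbed: Callable,
                           candidates: List[tuple]) -> List[Tuple[tuple, object]]:
    """Keep inputs on which the perturbed code disagrees with the reference."""
    revealing = []
    for args in candidates:
        expected = reference(*args)
        try:
            actual = perturbed(*args)
        except Exception:
            # a crash on this input also exposes the bug
            revealing.append((args, expected))
            continue
        if actual != expected:
            revealing.append((args, expected))
    return revealing


def is_even(n: int) -> bool:
    return n % 2 == 0


def is_even_perturbed(n: int) -> bool:
    # hand-written perturbation: wrong for zero and negative even numbers
    return n % 2 == 0 and n > 0


candidates = [(2,), (0,), (3,), (-4,)]
print(error_revealing_inputs(is_even, is_even_perturbed, candidates))
```

Inputs like `2` and `3` pass on both versions, so they make weak unit tests; only `0` and `-4` distinguish the buggy code from the correct one.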

which introduces ✨UTGen and UTDebug✨ for teaching LLMs to generate unit tests (UTs) and debug code from the generated tests.

UTGen+UTDebug yields large gains in debugging (+12% pass@1) & addresses 3 key questions:

🧵👇
