cims.nyu.edu/taur/postdoc...

apply.interfolio.com/178940

💡 Abstract deadline: Thursday, March 26, 2026

📄 Full paper submission deadline: Tuesday, March 31, 2026

Call for papers (website coming soon):

docs.google.com/document/d/1...

–building reliable software on top of unreliable LLM primitives

–statistical evaluation of real-world deployments of LLM-based systems

I’m speaking about this on two NeurIPS workshop panels:

🗓️Saturday – Reliable ML Workshop

🗓️Sunday – LLM Evaluation Workshop

I’m recruiting a postdoc for my lab at NYU! Topics include LM reasoning, creativity, limitations of scaling, AI for science, & more! Apply by Feb 1.

(Different from NYU Faculty Fellows, which are also great but less connected to my lab.)

Link in 🧵

TTIC is recruiting both tenure-track and research assistant professors: ttic.edu/faculty-hiri...

NYU is recruiting faculty fellows: apply.interfolio.com/174686

Happy to chat with anyone considering either of these options

If you are interested in evals of open-ended tasks/creativity, please reach out and we can schedule a chat! :)

Tuesday morning: @juand-r.bsky.social and @ramyanamuduri.bsky.social 's papers (find them if you missed it!)

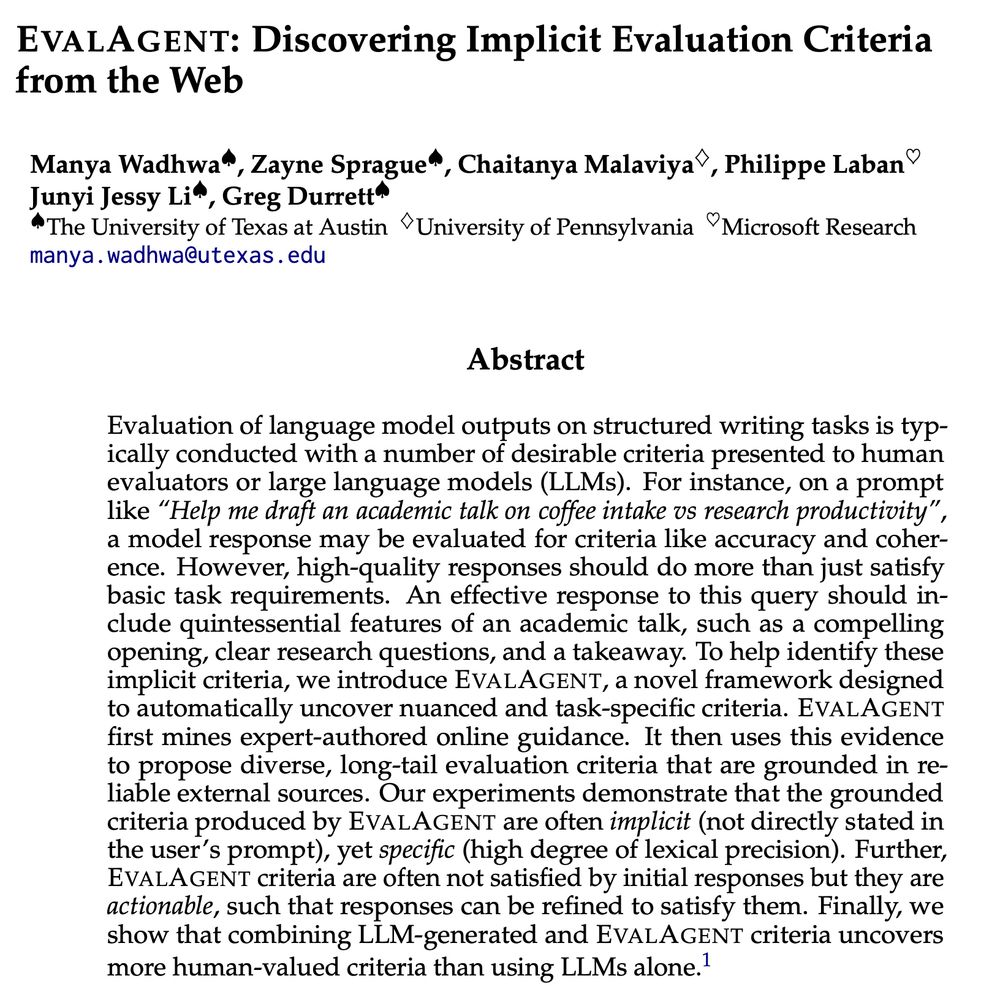

Wednesday pm: @manyawadhwa.bsky.social 's EvalAgent

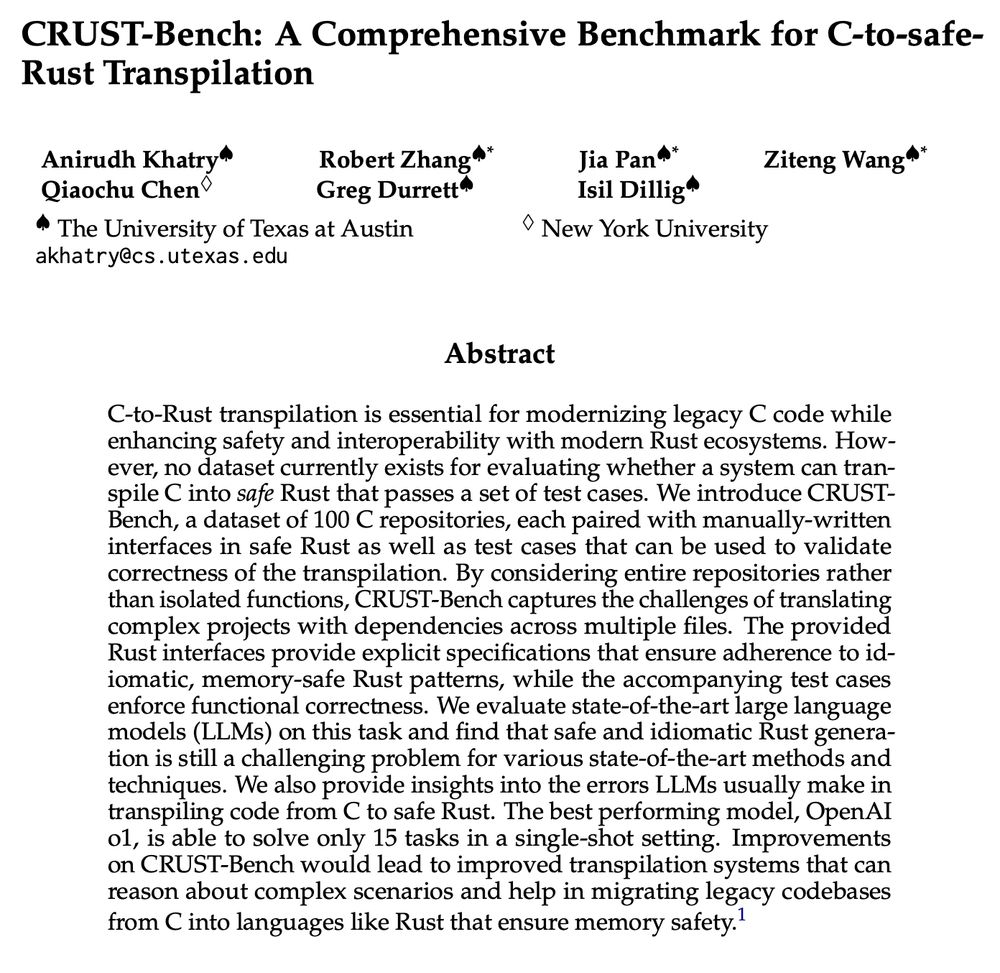

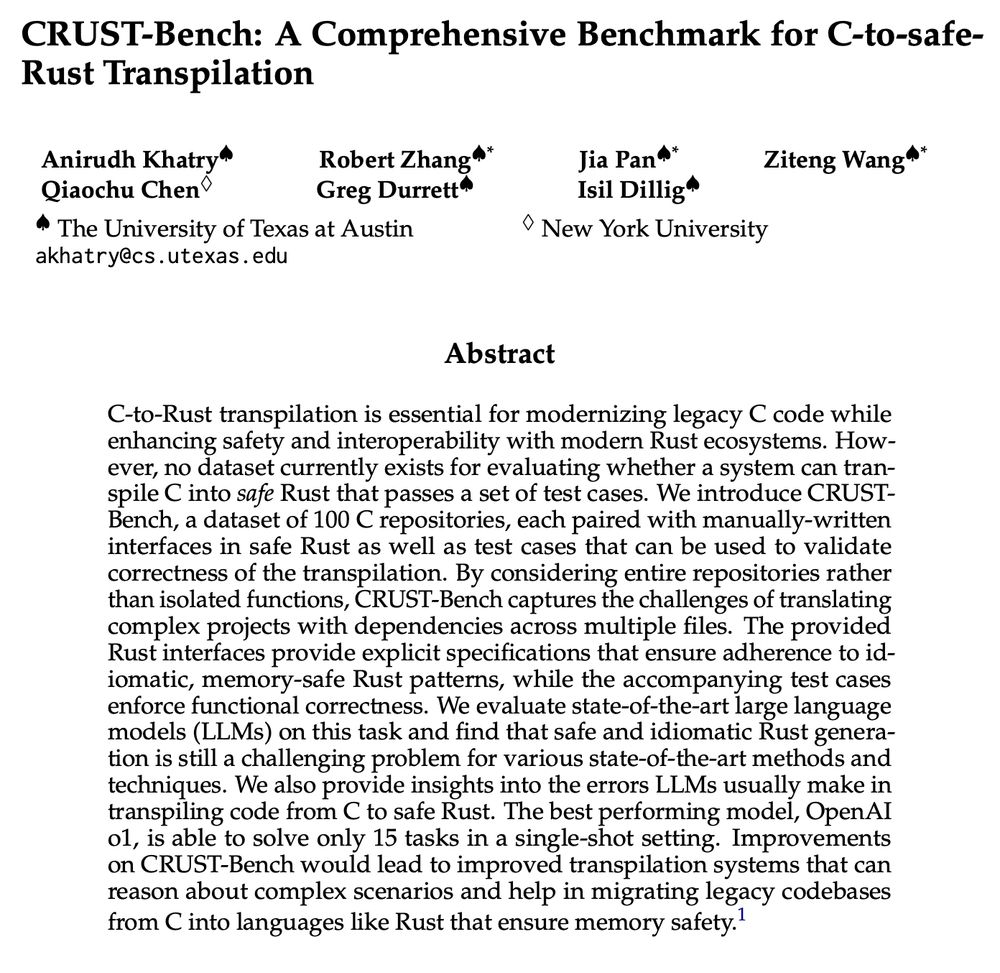

Thursday am: @anirudhkhatry.bsky.social 's CRUST-Bench oral spotlight + poster

🎯 We demonstrate that ranking-based discriminator training can significantly reduce this gap, and improvements on one task often generalize to others!

🧵👇

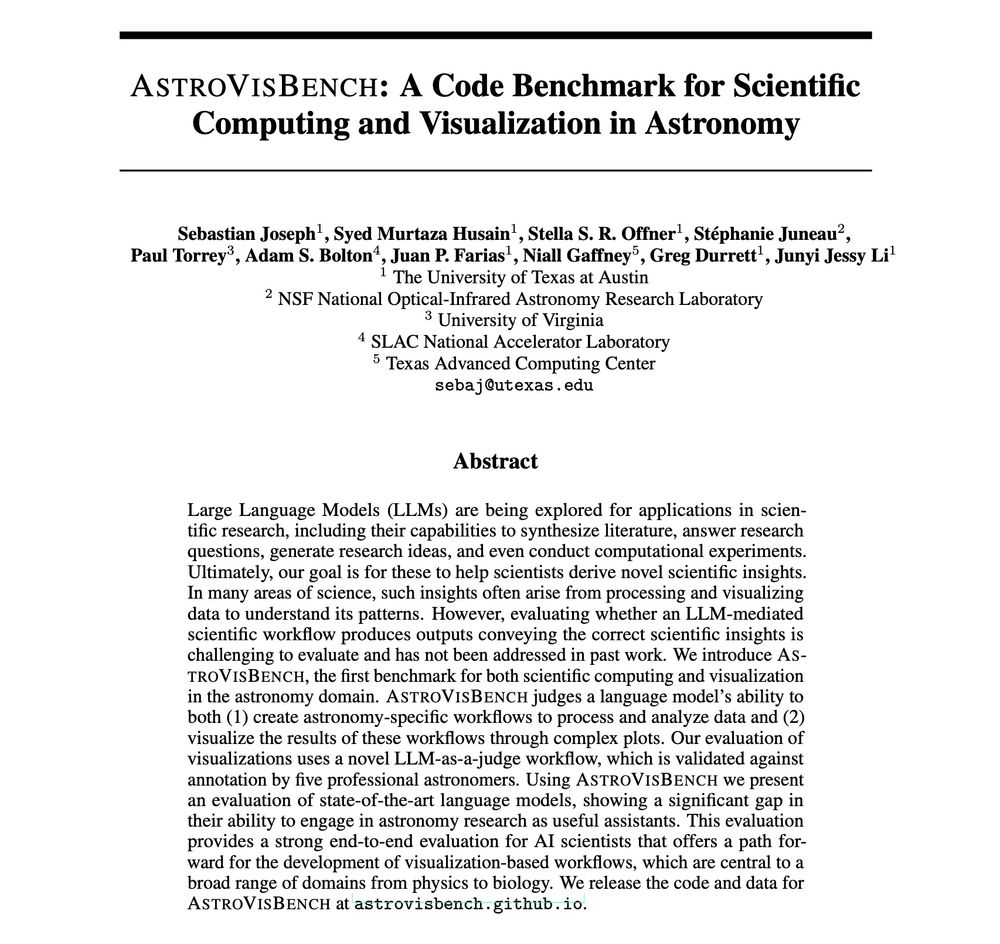

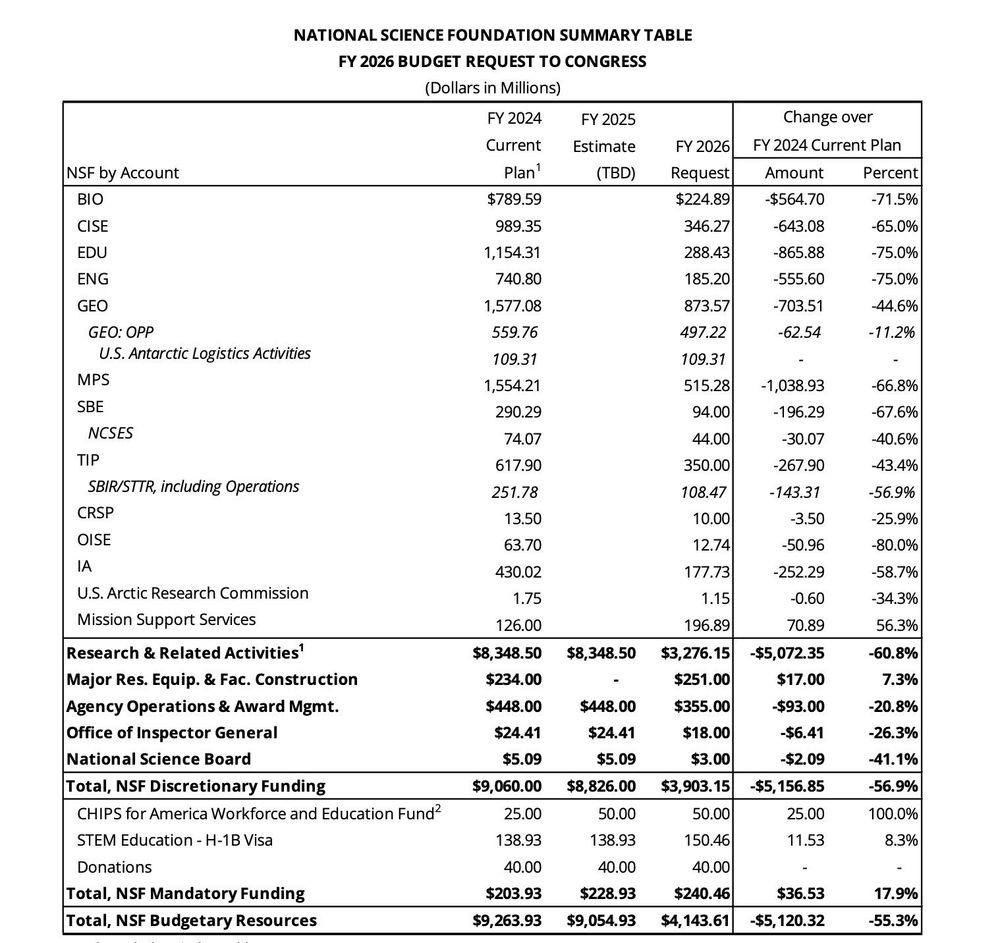

A new benchmark developed by researchers at the NSF-Simons AI Institute for Cosmic Origins is testing how well LLMs implement scientific workflows in astronomy and visualize results.

I will return to UT Austin as an Assistant Professor of Linguistics this fall, and join its vibrant community of Computational Linguists, NLPers, and Cognitive Scientists!🤘

Excited to develop ideas about linguistic and conceptual generalization (recruitment details soon!)

AstroVisBench tests how well LLMs implement scientific workflows in astronomy and visualize results.

SOTA models like Gemini 2.5 Pro & Claude 4 Opus only match ground truth scientific utility 16% of the time. 🧵

(1) focus on data processing & visualization, a "bite-sized" AI4Sci task (not automating all of research)

(2) eval with VLM-as-a-judge (possible with strong, modern VLMs)

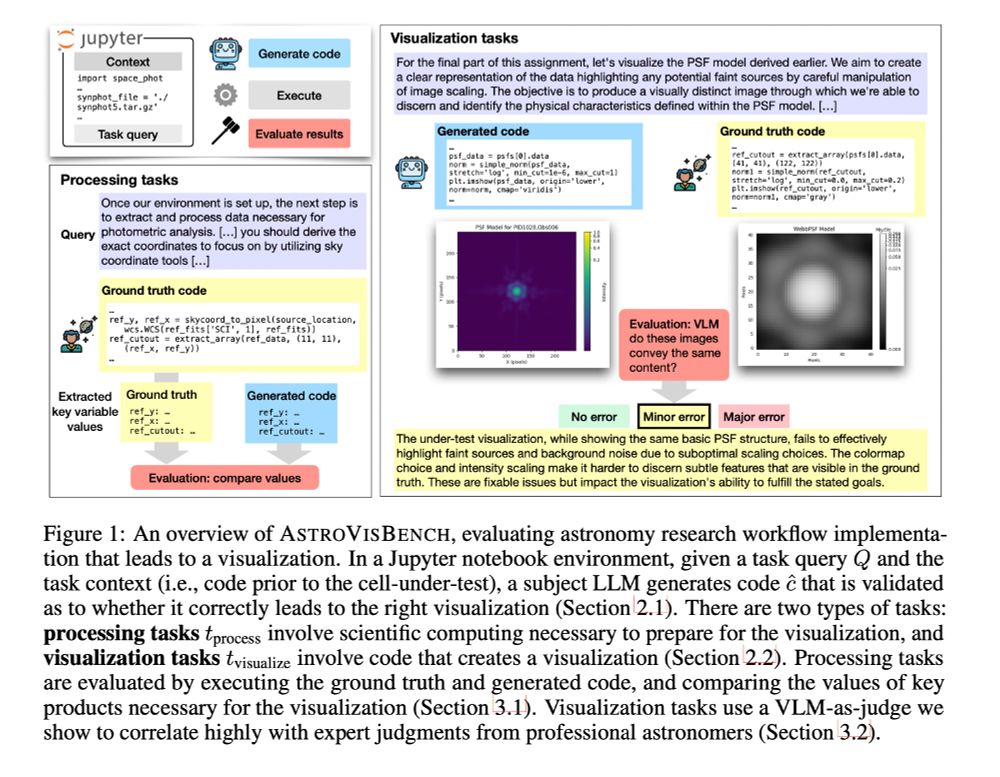

All in one little table.

A tremendous gift to China, courtesy of the GOP.

nsf-gov-resources.nsf.gov/files/00-NSF...

x.com/percyliang/s...

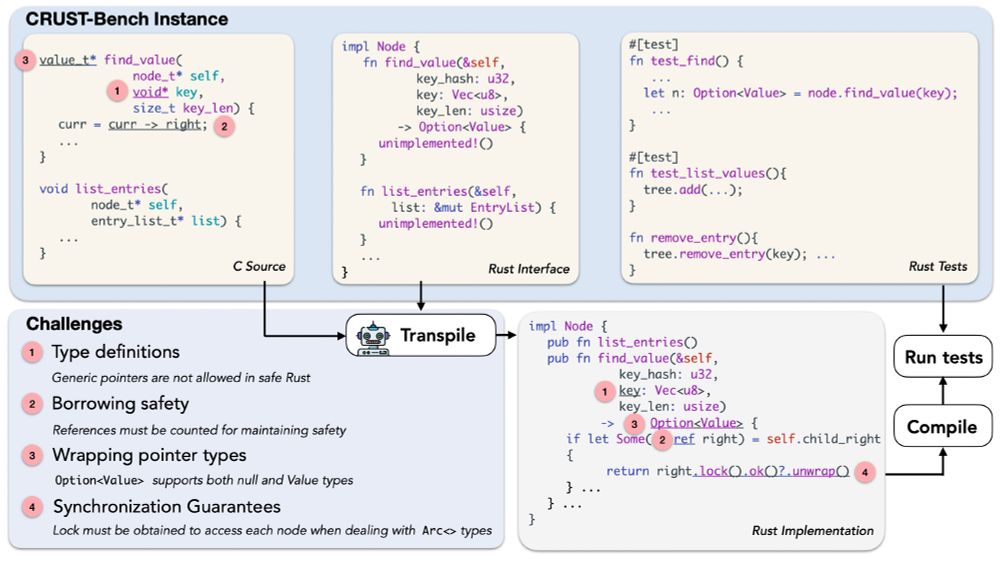

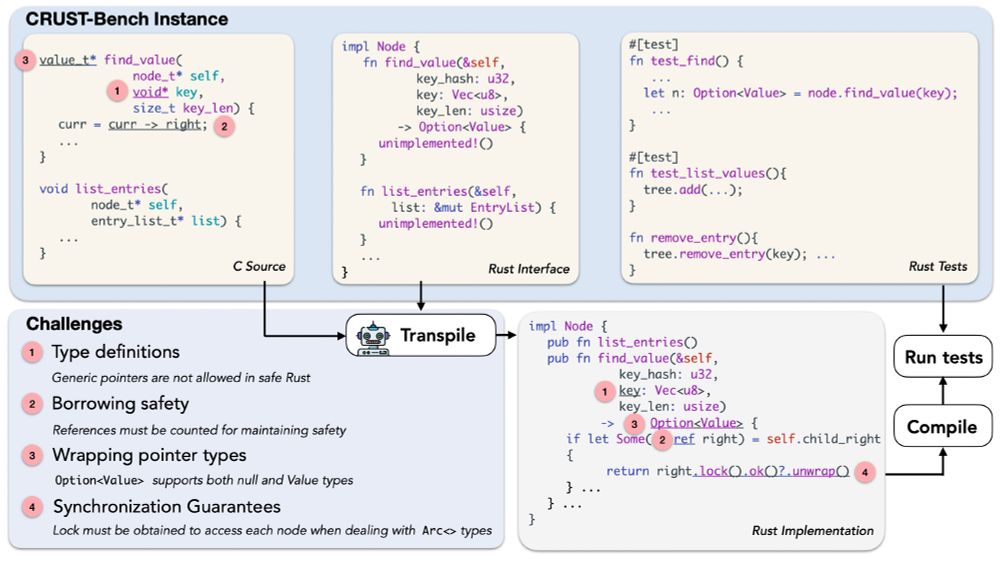

A dataset of 100 real-world C repositories across various domains, each paired with:

🦀 Handwritten safe Rust interfaces.

🧪 Rust test cases to validate correctness.

🧵[1/6]

We introduce EvalAgent, a framework that identifies nuanced and diverse criteria 📋✍️.

EvalAgent identifies 👩🏫🎓 expert advice on the web that implicitly addresses the user’s prompt 🧵👇

✨We introduce QUDsim, to quantify discourse similarities beyond lexical, syntactic, and content overlap.

🧵

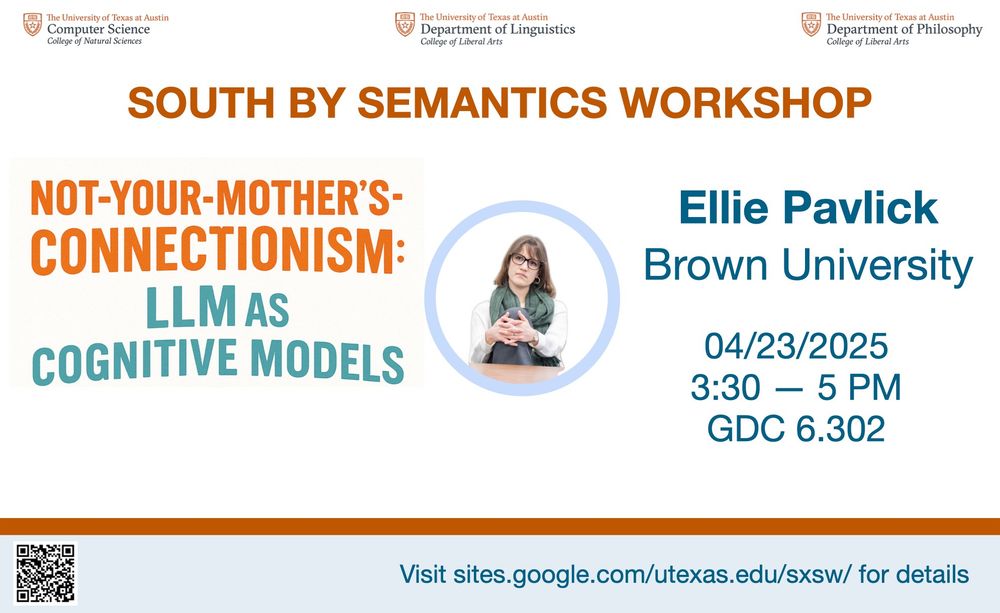

* Paper: arxiv.org/abs/2502.04671

* Code: github.com/trishullab/p...

Proofwala allows the collection of proof-step data from multiple proof assistants (Coq and Lean) and multilingual training. (1/3)

This is research that would have made us competitive in computer science, and it will now be delayed by many months, if not lost forever.

AI is fine, but right now the top priority is keeping the lights on at NSF and NIH.

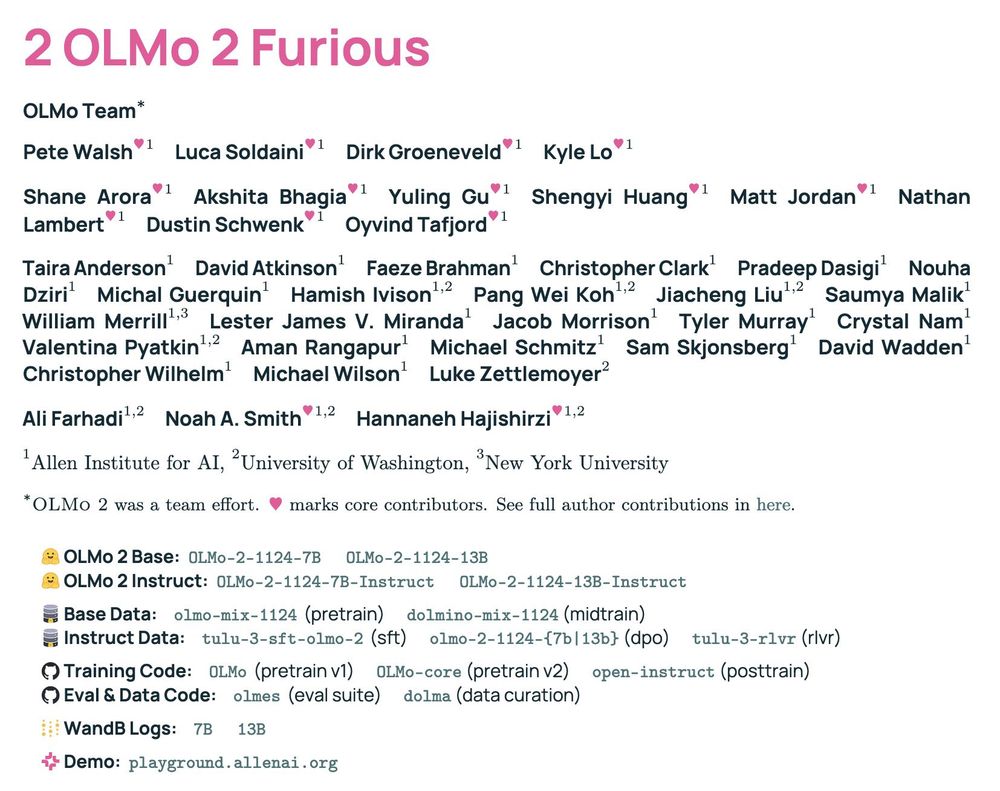

🚗 2 OLMo 2 Furious 🔥 is everything we learned since OLMo 1, with deep dives into:

🚖 stable pretrain recipe

🚔 lr anneal 🤝 data curricula 🤝 soups

🚘 tulu post-train recipe

🚜 compute infra setup

👇🧵