DataDecide opens up the process: 1,050 models, 30k checkpoints, 25 datasets & 10 benchmarks 🧵

🛰 So we asked: what's missing in open language modeling?

🪐 DataDecide 🌌 charts the cosmos of pretraining—across scales and corpora—at a resolution beyond any public suite of models that has come before.

We find an answer with two simple metrics: signal, a benchmark’s ability to separate models, and noise, a benchmark’s random variability between training steps 🧵
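
A rough sketch of how these two quantities could be computed. The post doesn't give formulas, so the definitions here are assumptions: signal is taken as the spread of final scores across models, and noise as the standard deviation of one model's score over its last few checkpoints. The function names, the `last_k` parameter, and the scores are all made up for illustration.

```python
from statistics import mean, pstdev

def noise(checkpoint_scores, last_k=5):
    """Std. dev. of one model's benchmark score over its last k checkpoints (assumed definition)."""
    return pstdev(checkpoint_scores[-last_k:])

def signal(final_scores):
    """Spread (max minus min) of final scores across models (assumed definition)."""
    return max(final_scores) - min(final_scores)

def signal_to_noise(runs, last_k=5):
    """runs maps a model name to its list of scores at successive checkpoints."""
    finals = [scores[-1] for scores in runs.values()]
    avg_noise = mean(noise(scores, last_k) for scores in runs.values())
    return signal(finals) / avg_noise if avg_noise > 0 else float("inf")

# Made-up scores, for illustration only.
runs = {
    "model_a": [0.40, 0.42, 0.41, 0.43, 0.42],
    "model_b": [0.50, 0.52, 0.51, 0.53, 0.52],
}
snr = signal_to_noise(runs)
```

Under these assumptions, a benchmark with a high ratio separates models by more than their checkpoint-to-checkpoint wobble, so it is more likely to rank them the same way on a rerun.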

Standard benchmarks give every LLM the same questions. This is like testing 5th graders and college seniors with *one* exam! 🥴

Meet Fluid Benchmarking, a capability-adaptive eval method delivering lower variance, higher validity, and reduced cost.

🧵

We do this at unprecedented scale and in real time: finding matching text between model outputs and 4 trillion training tokens within seconds. ✨

Introducing OLMoTrace, a new feature in the Ai2 Playground that begins to shed some light. 🔦

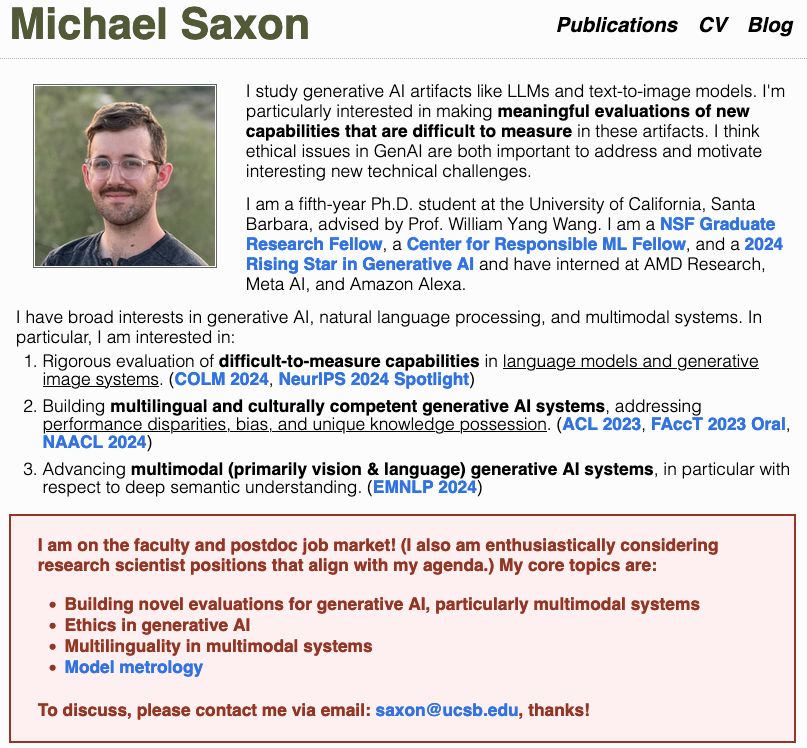

I'm searching for faculty positions/postdocs in multilingual/multicultural NLP, vision+language models, and eval for genAI!

I'll be at #NeurIPS2024 presenting our work on meta-evaluation for text-to-image faithfulness! Let's chat there!

Papers in🧵, see more: saxon.me

today @akshitab.bsky.social @natolambert.bsky.social and I are giving our #neurips2024 tutorial on language model development.

everything from data, training, adaptation. published or not, no secrets 🫡

tues, 12/10, 9:30am PT ☕️

neurips.cc/virtual/2024...

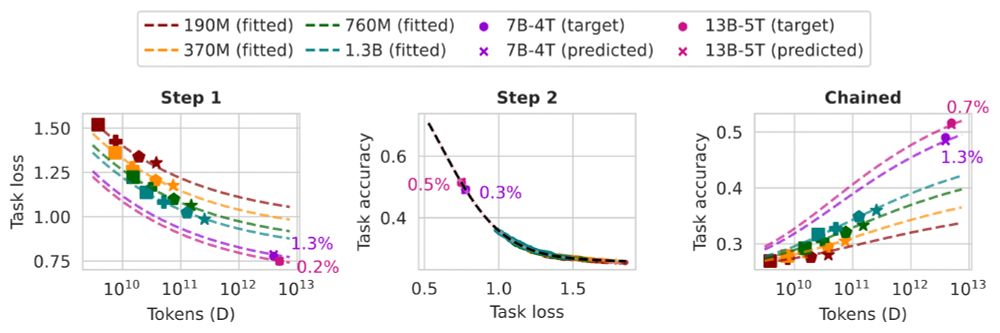

We develop task scaling laws and model ladders, which predict the accuracy on individual tasks by OLMo 2 7B & 13B models within 2 points of absolute error. The cost is 1% of the compute used to pretrain them.
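
To make the idea concrete, here is a minimal sketch of extrapolating from a ladder of small models. The post doesn't state the functional form, so this assumes a plain power law between compute and task loss, fitted by least squares in log-log space; the step from loss to accuracy is left out. The function names and all numbers are hypothetical and synthetic, not results from the paper.

```python
import math

def fit_power_law(compute, loss):
    """Least-squares fit of log(loss) = log(a) - b * log(compute); returns (a, b)."""
    xs = [math.log(c) for c in compute]
    ys = [math.log(l) for l in loss]
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    intercept = my - slope * mx
    return math.exp(intercept), -slope

def predict(a, b, compute):
    """Predicted loss at a given compute budget under the assumed power law."""
    return a * compute ** (-b)

# Synthetic "ladder" of small runs drawn from an exact power law (illustration only).
ladder_compute = [1e18, 1e19, 1e20]
ladder_loss = [5.0 * c ** -0.05 for c in ladder_compute]

a, b = fit_power_law(ladder_compute, ladder_loss)
predicted = predict(a, b, 1e22)                    # extrapolate to a much larger run
abs_error = abs(predicted - 5.0 * 1e22 ** -0.05)   # compare with the synthetic ground truth
```

Real ladder runs are noisy, so the error on an actual target model would not vanish the way it does on this synthetic data.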

I'll be around all week, with two papers you should go check out (see image or next tweet):

I'll be presenting our "Consent in Crisis" work on the 11th: arxiv.org/abs/2407.14933

Reach out to catch up or chat about:

- Training data / methods

- AI uses & impacts

- Multilingual scaling

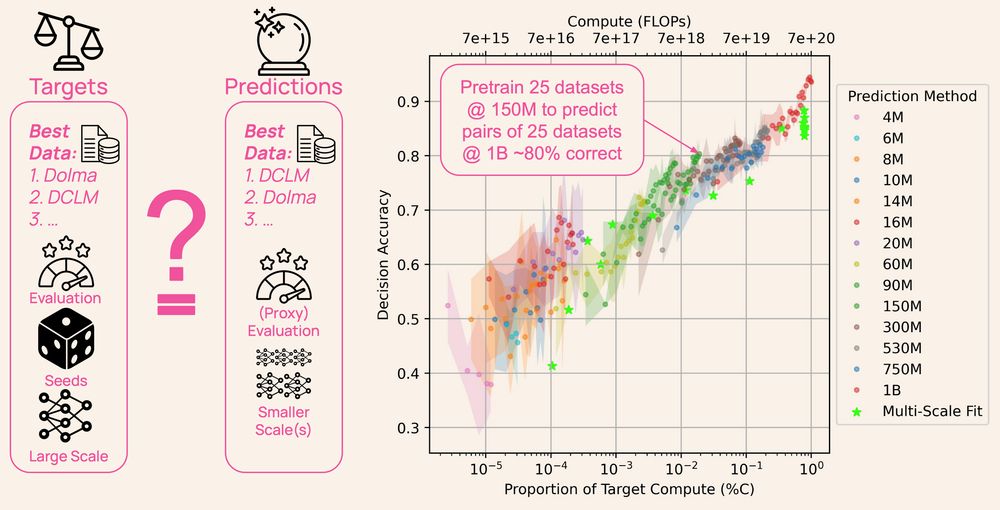

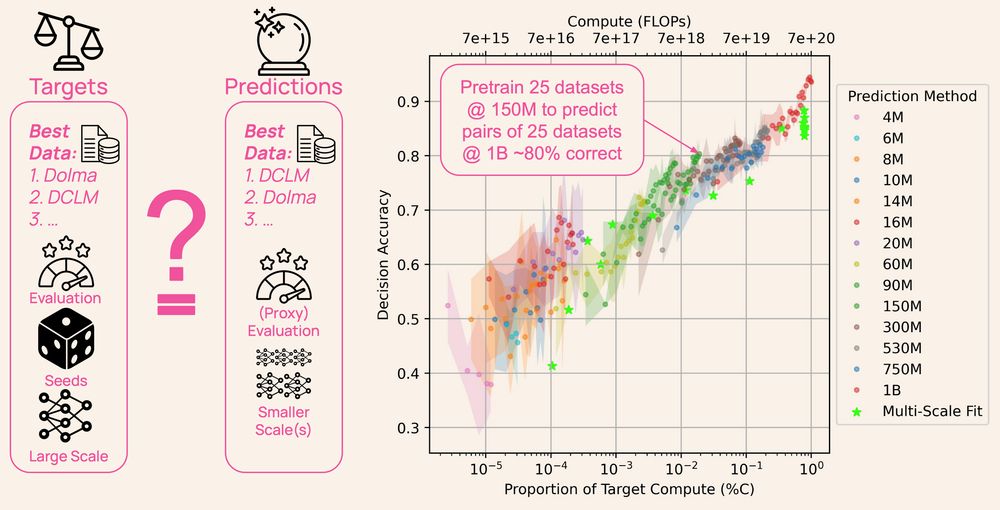

You can try out recipes👩🍳 iterate on ✨vibes✨ but we can't actually test all possible combos of tweaks,,, right?? 🙅♂️WRONG! arxiv.org/abs/2410.15661 (1/n) 🧵

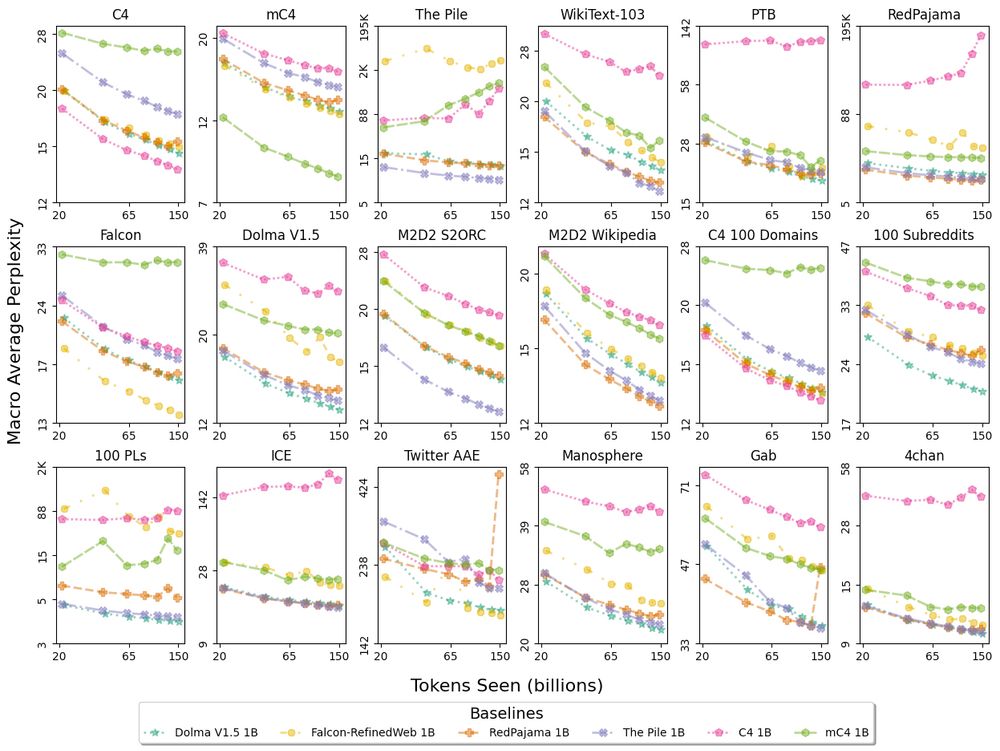

📈 Evaluating perplexity on just one corpus like C4 doesn't tell the whole story 📉

✨📃✨

We introduce Paloma, a benchmark of 585 domains from NY Times to r/depression on Reddit.
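
For readers who want the arithmetic, a small sketch of per-domain perplexity: the exponential of the negative mean token log-probability, computed separately for each domain. This is the standard definition of perplexity, not Paloma's actual evaluation code; the domain names and log-probabilities below are toy values.

```python
import math

def perplexity(token_logprobs):
    """exp of the negative mean natural-log probability per token."""
    return math.exp(-sum(token_logprobs) / len(token_logprobs))

def perplexity_by_domain(logprobs_by_domain):
    """Maps each domain name to the perplexity of its tokens."""
    return {domain: perplexity(lps) for domain, lps in logprobs_by_domain.items()}

# Toy values: a model that assigns p=0.5 to every token in one domain, p=0.1 in another.
toy = {
    "domain_a": [math.log(0.5)] * 4,
    "domain_b": [math.log(0.1)] * 4,
}
result = perplexity_by_domain(toy)
```

Reporting the per-domain values side by side, rather than one pooled number, is what lets a gap like the one between these two toy domains show up.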
