Again, not always bad, but Paloma reports the average loss of each vocabulary string, surfacing strings that behave differently in some domains.
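To make the per-string idea concrete, here is a minimal sketch, assuming you already have decoded tokens and their per-token losses for each domain. It averages loss per distinct string, then lists strings whose average differs a lot between two domains. The function names, input format, and `min_gap` threshold are hypothetical, not Paloma's code.

```python
from collections import defaultdict


def average_loss_per_string(tokens, losses):
    """Mean loss for each distinct vocabulary string in one domain.

    tokens: decoded token strings (hypothetical input format)
    losses: per-token negative log-likelihoods, aligned with `tokens`
    """
    if len(tokens) != len(losses):
        raise ValueError("tokens and losses must have the same length")
    totals = defaultdict(float)
    counts = defaultdict(int)
    for tok, loss in zip(tokens, losses):
        totals[tok] += loss
        counts[tok] += 1
    return {tok: totals[tok] / counts[tok] for tok in totals}


def strings_that_differ(domain_a, domain_b, min_gap=1.0):
    """Strings seen in both domains whose mean loss differs by at least `min_gap`.

    Sorted by largest gap first; `min_gap` is an arbitrary illustrative threshold.
    """
    def gap(tok):
        return abs(domain_a[tok] - domain_b[tok])

    shared = domain_a.keys() & domain_b.keys()
    return sorted((tok for tok in shared if gap(tok) >= min_gap),
                  key=lambda tok: (-gap(tok), tok))


# Toy numbers for illustration only, not Paloma results.
news = average_loss_per_string(["the", "cat", "the"], [1.0, 3.0, 2.0])
forum = average_loss_per_string(["the", "cat"], [1.2, 0.5])
print(strings_that_differ(news, forum))  # ['cat']
```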

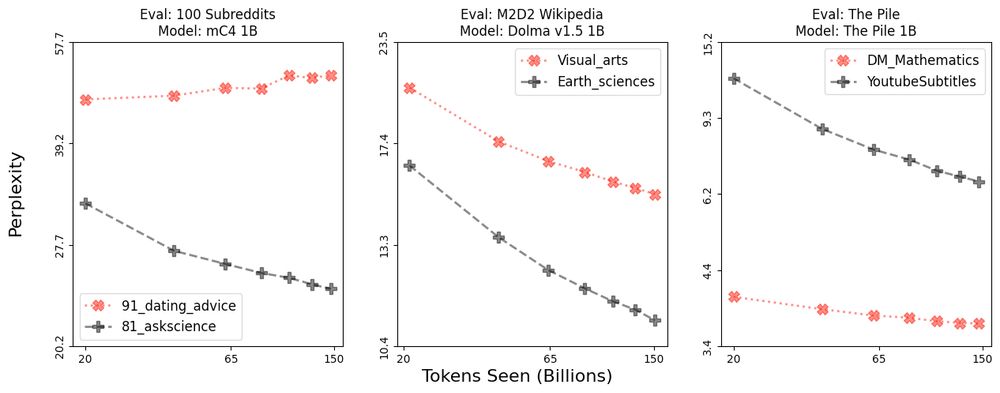

Differences in improvement, such as these examples, can indicate divergence, stagnation, or saturation. Not all bad, but worth investigating!

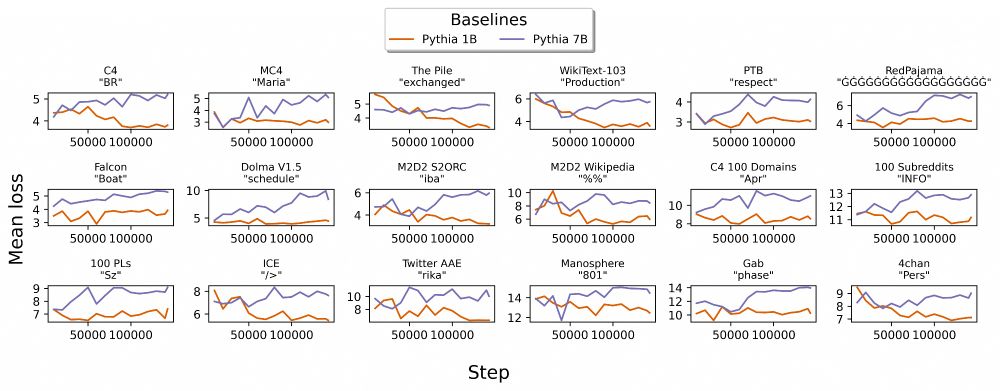

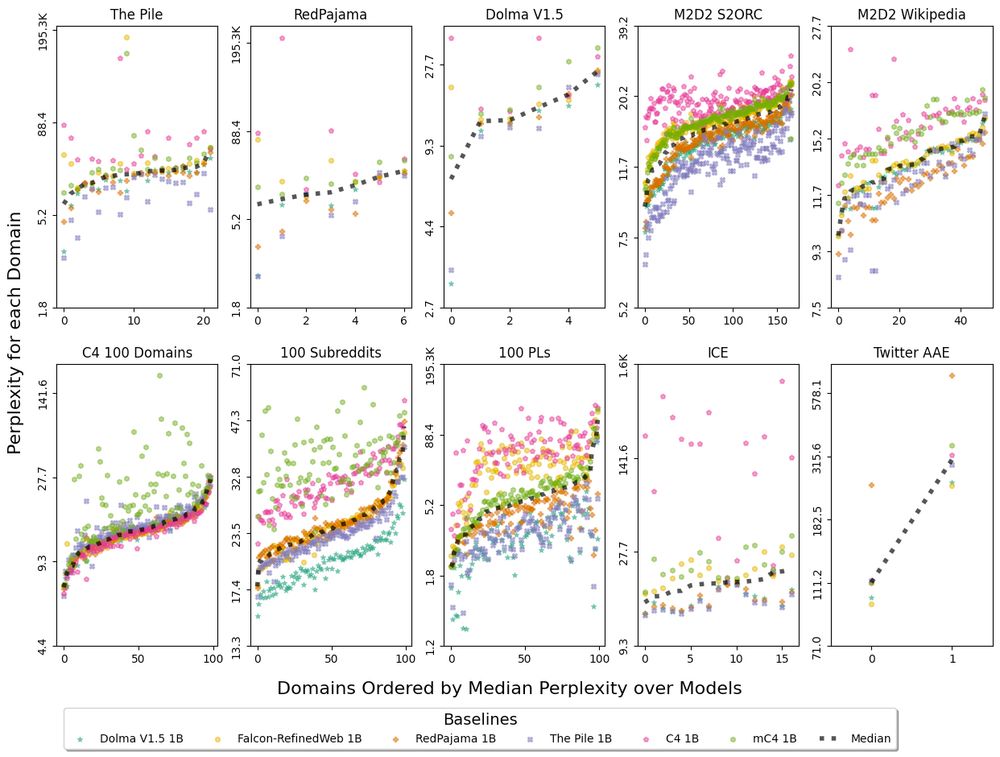

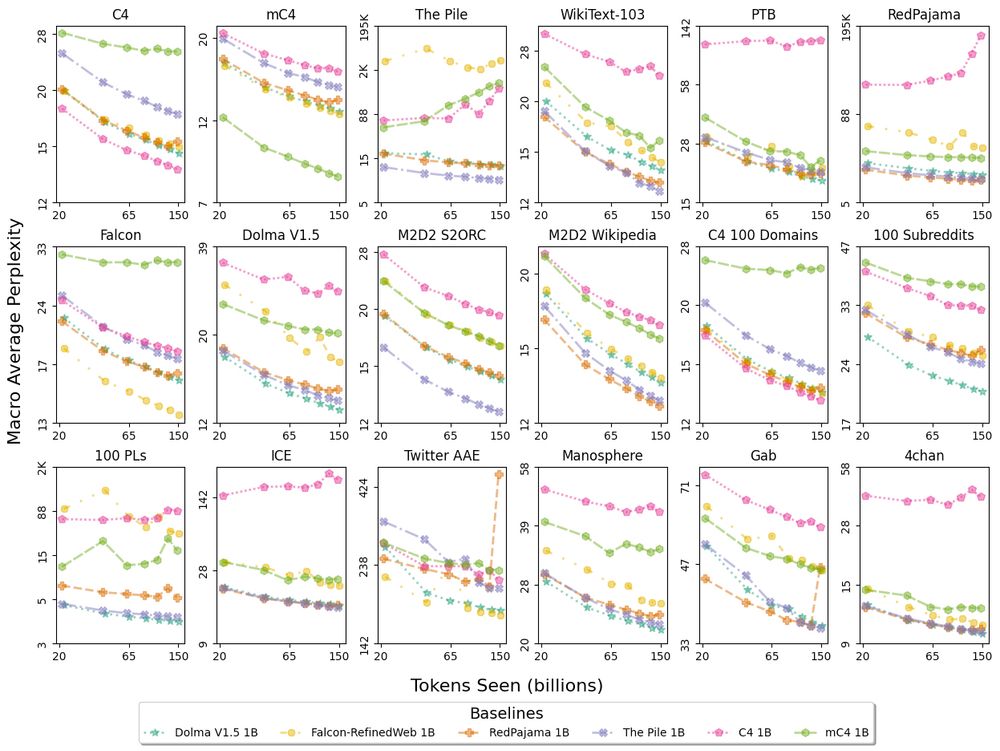

With these we find Common-Crawl-only pretraining has inconsistent fit to many domains:

1. C4 and mC4 baselines have erratically worse fit than the median model

2. C4, mC4, and Falcon baselines sometimes show non-monotonic perplexity in Fig 1
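Both findings can be checked mechanically. Below is a minimal sketch, assuming per-domain perplexities are already computed: one helper flags a curve that rises between consecutive checkpoints, the other lists domains where a given model is worse than the median across models. Model names, domain names, and the numbers in the usage lines are hypothetical placeholders.

```python
import statistics


def is_non_monotonic(perplexities, tolerance=0.0):
    """True if perplexity rises by more than `tolerance` between consecutive checkpoints.

    perplexities: values ordered by training tokens seen, earliest checkpoint first
    """
    return any(later - earlier > tolerance
               for earlier, later in zip(perplexities, perplexities[1:]))


def worse_than_median(results, model):
    """Domains where `model` has higher perplexity than the median model.

    results: {domain: {model_name: perplexity}} measured at a comparable point in training
    """
    flagged = []
    for domain, by_model in sorted(results.items()):
        if by_model[model] > statistics.median(by_model.values()):
            flagged.append(domain)
    return flagged


# Toy values for illustration only, not Paloma results.
print(is_non_monotonic([40.0, 31.5, 33.2, 29.8]))  # True: 31.5 -> 33.2 goes up
results = {
    "domain_a": {"cc_only": 30.0, "mixed": 20.0, "other": 22.0},
    "domain_b": {"cc_only": 18.0, "mixed": 21.0, "other": 19.0},
}
print(worse_than_median(results, "cc_only"))  # ['domain_a']
```

A small `tolerance` can be used to ignore noise-level bumps; what counts as noise is a judgment call.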

💬 top 100 subreddits

🧑💻 top 100 programming languages

Different research may require other domains, but Paloma enables research on 100s of domains from existing metadata.
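Building domains from existing metadata amounts to grouping documents by a field and scoring each group separately. A minimal sketch, assuming each document is a dict holding a metadata field (such as a subreddit name) and a list of per-token losses under a hypothetical `"token_nll"` key. Perplexity here is exp of total loss over total tokens within the domain.

```python
import math
from collections import Counter, defaultdict


def top_domains(docs, key, k=100):
    """The k most frequent values of a metadata field, e.g. subreddit names."""
    counts = Counter(doc[key] for doc in docs)
    return [name for name, _ in counts.most_common(k)]


def per_domain_perplexity(docs, key, domains):
    """Token-weighted perplexity per domain: exp(sum of token NLL / token count)."""
    wanted = set(domains)
    nll = defaultdict(float)
    n_tokens = defaultdict(int)
    for doc in docs:
        if doc[key] in wanted:
            nll[doc[key]] += sum(doc["token_nll"])
            n_tokens[doc[key]] += len(doc["token_nll"])
    return {d: math.exp(nll[d] / n_tokens[d]) for d in domains if n_tokens[d] > 0}


# Toy documents for illustration only.
docs = [
    {"subreddit": "r/a", "token_nll": [2.0, 2.0]},
    {"subreddit": "r/a", "token_nll": [2.0]},
    {"subreddit": "r/b", "token_nll": [1.0, 1.0]},
]
domains = top_domains(docs, "subreddit", k=2)  # ['r/a', 'r/b']
print(per_domain_perplexity(docs, "subreddit", domains))  # r/a ~ 7.39, r/b ~ 2.72
```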

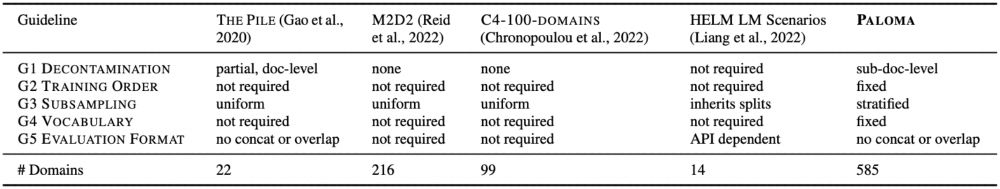

1. Remove contaminated pretraining data

2. Fix the training data order

3. Subsample eval data based on metric variance

4. Fix the vocabulary unless you study changing it

5. Standardize eval format
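One way to read "subsample eval data based on metric variance" is: keep the smallest sample whose perplexity barely changes across random draws. The sketch below is a hypothetical heuristic under that assumption, not Paloma's actual procedure; the candidate sizes, `max_std` threshold, and number of trials are all illustrative.

```python
import math
import random
import statistics


def perplexity(docs):
    """exp(total NLL / total tokens) over a list of per-document token-NLL lists."""
    total_nll = sum(sum(doc) for doc in docs)
    total_tokens = sum(len(doc) for doc in docs)
    return math.exp(total_nll / total_tokens)


def smallest_stable_size(docs, sizes, max_std, trials=20, seed=0):
    """Smallest number of documents whose perplexity std dev across draws is <= max_std.

    docs: per-document token-NLL lists for one domain (each non-empty)
    sizes: candidate subsample sizes in increasing order (hypothetical grid)
    trials: random draws per size; must be at least 2 for a std dev
    """
    rng = random.Random(seed)
    for size in sizes:
        if size > len(docs):
            break
        draws = [perplexity(rng.sample(docs, size)) for _ in range(trials)]
        if statistics.stdev(draws) <= max_std:
            return size
    return len(docs)  # no candidate was stable enough: keep the whole domain
```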

🧪 controls like benchmark decontamination

💸 measures of cost (parameter and training token count)
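Decontamination means dropping pretraining documents that overlap the evaluation data. A minimal sketch, assuming overlap is judged by exact matches of whitespace- and case-normalized paragraphs; an in-memory Python set stands in for whatever index a real pipeline would use at scale (something like a Bloom filter), and the normalization rules are an assumption.

```python
def paragraphs(text):
    """Split on blank lines; collapse whitespace and lowercase (assumed normalization)."""
    return [" ".join(p.split()).lower() for p in text.split("\n\n") if p.strip()]


def decontaminate(pretraining_docs, eval_docs):
    """Drop any pretraining document sharing an exact normalized paragraph with eval data."""
    eval_paragraphs = {p for doc in eval_docs for p in paragraphs(doc)}
    return [doc for doc in pretraining_docs
            if not any(p in eval_paragraphs for p in paragraphs(doc))]


train = ["Hello world.\n\nUnique text.", "Other doc."]
print(decontaminate(train, ["hello   WORLD."]))  # ['Other doc.']
```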

Find out more:

📃 arXiv (arxiv.org/pdf/2312.105...)

🤖 data and models (huggingface.co/collections/...)

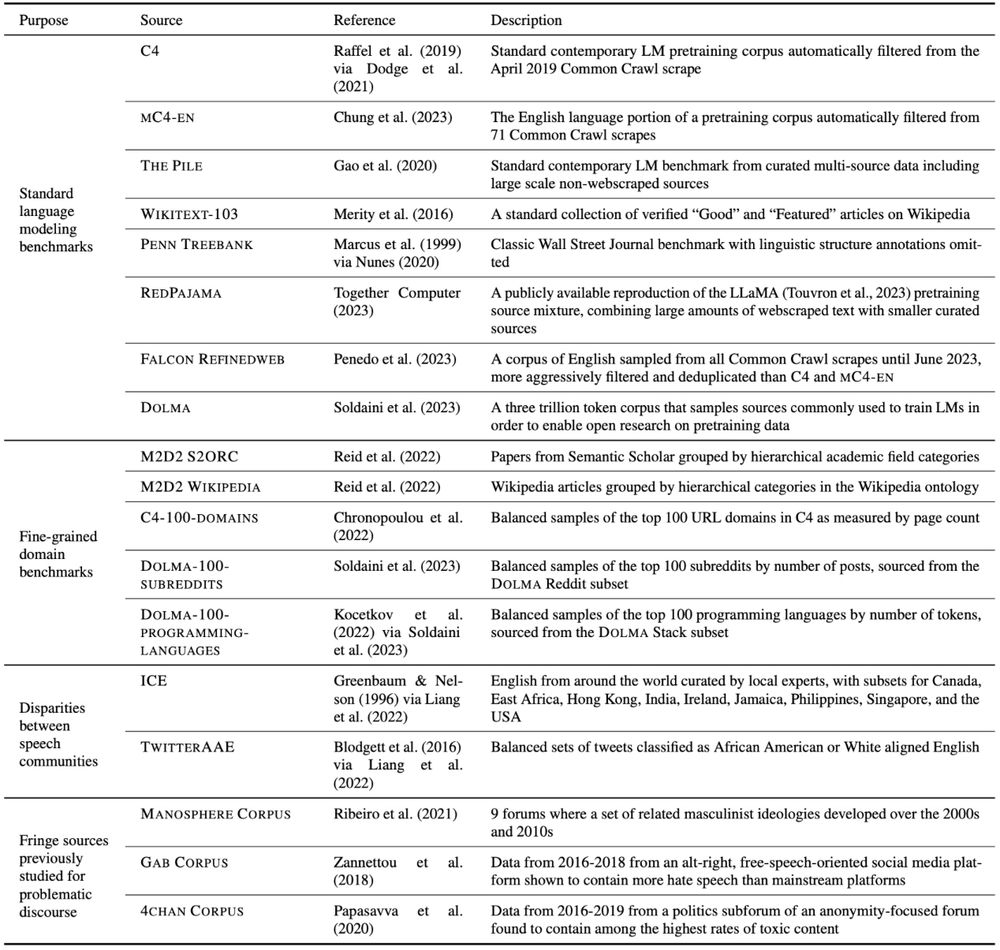

📈 Evaluating perplexity on just one corpus like C4 doesn't tell the whole story 📉

✨📃✨

We introduce Paloma, a benchmark of 585 domains from NY Times to r/depression on Reddit.