🌍 Does scaling differ by language?

🧙♂️ Can we model the curse of multilinguality?

⚖️ Pretrain from scratch or finetune from a checkpoint?

🔀 How do cross-lingual transfer scores vary across languages?

1/🧵

I'm excited to share our preprint answering these questions:

"Epistemic Diversity and Knowledge Collapse in Large Language Models"

📄Paper: arxiv.org/pdf/2510.04226

💻Code: github.com/dwright37/ll...

1/10

BigGen Bench introduces fine-grained, scalable, & human-aligned evaluations:

📈 77 hard, diverse tasks

🛠️ 765 exs w/ ex-specific rubrics

📋 More human-aligned than previous rubrics

🌍 10 languages, by native speakers

1/

The @lmarena.bsky.social has become the go-to evaluation for AI progress.

Our release today demonstrates the difficulty in maintaining fair evaluations on the Arena, despite best intentions.

youtu.be/cRsbjGFPJaM?...

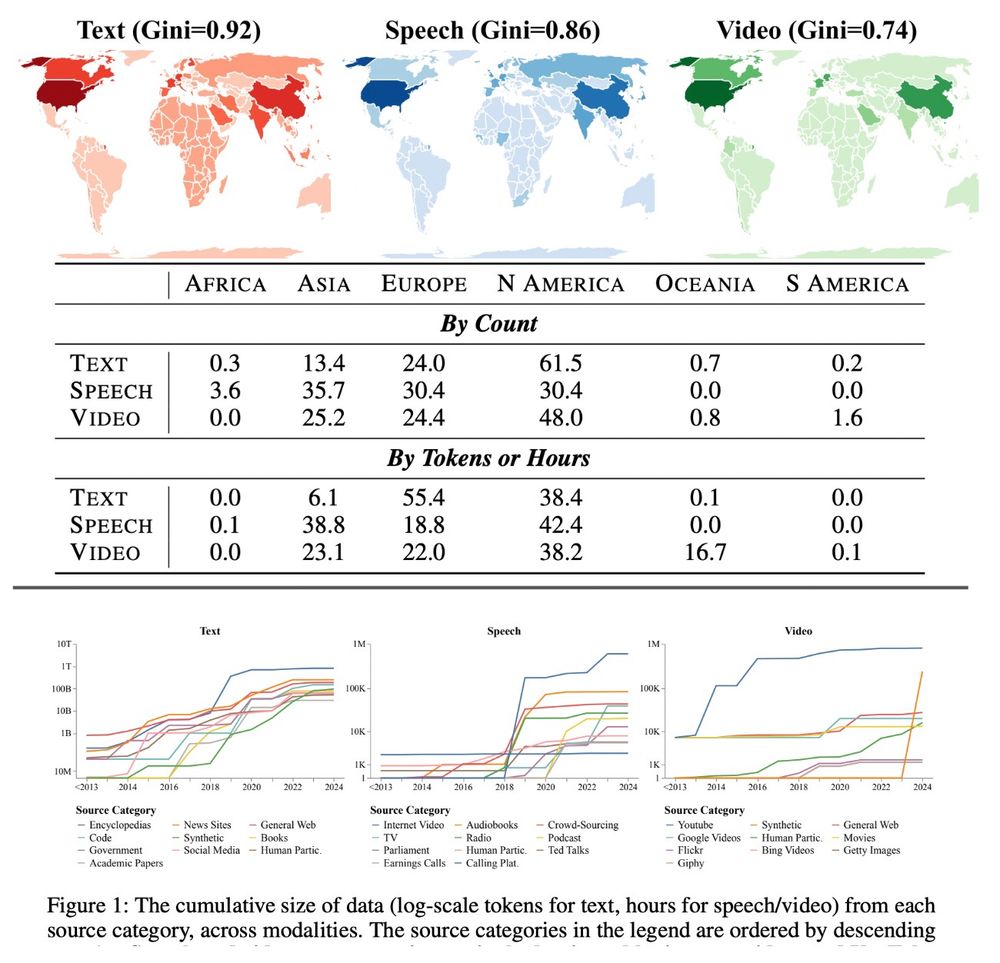

Empirically, it shows:

1️⃣ Soaring synthetic text data: ~10M tokens (pre-2018) to 100B+ (2024).

2️⃣ YouTube is now 70%+ of speech/video data but could block third-party collection.

3️⃣ <0.2% of data from Africa/South America.

1/

Watch the full event on our livestream here:

www.youtube.com/watch?v=X1gj...

🌍 18 languages (high-, mid-, low-)

📚 21k questions (55% require image understanding)

🧪 STEM, social science, reasoning, and practical skills

Panelists: @atoosakz.bsky.social, @randomwalker.bsky.social, @alondra.bsky.social, and Deirdre K. Mulligan.

Moderator: @shaynelongpre.bsky.social.

#AIDemocraticFreedoms

1/

www.wired.com/story/ai-res...

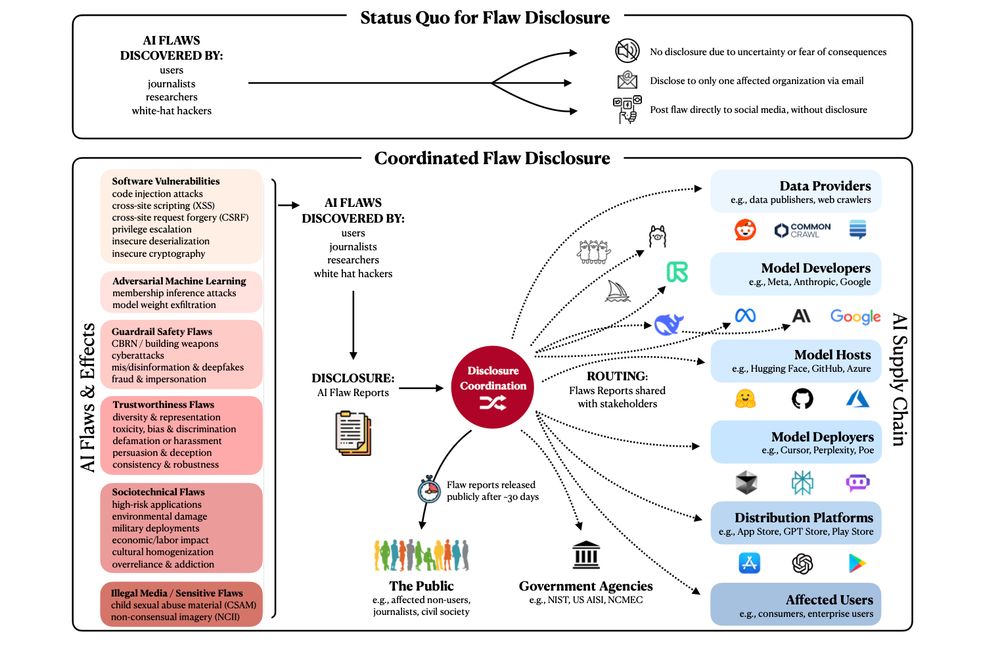

Our new paper, “In-House Evaluation Is Not Enough,” has 3 calls to action to empower evaluators:

1️⃣ Standardized AI flaw reports

2️⃣ AI flaw disclosure programs + safe harbors.

3️⃣ A coordination center for transferable AI flaws.

1/🧵

mitsloan.mit.edu/ideas-made-t...

We’re presenting the state of transparency, tooling, and policy, from the Foundation Model Transparency Index, Factsheets, and the EU AI Act to new frameworks like @MLCommons’ Croissant.

1/

We *should* be concerned about platform power over speech, but it isn’t censorship.

As the Supreme Court said last year, the companies’ editorial decisions to moderate content are protected by the First Amendment.

1/

➡️ why is copyright an issue for AI?

➡️ what is fair use?

➡️ why are memorization and generation important?

➡️ how does it impact the AI data supply / web crawling?

🧵
