Head of Cohere Labs

@Cohere_Labs @Cohere

PhD from @UvA_Amsterdam

https://marziehf.github.io/

So how fair—and scientifically rigorous—is today’s most widely used evaluation benchmark?

We took a deep dive into Chatbot Arena to find out. 🧵

In our latest work we uncover the Verification Ceiling Problem: strict “all tests must pass” rules throw away useful data, while weak tests let errors through.

And we’re hiring a Senior Research Scientist to co-create with us.

If you believe in research as a shared, global effort — this is your chance.

New post in collaboration with AI Singapore explores why Elo falls short for AI leaderboards and how we can do better.

Entry points matter.

We started the Scholars Program 3 years ago to give new researchers a real shot — excited to open applications for year 4✨

This is your chance to collaborate with some of the brightest minds in AI & chart new courses in ML research. Let's change the spaces where breakthroughs happen.

Apply by Aug 29.

@microsoft.com's July seminar discussed how we can bridge the gap and build #AIforEveryone with @mziizm.bsky.social of @cohere.com.

📽️ www.microsoft.com/en-us/resear...

This limits who can use them and whose imagination they reflect.

We asked: can we build a small, efficient model that understands prompts in multiple languages natively?

Turns out that standard methods miss out on gains in non-English languages. We propose more robust alternatives.

Very proud of this work that our scholar Ammar led! 🚀

Our latest work introduces a new inference time scaling recipe that is sample-efficient, multilingual, and suitable for multi-task requirements. 🍋

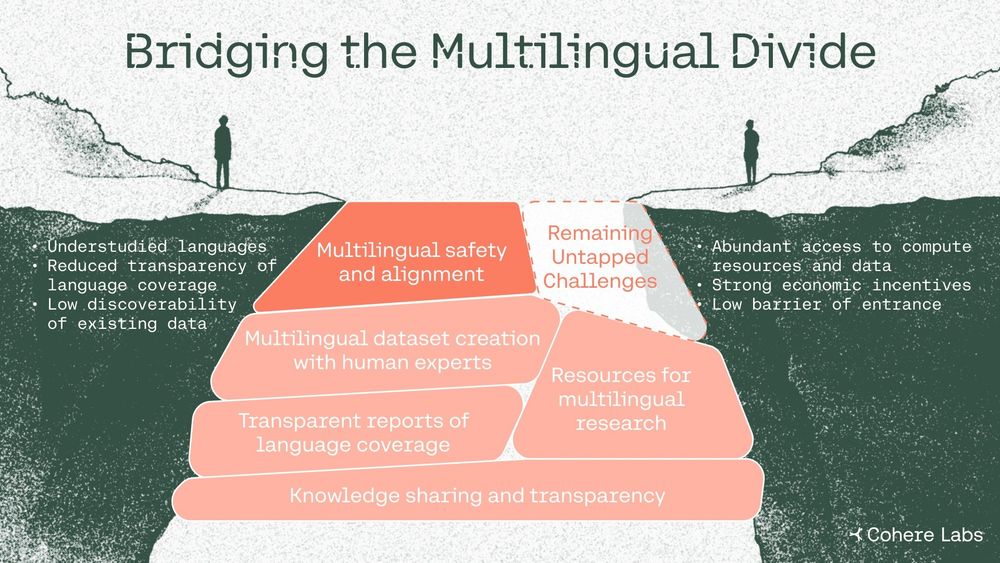

What's the current stage and how to progress from here?

This work led by @yongzx.bsky.social has answers! 👇

📏 Our comprehensive survey reveals that there is still a long way to go.

Our latest paper draws on our multi-year efforts with the wider research community to explore why this matters and how we can bridge the AI language gap.

- Invited talks by @loubnabnl.hf.co (HF) @mziizm.bsky.social (Cohere) @najoung.bsky.social (BU) @kylelo.bsky.social (AI2) Yohei Oseki (UTokyo)

- Exciting posters by other participants

Register to attend and/or present your poster at cphnlp.github.io /1

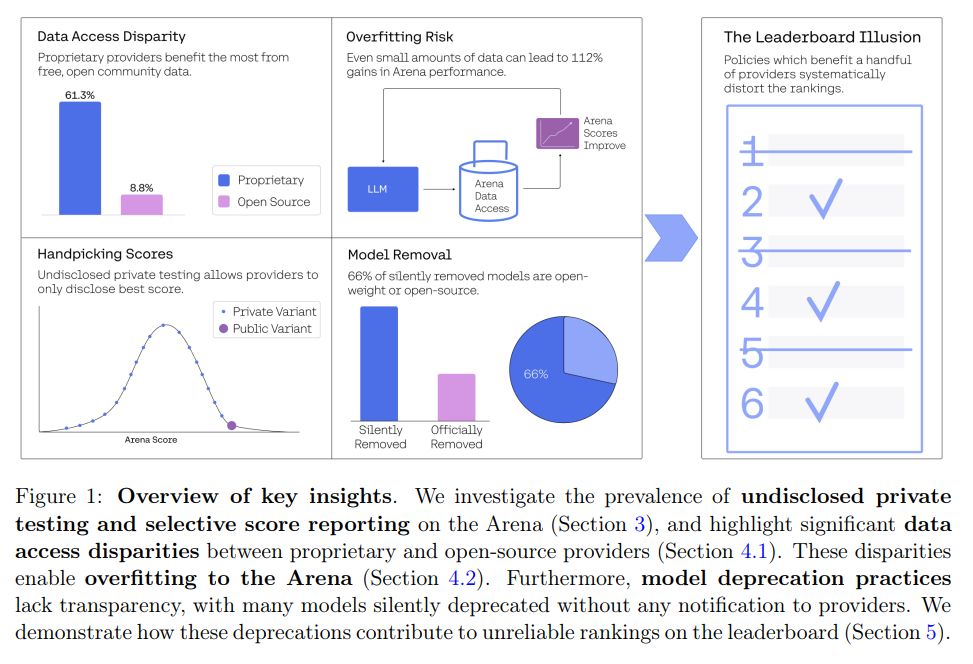

The @lmarena.bsky.social has become the go-to evaluation for AI progress.

Our release today demonstrates the difficulty in maintaining fair evaluations on the Arena, despite best intentions.

Paper: The Leaderboard Illusion - arxiv.org/abs/2504.20879

So how fair—and scientifically rigorous—is today’s most widely used evaluation benchmark?

We took a deep dive into Chatbot Arena to find out. 🧵

✨INCLUDE (spotlight) — models fail to grasp regional nuances across languages

💎To Code or Not to Code (poster) — code is key for generalizing beyond coding tasks

Multilingual LLMs are getting really good.

But the way we evaluate them? Not the best sometimes.

🌟 We show how decades of lessons from Machine Translation can help us fix it

arxiv.org/abs/2504.11829

🌍 It reflects experiences from my personal research journey: coming from MT into multilingual LLM research, I missed reliable evaluations and evaluation research…

🌍 18 languages (high-, mid-, low-)

📚 21k questions (55% require image understanding)

🧪 STEM, social science, reasoning, and practical skills

www2.statmt.org/wmt25/multil...

Are you excited about multilingual evaluation, human judgment, or meta-eval? Come help us explore what a rigorous eval really looks like while questioning the status quo in LLM evaluation.

I’m looking for an intern (EU timezone preferred). Interested? Ping me!

💎: cohere.com/research/pap...

A multilingual, multimodal model designed to understand across languages and modalities (text, images, etc.) to bridge the language gap and empower global users!
