🔬We brought the rigor from Machine Translation evaluation to multilingual LLM benchmarking and organized the WMT25 Multilingual Instruction Shared Task spanning 30 languages and 5 subtasks.

💭This paper has had an interesting journey, come find out and discuss with us! @swetaagrawal.bsky.social @kocmitom.bsky.social

Side note: being a parent in research does have its perks, poster transportation solved ✅

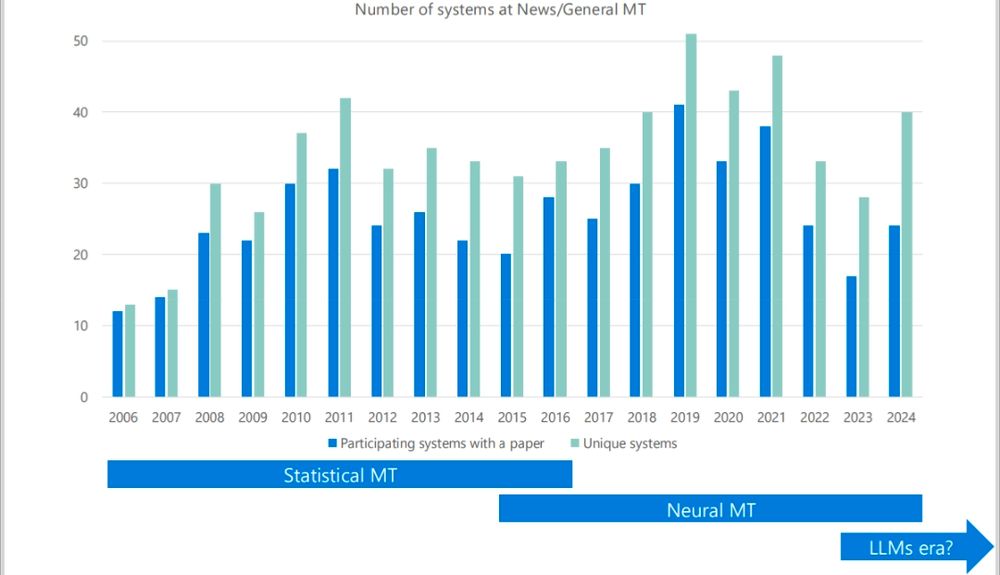

Yes, the systems are much stronger. But the other half of the story is that test sets haven’t kept up. It’s no longer enough to just take a random news article and expect systems to stumble.

Are today's #MachineTranslation systems flawless? When SOTA models all achieve near-perfect scores on standard benchmarks, we hit an evaluation ceiling. How can we tell their true capabilities and drive future progress?

What began in January as a scribble in my notebook “how challenging would it be...” turned into a fully-fledged translation model that outperforms both open and closed-source systems, including long-standing MT leaders.

But don't draw conclusions just yet - automatic metrics are biased in favor of techniques such as MBR or using a metric as a reward model. The official human ranking will be part of the General MT findings at WMT.

arxiv.org/abs/2508.14909

The deadline is next week on 3rd July.

www2.statmt.org/wmt25/

In our new paper, we experimentally illustrate common eval. issues and present how structured evaluation design, transparent reporting, and meta-evaluation can help us to build stronger models.

arxiv.org/abs/2504.11829

🌍It reflects experiences from my personal research journey: coming from MT into multilingual LLM research I missed reliable evaluations and evaluation research…

Are you excited about multilingual evaluation, human judgment, or meta-eval? Come help us explore what a rigorous eval really looks like while questioning the status quo in LLM evaluation.

I’m looking for an intern (EU timezone preferred), are you interested? Ping me!

www2.statmt.org/wmt25/multil...

Learn more:

cohere.com/blog/aya-vis...

I really hope it puts the final nail in the coffin of FLORES or WMT14. The field is evolving, and legacy test sets can't show your progress.

arxiv.org/abs/2502.124...

This isn’t just any repeat. We’ve kept what worked, removed what was outdated, and introduced many exciting new twists! Among the key changes are:

🚀 Participant numbers increased by over 50%!

🏗️ Decoder-only architectures are leading the way.

🔊 We've introduced a new speech domain with an audio modality.

🌐 Online systems are losing ground to LLMs.
