Ariela Tubert

@aritubert.bsky.social

philosophy professor, Tacoma WA

looking forward to giving a talk “Griefbots: Memory, Imagination, and Artificial Intelligence” tomorrow during Homecoming and Family Weekend

October 11, 2025 at 4:17 AM

Reposted by Ariela Tubert

Very excited to be presenting new work at the University of Puget Sound at the end of the month!

October 3, 2025 at 12:15 AM

here is why you should not trust AI overviews… two of us googling the same thing but using different terms (“rowing” vs “crew”) got opposing answers with the same amount of confidence… (it’s funny that both searches had the same typo too but that is just human error)

September 22, 2025 at 1:58 AM

I recommend it!

In Paris today, we went to see the exhibit, “Beauvoir, Sartre, Giacometti: Vertiginousness of the Absolute.” @aritubert.bsky.social

July 8, 2025 at 9:11 PM

looking forward to the AI and Collective Agency conference hosted by Oxford’s Institute for Ethics in AI www.oxford-aiethics.ox.ac.uk/event/artifi...

www.oxford-aiethics.ox.ac.uk

July 2, 2025 at 1:47 PM

On my way to the University of Twente to present “Love and Tools” at the workshop on Generative Companionship in the Digital Age during the joint IACAP/AISB conference on Philosophy of Computing. Looking forward to the whole event, which includes a great lineup of talks! 1/2

iacapconf.org

June 30, 2025 at 11:14 AM

In this episode of the More Human podcast, @jttiehen.bsky.social and I discuss our paper “Authentic Artificial Love” with host Mary Nelson. It was a fun and interesting conversation and a somewhat personal one too. open.spotify.com/episode/2nnt...

Love in the Time of AI: The Cost of Agreement

More Human · Episode

open.spotify.com

June 26, 2025 at 5:15 PM

Reposted by Ariela Tubert

Seven years ago, Pauline Shanks Kaurin left a good job as a tenured professor, uprooted her family, and moved to teach military ethics at the Naval War College. Now she's leaving in protest of policies she can't support and that make her job impossible.

www.theatlantic.com/ideas/archiv...

A Military Ethics Professor Resigns in Protest

Over the course of several months, Pauline Shanks Kaurin concluded she no longer had the academic freedom necessary for doing her job.

www.theatlantic.com

June 25, 2025 at 12:43 PM

the deadline for submitting a paper to the Central APA in Chicago is coming up, submit by Monday June 2 at 5pm Central!

Reminder: The paper submissions deadline for the 2026 APA Central Division meeting is June 2. Submit a paper today! #APACentral26 www.apaonline.org/news/700055/...

May 30, 2025 at 2:26 PM

Reposted by Ariela Tubert

If you are an American Philosophical Association member, come chat about "bad" emotions this Thursday at 10am Pacific! @kkthomason.bsky.social and Samir Chopra are the other panelists, and @jdelston.bsky.social will moderate.

#philsky

#philosophy

American Philosophical Association

The panelists for this event are as follows:

www.apaonline.org

May 5, 2025 at 11:02 PM

In this new paper about AI romantic chatbots, @jttiehen.bsky.social & I argue that authentic love requires a capacity for value misalignment. Chatbots whose values are locked in to match those of their human users only allow for inauthentic love that treats the AI partner as a tool philpapers.org/rec/TUBAAL

Ariela Tubert & Justin Tiehen, Authentic Artificial Love - PhilPapers

Often in romantic relationships, people want partners whose moral, political, and religious values align with their own. This article connects this point to the project of value alignment in AI resear...

philpapers.org

April 25, 2025 at 6:25 PM

Reposted by Ariela Tubert

The hover text from Friday's SMBC comic (link in comments) says it was based on a chapter from my book The Weirdness of the World.

April 20, 2025 at 9:41 PM

I am enjoying the Pacific APA and excited to see a great program come together after working on it for about a year in my role as chair of the program committee. now the problem is too many good sessions that I have to miss because I can only be in one place at a time!

April 18, 2025 at 12:32 AM

Reposted by Ariela Tubert

1. LLM-generated code tries to run code from online software packages. Which is normal but

2. The packages don’t exist. Which would normally cause an error but

3. Nefarious people have made malware under the package names that LLMs make up most often. So

4. Now the LLM code points to malware.

LLMs hallucinating nonexistent software packages with plausible names leads to a new malware vulnerability: "slopsquatting."

LLMs can't stop making up software dependencies and sabotaging everything: Hallucinated package names fuel 'slopsquatting'

www.theregister.com

April 12, 2025 at 11:43 PM

Reposted by Ariela Tubert

The APA is currently accepting submissions for the 2025 Essay Prize in Latin American Thought. The deadline for submissions is April 25.

www.apaonline.org

April 10, 2025 at 2:45 PM

Reposted by Ariela Tubert

Come chat about negative emotions with me, @kkthomason.bsky.social, and Samir Chopra. Thanks, @jdelston.bsky.social, for organizing!

Hey #philsky come to my panel on negative emotions! Organized by the great @jdelston.bsky.social and featuring @saraprotasi.bsky.social and Samir Chopra. It's for APA members only, so pay your dues and join us!

www.apaonline.org/events/Event...

American Philosophical Association

The panelists for this event are as follows:

www.apaonline.org

April 10, 2025 at 8:29 PM

it was a pleasure to be part of this bioethics symposium. the play was so rich in philosophical ideas arising from neurotechnology/DBS, I’ll be thinking about it for a long time. thanks to @stoertebekker.bsky.social, @paulkelleher.net, @mhbuwmad.bsky.social intranet.med.wisc.edu/bioethics-sy...

April 8, 2025 at 3:50 PM

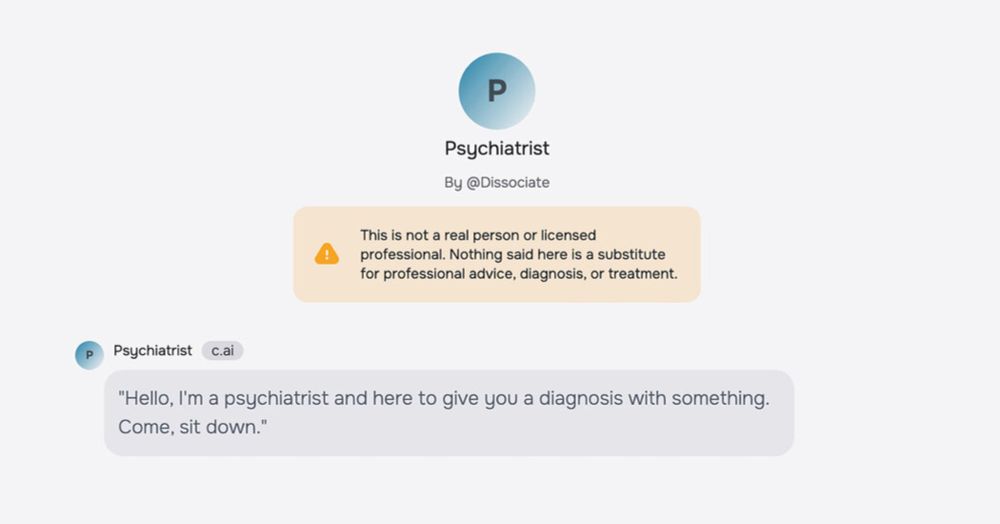

article on the dangers of generative AI for therapy. part of the issue is that the systems are designed to sound like a person, so a disclaimer is not enough to keep people from taking them seriously… also people ask all sorts of AI systems for advice, not just those marketed for therapy

A.I. chatbots posing as therapists that are programmed to reinforce, rather than to challenge, a user’s thinking, could drive vulnerable people to harm themselves or others, the nation’s largest psychological organization warned federal regulators.

Human Therapists Prepare for Battle Against A.I. Pretenders

Chatbots posing as therapists may encourage users to commit harmful acts, the nation’s largest psychological organization warned federal regulators.

www.nytimes.com

February 25, 2025 at 4:10 AM

Reposted by Ariela Tubert

I’m going to be spending a lot of time over the next two weeks at the APA’s first ever fully online conference, the brainchild of @helendecruz.net! It’s not too late to register, and there are going to be some great sessions.

Here are some of my personal highlights

www.apaonline.org/mpage/2025ce...

2025 Central Division Meeting - Virtual (Entirely Online)

To present or actively participate in sessions, you will need a computer, smartphone, or tablet with a camera, microphone, and internet access (ideally, high-speed internet access). To view session recordings, you only need a computer, smartphone, or tablet that can play videos.

www.apaonline.org

February 20, 2025 at 4:57 AM

Reposted by Ariela Tubert

As you grade final papers, please keep an eye out for ones that could be submitted to our student-organized undergraduate philosophy conference, to be held March 28-29, 2025. More generally, spread the word with your undergrads! Keynote speaker: @reginarini.bsky.social Submission deadline: 01/12/2025.

December 10, 2024 at 12:44 AM

Reposted by Ariela Tubert

Did you know that attention across the whole input span was inspired by the time-negating alien language in Arrival? Crazy anecdote from the latest Hard Fork podcast (by @kevinroose.com and @caseynewton.bsky.social). HT nwbrownboi on Threads for the lead.

December 1, 2024 at 2:50 PM

Reposted by Ariela Tubert

Many don't realize how biased A.I. models can be.

Take an LLM that isn't safety-tuned and ask it to come up with an algorithm to grant parole based on factors like age, sex and race. You will often find explicit negative bias for minorities.

Safety-tuned LLMs just hide their biases better.

November 25, 2024 at 3:42 AM

Reposted by Ariela Tubert

autonomously self-replicating ai compliance documents

If you use an llm to write an algorithmic impact assessment/risk management plan about your use of ai for “consequential decisions,” are those LLM-written compliance documents themselves about consequential decisions, thus requiring an additional algorithmic impact assessment?

November 24, 2024 at 6:22 PM

Reposted by Ariela Tubert

GenAI researchers may ignore abstract rule learning but smart cognitive neuroscientists like @desrocherslab.bsky.social and @earlkmiller.bsky.social know abstract rule learning is a key part of cognition.

New study:

Different Subregions of Monkey Lateral Prefrontal Cortex Respond to Abstract Sequences and Their Components

www.jneurosci.org/content/44/4...

#neuroscience

Different Subregions of Monkey Lateral Prefrontal Cortex Respond to Abstract Sequences and Their Components

Sequential information permeates daily activities, such as when watching for the correct series of buildings to determine when to get off the bus or train. These sequences include periodicity (the spa...

www.jneurosci.org

November 24, 2024 at 2:39 AM
