I'm using Optuna, and seriously, what a great API—it's super easy to use and makes the process so much cleaner. Getting closer to finding that optimal configuration!

#buildInPublic #chess

Not just knowledge, but practical expertise is democratized.

"How to train your LLM" tutorials with millions of views.

Content available:

- End-to-end training
- Hyperparameter optimization
- Infrastructure setup
- Cost optimization
- Troubleshooting

(1) The model used;

(2) The temperature OR reasoning effort hyperparameter setting;

(3) The demographic info provided;

(4) The way items were presented to the model.
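
As a rough sketch, four factors like these can be enumerated as a grid of experimental conditions. This assumes the factors were fully crossed, which the post does not state, and every level below is a hypothetical placeholder rather than a value from the study.

```python
from itertools import product

# Hypothetical placeholder levels for each factor (not taken from the post).
models = ["model-a", "model-b"]
sampling_settings = [
    ("temperature", 0.0),
    ("temperature", 1.0),
    ("reasoning_effort", "low"),
]
demographic_info = ["none", "age and gender"]
presentations = ["one item per prompt", "all items in one prompt"]

# Full factorial design: one condition per combination of levels.
conditions = [
    {
        "model": model,
        "setting": setting,
        "demographic_info": demo,
        "presentation": presentation,
    }
    for model, setting, demo, presentation in product(
        models, sampling_settings, demographic_info, presentations
    )
]

print(len(conditions))
```

Each model gets either a temperature or a reasoning effort setting, never both, which is why the two are folded into a single `setting` factor here.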

Here is the recording of the presentation:

www.youtube.com/watch?v=-gYn...

http://arxiv.org/abs/2307.10536

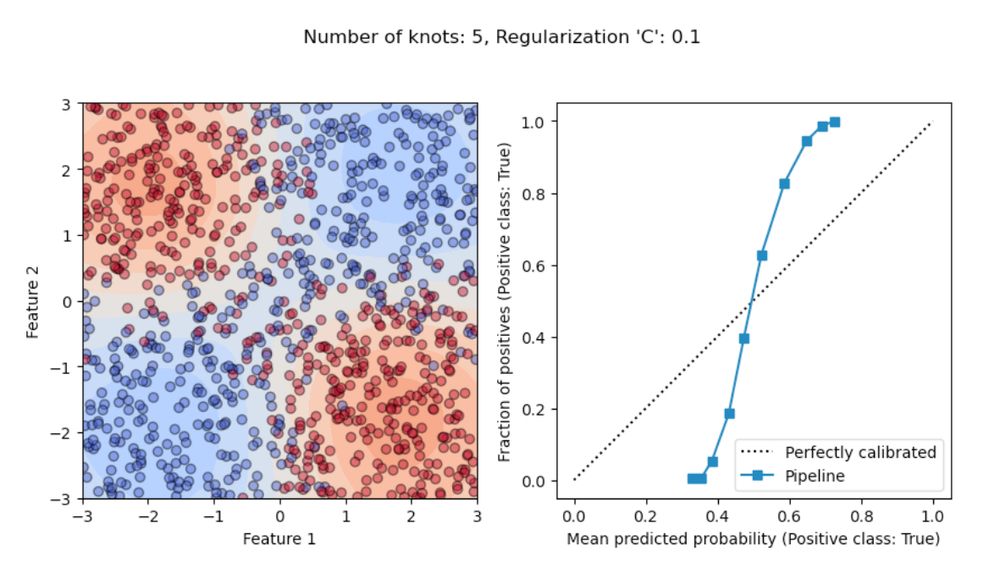

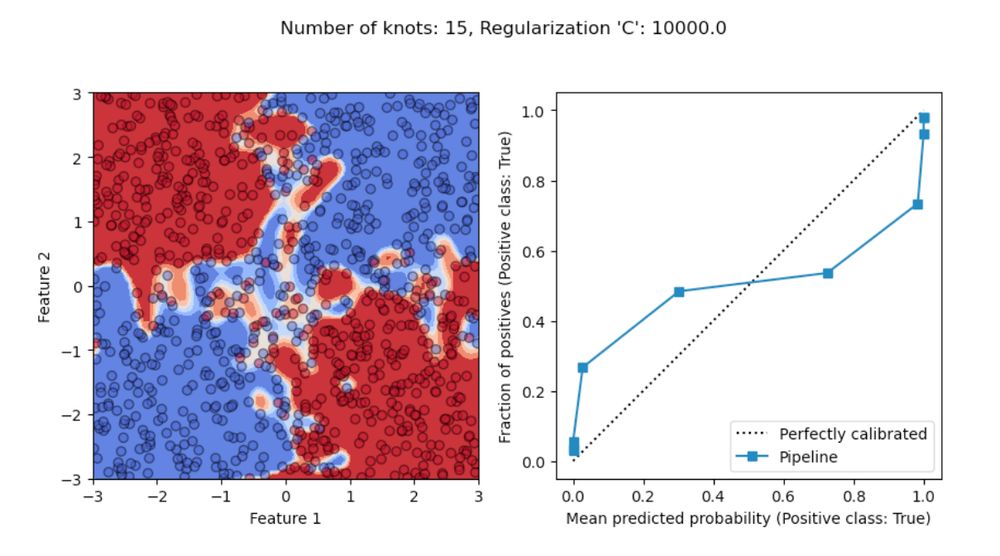

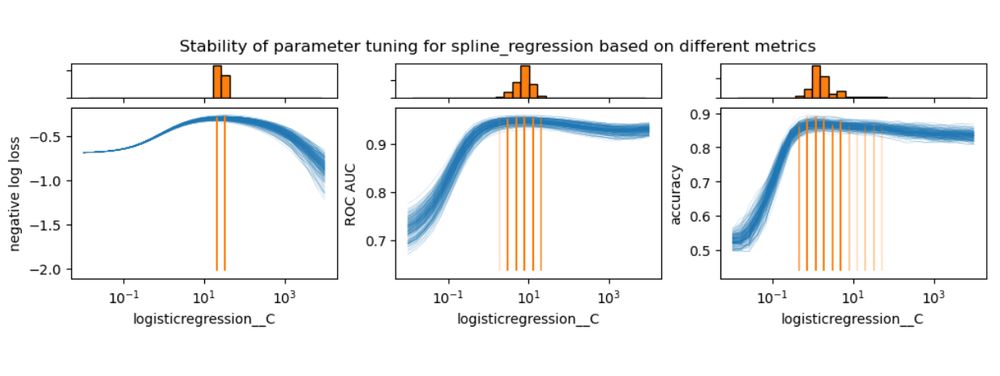

Some cool visualizations of the hyperparameter landscape of some simple neural networks: quite chaotic and interesting.

https://thierrymoudiki.github.io/blog/2024/06/09/python/quasirandomizednn/conformal-bayesopt

#Techtonique #DataScience #Python #rstats #MachineLearning

Data Augmentation and Hyperparameter Tuning for Low-Resource MFA

https://arxiv.org/abs/2504.07024
