And also to scientists writing press releases: calling something "AI" when it was actually your student spending 12 months fitting and validating a model is disingenuous

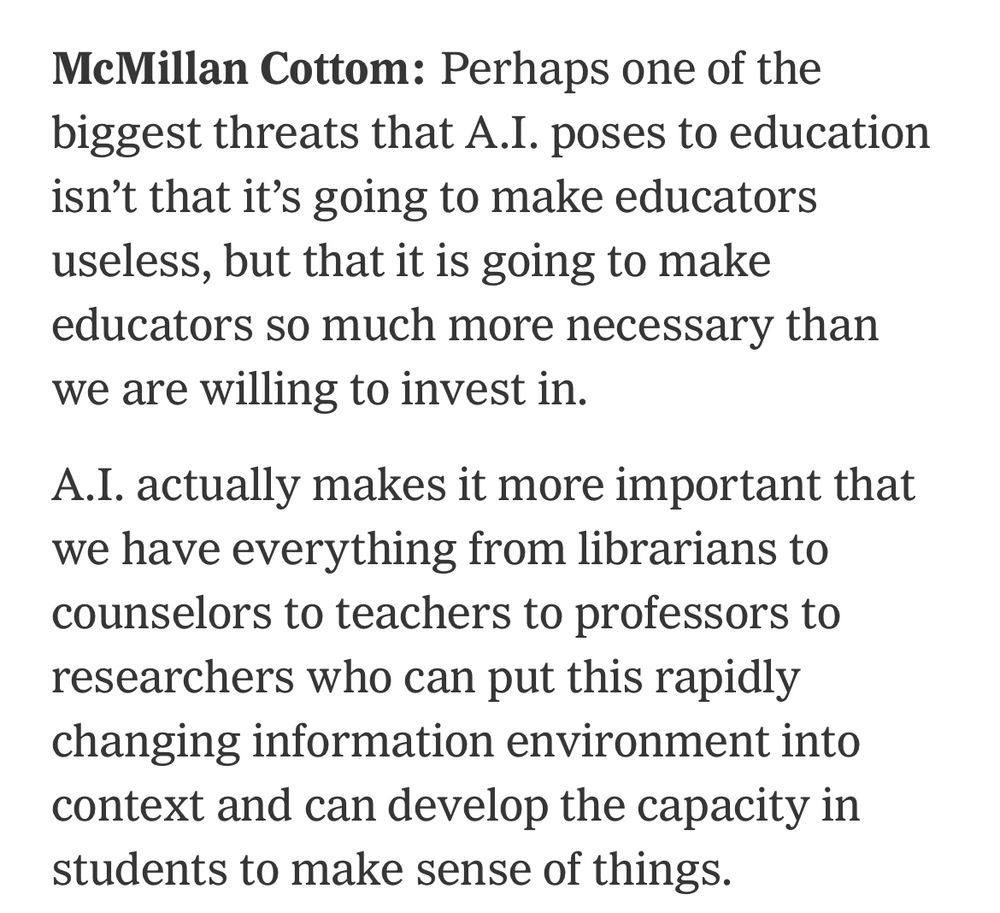

But a deeper problem is that an essential aspect of teaching is helping someone organize their thinking in new ways. LLMs — systems which cannot think or appreciate thinking — are incapable of doing this in a meaningful way.

Shared by @gizmodo.com: buff.ly/yAAHtHq

Even if an LLM could be trusted to give you correct information 100% of the time, it would be an inferior method of learning it.

But something about US history, Black women, Native women, and Nazis

www.npr.org/2020/12/22/9...

Printing this to hand to my 13yo:

Sigh.

Insisting we should only teach useful subjects is like saying science should only do research that gives positive results

If you honestly believe that's how anything works, *your* education was the wasted one

Bitch one of us actually knows how many fingers humans have and evidently it’s not ChatGPT

It's 34% over the speed limit. It's the difference between 6/10 pedestrians surviving a collision vs 1-2/10.

Car brain is a hell of a thing.

...except all the photos of actual tumors had a ruler next to them for scale. And the algorithm just got really good at spotting rulers in a photograph.

Scientists speaking of abandoning humanities have not learned the core lessons of our history - and by our history I even mean THE HISTORY OF SCIENCE. Science is never separated from society.

Scientists who are bending to jingoism to try and protect funding aren't asking the right questions.

“scientists… are being punished for the sins of [humanities scholars] because we all live under one roof. I cannot see a compelling reason for our continued cohabitation.”

A reminder: AI generates slop.