Tom Costello

@tomcostello.bsky.social

research psychologist. beliefs, AI, computational social science. prof at american university.

I’m going to be in Montreal for a few days starting tomorrow for COLM — anyone also at the conference / interested in meeting up, let me know!

October 7, 2025 at 9:14 PM

Reposted by Tom Costello

This is a valid point, I think. The question is always what type of alternative information gathering processes AI chatbots replace. In the case of medical "self diagnosis", there is some reason to believe that common alternative mechanisms aren't superior.

Is there a strong case for AI helping, rather than harming, the accuracy of people's beliefs about contentious topics? In this

@nature.com Nature Medicine piece (focusing on vaccination), I argue the answer is YES. And it boils down to how LLMs differ from other sources of information.

July 28, 2025 at 11:27 AM

Targeted ads have gone too far

July 24, 2025 at 4:10 PM

Reposted by Tom Costello

Thomas Costello argues that as patients move from WebMD to AI, we might be slightly optimistic. Unlike former tools, LLMs can synthesize vast, shared knowledge, potentially helping users converge on more accurate beliefs.

The major caveat is: as long as the LLMs are not trained on bad data.

Large language models as disrupters of misinformation - Nature Medicine

As patients move from WebMD to ChatGPT, Thomas Costello makes the case for cautious optimism.

www.nature.com

July 16, 2025 at 3:31 PM

Is there a strong case for AI helping, rather than harming, the accuracy of people's beliefs about contentious topics? In this

@nature.com Nature Medicine piece (focusing on vaccination), I argue the answer is YES. And it boils down to how LLMs differ from other sources of information.

July 17, 2025 at 8:38 PM

Reposted by Tom Costello

This is an interesting take on LLMs and the RCT in vaccine hesitancy is fascinating.

www.nature.com/articles/s41...

Large language models as disrupters of misinformation - Nature Medicine

As patients move from WebMD to ChatGPT, Thomas Costello makes the case for cautious optimism.

www.nature.com

July 16, 2025 at 8:29 PM

Reposted by Tom Costello

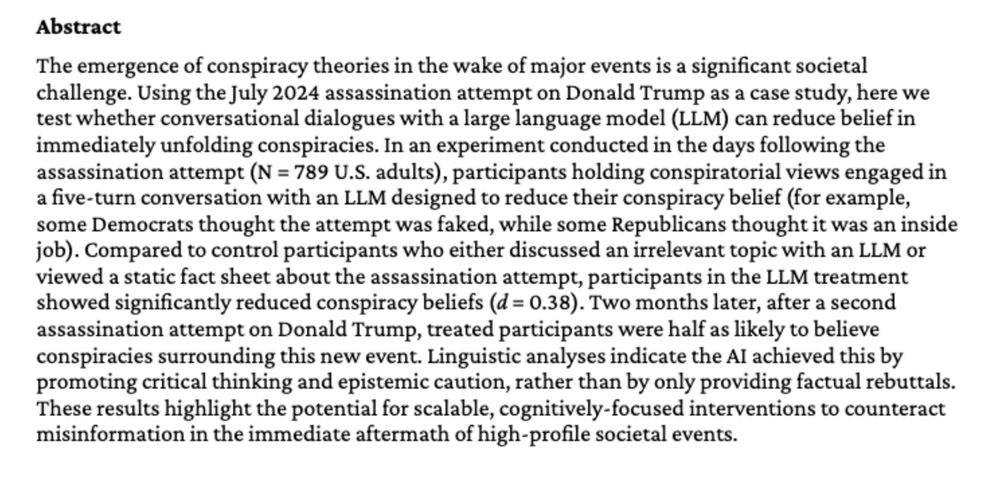

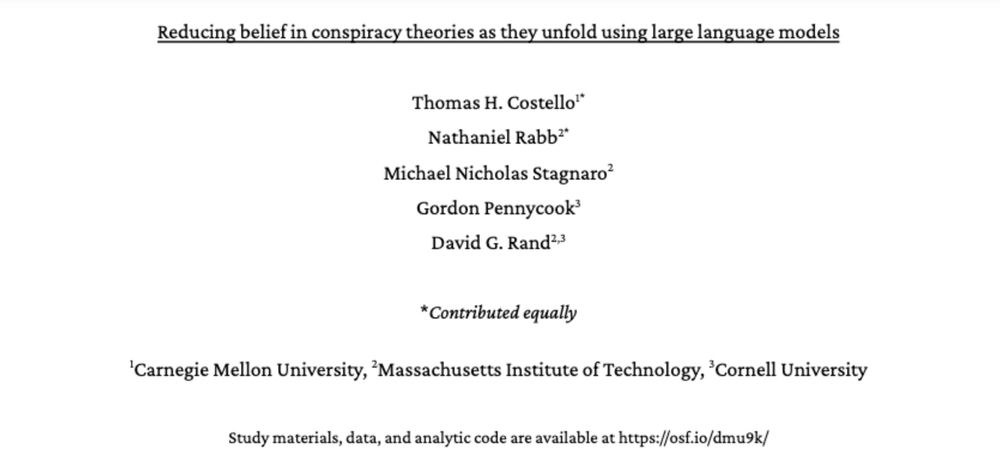

Conspiracies emerge in the wake of high-profile events, but you can’t debunk them with evidence because little yet exists. Does this mean LLMs can’t debunk conspiracies during ongoing events? No!

We show they can in a new working paper.

PDF: osf.io/preprints/ps...

July 9, 2025 at 4:34 PM

Reposted by Tom Costello

I'm very excited about this new WP showing that LLMs effectively countered conspiracies in the immediate aftermath of the 1st Trump assassination attempt, and that treatment also reduced conspiratorial thinking about the subsequent 2nd assassination attempt

Conspiracies emerge in the wake of high-profile events, but you can’t debunk them with evidence because little yet exists. Does this mean LLMs can’t debunk conspiracies during ongoing events? No!

We show they can in a new working paper.

PDF: osf.io/preprints/ps...

July 9, 2025 at 6:01 PM

Reposted by Tom Costello

Here's a bit of spice. Brain research clearly needs to tackle more complexity (than, say, Step 1: simple linear causal chains). But that leaves an ~infinite set of alternatives. Here, @pessoabrain.bsky.social advocates not for just a step 2, but a 3. /1

arxiv.org/abs/2411.03621

July 2, 2025 at 3:39 PM

Reposted by Tom Costello

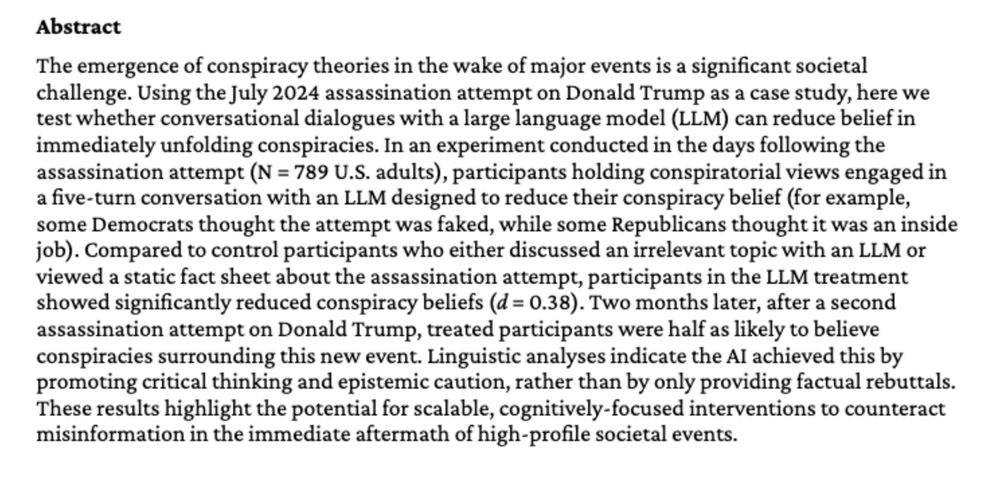

My paper with @stellalourenco.bsky.social is now out in Science Advances!

We found that children have robust object recognition abilities that surpass many ANNs. Models only outperformed kids when their training far exceeded what a child could experience in their lifetime

doi.org/10.1126/scia...

Fast and robust visual object recognition in young children

The visual recognition abilities of preschool children rival those of state-of-the-art artificial intelligence models.

doi.org

July 2, 2025 at 7:38 PM

This is a stellar paper -- highly recommend if you want an incisive birds-eye view of AI + political science

www.annualreviews.org/content/jour...

AI as Governance | Annual Reviews

Political scientists have had remarkably little to say about artificial intelligence (AI), perhaps because they are dissuaded by its technical complexity and by current debates about whether AI might ...

www.annualreviews.org

June 25, 2025 at 2:20 AM

It’s my birthday, so today seems like as good a time as any to share that, this summer, I’ll be joining Carnegie Mellon University as an assistant professor in the Department of Social and Decision Sciences (w/ courtesy in HCI institute).

May 30, 2025 at 4:27 PM

Reposted by Tom Costello

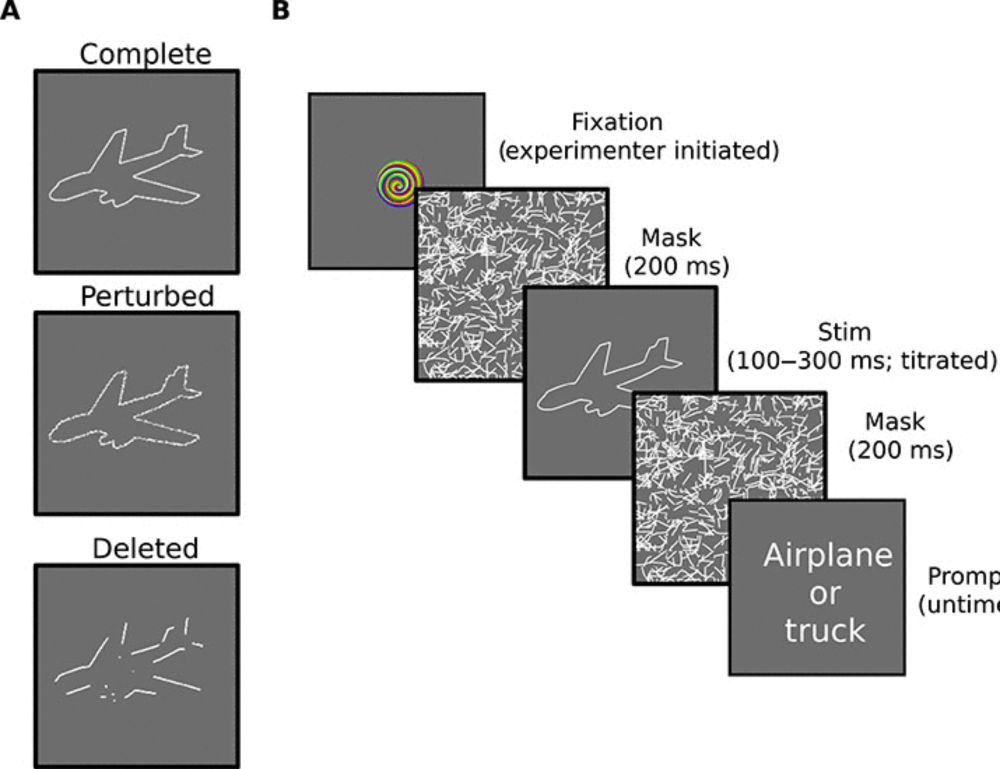

New paper in PSPB! journals.sagepub.com/doi/10.1177/...

Well, actually, not "new". We first put this paper online way back Dec 2022... in any case, we think it's really cool!

We find that conspiracy believers tend to be overconfident & really don't seem to realize that most disagree with them

May 29, 2025 at 5:18 PM

In trying to get purple Connections first, I ended up with a sequence of guesses that was unique across all other players.

I think it's impossible to replicate this while earnestly trying to get the puzzle right?

May 27, 2025 at 8:13 PM

Reposted by Tom Costello

This + other work on LLM persuasiveness increasingly suggests these models are at human quality - a question that occurs is what happens when everyone is being microtargeted by LLMs from both sides of politics

🚨New WP🚨

Can AI do "deep canvassing"—the time-intensive, empathic persuasive dialogues that durably reduce prejudice when done by humans?

Spoiler: Yes! We find durable reduction in prejudice toward undocumented immigrants

LLMs make deep canvassing possible at massive scale

osf.io/preprints/os...

May 21, 2025 at 8:38 PM

I’ll be at APS @psychscience.bsky.social this Friday! But also, I live in DC, so if you want to get together tomorrow or Friday, reach out!

May 21, 2025 at 7:05 PM

Reposted by Tom Costello

🚨New WP🚨

Can AI do "deep canvassing"—the time-intensive, empathic persuasive dialogues that durably reduce prejudice when done by humans?

Spoiler: Yes! We find durable reduction in prejudice toward undocumented immigrants

LLMs make deep canvassing possible at massive scale

osf.io/preprints/os...

May 21, 2025 at 4:55 PM

Reposted by Tom Costello

🚨🚨 NEW PRE-PRINT 🚨🚨

Prominent theories in political psychology argue that threat causes increases in conservatism. Early experimental work supported this idea, but many of these studies were (severely) underpowered, and examined only a few threats and ideological DVs. 1/n osf.io/preprints/ps...

OSF

osf.io

May 20, 2025 at 10:47 PM

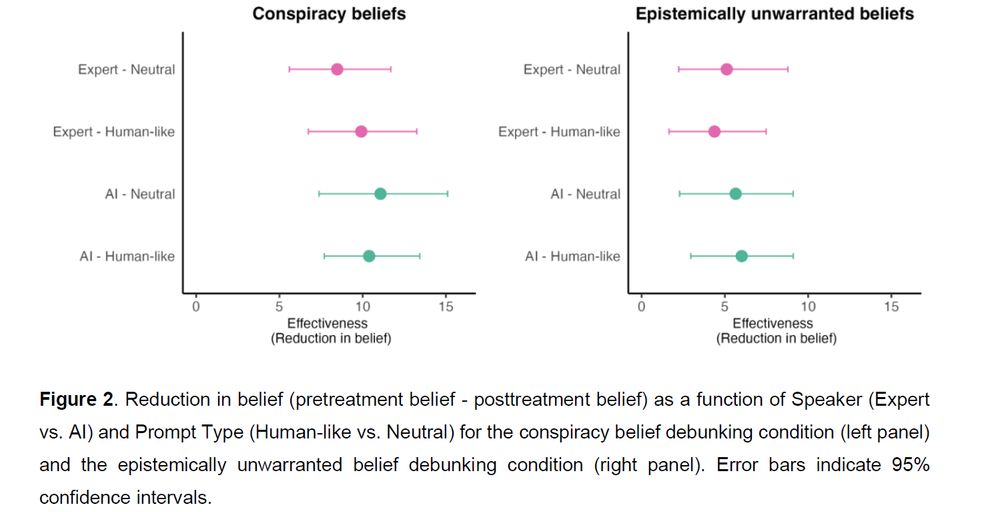

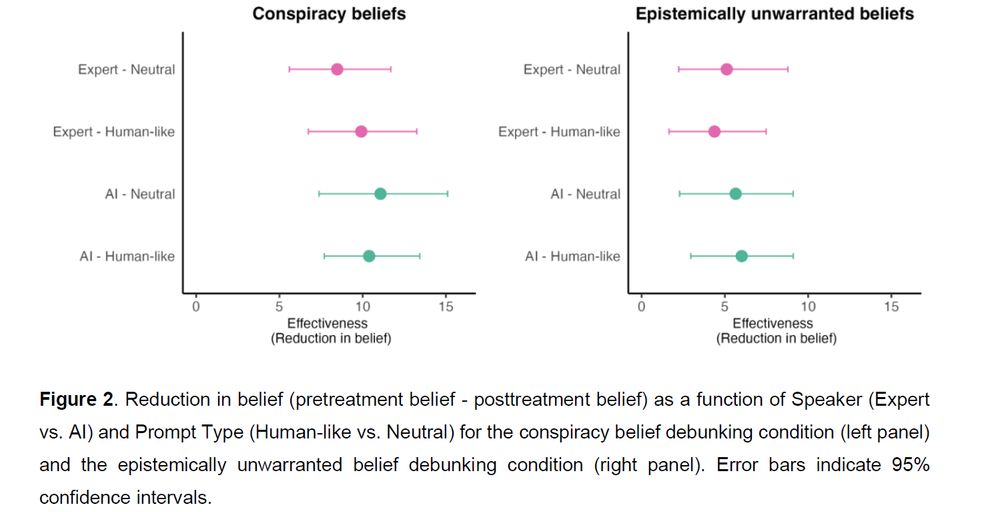

People do not believe me when I tell them this finding. Here is our full paper reporting it.

AI-delivered information is persuasive because of the information — when we trick people into thinking they’re talking to a human, the persuasive effect is identical!

The primary result is straightforward: The effect was ~identical across conditions.

Believers updated their beliefs according to counterevidence. Doesn't matter if they thought they were talking to a human or an AI.

Making the AI seem more "human-like" also had no effect. osf.io/preprints/ps...

May 15, 2025 at 9:19 PM

Been asked this question many times, and now we have a clear data-driven answer.

Q: Are the persuasive effects of AI dialogues conditional on the source being AI? That is, if a human was saying the very same things, would the persuasiveness diminish?

A: No, it doesn't seem to matter whatsoever.

Recent research shows that AI can durably reduce belief in conspiracies. But does this work b/c the AI is good at producing evidence, or b/c ppl really trust AI?

In a new working paper, we show that the effect persists even if the person thinks they're talking to a human: osf.io/preprints/ps...

🧵

May 15, 2025 at 4:03 PM

Reposted by Tom Costello

Recent research shows that AI can durably reduce belief in conspiracies. But does this work b/c the AI is good at producing evidence, or b/c ppl really trust AI?

In a new working paper, we show that the effect persists even if the person thinks they're talking to a human: osf.io/preprints/ps...

🧵

May 15, 2025 at 3:54 PM

Had a chat about “debunkbot” and the research supporting it on CBS Mornings yesterday!

www.cbsnews.com/video/ai-cha...

May 8, 2025 at 5:03 PM

Reposted by Tom Costello

There are a lot of critiques of LLMs that I agree with but "they suck and aren't useful" doesn't really hold water.

I understand people not using them because of social, economic, and environmental concerns. And I also understand people using them because they can be very useful.

Thoughts?

April 24, 2025 at 2:37 AM
